Dieter Fritsch

Photometric Multi-View Mesh Refinement for High-Resolution Satellite Images

May 12, 2020

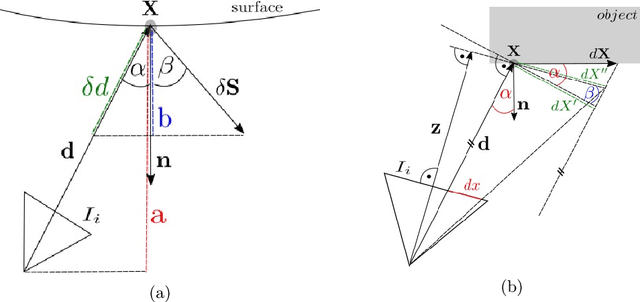

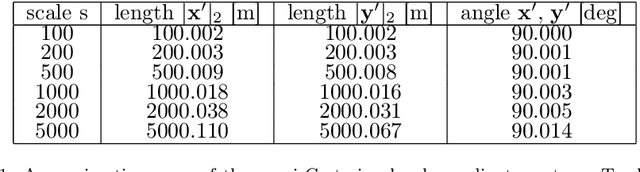

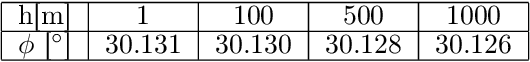

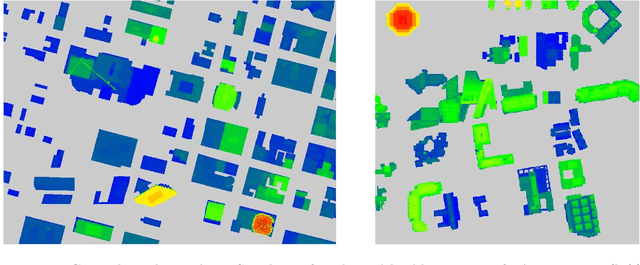

Abstract: Modern high-resolution satellite sensors collect optical imagery with ground sampling distances (GSDs) of 30-50 cm, which has sparked renewed interest in photogrammetric 3D surface reconstruction from satellite data. State-of-the-art reconstruction methods typically generate 2.5D elevation data. Here, we present an approach to recover full 3D surface meshes from multi-view satellite imagery. The proposed method takes a coarse initial mesh as input and refines it by iteratively updating all vertex positions to maximize the photo-consistency between images. Photo-consistency is measured in image space by transferring texture from one image to another via the surface. We derive the equations to propagate changes in texture similarity through the rational function model (RFM), often also referred to as the rational polynomial coefficient (RPC) model. Furthermore, we devise a hierarchical scheme to optimize the surface with gradient descent. In experiments with two different datasets, we show that the refinement improves the initial digital elevation models (DEMs) generated with conventional dense image matching. Moreover, we demonstrate that our method is able to reconstruct true 3D geometry, such as facade structures, if off-nadir views are available.
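To make the role of the RFM concrete, the following is a minimal sketch of how a ground point is projected into a satellite image with an RPC-style model, and how a change in the ground point changes its image position. It is not the paper's implementation. The function names, the dictionary keys, and `toy_rpc` are hypothetical; the monomial ordering follows the common RPC00B convention and is an assumption here; and the Jacobian uses central finite differences as a stand-in for the analytic derivatives that the abstract says the authors derive.

```python
import numpy as np

def rpc_monomials(P, L, H):
    """20 cubic monomials in normalized latitude P, longitude L, height H.
    Ordering assumed to follow the common RPC00B convention."""
    return np.array([
        1.0, L, P, H, L * P, L * H, P * H, L * L, P * P, H * H,
        P * L * H, L**3, L * P * P, L * H * H, L * L * P,
        P**3, P * H * H, L * L * H, P * P * H, H**3,
    ])

def rfm_project(lat, lon, h, rpc):
    """Project a ground point (lat, lon, height) to image (row, col)."""
    # Normalize ground coordinates with the model's offsets and scales.
    P = (lat - rpc["lat_off"]) / rpc["lat_scale"]
    L = (lon - rpc["lon_off"]) / rpc["lon_scale"]
    H = (h - rpc["h_off"]) / rpc["h_scale"]
    m = rpc_monomials(P, L, H)
    # Each image coordinate is a ratio of two cubic polynomials.
    row_n = (rpc["line_num"] @ m) / (rpc["line_den"] @ m)
    col_n = (rpc["samp_num"] @ m) / (rpc["samp_den"] @ m)
    # De-normalize to pixel coordinates.
    row = row_n * rpc["line_scale"] + rpc["line_off"]
    col = col_n * rpc["samp_scale"] + rpc["samp_off"]
    return row, col

def rfm_jacobian_fd(lat, lon, h, rpc, eps=(1e-6, 1e-6, 1e-2)):
    """2x3 Jacobian d(row, col) / d(lat, lon, h) by central differences.
    A numerical stand-in for analytic derivatives."""
    x0 = np.array([lat, lon, h], dtype=float)
    J = np.zeros((2, 3))
    for k in range(3):
        d = np.zeros(3)
        d[k] = eps[k]
        plus = np.array(rfm_project(*(x0 + d), rpc))
        minus = np.array(rfm_project(*(x0 - d), rpc))
        J[:, k] = (plus - minus) / (2.0 * eps[k])
    return J

def unit(i):
    """Coefficient vector that selects the i-th monomial."""
    v = np.zeros(20)
    v[i] = 1.0
    return v

# Synthetic model for illustration only: row depends linearly on latitude,
# column linearly on longitude. Real RPCs have 4 x 20 non-trivial coefficients.
toy_rpc = {
    "lat_off": 48.0, "lat_scale": 0.1,
    "lon_off": 11.0, "lon_scale": 0.1,
    "h_off": 500.0, "h_scale": 500.0,
    "line_off": 5000.0, "line_scale": 5000.0,
    "samp_off": 5000.0, "samp_scale": 5000.0,
    "line_num": unit(2), "line_den": unit(0),
    "samp_num": unit(1), "samp_den": unit(0),
}

if __name__ == "__main__":
    print(rfm_project(48.05, 11.0, 500.0, toy_rpc))
    print(rfm_jacobian_fd(48.0, 11.0, 500.0, toy_rpc))
```

In a mesh refinement of the kind described above, a Jacobian like this would be one link in the chain rule that maps a change in image-space texture similarity back to a displacement of a mesh vertex. How the gradient is assembled over all vertices and image pairs is not specified in the abstract and is not attempted here.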
