Deeksha Dixit

Evaluation of Cross-View Matching to Improve Ground Vehicle Localization with Aerial Perception

Mar 26, 2020

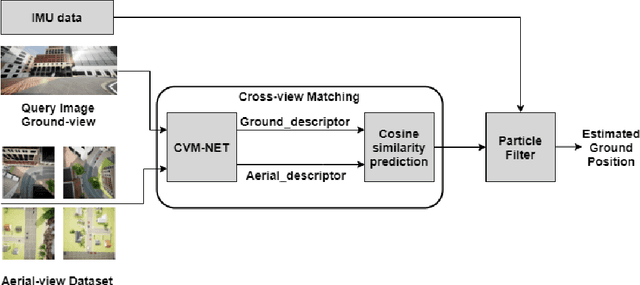

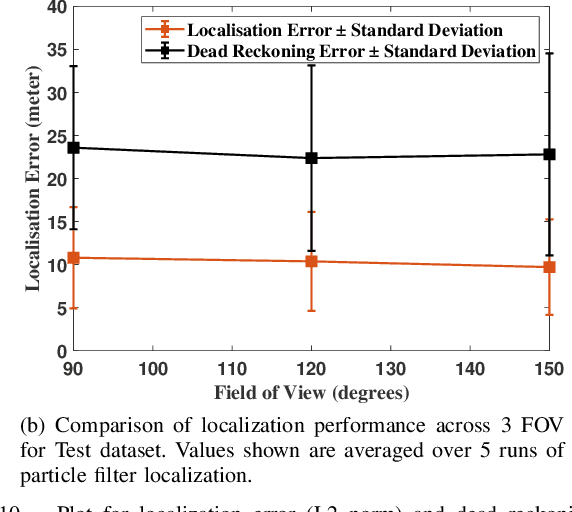

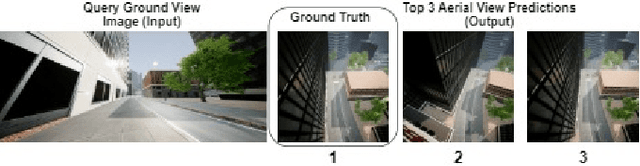

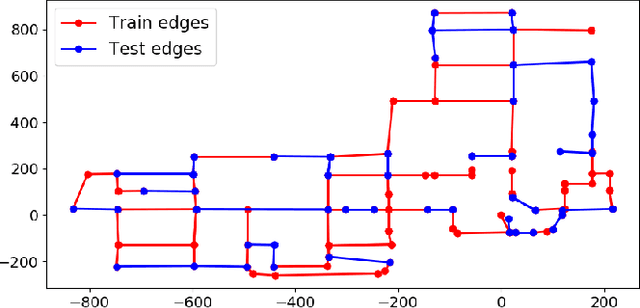

Abstract: Cross-view matching refers to the problem of finding the closest match for a given query ground-view image in a database of aerial images. If the aerial images are geotagged, then the closest matching aerial image can be used to localize the query ground-view image. Recently, due to the success of deep learning methods, a number of cross-view matching techniques have been proposed. These techniques perform well for the matching of isolated query images. In this paper, we evaluate cross-view matching for the task of localizing a ground vehicle over a longer trajectory. We use the cross-view matching module as a sensor measurement fused with a particle filter. We evaluate the performance of this method on a city-wide dataset collected in photorealistic simulation, varying five parameters: the height of the aerial images, the pitch of the aerial camera mount, the field-of-view of the ground camera, the measurement model, and the resampling strategy for the particles in the particle filter.
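As a rough illustration of how a cross-view match could act as a measurement in a particle filter, the sketch below reweights 2D position particles by their distance to the location of the best-matching geotagged aerial image and then resamples them. It is a minimal sketch under stated assumptions, not the paper's implementation: the Gaussian likelihood, systematic resampling, and all names (`measurement_update`, `systematic_resample`, `matched_xy`, `sigma`) are hypothetical choices made for illustration only.

```python
import numpy as np

def measurement_update(particles, weights, matched_xy, sigma):
    """Reweight particles with a Gaussian likelihood (an assumed measurement model)
    centred on the location of the best-matching aerial image."""
    d2 = np.sum((particles - matched_xy) ** 2, axis=1)
    likelihood = np.exp(-0.5 * d2 / sigma ** 2)
    new_weights = weights * likelihood + 1e-300  # guard against an all-zero weight vector
    return new_weights / new_weights.sum()

def systematic_resample(particles, weights, rng):
    """Systematic resampling (one possible resampling strategy)."""
    n = len(weights)
    positions = (rng.random() + np.arange(n)) / n  # evenly spaced points in [0, 1)
    cumulative = np.cumsum(weights)
    cumulative[-1] = 1.0  # protect against floating-point round-off
    idx = np.searchsorted(cumulative, positions)
    return particles[idx].copy(), np.full(n, 1.0 / n)

def estimate(particles, weights):
    """Weighted mean of the particle positions."""
    return np.average(particles, axis=0, weights=weights)
```

A typical step would call `measurement_update` with the matched image location, resample when the weights become too uneven, and read off the position with `estimate`. A motion update between measurements is omitted here.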

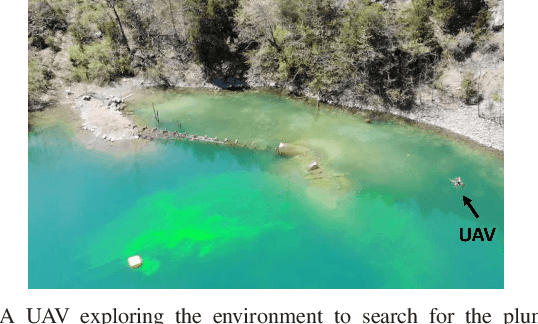

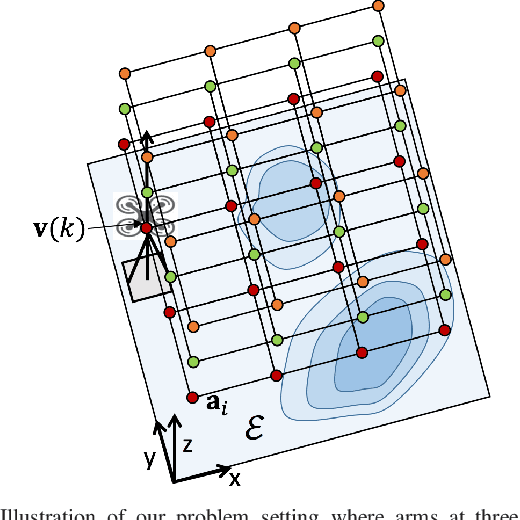

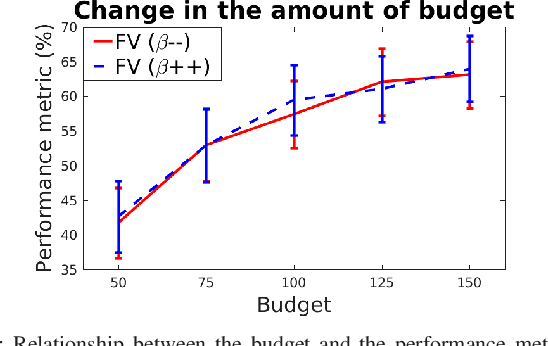

Environmental Hotspot Identification in Limited Time with a UAV Equipped with a Downward-Facing Camera

Sep 18, 2019

Abstract: We are motivated by environmental monitoring tasks where finding the global maximum (i.e., hotspot) of a spatially varying field is crucial. We investigate the problem of identifying the hotspot for fields that can be sensed using an Unmanned Aerial Vehicle (UAV) equipped with a downward-facing camera. The UAV has a limited time budget, which it must use for learning the unknown field and identifying the hotspot. Our first contribution is to show how this problem can be formulated as a novel variant of the Gaussian Process (GP) multi-armed bandit problem. The novelty is two-fold: (i) unlike standard multi-armed bandit settings, the arms ; and (ii) unlike standard GP regression, the measurements in our problem are images (i.e., vector measurements) whose quality depends on the altitude at which the UAV flies. We present a strategy for finding the sequence of UAV sensing locations and empirically compare it with a number of baselines. We also present experimental results using images gathered onboard a UAV.
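For readers unfamiliar with the GP bandit setting, the sketch below shows a generic upper-confidence-bound selection rule over candidate sensing locations. It is not the strategy presented in the paper: it assumes scalar measurements, an RBF kernel with unit prior variance, and ignores altitude-dependent image quality and the time budget. All function names and parameters (`rbf_kernel`, `gp_posterior`, `select_next_location`, `beta`, `noise_var`, `length_scale`) are hypothetical.

```python
import numpy as np

def rbf_kernel(a, b, length_scale=1.0):
    """Squared-exponential kernel with unit prior variance (an assumed choice)."""
    d2 = np.sum((a[:, None, :] - b[None, :, :]) ** 2, axis=-1)
    return np.exp(-0.5 * d2 / length_scale ** 2)

def gp_posterior(x_train, y_train, x_query, noise_var=1e-2, length_scale=1.0):
    """Posterior mean and variance of a zero-mean GP at the query locations."""
    k_tt = rbf_kernel(x_train, x_train, length_scale) + noise_var * np.eye(len(x_train))
    k_tq = rbf_kernel(x_train, x_query, length_scale)
    chol = np.linalg.cholesky(k_tt)
    alpha = np.linalg.solve(chol.T, np.linalg.solve(chol, y_train))
    mean = k_tq.T @ alpha
    v = np.linalg.solve(chol, k_tq)
    var = 1.0 - np.sum(v ** 2, axis=0)  # prior variance is 1 for this kernel
    return mean, np.maximum(var, 0.0)

def select_next_location(x_train, y_train, candidates, beta=2.0, **gp_kwargs):
    """Return the index of the candidate with the highest upper confidence bound."""
    mean, var = gp_posterior(x_train, y_train, candidates, **gp_kwargs)
    return int(np.argmax(mean + beta * np.sqrt(var)))
```

A small `beta` favours locations whose predicted field value is already high, while a large `beta` favours locations where the model is still uncertain.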
