Debvrat Varshney

AI-ready Snow Radar Echogram Dataset (SRED) for climate change monitoring

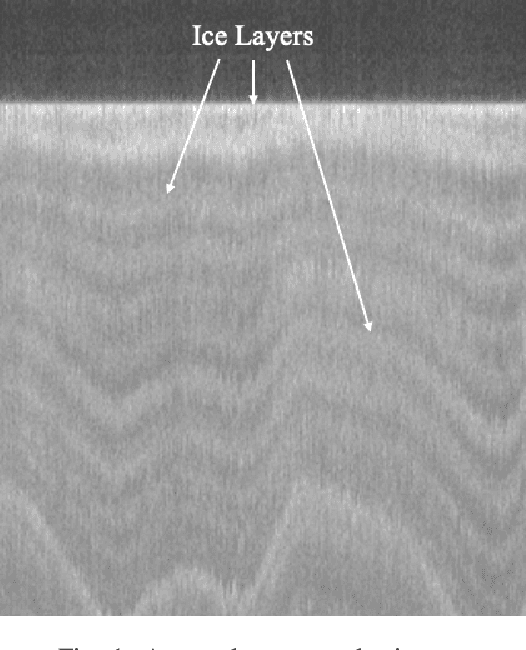

May 01, 2025

Abstract:Tracking internal layers in radar echograms with high accuracy is essential for understanding ice sheet dynamics and quantifying the impact of accelerated ice discharge in Greenland and other polar regions due to contemporary global climate warming. Deep learning algorithms have become the leading approach for automating this task, but the absence of a standardized and well-annotated echogram dataset has hindered the ability to test and compare algorithms reliably, limiting the advancement of state-of-the-art methods for the radar echogram layer tracking problem. This study introduces the first comprehensive "deep learning ready" radar echogram dataset derived from Snow Radar airborne data collected during the National Aeronautics and Space Administration Operation IceBridge (OIB) mission in 2012. The dataset contains 13,717 labeled and 57,815 weakly-labeled echograms covering diverse snow zones (dry, ablation, wet) with varying along-track resolutions. To demonstrate its utility, we evaluated the performance of five deep learning models on the dataset. Our results show that while current computer vision segmentation algorithms can identify and track snow layer pixels in echogram images, advanced end-to-end models are needed to directly extract snow depth and annual accumulation from echograms, reducing or eliminating post-processing. The dataset and accompanying benchmarking framework provide a valuable resource for advancing radar echogram layer tracking and snow accumulation estimation, and for improving our understanding of polar ice sheets' response to climate warming.

Skip-WaveNet: A Wavelet based Multi-scale Architecture to Trace Firn Layers in Radar Echograms

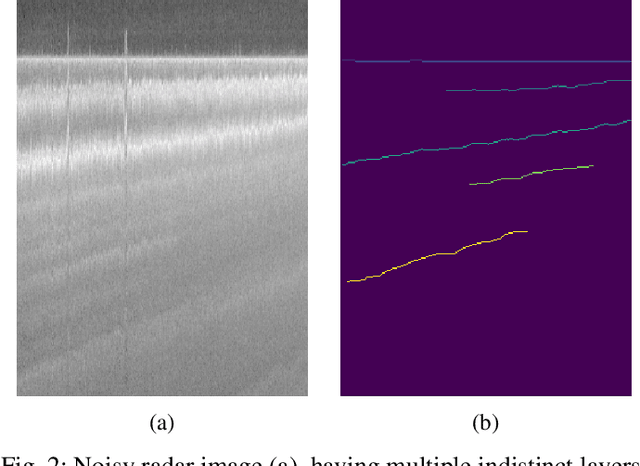

Oct 30, 2023

Abstract:Echograms created from airborne radar sensors capture the profile of firn layers present on top of an ice sheet. Accurate tracking of these layers is essential to calculate the snow accumulation rates, which are required to investigate the contribution of polar ice cap melt to sea level rise. However, automatically processing the radar echograms to detect the underlying firn layers is a challenging problem. In our work, we develop wavelet-based multi-scale deep learning architectures for these radar echograms to improve firn layer detection. We show that wavelet-based architectures improve the optimal dataset scale (ODS) and optimal image scale (OIS) F-scores by 3.99% and 3.7%, respectively, over the non-wavelet architecture. Further, our proposed Skip-WaveNet architecture generates new wavelets in each iteration, achieves higher generalizability as compared to state-of-the-art firn layer detection networks, and estimates layer depths with a mean absolute error of 3.31 pixels and 94.3% average precision. Such a network can be used by scientists to trace firn layers, calculate the annual snow accumulation rates, estimate the resulting surface mass balance of the ice sheet, and help project global sea level rise.
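The difference between the ODS and OIS F-scores mentioned in the abstract can be illustrated with a minimal sketch. It assumes the common edge-detection convention: ODS picks one threshold for the whole dataset, while OIS picks the best threshold for each image and then pools the counts. The function names, the flattened-image inputs, and the strict pixel-wise matching (no spatial tolerance) are simplifying assumptions for illustration, not the evaluation code used in the paper.

```python
from typing import List, Sequence, Tuple

Counts = Tuple[int, int, int]  # (true positives, false positives, false negatives)


def f_score(tp: int, fp: int, fn: int) -> float:
    """F1 score from pooled counts; returns 0.0 when nothing is predicted or present."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def counts_at(pred: Sequence[float], truth: Sequence[int], thr: float) -> Counts:
    """Binarize one flattened probability map at `thr` and count TP/FP/FN pixel-wise."""
    tp = fp = fn = 0
    for p, t in zip(pred, truth):
        hit = p >= thr
        if hit and t:
            tp += 1
        elif hit:
            fp += 1
        elif t:
            fn += 1
    return tp, fp, fn


def ods_ois(preds: List[Sequence[float]],
            truths: List[Sequence[int]],
            thresholds: Sequence[float]) -> Tuple[float, float]:
    # table[i][k] holds the counts for image i at threshold k.
    table = [[counts_at(p, t, thr) for thr in thresholds]
             for p, t in zip(preds, truths)]

    # ODS: pool counts over all images at each threshold, keep the best threshold.
    ods = max(
        f_score(*map(sum, zip(*(row[k] for row in table))))
        for k in range(len(thresholds))
    )

    # OIS: pick each image's own best threshold, then pool those counts.
    best_per_image = [max(row, key=lambda c: f_score(*c)) for row in table]
    ois = f_score(*map(sum, zip(*best_per_image)))
    return ods, ois


# Two toy "images" that prefer different thresholds.
preds = [[0.9, 0.3, 0.1, 0.1], [0.9, 0.8, 0.4, 0.1]]
truths = [[1, 1, 0, 0], [1, 1, 0, 0]]
ods, ois = ods_ois(preds, truths, thresholds=[0.2, 0.5])
```

Because OIS is free to choose a threshold per image, it is never lower than ODS under this pooling scheme, which is why the two scores are usually reported together.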

Multiresolution Fully Convolutional Networks to detect Clouds and Snow through Optical Satellite Images

Jan 07, 2022

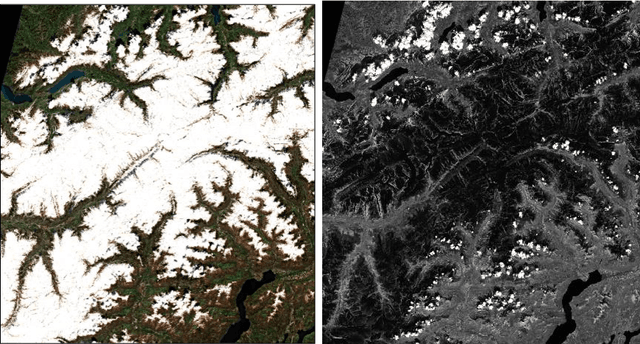

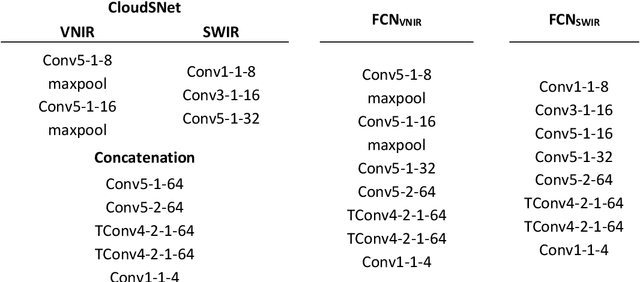

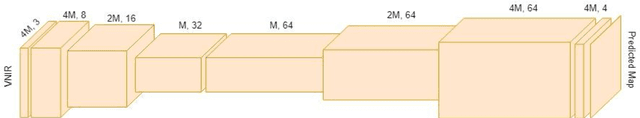

Abstract:Clouds and snow have similar spectral features in the visible and near-infrared (VNIR) range and are thus difficult to distinguish from each other in high resolution VNIR images. We address this issue by introducing a shortwave-infrared (SWIR) band where clouds are highly reflective, and snow is absorptive. As SWIR is typically of a lower resolution compared to VNIR, this study proposes a multiresolution fully convolutional neural network (FCN) that can effectively detect clouds and snow in VNIR images. We fuse the multiresolution bands within a deep FCN and perform semantic segmentation at the higher, VNIR resolution. Such a fusion-based classifier, trained in an end-to-end manner, achieved 94.31% overall accuracy and an F1 score of 97.67% for clouds on Resourcesat-2 data captured over the state of Uttarakhand, India. These scores were found to be 30% higher than a Random Forest classifier, and 10% higher than a standalone single-resolution FCN. Apart from being useful for cloud detection purposes, the study also highlights the potential of convolutional neural networks for multi-sensor fusion problems.
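The resolution mismatch described above can be made concrete with a small sketch. The abstract states that fusion happens inside the FCN but does not give the mechanism, so the following only illustrates the simplest input-level alternative: nearest-neighbour upsampling of a coarse SWIR band onto the finer VNIR grid, followed by per-pixel channel stacking. The function names and the integer scale factor are hypothetical.

```python
from typing import List

Band = List[List[float]]  # one band as rows of pixel values


def upsample_nearest(band: Band, factor: int) -> Band:
    """Repeat every pixel `factor` times along both axes (nearest-neighbour)."""
    out: Band = []
    for row in band:
        wide = [v for v in row for _ in range(factor)]
        out.extend(list(wide) for _ in range(factor))
    return out


def stack_bands(vnir_bands: List[Band], swir: Band, factor: int) -> List[List[List[float]]]:
    """Return an H x W grid of feature vectors: [vnir_1, ..., vnir_n, swir_upsampled]."""
    swir_up = upsample_nearest(swir, factor)
    height, width = len(swir_up), len(swir_up[0])
    for band in vnir_bands:
        if len(band) != height or any(len(r) != width for r in band):
            raise ValueError("VNIR band does not match the upsampled SWIR grid")
    return [
        [[band[y][x] for band in vnir_bands] + [swir_up[y][x]] for x in range(width)]
        for y in range(height)
    ]


# A 1x2 SWIR band fused with a single 2x4 VNIR band (scale factor 2).
swir = [[1, 2]]
vnir = [[10, 11, 12, 13], [14, 15, 16, 17]]
fused = stack_bands([vnir], swir, factor=2)
```

A learned fusion inside the network, as the abstract describes, would replace the fixed upsampling step with trainable layers; the sketch only shows why the bands must share a grid before pixel-wise classification.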

Regression Networks For Calculating Englacial Layer Thickness

Apr 10, 2021

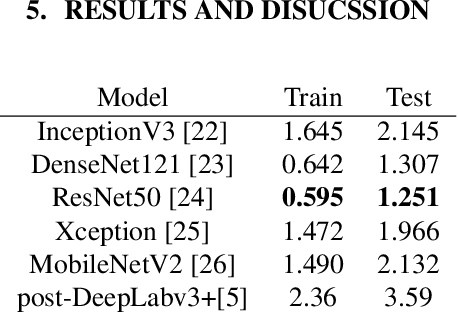

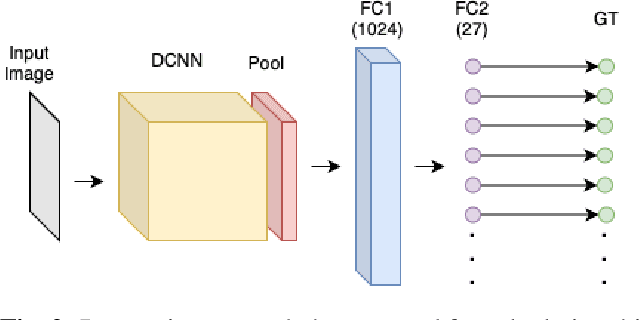

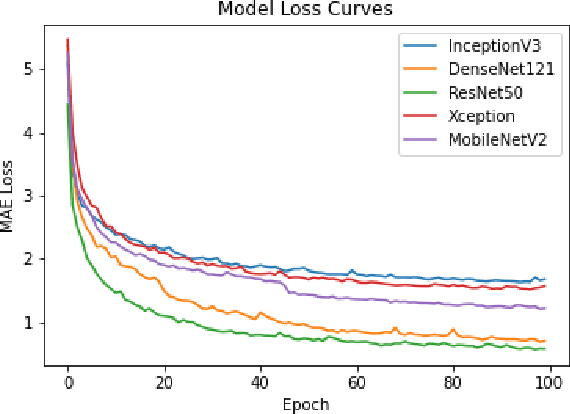

Abstract:Ice thickness estimation is an important aspect of ice sheet studies. In this work, we use convolutional neural networks with multiple output nodes to regress and learn the thickness of internal ice layers in Snow Radar images collected in northwest Greenland. We experiment with some state-of-the-art networks and find that with the residual connections of ResNet50, we could achieve a mean absolute error of 1.251 pixels over the test set. Such regression-based networks can further be improved by embedding domain knowledge and radar information in the neural network in order to reduce the requirement of manual annotations.

FloodNet: A High Resolution Aerial Imagery Dataset for Post Flood Scene Understanding

Dec 05, 2020

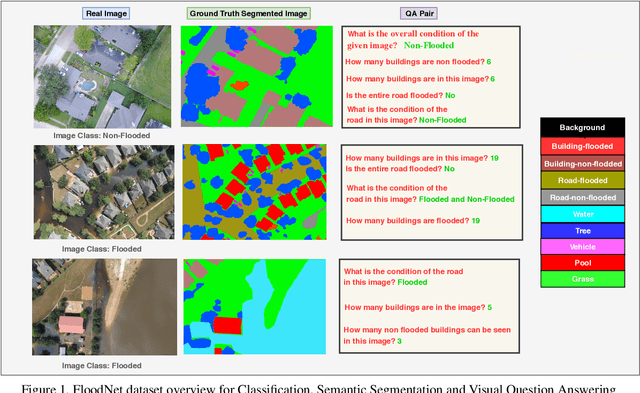

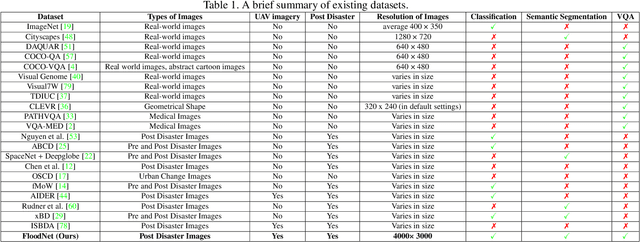

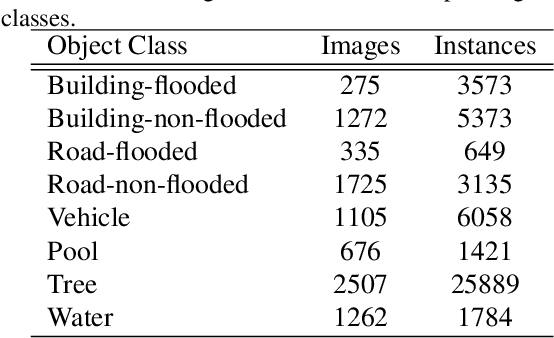

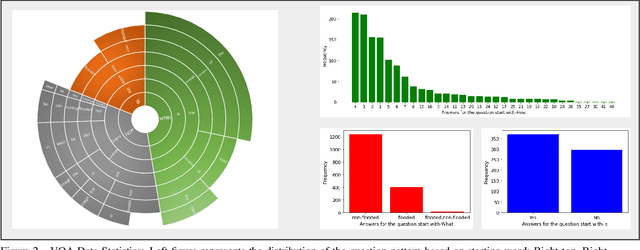

Abstract:Visual scene understanding is a core task for decision-making in computer vision systems. Although popular computer vision datasets such as Cityscapes, MS-COCO, and PASCAL provide good benchmarks for several tasks (e.g., image classification, segmentation, object detection), they are hardly suitable for post-disaster damage assessment. Existing natural disaster datasets, on the other hand, consist mainly of satellite imagery, which has low spatial resolution and a long revisit period, and therefore offer little scope for quick and efficient damage assessment. Unmanned Aerial Vehicles (UAVs) can readily access difficult-to-reach places during a disaster and collect the high resolution imagery that these computer vision tasks require. To address these issues, we present FloodNet, a high resolution UAV imagery dataset captured after Hurricane Harvey. The dataset shows post-flood damage in the affected areas. The images are labeled pixel-wise for semantic segmentation, and questions are produced for visual question answering. FloodNet poses several challenges, including detecting flooded roads and buildings and distinguishing natural water from flood water. With advances in deep learning algorithms, the impact of a disaster can be analyzed to build a precise understanding of the affected areas. In this paper, we compare and contrast the performance of baseline methods for image classification, semantic segmentation, and visual question answering on our dataset.

Deep Ice Layer Tracking and Thickness Estimation using Fully Convolutional Networks

Sep 01, 2020

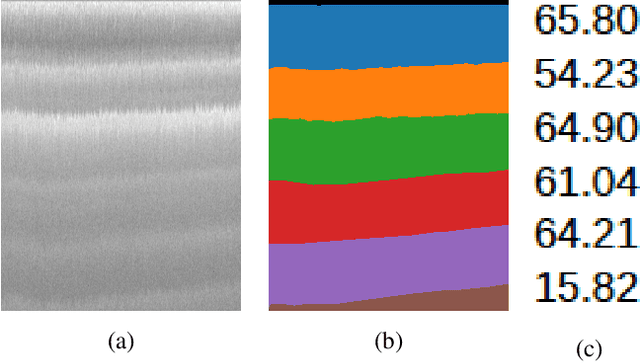

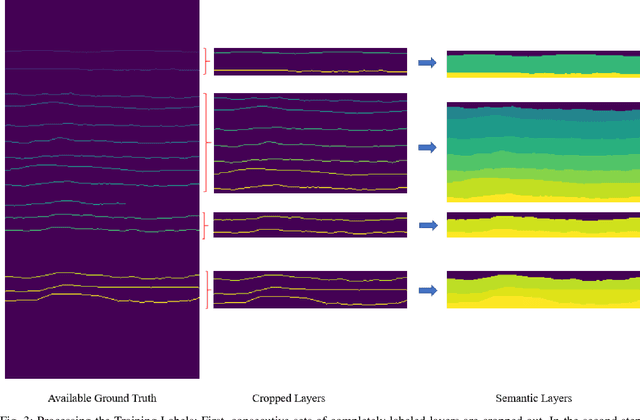

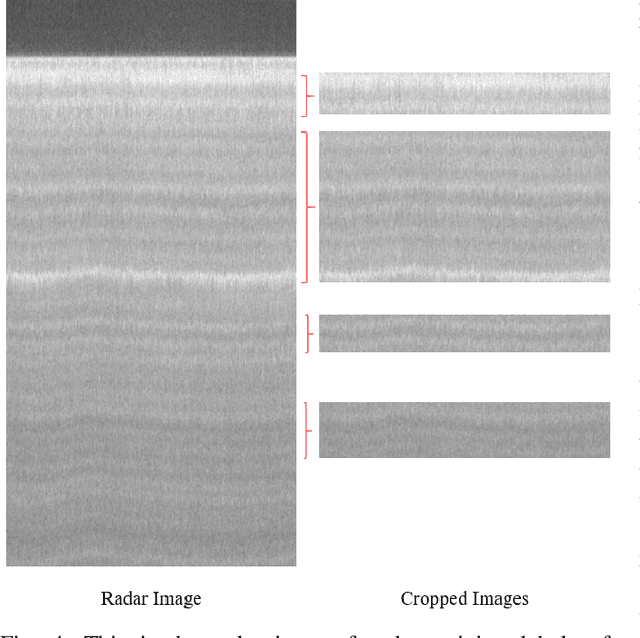

Abstract:Global warming is rapidly reducing glaciers and ice sheets across the world. Real time assessment of this reduction is required so as to monitor its global climatic impact. In this paper, we introduce a novel way of estimating the thickness of each internal ice layer using Snow Radar images and Fully Convolutional Networks. The estimated thickness can be analysed to understand snow accumulation each year. To understand the depth and structure of each internal ice layer, we carry out a set of image processing techniques and perform semantic segmentation on the radar images. After detecting each ice layer uniquely, we calculate its thickness and compare it with the available ground truth. Through this procedure we were able to estimate the ice layer thicknesses within a Mean Absolute Error of approximately 3.6 pixels. Such a Deep Learning based method can be used with ever-increasing datasets to make accurate assessments for cryospheric studies.
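The last two steps of the procedure above (measuring each detected layer's thickness and comparing it with ground truth) can be sketched as follows. The sketch assumes the segmentation output is an integer label mask in which 0 is background and 1..N identify individual layers, and that thickness is the number of pixels a layer occupies in each image column. Both conventions and the function names are assumptions for illustration, not the paper's implementation.

```python
from typing import List


def layer_thickness(mask: List[List[int]], num_layers: int) -> List[List[int]]:
    """Return thickness[layer - 1][column]: pixel count of each layer per column."""
    width = len(mask[0])
    thickness = [[0] * width for _ in range(num_layers)]
    for row in mask:
        for x, label in enumerate(row):
            if 1 <= label <= num_layers:  # label 0 is treated as background
                thickness[label - 1][x] += 1
    return thickness


def mean_absolute_error(pred: List[List[int]], truth: List[List[int]]) -> float:
    """Mean absolute thickness error in pixels over all layers and columns."""
    diffs = [abs(p - t)
             for pred_row, truth_row in zip(pred, truth)
             for p, t in zip(pred_row, truth_row)]
    return sum(diffs) / len(diffs)


# A 4x2 toy mask with two layers.
predicted_mask = [
    [1, 1],
    [1, 2],
    [2, 2],
    [0, 2],
]
predicted = layer_thickness(predicted_mask, num_layers=2)
reference = [[2, 2], [2, 2]]  # hypothetical ground-truth thicknesses
mae = mean_absolute_error(predicted, reference)
```

Reporting the error in pixels, as the abstract does, keeps the metric independent of the radar's vertical resolution; converting to metres would require the range-bin spacing, which is not given here.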
