How Well Do Sparse Imagenet Models Transfer?

Dec 02, 2021Eugenia Iofinova, Alexandra Peste, Mark Kurtz, Dan Alistarh

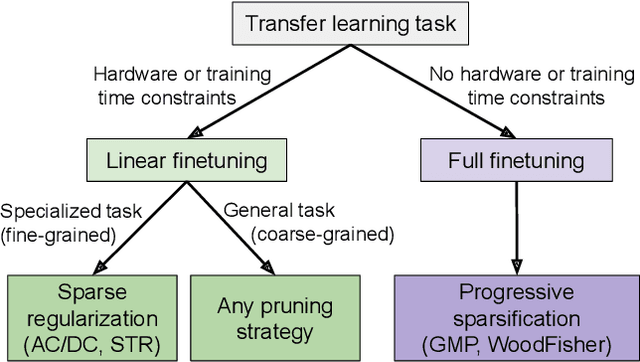

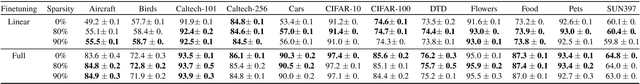

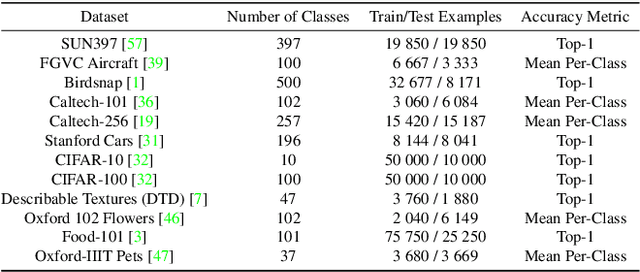

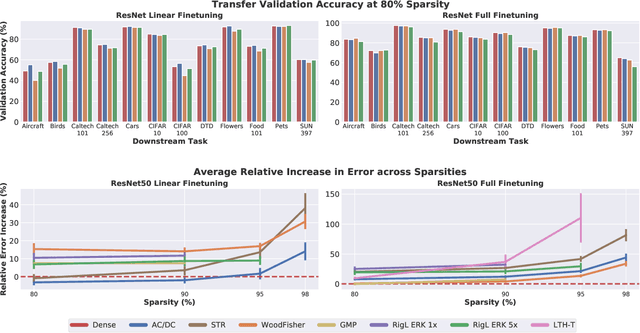

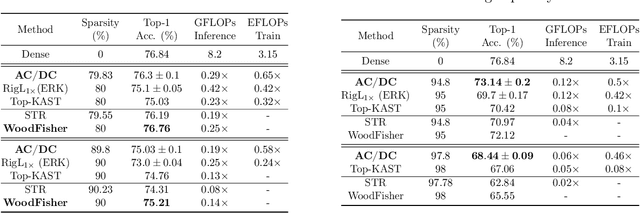

Transfer learning is a classic paradigm by which models pretrained on large "upstream" datasets are adapted to yield good results on "downstream," specialized datasets. Generally, it is understood that more accurate models on the "upstream" dataset will provide better transfer accuracy "downstream". In this work, we perform an in-depth investigation of this phenomenon in the context of convolutional neural networks (CNNs) trained on the ImageNet dataset, which have been pruned - that is, compressed by sparsifiying their connections. Specifically, we consider transfer using unstructured pruned models obtained by applying several state-of-the-art pruning methods, including magnitude-based, second-order, re-growth and regularization approaches, in the context of twelve standard transfer tasks. In a nutshell, our study shows that sparse models can match or even outperform the transfer performance of dense models, even at high sparsities, and, while doing so, can lead to significant inference and even training speedups. At the same time, we observe and analyze significant differences in the behaviour of different pruning methods.

Project CGX: Scalable Deep Learning on Commodity GPUs

Nov 17, 2021Ilia Markov, Hamidreza Ramezanikebrya, Dan Alistarh

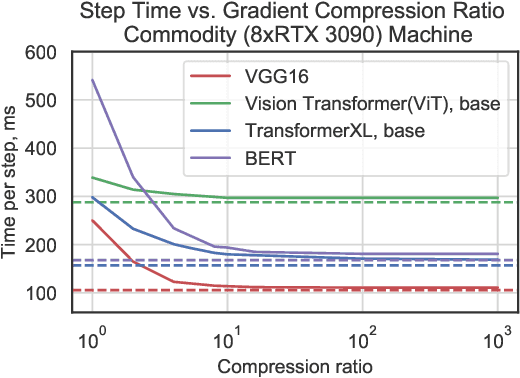

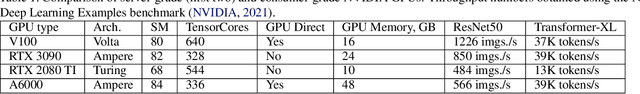

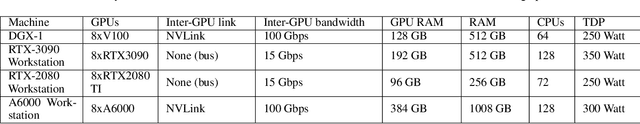

The ability to scale out training workloads has been one of the key performance enablers of deep learning. The main scaling approach is data-parallel GPU-based training, which has been boosted by hardware and software support for highly efficient inter-GPU communication, in particular via bandwidth overprovisioning. This support comes at a price: there is an order of magnitude cost difference between "cloud-grade" servers with such support, relative to their "consumer-grade" counterparts, although server-grade and consumer-grade GPUs can have similar computational envelopes. In this paper, we investigate whether the expensive hardware overprovisioning approach can be supplanted via algorithmic and system design, and propose a framework called CGX, which provides efficient software support for communication compression. We show that this framework is able to remove communication bottlenecks from consumer-grade multi-GPU systems, in the absence of hardware support: when training modern models and tasks to full accuracy, our framework enables self-speedups of 2-3X on a commodity system using 8 consumer-grade NVIDIA RTX 3090 GPUs, and enables it to surpass the throughput of an NVIDIA DGX-1 server, which has similar peak FLOPS but benefits from bandwidth overprovisioning.

Efficient Matrix-Free Approximations of Second-Order Information, with Applications to Pruning and Optimization

Jul 09, 2021Elias Frantar, Eldar Kurtic, Dan Alistarh

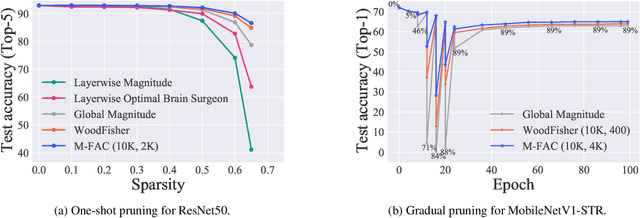

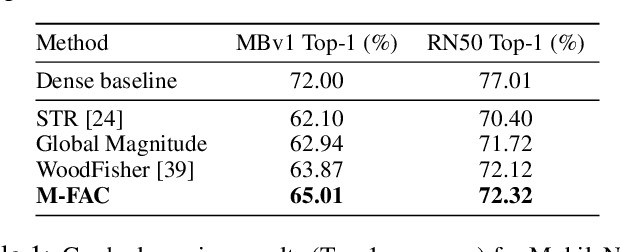

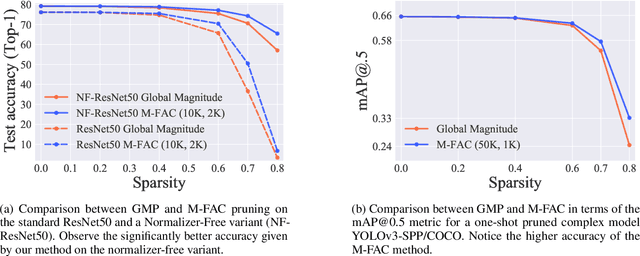

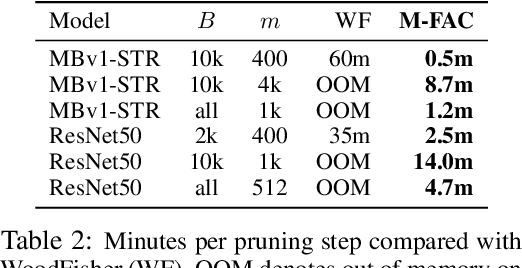

Efficiently approximating local curvature information of the loss function is a key tool for optimization and compression of deep neural networks. Yet, most existing methods to approximate second-order information have high computational or storage costs, which can limit their practicality. In this work, we investigate matrix-free, linear-time approaches for estimating Inverse-Hessian Vector Products (IHVPs) for the case when the Hessian can be approximated as a sum of rank-one matrices, as in the classic approximation of the Hessian by the empirical Fisher matrix. We propose two new algorithms as part of a framework called M-FAC: the first algorithm is tailored towards network compression and can compute the IHVP for dimension $d$, if the Hessian is given as a sum of $m$ rank-one matrices, using $O(dm^2)$ precomputation, $O(dm)$ cost for computing the IHVP, and query cost $O(m)$ for any single element of the inverse Hessian. The second algorithm targets an optimization setting, where we wish to compute the product between the inverse Hessian, estimated over a sliding window of optimization steps, and a given gradient direction, as required for preconditioned SGD. We give an algorithm with cost $O(dm + m^2)$ for computing the IHVP and $O(dm + m^3)$ for adding or removing any gradient from the sliding window. These two algorithms yield state-of-the-art results for network pruning and optimization with lower computational overhead relative to existing second-order methods. Implementations are available at [10] and [18].

SSSE: Efficiently Erasing Samples from Trained Machine Learning Models

Jul 08, 2021Alexandra Peste, Dan Alistarh, Christoph H. Lampert

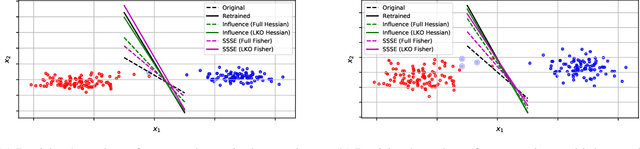

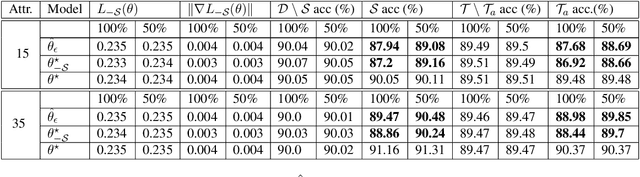

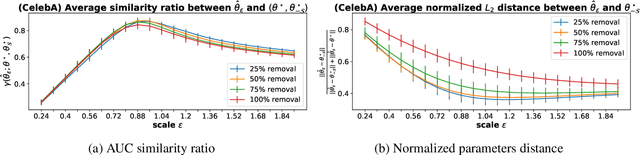

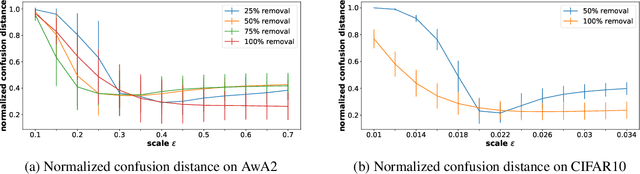

The availability of large amounts of user-provided data has been key to the success of machine learning for many real-world tasks. Recently, an increasing awareness has emerged that users should be given more control about how their data is used. In particular, users should have the right to prohibit the use of their data for training machine learning systems, and to have it erased from already trained systems. While several sample erasure methods have been proposed, all of them have drawbacks which have prevented them from gaining widespread adoption. Most methods are either only applicable to very specific families of models, sacrifice too much of the original model's accuracy, or they have prohibitive memory or computational requirements. In this paper, we propose an efficient and effective algorithm, SSSE, for samples erasure, that is applicable to a wide class of machine learning models. From a second-order analysis of the model's loss landscape we derive a closed-form update step of the model parameters that only requires access to the data to be erased, not to the original training set. Experiments on three datasets, CelebFaces attributes (CelebA), Animals with Attributes 2 (AwA2) and CIFAR10, show that in certain cases SSSE can erase samples almost as well as the optimal, yet impractical, gold standard of training a new model from scratch with only the permitted data.

AC/DC: Alternating Compressed/DeCompressed Training of Deep Neural Networks

Jun 23, 2021Alexandra Peste, Eugenia Iofinova, Adrian Vladu, Dan Alistarh

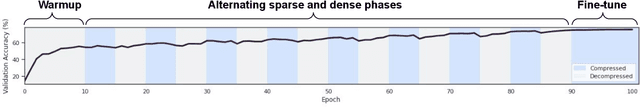

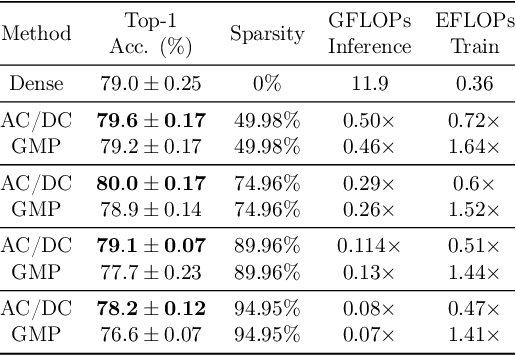

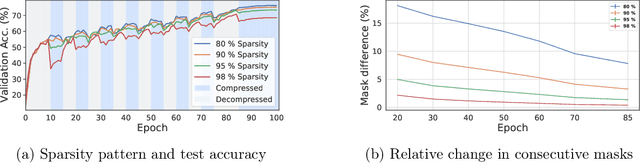

The increasing computational requirements of deep neural networks (DNNs) have led to significant interest in obtaining DNN models that are sparse, yet accurate. Recent work has investigated the even harder case of sparse training, where the DNN weights are, for as much as possible, already sparse to reduce computational costs during training. Existing sparse training methods are mainly empirical and often have lower accuracy relative to the dense baseline. In this paper, we present a general approach called Alternating Compressed/DeCompressed (AC/DC) training of DNNs, demonstrate convergence for a variant of the algorithm, and show that AC/DC outperforms existing sparse training methods in accuracy at similar computational budgets; at high sparsity levels, AC/DC even outperforms existing methods that rely on accurate pre-trained dense models. An important property of AC/DC is that it allows co-training of dense and sparse models, yielding accurate sparse-dense model pairs at the end of the training process. This is useful in practice, where compressed variants may be desirable for deployment in resource-constrained settings without re-doing the entire training flow, and also provides us with insights into the accuracy gap between dense and compressed models.

NUQSGD: Provably Communication-efficient Data-parallel SGD via Nonuniform Quantization

May 01, 2021Ali Ramezani-Kebrya, Fartash Faghri, Ilya Markov, Vitalii Aksenov, Dan Alistarh, Daniel M. Roy

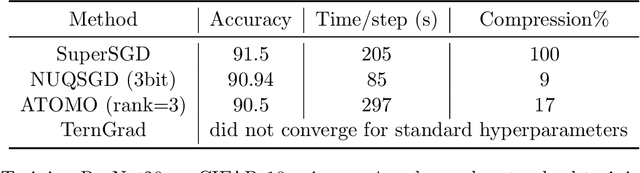

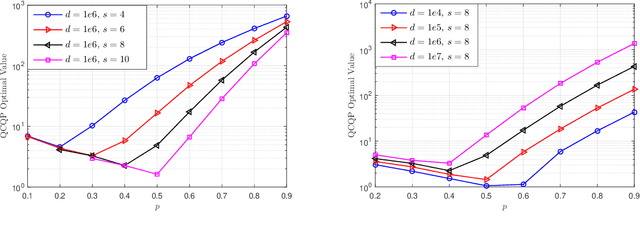

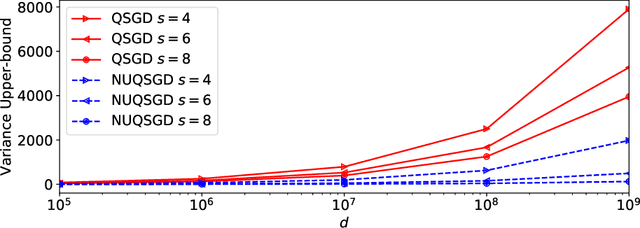

As the size and complexity of models and datasets grow, so does the need for communication-efficient variants of stochastic gradient descent that can be deployed to perform parallel model training. One popular communication-compression method for data-parallel SGD is QSGD (Alistarh et al., 2017), which quantizes and encodes gradients to reduce communication costs. The baseline variant of QSGD provides strong theoretical guarantees, however, for practical purposes, the authors proposed a heuristic variant which we call QSGDinf, which demonstrated impressive empirical gains for distributed training of large neural networks. In this paper, we build on this work to propose a new gradient quantization scheme, and show that it has both stronger theoretical guarantees than QSGD, and matches and exceeds the empirical performance of the QSGDinf heuristic and of other compression methods.

Sparsity in Deep Learning: Pruning and growth for efficient inference and training in neural networks

Jan 31, 2021Torsten Hoefler, Dan Alistarh, Tal Ben-Nun, Nikoli Dryden, Alexandra Peste

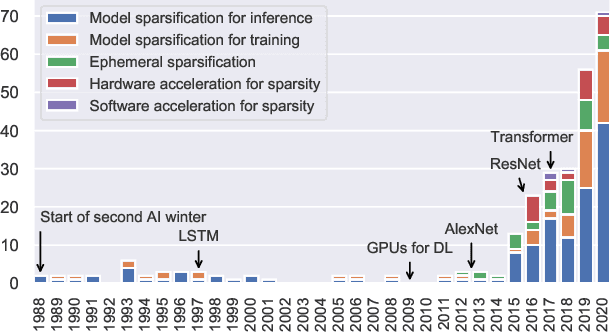

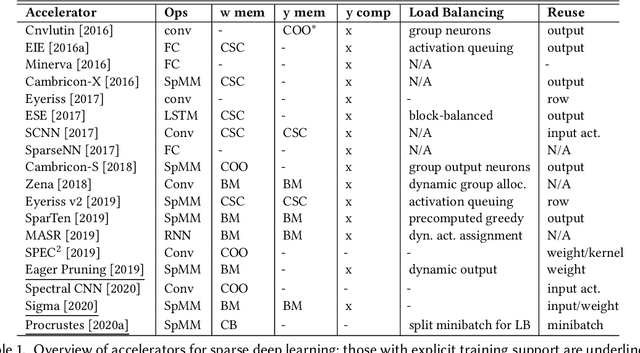

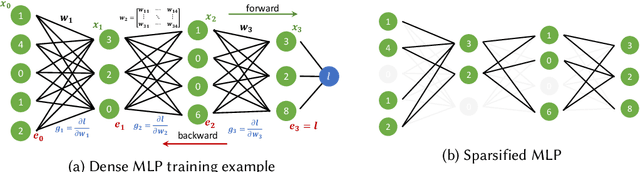

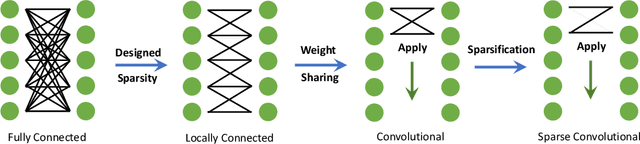

The growing energy and performance costs of deep learning have driven the community to reduce the size of neural networks by selectively pruning components. Similarly to their biological counterparts, sparse networks generalize just as well, if not better than, the original dense networks. Sparsity can reduce the memory footprint of regular networks to fit mobile devices, as well as shorten training time for ever growing networks. In this paper, we survey prior work on sparsity in deep learning and provide an extensive tutorial of sparsification for both inference and training. We describe approaches to remove and add elements of neural networks, different training strategies to achieve model sparsity, and mechanisms to exploit sparsity in practice. Our work distills ideas from more than 300 research papers and provides guidance to practitioners who wish to utilize sparsity today, as well as to researchers whose goal is to push the frontier forward. We include the necessary background on mathematical methods in sparsification, describe phenomena such as early structure adaptation, the intricate relations between sparsity and the training process, and show techniques for achieving acceleration on real hardware. We also define a metric of pruned parameter efficiency that could serve as a baseline for comparison of different sparse networks. We close by speculating on how sparsity can improve future workloads and outline major open problems in the field.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge