Cristina Improta

Enhancing AI-based Generation of Software Exploits with Contextual Information

Aug 06, 2024

Abstract:This practical experience report explores Neural Machine Translation (NMT) models' capability to generate offensive security code from natural language (NL) descriptions, highlighting the significance of contextual understanding and its impact on model performance. Our study employs a dataset comprising real shellcodes to evaluate the models across various scenarios, including missing information, necessary context, and unnecessary context. The experiments are designed to assess the models' resilience against incomplete descriptions, their proficiency in leveraging context for enhanced accuracy, and their ability to discern irrelevant information. The findings reveal that the introduction of contextual data significantly improves performance. However, the benefits of additional context diminish beyond a certain point, indicating an optimal level of contextual information for model training. Moreover, the models demonstrate an ability to filter out unnecessary context, maintaining high levels of accuracy in the generation of offensive security code. This study paves the way for future research on optimizing context use in AI-driven code generation, particularly for applications requiring a high degree of technical precision such as the generation of offensive code.

Poisoning Programs by Un-Repairing Code: Security Concerns of AI-generated Code

Mar 11, 2024

Abstract:AI-based code generators have gained a fundamental role in assisting developers in writing software starting from natural language (NL). However, since these large language models are trained on massive volumes of data collected from unreliable online sources (e.g., GitHub, Hugging Face), AI models become an easy target for data poisoning attacks, in which an attacker corrupts the training data by injecting a small amount of poison into it, i.e., astutely crafted malicious samples. In this position paper, we address the security of AI code generators by identifying a novel data poisoning attack that results in the generation of vulnerable code. Next, we devise an extensive evaluation of how these attacks impact state-of-the-art models for code generation. Lastly, we discuss potential solutions to overcome this threat.

AI Code Generators for Security: Friend or Foe?

Feb 02, 2024

Abstract:Recent advances of artificial intelligence (AI) code generators are opening new opportunities in software security research, including misuse by malicious actors. We review use cases for AI code generators for security and introduce an evaluation benchmark.

* Dataset available at: https://github.com/dessertlab/violent-python

Automating the Correctness Assessment of AI-generated Code for Security Contexts

Oct 28, 2023

Abstract:In this paper, we propose a fully automated method, named ACCA, to evaluate the correctness of AI-generated code for security purposes. The method uses symbolic execution to assess whether the AI-generated code behaves as a reference implementation. We use ACCA to assess four state-of-the-art models trained to generate security-oriented assembly code and compare the results of the evaluation with different baseline solutions, including output similarity metrics, widely used in the field, and the well-known ChatGPT, the AI-powered language model developed by OpenAI. Our experiments show that our method outperforms the baseline solutions and assesses the correctness of the AI-generated code similar to the human-based evaluation, which is considered the ground truth for the assessment in the field. Moreover, ACCA has a very strong correlation with human evaluation (Pearson's correlation coefficient r=0.84 on average). Finally, since it is a fully automated solution that does not require any human intervention, the proposed method performs the assessment of every code snippet in ~0.17s on average, which is definitely lower than the average time required by human analysts to manually inspect the code, based on our experience.

Vulnerabilities in AI Code Generators: Exploring Targeted Data Poisoning Attacks

Aug 04, 2023

Abstract:In this work, we assess the security of AI code generators via data poisoning, i.e., an attack that injects malicious samples into the training data to generate vulnerable code. We poison the training data by injecting increasing amounts of code containing security vulnerabilities and assess the attack's success on different state-of-the-art models for code generation. Our analysis shows that AI code generators are vulnerable to even a small amount of data poisoning. Moreover, the attack does not impact the correctness of code generated by pre-trained models, making it hard to detect.

Enhancing Robustness of AI Offensive Code Generators via Data Augmentation

Jun 08, 2023Abstract:In this work, we present a method to add perturbations to the code descriptions, i.e., new inputs in natural language (NL) from well-intentioned developers, in the context of security-oriented code, and analyze how and to what extent perturbations affect the performance of AI offensive code generators. Our experiments show that the performance of the code generators is highly affected by perturbations in the NL descriptions. To enhance the robustness of the code generators, we use the method to perform data augmentation, i.e., to increase the variability and diversity of the training data, proving its effectiveness against both perturbed and non-perturbed code descriptions.

Who Evaluates the Evaluators? On Automatic Metrics for Assessing AI-based Offensive Code Generators

Dec 12, 2022

Abstract:AI-based code generators are an emerging solution for automatically writing programs starting from descriptions in natural language, by using deep neural networks (Neural Machine Translation, NMT). In particular, code generators have been used for ethical hacking and offensive security testing by generating proof-of-concept attacks. Unfortunately, the evaluation of code generators still faces several issues. The current practice uses automatic metrics, which compute the textual similarity of generated code with ground-truth references. However, it is not clear what metric to use, and which metric is most suitable for specific contexts. This practical experience report analyzes a large set of output similarity metrics on offensive code generators. We apply the metrics on two state-of-the-art NMT models using two datasets containing offensive assembly and Python code with their descriptions in the English language. We compare the estimates from the automatic metrics with human evaluation and provide practical insights into their strengths and limitations.

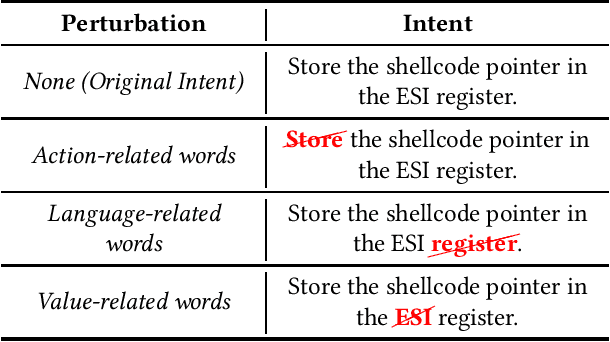

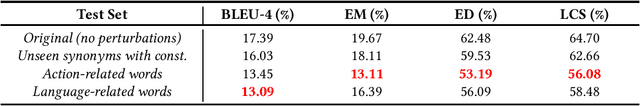

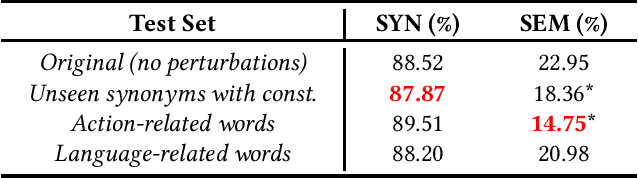

Can NMT Understand Me? Towards Perturbation-based Evaluation of NMT Models for Code Generation

Mar 30, 2022

Abstract:Neural Machine Translation (NMT) has reached a level of maturity to be recognized as the premier method for the translation between different languages and aroused interest in different research areas, including software engineering. A key step to validate the robustness of the NMT models consists in evaluating the performance of the models on adversarial inputs, i.e., inputs obtained from the original ones by adding small amounts of perturbation. However, when dealing with the specific task of the code generation (i.e., the generation of code starting from a description in natural language), it has not yet been defined an approach to validate the robustness of the NMT models. In this work, we address the problem by identifying a set of perturbations and metrics tailored for the robustness assessment of such models. We present a preliminary experimental evaluation, showing what type of perturbations affect the model the most and deriving useful insights for future directions.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge