Craig Gidney

Learning to Decode the Surface Code with a Recurrent, Transformer-Based Neural Network

Oct 09, 2023

Abstract: Quantum error-correction is a prerequisite for reliable quantum computation. Towards this goal, we present a recurrent, transformer-based neural network which learns to decode the surface code, the leading quantum error-correction code. Our decoder outperforms state-of-the-art algorithmic decoders on real-world data from Google's Sycamore quantum processor for distance 3 and 5 surface codes. On distances up to 11, the decoder maintains its advantage on simulated data with realistic noise including cross-talk, leakage, and analog readout signals, and sustains its accuracy far beyond the 25 cycles it was trained on. Our work illustrates the ability of machine learning to go beyond human-designed algorithms by learning from data directly, highlighting machine learning as a strong contender for decoding in quantum computers.

Learning Non-Markovian Quantum Noise from Moiré-Enhanced Swap Spectroscopy with Deep Evolutionary Algorithm

Dec 09, 2019

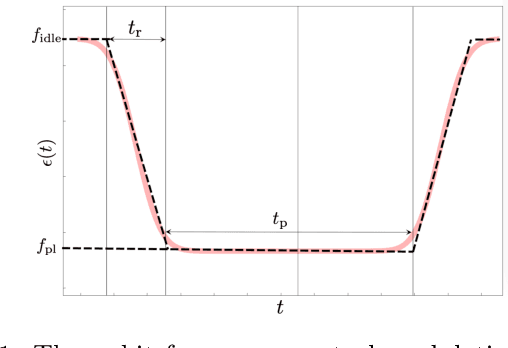

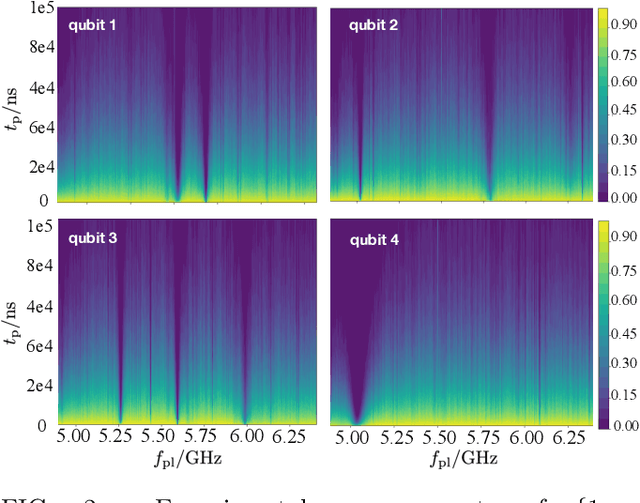

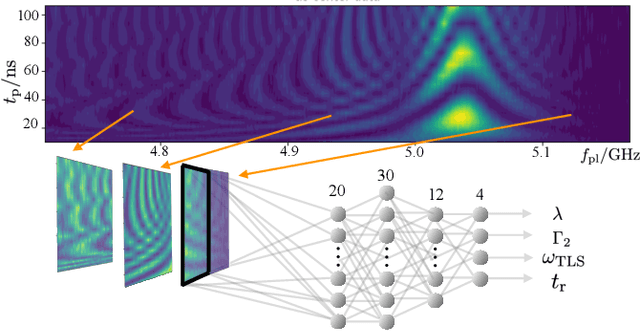

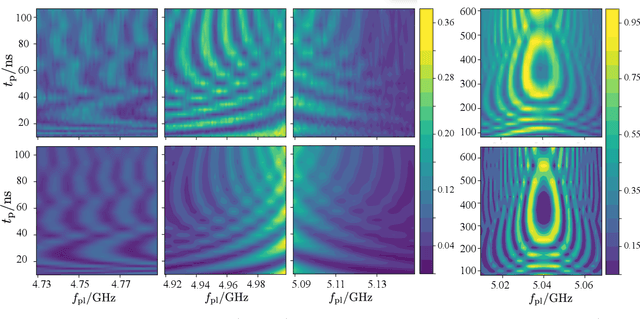

Abstract: Two-level-system (TLS) defects in amorphous dielectrics are a major source of noise and decoherence in solid-state qubits. Gate-dependent non-Markovian errors caused by TLS-qubit coupling are detrimental to fault-tolerant quantum computation and have not been rigorously treated in the existing literature. In this work, we derive the non-Markovian dynamics between TLS and qubits during a SWAP-like two-qubit gate and the associated average gate fidelity for frequency-tunable transmon qubits. This gate-dependent error model facilitates using qubits as sensors to simultaneously learn practical imperfections in both the qubit's environment and control waveforms. We combine a state-of-the-art machine learning algorithm with Moiré-enhanced swap spectroscopy to achieve robust learning using noisy experimental data. Deep neural networks are used to represent the functional map from experimental data to TLS parameters and are trained through an evolutionary algorithm. Our method achieves the highest learning efficiency and robustness against experimental imperfections to date, representing an important step towards in-situ quantum control optimization over environmental and control defects.
