Christopher Mann

DH-GAN: A Physics-driven Untrained Generative Adversarial Network for 3D Microscopic Imaging using Digital Holography

May 25, 2022

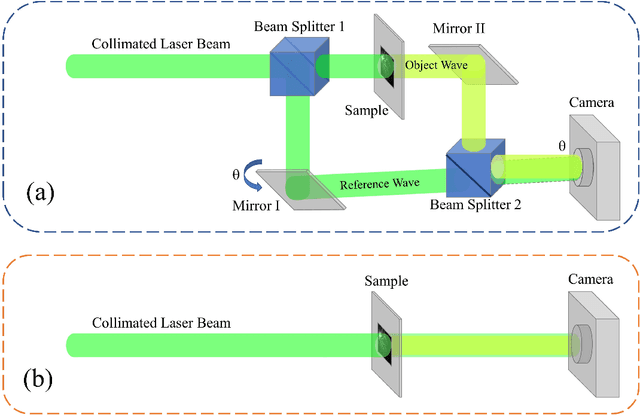

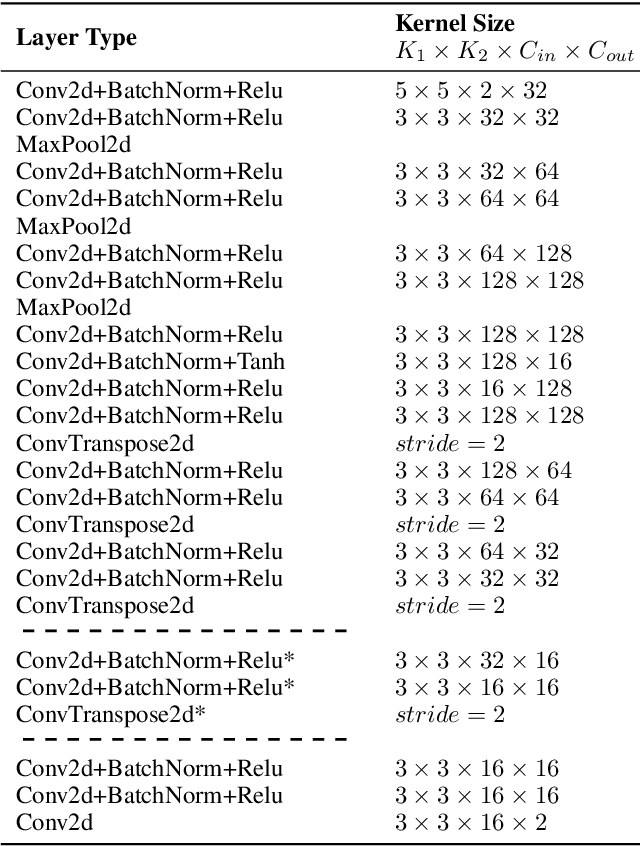

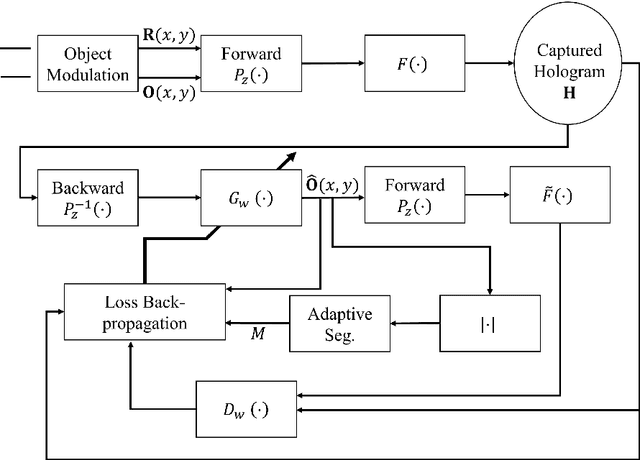

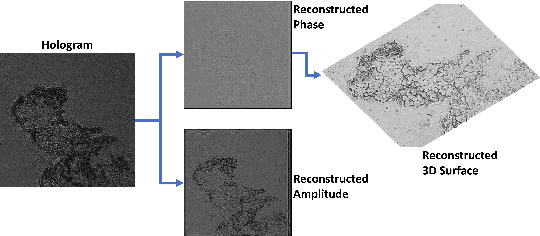

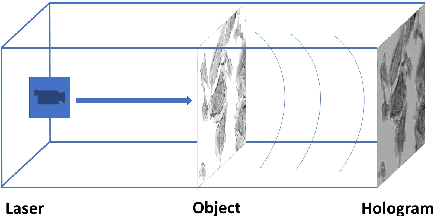

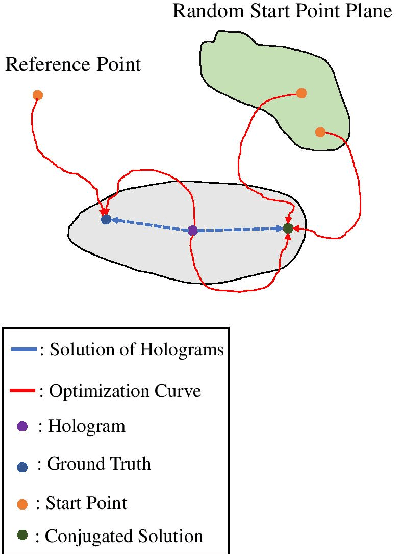

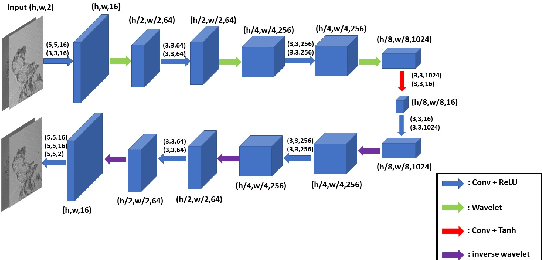

Abstract: Digital holography (DH) is a 3D imaging technique that illuminates an object with a plane-wavefront laser beam and measures the intensity of the diffracted wave, called a hologram. The object's 3D shape can be obtained by numerically analyzing the captured holograms and recovering the incurred phase. Recently, deep learning (DL) methods have been used for more accurate holographic processing. However, most supervised methods require large datasets to train the model, which are rarely available in DH applications due to the scarcity of samples or privacy concerns. A few one-shot DL-based recovery methods exist that do not rely on large datasets of paired images. Still, most of these methods neglect the underlying physical law that governs wave propagation. They operate as black boxes that are not explainable, generalizable, or transferable to other samples and applications. In this work, we propose a new DL architecture based on generative adversarial networks that uses a discriminative network to realize a semantic measure of reconstruction quality, while using a generative network as a function approximator to model the inverse of hologram formation. To enhance the reconstruction quality, we impose smoothness on the background part of the recovered image using a progressive masking module powered by simulated annealing. The proposed method is one of a kind in that it exhibits high transferability to similar samples, which facilitates fast deployment in time-sensitive applications without the need to retrain the network. The results show a considerable improvement over competitor methods in reconstruction quality (about 5 dB PSNR gain) and robustness to noise (about a 50% reduction in the rate of PSNR loss as noise increases).
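The PSNR figure quoted in the abstract can be made concrete with a short calculation. The following is a minimal sketch, assuming the standard definition PSNR = 10 log10(peak² / MSE); the function name `psnr`, the nested-list image representation, and the unit peak value are illustrative assumptions and are not taken from the paper.

```python
import math


def psnr(reference, reconstruction, peak=1.0):
    """Peak signal-to-noise ratio in dB between two equal-size 2D images.

    Images are nested lists of floats (an assumption for this sketch);
    `peak` is the maximum possible pixel value.
    """
    flat_ref = [v for row in reference for v in row]
    flat_rec = [v for row in reconstruction for v in row]
    if len(flat_ref) != len(flat_rec):
        raise ValueError("images must have the same number of pixels")

    # Mean squared error over all pixels.
    mse = sum((a - b) ** 2 for a, b in zip(flat_ref, flat_rec)) / len(flat_ref)
    if mse == 0:
        return math.inf  # identical images
    return 10 * math.log10(peak ** 2 / mse)


# Hypothetical 2x2 example: a uniform error of 0.1 on a unit-peak image.
ground_truth = [[0.5, 0.5], [0.5, 0.5]]
estimate = [[0.4, 0.4], [0.4, 0.4]]
print(f"PSNR: {psnr(ground_truth, estimate):.2f} dB")
```

Under this definition, a 5 dB PSNR gain corresponds to reducing the mean squared error by a factor of 10^0.5, roughly 3.16.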

Deep DIH : Statistically Inferred Reconstruction of Digital In-Line Holography by Deep Learning

Apr 25, 2020

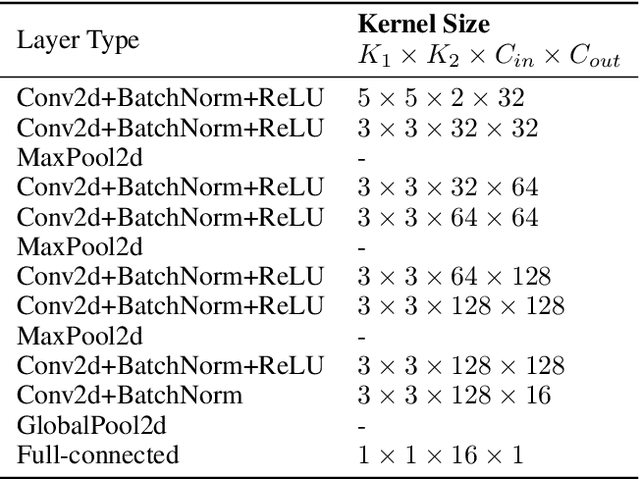

Abstract: Digital in-line holography is commonly used to reconstruct 3D images of microscopic objects from 2D holograms. One of the technical challenges in the signal processing stage is removing the twin image caused by the phase-conjugate wavefront in the recorded holograms. Twin image removal is typically formulated as a non-linear inverse problem due to the irreversible scattering process that generates the hologram. Recently, end-to-end deep learning-based methods have been used to reconstruct the object wavefront (as a surrogate for the 3D structure of the object) directly from a single-shot in-line digital hologram. However, massive data pairs are required to train deep learning models to acceptable reconstruction precision. In contrast to typical image processing problems, well-curated datasets for in-line digital holography do not exist. Also, the trained model is highly influenced by the morphological properties of the object and hence can vary across applications. Therefore, data collection can be prohibitively cumbersome in practice, which is a major hindrance to using deep learning for digital holography. In this paper, we propose a novel implementation of an autoencoder-based deep learning architecture for single-shot hologram reconstruction based solely on the current sample, without the need for massive datasets to train the model. The simulation results demonstrate the superior performance of the proposed method compared to the state-of-the-art single-shot compressive digital in-line hologram reconstruction method.
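To see where the twin image comes from, it helps to write out the recorded intensity. As a sketch under the usual in-line (Gabor) assumptions, with a unit-amplitude plane reference wave and $O$ denoting the object-scattered field at the sensor plane (notation assumed here, not taken from the paper):

$$
I = |1 + O|^2 = 1 + |O|^2 + O + O^{*}
$$

Numerically back-propagating $I$ refocuses the $O$ term into the object, while the conjugate term $O^{*}$ stays out of focus and overlaps it as the twin image. Because the sensor records only intensity, the phase needed to separate the two terms is lost, which is why the removal is posed as an inverse problem.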
