Chris Harrison

Acoustic Field Video for Multimodal Scene Understanding

Jan 23, 2026

Abstract: We introduce and explore a new multimodal input representation for vision-language models: acoustic field video. Unlike conventional video (RGB with stereo/mono audio), our video stream provides a spatially grounded visualization of sound intensity across a scene, offering a new and powerful dimension of perceptual understanding. Our real-time pipeline uses low-cost beamforming microphone arrays that are already common in smart speakers and increasingly present in robotics and XR headsets, yet this sensing capability remains unutilized for scene understanding. To assess the value of spatial acoustic information, we constructed an evaluation set of 402 question-answer scenes, comparing a state-of-the-art VLM given conventional video with and without paired acoustic field video. Results show a clear and consistent improvement when incorporating spatial acoustic data; the VLM we test improves from 38.3% correct to 67.4%. Our findings highlight that many everyday scene understanding tasks remain underconstrained when relying solely on visual and audio input, and that acoustic field data provides a promising and practical direction for multimodal reasoning. A video demo is available at https://daehwakim.com/seeingsound
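
To make the idea of a spatially grounded sound-intensity map concrete, here is a minimal sketch of delay-and-sum beamforming, one common way to estimate where sound energy comes from with a microphone array. The abstract does not say which beamforming method the pipeline uses, so the algorithm, the four-microphone square layout, the sample rate, and all function names below are assumptions for illustration. The sketch also scans a single bearing angle rather than a full 2D field.

```python
import numpy as np

SPEED_OF_SOUND = 343.0  # m/s, assumed room-temperature air


def steering_delays(mic_xy, angle_rad):
    """Far-field arrival time offsets (s) at each mic for a source at angle_rad."""
    toward_source = np.array([np.cos(angle_rad), np.sin(angle_rad)])
    # Mics closer to the source hear the wavefront earlier (negative offset).
    return -(mic_xy @ toward_source) / SPEED_OF_SOUND


def delay_and_sum_power(signals, mic_xy, fs, angles_rad):
    """Steered output power for each candidate angle.

    signals: array of shape (n_mics, n_samples)
    mic_xy:  array of shape (n_mics, 2), mic positions in metres
    """
    n_mics, n_samples = signals.shape
    freqs = np.fft.rfftfreq(n_samples, d=1.0 / fs)
    spectra = np.fft.rfft(signals, axis=1)
    powers = np.empty(len(angles_rad))
    for i, angle in enumerate(angles_rad):
        delays = steering_delays(mic_xy, angle)
        # Undo each channel's arrival offset with a frequency-domain phase shift.
        align = np.exp(2j * np.pi * freqs[None, :] * delays[:, None])
        beam = (spectra * align).sum(axis=0) / n_mics
        powers[i] = np.sum(np.abs(beam) ** 2)
    return powers


def simulate_tone(mic_xy, fs, n_samples, tone_hz, source_angle_rad):
    """Synthetic test input: one far-field pure tone seen by every mic."""
    t = np.arange(n_samples) / fs
    delays = steering_delays(mic_xy, source_angle_rad)
    return np.sin(2 * np.pi * tone_hz * (t[None, :] - delays[:, None]))


# Hypothetical 10 cm square array and a 1 kHz tone arriving from 60 degrees.
mics = np.array([[0.05, 0.05], [-0.05, 0.05], [-0.05, -0.05], [0.05, -0.05]])
angles = np.deg2rad(np.arange(0, 360, 5))
recording = simulate_tone(mics, 16000, 1024, 1000.0, np.deg2rad(60))
power_by_angle = delay_and_sum_power(recording, mics, 16000, angles)
estimated_bearing_deg = np.rad2deg(angles[np.argmax(power_by_angle)])
```

In this synthetic setup the steered power should peak at or next to the true bearing. Scanning a grid of directions that matches the camera's field of view, instead of a single angle, would give an intensity image that could be overlaid on each video frame.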

SmartPoser: Arm Pose Estimation with a Smartphone and Smartwatch Using UWB and IMU Data

Sep 03, 2025

Abstract: The ability to track a user's arm pose could be valuable in a wide range of applications, including fitness, rehabilitation, augmented reality input, life logging, and context-aware assistants. Unfortunately, this capability is not readily available to consumers. Systems either require cameras, which carry privacy issues, or utilize multiple worn IMUs or markers. In this work, we describe how an off-the-shelf smartphone and smartwatch can work together to accurately estimate arm pose. Moving beyond prior work, we take advantage of more recent ultra-wideband (UWB) functionality on these devices to capture absolute distance between the two devices. This measurement is the perfect complement to inertial data, which is relative and suffers from drift. We quantify the performance of our software-only approach using off-the-shelf devices, showing it can estimate the wrist and elbow joints with a median positional error of 11.0 cm, without the user having to provide training data.
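
The complementarity between absolute UWB distance and drifting inertial estimates can be illustrated with a toy correction step. This is not the paper's method, which the abstract does not detail. It is a minimal sketch that assumes we already have a drifted phone-to-watch offset from inertial integration and simply rescales it to match the measured range. The function name and the numbers are hypothetical.

```python
import numpy as np


def apply_range_constraint(relative_pos, uwb_range_m):
    """Rescale a drifting phone-to-watch offset so its length matches the UWB range."""
    relative_pos = np.asarray(relative_pos, dtype=float)
    norm = np.linalg.norm(relative_pos)
    if norm == 0.0:
        # Direction is undefined; leave the estimate untouched.
        return relative_pos
    return relative_pos * (uwb_range_m / norm)


# Hypothetical numbers: inertial integration has drifted to a 0.75 m offset,
# while UWB reports that the devices are 0.60 m apart.
drifted = np.array([0.45, 0.60, 0.0])
corrected = apply_range_constraint(drifted, 0.60)
```

The correction keeps the direction suggested by the inertial data and replaces only its magnitude, which is the quantity UWB measures directly. Direction errors remain, so a real system would need additional constraints or a learned model.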

EclipseTouch: Touch Segmentation on Ad Hoc Surfaces using Worn Infrared Shadow Casting

Sep 03, 2025

Abstract: The ability to detect touch events on uninstrumented, everyday surfaces has been a long-standing goal for mixed reality systems. Prior work has shown that virtual interfaces bound to physical surfaces offer performance and ergonomic benefits over tapping at interfaces floating in the air. A wide variety of approaches have been previously developed, to which we contribute a new headset-integrated technique called EclipseTouch. We use a combination of a computer-triggered camera and one or more infrared emitters to create structured shadows, from which we can accurately estimate hover distance (mean error of 6.9 mm) and touch contact (98.0% accuracy). We discuss how our technique works across a range of conditions, including surface material, interaction orientation, and environmental lighting.

IMUPoser: Full-Body Pose Estimation using IMUs in Phones, Watches, and Earbuds

Apr 25, 2023

Abstract: Tracking body pose on-the-go could have powerful uses in fitness, mobile gaming, context-aware virtual assistants, and rehabilitation. However, users are unlikely to buy and wear special suits or sensor arrays to achieve this end. Instead, in this work, we explore the feasibility of estimating body pose using IMUs already in devices that many users own -- namely smartphones, smartwatches, and earbuds. This approach has several challenges, including noisy data from low-cost commodity IMUs, and the fact that the number of instrumentation points on a user's body is both sparse and in flux. Our pipeline receives whatever subset of IMU data is available, potentially from just a single device, and produces a best-guess pose. To evaluate our model, we created the IMUPoser Dataset, collected from 10 participants wearing or holding off-the-shelf consumer devices and across a variety of activity contexts. We provide a comprehensive evaluation of our system, benchmarking it on both our own and existing IMU datasets.

Gaze-based Autism Detection for Adolescents and Young Adults using Prosaic Videos

May 26, 2020

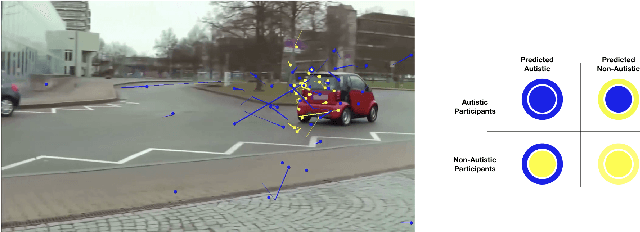

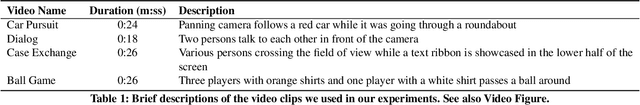

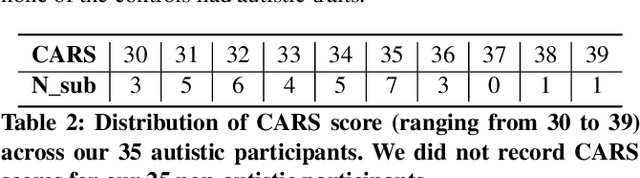

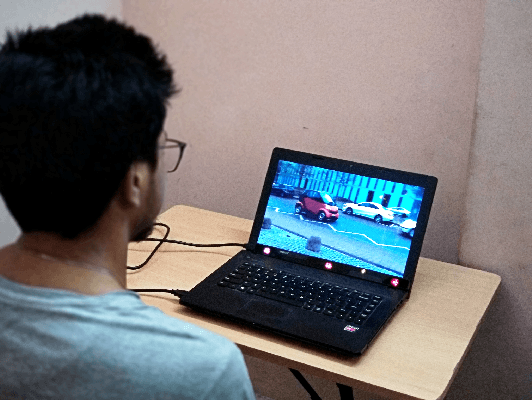

Abstract: Autism often remains undiagnosed in adolescents and adults. Prior research has indicated that an autistic individual often shows atypical fixation and gaze patterns. In this short paper, we demonstrate that by monitoring a user's gaze as they watch commonplace (i.e., not specialized, structured or coded) video, we can identify individuals with autism spectrum disorder. We recruited 35 autistic and 25 non-autistic individuals, and captured their gaze using an off-the-shelf eye tracker connected to a laptop. Within 15 seconds, our approach was 92.5% accurate at identifying individuals with an autism diagnosis. We envision such automatic detection being applied during, e.g., the consumption of web media, which could allow for passive screening and adaptation of user interfaces.
