Chiara De Luca

Training slow silicon neurons to control extremely fast robots with spiking reinforcement learning

Jan 29, 2026

Abstract: Air hockey demands split-second decisions at high puck velocities, a challenge we address with a compact network of spiking neurons running on a mixed-signal analog/digital neuromorphic processor. By co-designing hardware and learning algorithms, we train the system to achieve successful puck interactions through reinforcement learning in a remarkably small number of trials. The network leverages fixed random connectivity to capture the task's temporal structure and adopts a local e-prop learning rule in the readout layer to exploit event-driven activity for fast and efficient learning. The result is real-time learning with a setup comprising a computer and the neuromorphic chip in-the-loop, enabling practical training of spiking neural networks for robotic autonomous systems. This work bridges neuroscience-inspired hardware with real-world robotic control, showing that brain-inspired approaches can tackle fast-paced interaction tasks while supporting always-on learning in intelligent machines.
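The idea of training only a readout on top of fixed random activity can be illustrated with a minimal sketch. It is not the paper's setup: it assumes a supervised target instead of a reinforcement signal, uses random Bernoulli spikes as a stand-in for the on-chip network, and every name and parameter value (`run_trial`, `kappa`, `lr`, the network size) is hypothetical. Each readout weight is updated from locally available quantities only: a low-pass filtered trace of its presynaptic spikes and the output error.

```python
import random

def run_trial(w_out, spikes, target, kappa=0.9, lr=0.005):
    """Run one trial; return (updated weights, mean squared error before the update).

    spikes: list of time steps, each a list of 0/1 values (one per hidden neuron).
    target: list of target readout values, one per time step.
    """
    n = len(w_out)
    trace = [0.0] * n   # low-pass filtered presynaptic spikes
    grad = [0.0] * n    # accumulated error * trace per weight
    sq_err = 0.0
    for z_t, y_star in zip(spikes, target):
        trace = [kappa * tr + z for tr, z in zip(trace, z_t)]
        y = sum(w * tr for w, tr in zip(w_out, trace))  # leaky linear readout
        err = y - y_star
        for j in range(n):
            grad[j] += err * trace[j]
        sq_err += err * err
    steps = len(target)
    new_w = [w - lr * g / steps for w, g in zip(w_out, grad)]
    return new_w, sq_err / steps

def demo(n_hidden=20, n_steps=200, n_trials=50, seed=0):
    """Train a readout on a fixed random spike raster; return the error per trial."""
    rng = random.Random(seed)
    # Assumption: random spikes stand in for the fixed random network's activity.
    spikes = [[1 if rng.random() < 0.2 else 0 for _ in range(n_hidden)]
              for _ in range(n_steps)]
    # Assumption: the target is generated by hidden "teacher" weights so it is learnable.
    teacher = [rng.uniform(-1.0, 1.0) for _ in range(n_hidden)]
    zero_w = [0.0] * n_hidden
    target, trace = [], [0.0] * n_hidden
    for z_t in spikes:
        trace = [0.9 * tr + z for tr, z in zip(trace, z_t)]
        target.append(sum(w * tr for w, tr in zip(teacher, trace)))
    w_out, errors = zero_w, []
    for _ in range(n_trials):
        w_out, mse = run_trial(w_out, spikes, target)
        errors.append(mse)
    return errors
```

Calling `demo()` returns the mean squared error of each trial, which should shrink as the readout weights adapt while the spike raster itself never changes.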

Queen Detection in Beehives via Environmental Sensor Fusion for Low-Power Edge Computing

Sep 17, 2025

Abstract: Queen bee presence is essential for the health and stability of honeybee colonies, yet current monitoring methods rely on manual inspections that are labor-intensive, disruptive, and impractical for large-scale beekeeping. While recent audio-based approaches have shown promise, they often require high power consumption and complex preprocessing, and are susceptible to ambient noise. To overcome these limitations, we propose a lightweight, multimodal system for queen detection based on environmental sensor fusion: specifically, temperature, humidity, and pressure differentials between the inside and outside of the hive. Our approach employs quantized decision tree inference on a commercial STM32 microcontroller, enabling real-time, low-power edge computing without compromising accuracy. We show that our system achieves over 99% queen detection accuracy using only environmental inputs, with audio features offering no significant performance gain. This work presents a scalable and sustainable solution for non-invasive hive monitoring, paving the way for autonomous, precision beekeeping using off-the-shelf, energy-efficient hardware.
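As a rough illustration of what quantized decision tree inference on inside-minus-outside differentials could look like, here is a minimal sketch. The tree structure, thresholds, fixed-point scale, and labels are arbitrary placeholders chosen for the example, not values from the paper, and the code is written in Python for readability instead of microcontroller C.

```python
# Feature indices for the three inside-minus-outside differentials.
TEMP, HUM, PRES = 0, 1, 2

# Hypothetical tree. Internal nodes are (feature, threshold, left, right);
# leaves are string labels. Thresholds are integers in fixed-point units
# (value * 10), so inference needs only integer comparisons.
TREE = (
    TEMP, 80,
    (HUM, -50, "queen_absent", "queen_present"),
    "queen_present",
)

def quantize(value, scale=10):
    """Convert a floating-point reading to a fixed-point integer."""
    return int(round(value * scale))

def predict(tree, features):
    """Walk the tree with integer comparisons until a leaf label is reached."""
    node = tree
    while not isinstance(node, str):
        feature, threshold, left, right = node
        node = left if features[feature] <= threshold else right
    return node

# Example reading: differentials in degrees C, percent relative humidity, hPa.
reading = [quantize(5.0), quantize(-8.0), quantize(0.3)]
label = predict(TREE, reading)
```

Storing thresholds as integers is one common way to avoid floating-point arithmetic on small microcontrollers; whether the paper's implementation does exactly this is an assumption.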

A neuromorphic continuous soil monitoring system for precision irrigation

Sep 17, 2025

Abstract: Sensory processing at the edge requires ultra-low power stand-alone computing technologies. This is particularly true for modern agriculture and precision irrigation systems, which aim to optimize water usage by continuously monitoring key environmental observables using distributed, efficient embedded processing elements. Neuromorphic processing systems are emerging as a promising technology for extreme edge-computing applications that need to run on resource-constrained hardware. As such, they are a very good candidate for implementing efficient water management systems based on data measured from soil and plants across large fields. In this work, we present a fully energy-efficient neuromorphic irrigation control system that operates autonomously without any need for data transmission or remote processing. Leveraging the properties of a biologically realistic spiking neural network, our system performs computation and decision-making locally. We validate this approach using real-world soil moisture data from apple and kiwi orchards applied to a mixed-signal neuromorphic processor, and show that the generated irrigation commands closely match those derived from conventional methods across different soil depths. Our results show that local neuromorphic inference can maintain decision accuracy, paving the way for autonomous, sustainable irrigation solutions at scale.

A new GPU library for fast simulation of large-scale networks of spiking neurons

Jul 29, 2020

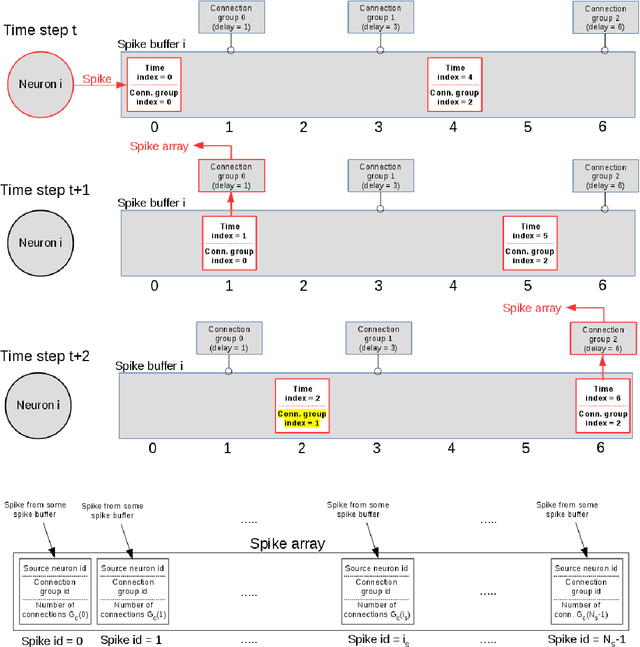

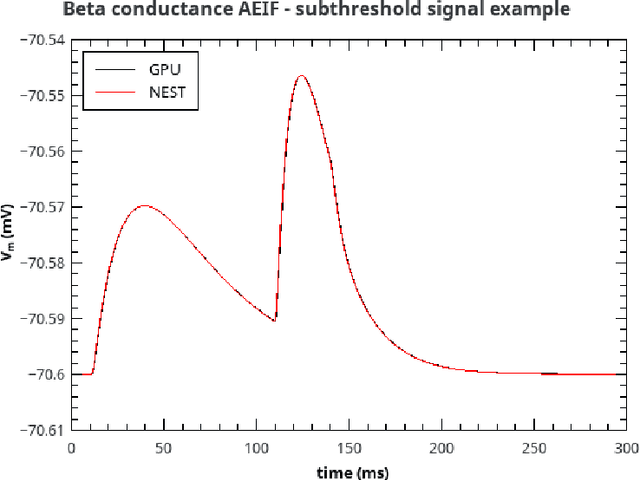

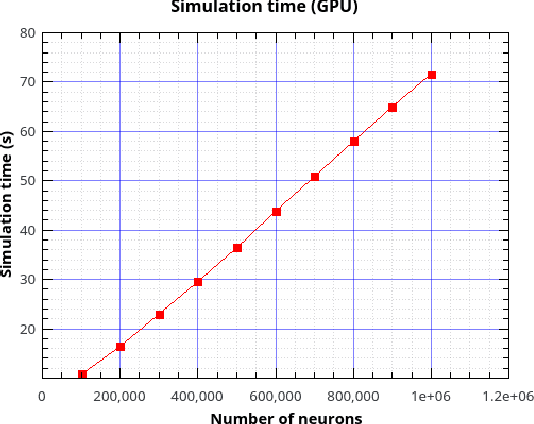

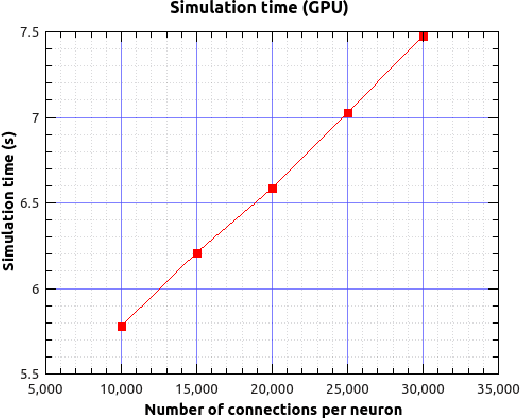

Abstract: Over the past decade there has been a growing interest in the development of parallel hardware systems for simulating large-scale networks of spiking neurons. Compared to other highly-parallel systems, GPU-accelerated solutions have the advantage of a relatively low cost and a great versatility, thanks also to the possibility of using the CUDA-C/C++ programming languages. NeuronGPU is a GPU library for large-scale simulations of spiking neural network models, written in the C++ and CUDA-C++ programming languages, based on a novel spike-delivery algorithm. This library includes simple LIF (leaky-integrate-and-fire) neuron models as well as several multisynapse AdEx (adaptive-exponential-integrate-and-fire) neuron models with current- or conductance-based synapses, user-definable models, and different devices. The numerical solution of the differential equations of the dynamics of the AdEx models is performed through a parallel implementation, written in CUDA-C++, of the fifth-order Runge-Kutta method with adaptive step-size control. In this work we evaluate the performance of this library on the simulation of a well-known cortical microcircuit model, based on LIF neurons and current-based synapses, and on a balanced network of excitatory and inhibitory neurons, using AdEx neurons and conductance-based synapses. On these models, we show that the proposed library achieves state-of-the-art performance in terms of simulation time per second of biological activity. In particular, using a single NVIDIA GeForce RTX 2080 Ti GPU board, the full-scale cortical-microcircuit model, which includes about 77,000 neurons and $3 \cdot 10^8$ connections, can be simulated at a speed very close to real time, while the simulation time of a balanced network of 1,000,000 AdEx neurons with 1,000 connections per neuron was about 70 s per second of biological activity.
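For readers unfamiliar with the LIF model mentioned in the abstract, the following single-neuron sketch shows the basic dynamics: the membrane potential relaxes toward a level set by the input current and is reset when it crosses a threshold. This is plain CPU Python, not NeuronGPU code or its API, and all parameter values and the function name `simulate_lif` are illustrative assumptions.

```python
import math

def simulate_lif(input_current, dt=0.1, tau_m=10.0, v_rest=-65.0,
                 v_thresh=-50.0, v_reset=-65.0, r_m=10.0, t_ref=2.0):
    """Simulate one leaky integrate-and-fire neuron; return spike times in ms.

    input_current: one input value per time step (nA, assumed constant within a step).
    Units are assumed to be ms, mV, MOhm, and nA; values are illustrative.
    """
    v = v_rest
    ref_steps = int(round(t_ref / dt))  # refractory period in whole steps
    ref_left = 0
    decay = math.exp(-dt / tau_m)       # exact decay over one step
    spike_times = []
    for step, i_in in enumerate(input_current):
        if ref_left > 0:
            # Hold the neuron at reset while it is refractory.
            ref_left -= 1
            v = v_reset
            continue
        v_inf = v_rest + r_m * i_in      # steady-state potential for this input
        v = v_inf + (v - v_inf) * decay  # exponential relaxation toward v_inf
        if v >= v_thresh:
            spike_times.append(step * dt)
            v = v_reset
            ref_left = ref_steps
    return spike_times

# 100 ms of constant 2 nA drive, which is above threshold for these parameters.
spikes = simulate_lif([2.0] * 1000)
```

A GPU simulator applies an update of this kind to every neuron in parallel and additionally has to deliver spikes across connections, which is the part the abstract's spike-delivery algorithm addresses.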
