Chaopeng Shen

Continental-scale streamflow modeling of basins with reservoirs: a demonstration of effectiveness and a delineation of challenges

Jan 12, 2021

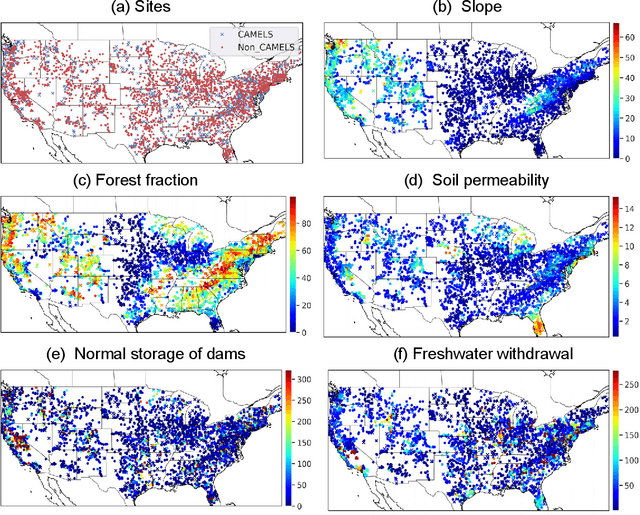

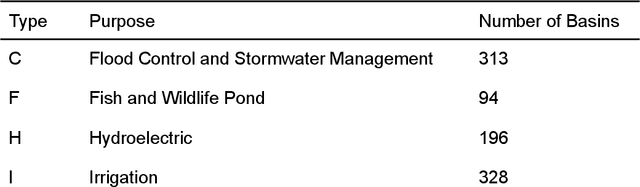

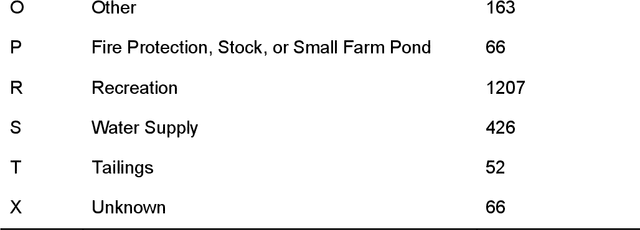

Abstract: A large fraction of major waterways have dams influencing streamflow, which must be accounted for in large-scale hydrologic modeling. However, daily streamflow prediction for basins with dams is challenging for various modeling approaches, especially at large scales. Here we took a divide-and-conquer approach to examine which types of basins could be well represented by a long short-term memory (LSTM) deep learning model using only readily-available information. We analyzed data from 3557 basins (83% dammed) over the contiguous United States and noted strong impacts of reservoir purposes, capacity-to-runoff ratio (dor), and diversion on streamflow modeling. Surprisingly, while the LSTM model trained on a widely-used reference-basin dataset performed poorly for more non-reference basins, the model trained on the whole dataset presented a median test Nash-Sutcliffe efficiency coefficient (NSE) of 0.74, reaching benchmark-level performance. The zero-dor, small-dor, and large-dor basins were found to have distinct behaviors, so migrating models between categories yielded catastrophic results. However, training with pooled data from different sets yielded optimal median NSEs of 0.73, 0.78, and 0.71 for these groups, respectively, showing noticeable advantages over existing models. These results support a coherent, mixed modeling strategy where smaller dams are modeled as part of rainfall-runoff processes, but dammed basins must not be treated as reference ones and must be included in the training set; then, large-dor reservoirs can be represented explicitly and future work should examine modeling reservoirs for fire protection and irrigation, followed by those for hydroelectric power generation, and flood control, etc.
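
For readers unfamiliar with the metric quoted above, the following is a minimal sketch of the standard Nash-Sutcliffe efficiency and of taking its median over basins. The function name `nse` and the toy numbers are illustrative assumptions, not taken from the paper.

```python
import numpy as np

def nse(obs, sim):
    """Nash-Sutcliffe efficiency: 1 is a perfect fit, 0 matches the
    skill of always predicting the observed mean."""
    obs = np.asarray(obs, dtype=float)
    sim = np.asarray(sim, dtype=float)
    residual = np.sum((obs - sim) ** 2)         # squared model error
    variance = np.sum((obs - obs.mean()) ** 2)  # spread of the observations
    return 1.0 - residual / variance

# Hypothetical per-basin series; a reported "median NSE" is the median
# of the per-basin scores, not the NSE of the pooled data.
basins = {
    "basin_a": ([1.0, 2.0, 3.0, 4.0], [1.1, 1.9, 3.2, 3.8]),
    "basin_b": ([5.0, 3.0, 4.0, 6.0], [4.0, 3.5, 4.5, 5.0]),
}
median_nse = np.median([nse(o, s) for o, s in basins.values()])
```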

The data synergy effects of time-series deep learning models in hydrology

Jan 06, 2021

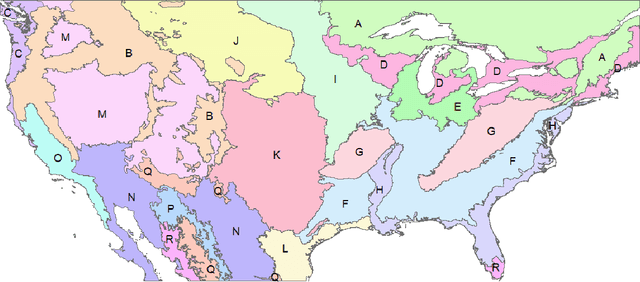

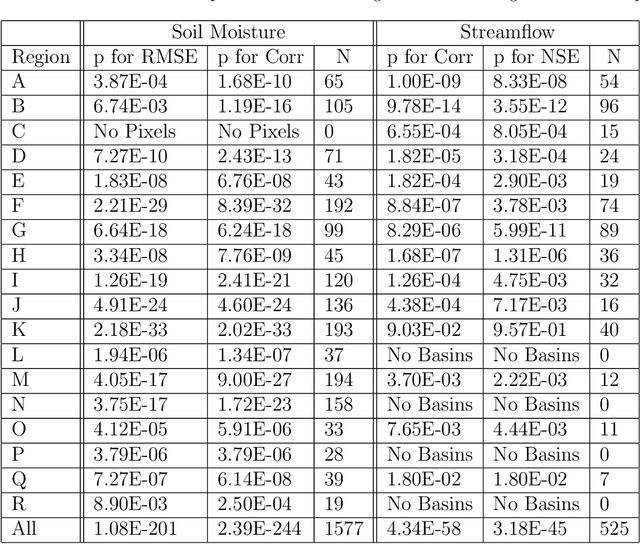

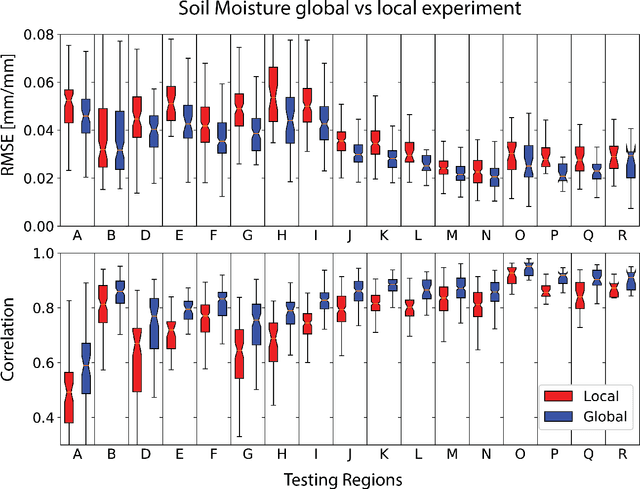

Abstract: When fitting statistical models to variables in geoscientific disciplines such as hydrology, it is a customary practice to regionalize - to divide a large spatial domain into multiple regions and study each region separately - instead of fitting a single model on the entire data (also known as unification). Traditional wisdom in these fields suggests that models built for each region separately will have higher performance because of homogeneity within each region. However, by partitioning the training data, each model has access to fewer data points and cannot learn from commonalities between regions. Here, through two hydrologic examples (soil moisture and streamflow), we argue that unification can often significantly outperform regionalization in the era of big data and deep learning (DL). Common DL architectures, even without bespoke customization, can automatically build models that benefit from regional commonality while accurately learning region-specific differences. We highlight an effect we call data synergy, where the results of the DL models improved when data were pooled together from characteristically different regions. In fact, the performance of the DL models benefited from more diverse rather than more homogeneous training data. We hypothesize that DL models automatically adjust their internal representations to identify commonalities while also providing sufficient discriminatory information to the model. The results here advocate for pooling together larger datasets, and suggest the academic community should place greater emphasis on data sharing and compilation.

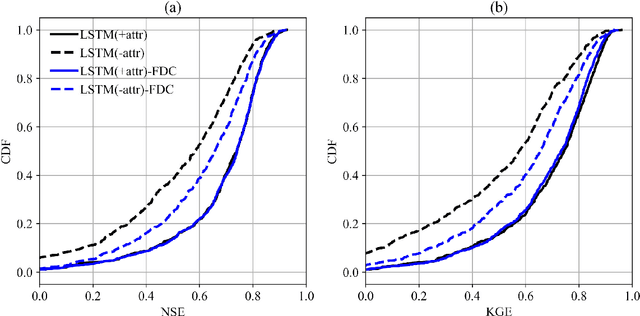

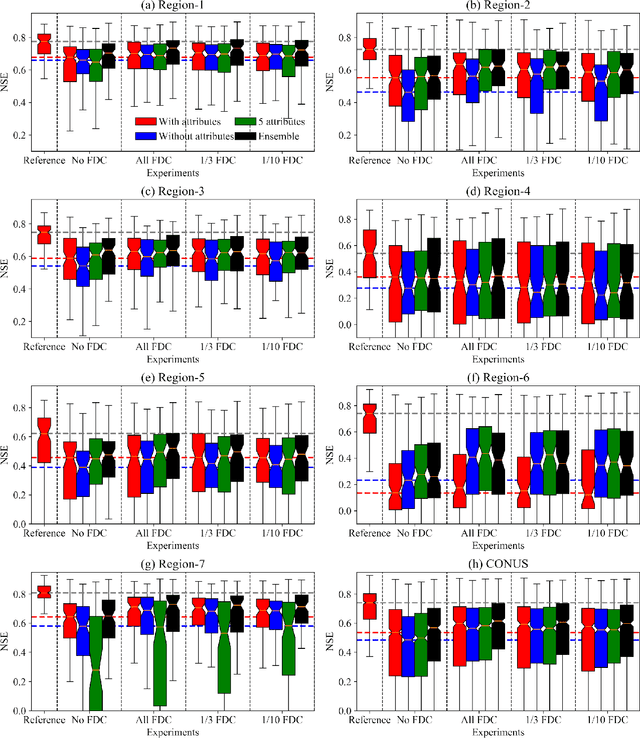

Prediction in ungauged regions with sparse flow duration curves and input-selection ensemble modeling

Nov 26, 2020

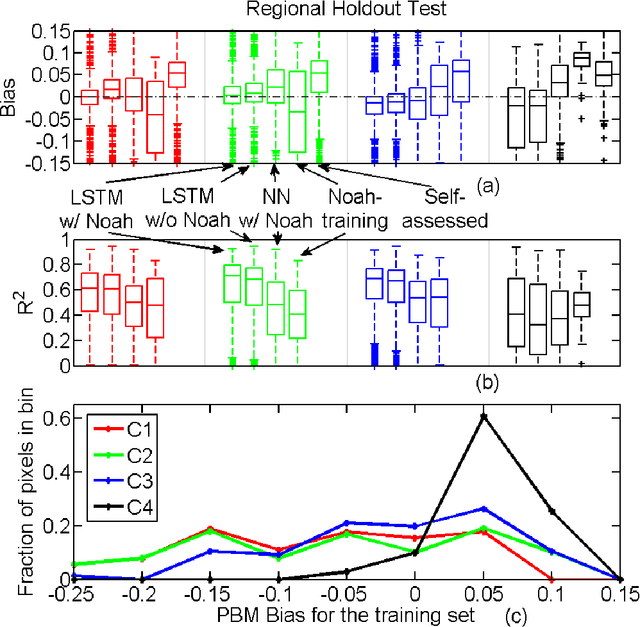

Abstract: While long short-term memory (LSTM) models have demonstrated stellar performance with streamflow predictions, there are major risks in applying these models in contiguous regions with no gauges, or predictions in ungauged regions (PUR) problems. However, softer data such as the flow duration curve (FDC) may be already available from nearby stations, or may become available. Here we demonstrate that sparse FDC data can be migrated and assimilated by an LSTM-based network, via an encoder. A stringent region-based holdout test showed a median Kling-Gupta efficiency (KGE) of 0.62 for a US dataset, substantially higher than previous state-of-the-art global-scale ungauged basin tests. The baseline model without FDC was already competitive (median KGE 0.56), but integrating FDCs had substantial value. Because of the inaccurate representation of inputs, the baseline models might sometimes produce catastrophic results. However, model generalizability was further meaningfully improved by compiling an ensemble based on models with different input selections.
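
As a reading aid for the KGE values above, here is a minimal sketch of the Kling-Gupta efficiency in its commonly used original form (correlation, variability ratio, and bias ratio). The abstract does not say which KGE variant was used, so this formulation and the name `kge` are assumptions.

```python
import numpy as np

def kge(obs, sim):
    """Kling-Gupta efficiency (original form, assumed here):
    1 - sqrt((r - 1)^2 + (alpha - 1)^2 + (beta - 1)^2)."""
    obs = np.asarray(obs, dtype=float)
    sim = np.asarray(sim, dtype=float)
    r = np.corrcoef(obs, sim)[0, 1]   # linear correlation
    alpha = sim.std() / obs.std()     # variability ratio
    beta = sim.mean() / obs.mean()    # bias ratio
    return 1.0 - np.sqrt((r - 1) ** 2 + (alpha - 1) ** 2 + (beta - 1) ** 2)
```

A perfect simulation scores 1. A simulation that doubles every observed value keeps perfect correlation but is penalized on both the variability and bias terms.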

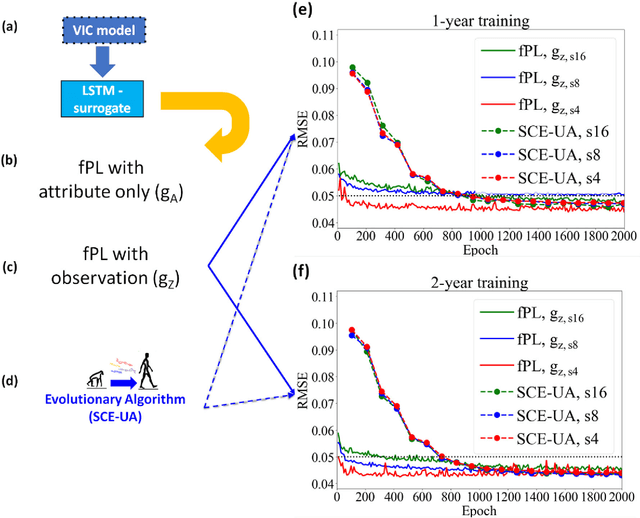

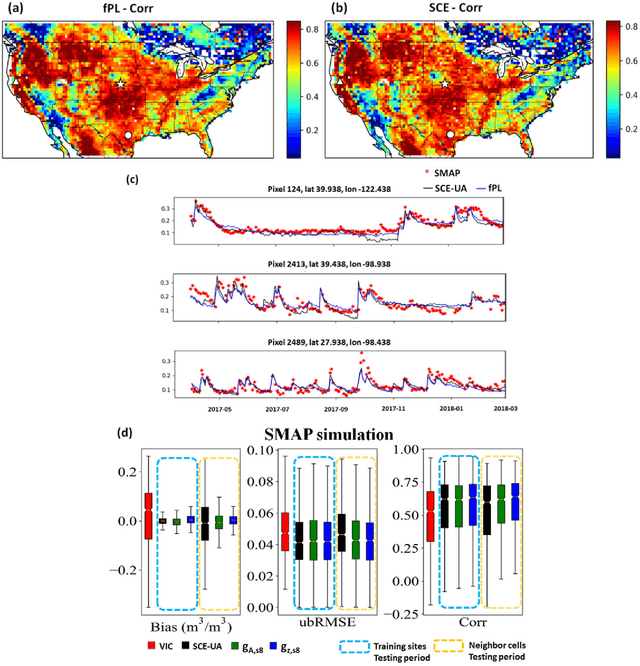

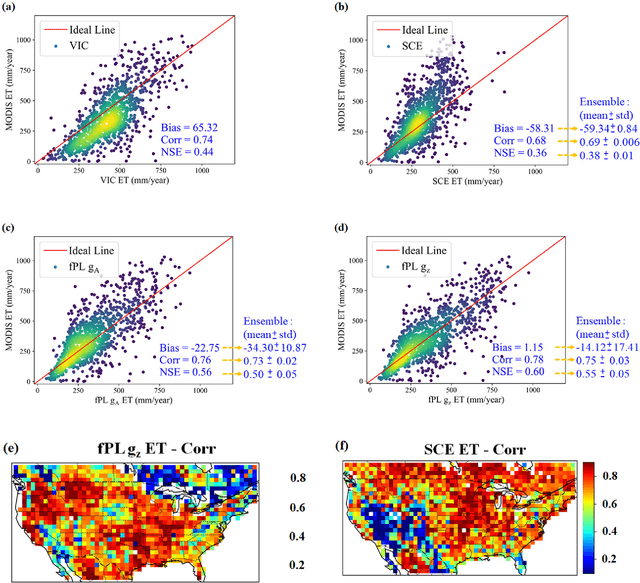

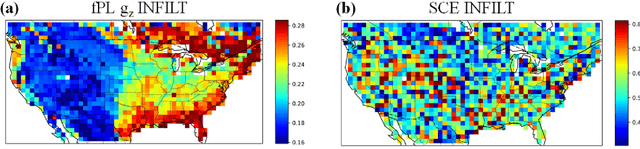

From parameter calibration to parameter learning: Revolutionizing large-scale geoscientific modeling with big data

Sep 12, 2020

Abstract: The behaviors and skills of models in many geoscientific domains strongly depend on spatially varying parameters that lack direct observations and must be determined by calibration. Calibration, which solves inverse problems, is a classical but inefficient and stochasticity-ridden approach to reconcile models and observations. Using a widely applied hydrologic model and soil moisture observations as a case study, here we propose a novel, forward-mapping parameter learning (fPL) framework. Whereas evolutionary algorithm (EA)-based calibration solves inversion problems one by one, fPL solves a pattern recognition problem and learns a more robust, universal mapping. fPL can save orders-of-magnitude computational time compared to EA-based calibration, while, surprisingly, producing equivalent ending skill metrics. With more training data, fPL learned across sites and showed super-convergence, scaling much more favorably. Moreover, a more important benefit emerged: fPL produced spatially-coherent parameters in better agreement with physical processes. As a result, it demonstrated better results for out-of-training-set locations and uncalibrated variables. Compared to purely data-driven models, fPL can output unobserved variables, in this case simulated evapotranspiration, which agrees better with satellite-based estimates than the comparison EA. The deep-learning-powered fPL frameworks can be uniformly applied to myriad other geoscientific models. We contend that a paradigm shift from inverse parameter calibration to parameter learning will greatly propel various geoscientific domains.

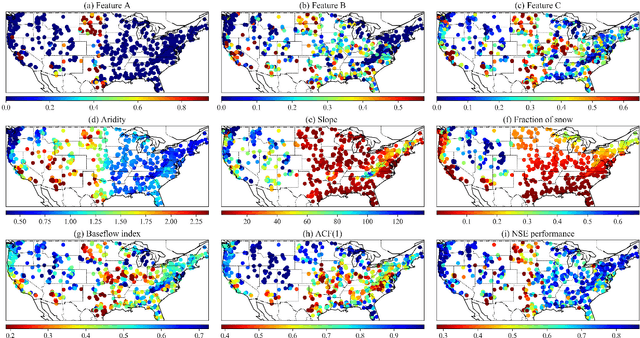

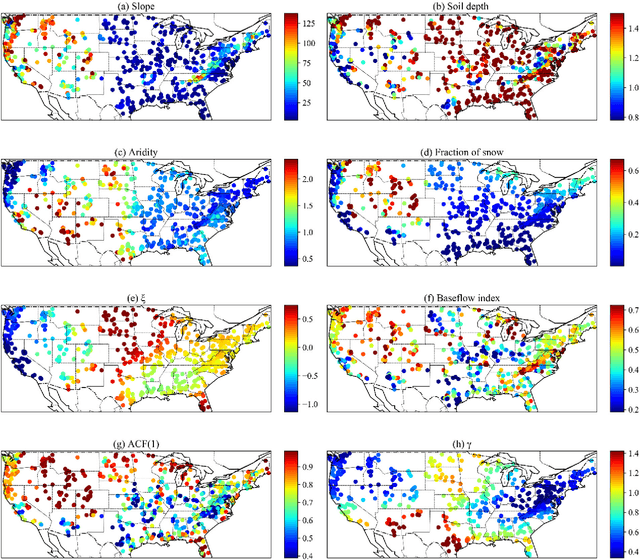

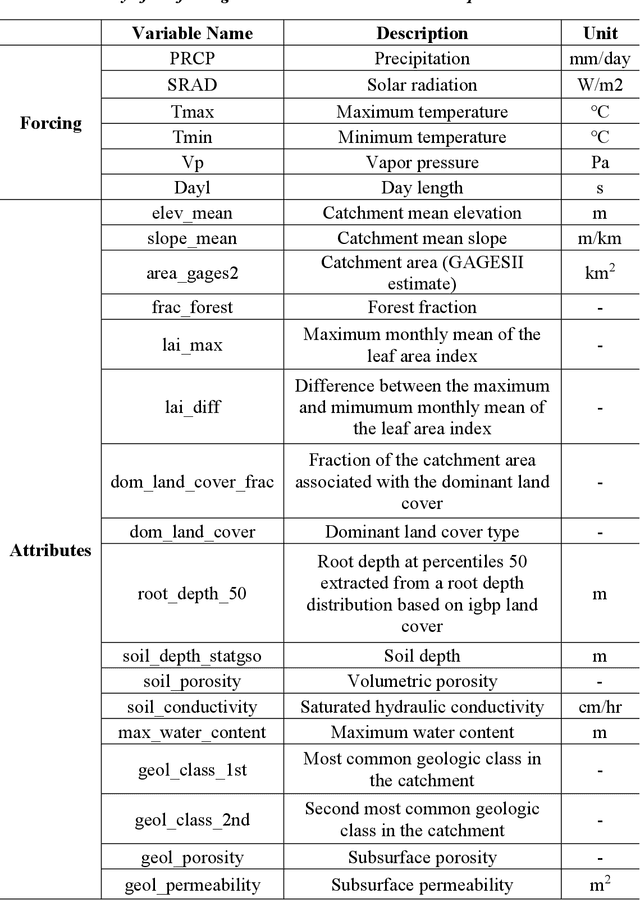

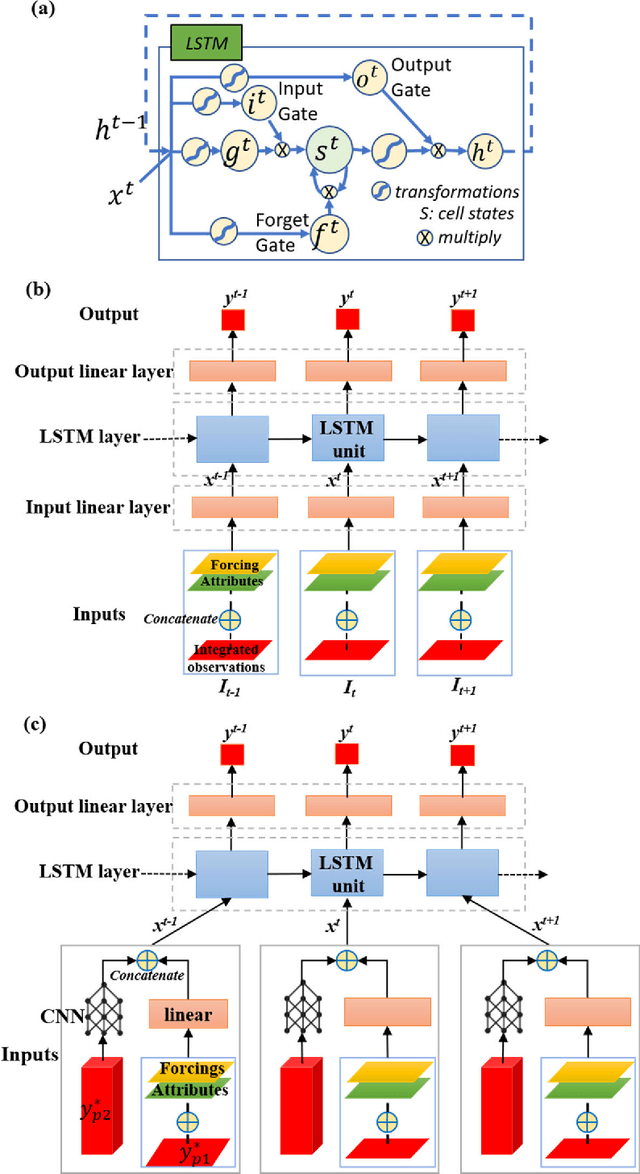

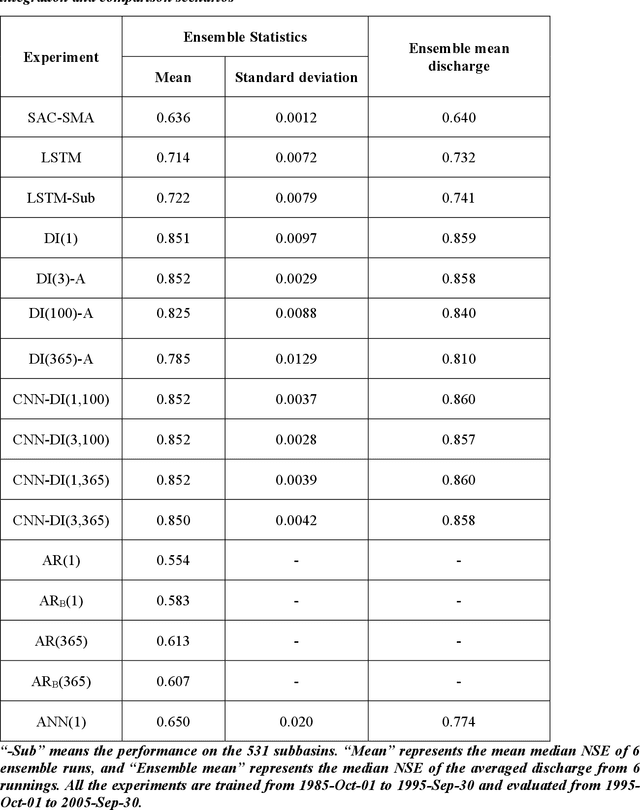

Enhancing streamflow forecast and extracting insights using long-short term memory networks with data integration at continental scales

Jan 02, 2020

Abstract: Recent observations with varied schedules and types (moving average, snapshot, or regularly spaced) can help to improve streamflow forecasts, but it is difficult to effectively integrate them. Based on a long short-term memory (LSTM) streamflow model, we tested different formulations in a flexible method we call data integration (DI) to integrate recent discharge measurements to improve forecasts. DI accepts lagged inputs either directly or through a convolutional neural network (CNN) unit. DI can ubiquitously elevate streamflow forecast performance to unseen levels, reaching a continental-scale median Nash-Sutcliffe coefficient of 0.86. Integrating moving-average discharge, discharge from a few days ago, or even average discharge of the last calendar month could all improve daily forecasts. It turned out that directly using lagged observations as inputs was comparable in performance to using the CNN unit. Importantly, we obtained valuable insights regarding hydrologic processes impacting LSTM and DI performance. Before applying DI, the original LSTM worked well in mountainous regions and snow-dominated regions, but less so in regions with low discharge volumes (due to either low precipitation or high precipitation-energy synchronicity) and large inter-annual storage variability. DI was most beneficial in regions with high flow autocorrelation: it greatly reduced baseflow bias in groundwater-dominated western basins; it also improved the peaks for basins with dynamical surface water storage, e.g., the Prairie Potholes or Great Lakes regions. However, even DI cannot help high-aridity basins with one-day flash peaks. There is much promise with a deep-learning-based forecast paradigm due to its performance, automation, efficiency, and flexibility.
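
To make the idea of feeding lagged observations "directly" as inputs more concrete, the sketch below builds two extra input columns from a daily discharge series: the value from `lag` days ago and a moving average ending `lag` days ago. This only illustrates the general idea; the function name `lagged_features`, its parameters, and the windowing convention are assumptions and not the paper's implementation.

```python
import numpy as np

def lagged_features(q, lag=1, window=7):
    """Return an (n, 2) array: [discharge `lag` days ago,
    mean discharge over the `window` days ending `lag` days ago].
    Rows without enough history are filled with NaN."""
    if lag < 1 or window < 1:
        raise ValueError("lag and window must be at least 1")
    q = np.asarray(q, dtype=float)
    n = len(q)
    lagged = np.full(n, np.nan)
    lagged[lag:] = q[:-lag]            # shift the series by `lag` days
    moving = np.full(n, np.nan)
    for t in range(lag + window - 1, n):
        # window covers days t-lag-window+1 ... t-lag (inclusive)
        moving[t] = q[t - lag - window + 1 : t - lag + 1].mean()
    return np.column_stack([lagged, moving])

features = lagged_features([1.0, 2.0, 3.0, 4.0, 5.0], lag=1, window=2)
```

In such a setup the resulting columns would be concatenated with the meteorological forcings at each time step, so the model never sees discharge from the day it is asked to predict.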

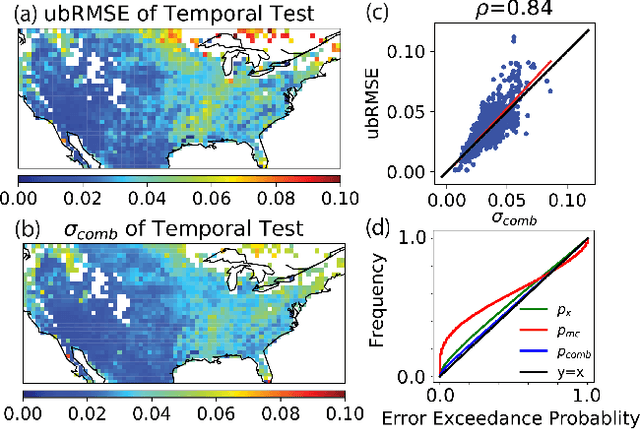

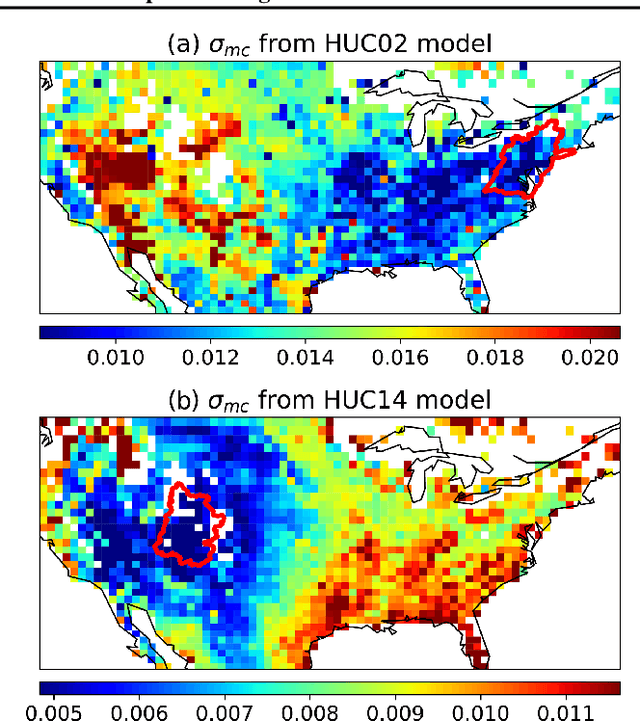

Evaluating aleatoric and epistemic uncertainties of time series deep learning models for soil moisture predictions

Jun 10, 2019

Abstract: Soil moisture is an important variable that determines floods, vegetation health, agriculture productivity, and land surface feedbacks to the atmosphere, etc. Accurately modeling soil moisture has important implications in both weather and climate models. The recently available satellite-based observations give us a unique opportunity to build data-driven models to predict soil moisture instead of using land surface models, but previously there was no uncertainty estimate. We tested Monte Carlo dropout (MCD) with an aleatoric term for our long short-term memory models for this problem, and asked if the uncertainty terms behave as they were argued to. We show that the method successfully captures the predictive error after tuning a hyperparameter on a representative training dataset. We show the MCD uncertainty estimate, as previously argued, does detect dissimilarity.
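
One common way to combine Monte Carlo dropout with an aleatoric term is sketched below: the network is run several times with dropout active, each pass returns a predicted mean and a predicted noise variance, and the two uncertainty components are separated afterwards. Whether the paper uses exactly this decomposition is an assumption; the name `combine_mcd` and the array shapes are hypothetical.

```python
import numpy as np

def combine_mcd(mu_samples, sigma2_samples):
    """Combine T stochastic forward passes over N predictions.

    mu_samples, sigma2_samples: arrays of shape (T, N) holding the
    predicted mean and predicted noise variance from each pass.
    Returns (mean, epistemic variance, aleatoric variance, total variance).
    """
    mu = np.asarray(mu_samples, dtype=float)
    sigma2 = np.asarray(sigma2_samples, dtype=float)
    pred_mean = mu.mean(axis=0)
    epistemic = mu.var(axis=0)        # spread across dropout passes
    aleatoric = sigma2.mean(axis=0)   # average predicted data noise
    return pred_mean, epistemic, aleatoric, epistemic + aleatoric

# Two passes, one prediction point (toy numbers).
mean, epi, ale, total = combine_mcd([[1.0], [3.0]], [[0.5], [1.5]])
```

Under this decomposition, a large epistemic term flags inputs unlike the training data, while the aleatoric term reflects noise the model expects even for familiar inputs.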

A trans-disciplinary review of deep learning research for water resources scientists

Aug 24, 2018

Abstract: Deep learning (DL), a new generation of artificial neural network research, has transformed industries, daily lives and various scientific disciplines in recent years. DL represents significant progress in the ability of neural networks to automatically engineer problem-relevant features and capture highly complex data distributions. I argue that DL can help address several major new and old challenges facing research in water sciences such as inter-disciplinarity, data discoverability, hydrologic scaling, equifinality, and needs for parameter regionalization. This review paper is intended to provide water resources scientists and hydrologists in particular with a simple technical overview, trans-disciplinary progress update, and a source of inspiration about the relevance of DL to water. The review reveals that various physical and geoscientific disciplines have utilized DL to address data challenges, improve efficiency, and gain scientific insights. DL is especially suited for information extraction from image-like data and sequential data. Techniques and experiences presented in other disciplines are of high relevance to water research. Meanwhile, less noticed is that DL may also serve as a scientific exploratory tool. A new area termed 'AI neuroscience,' where scientists interpret the decision process of deep networks and derive insights, has been born. This budding sub-discipline has demonstrated methods including correlation-based analysis, inversion of network-extracted features, reduced-order approximations by interpretable models, and attribution of network decisions to inputs. Moreover, DL can also use data to condition neurons that mimic problem-specific fundamental organizing units, thus revealing emergent behaviors of these units. Vast opportunities exist for DL to propel advances in water sciences.

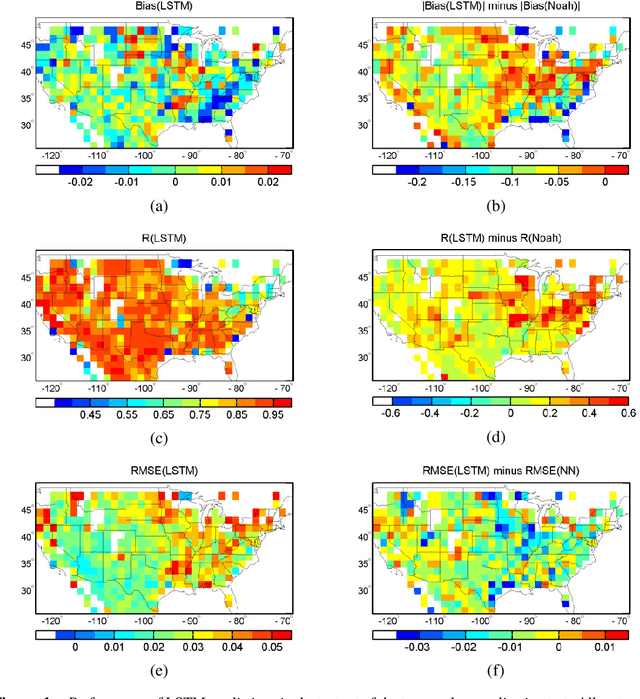

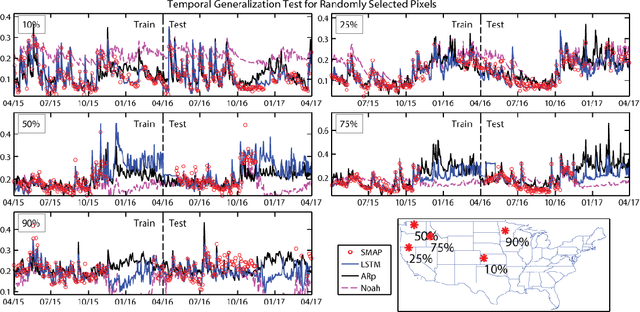

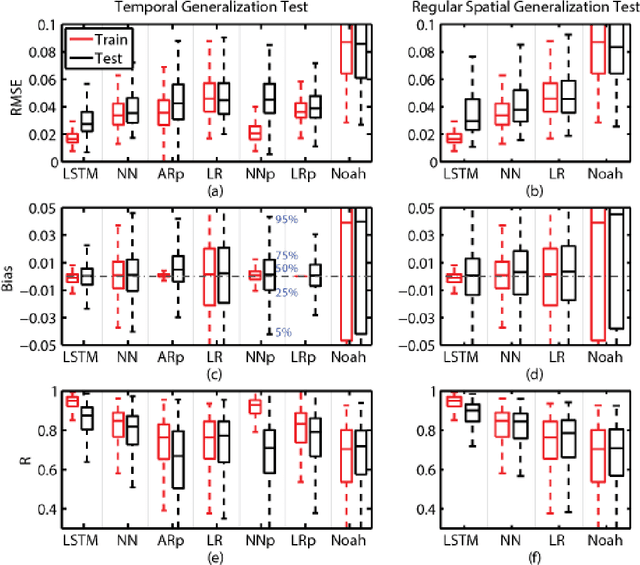

Prolongation of SMAP to Spatio-temporally Seamless Coverage of Continental US Using a Deep Learning Neural Network

Sep 11, 2017

Abstract: The Soil Moisture Active Passive (SMAP) mission has delivered valuable sensing of surface soil moisture since 2015. However, it has a short time span and irregular revisit schedule. Utilizing a state-of-the-art time-series deep learning neural network, Long Short-Term Memory (LSTM), we created a system that predicts SMAP level-3 soil moisture data with atmospheric forcing, model-simulated moisture, and static physiographic attributes as inputs. The system removes most of the bias with model simulations and improves predicted moisture climatology, achieving small test root-mean-squared error (<0.035) and high correlation coefficient (>0.87) for over 75% of Continental United States, including the forested Southeast. As the first application of LSTM in hydrology, we show the proposed network avoids overfitting and is robust for both temporal and spatial extrapolation tests. LSTM generalizes well across regions with distinct climates and physiography. With high fidelity to SMAP, LSTM shows great potential for hindcasting, data assimilation, and weather forecasting.
