Caio Waisman

Policy-Aligned Estimation of Conditional Average Treatment Effects

Dec 15, 2025

Abstract: Firms often develop targeting policies to personalize marketing actions and improve incremental profits. Effective targeting depends on accurately separating customers with positive versus negative treatment effects. We propose an approach to estimate the conditional average treatment effects (CATEs) of marketing actions that aligns their estimation with the firm's profit objective. The method recognizes that, for many customers, treatment effects are so extreme that additional accuracy is unlikely to change the recommended actions. However, accuracy matters near the decision boundary, as small errors can alter targeting decisions. By modifying the firm's objective function in the standard profit maximization problem, our method yields a near-optimal targeting policy while simultaneously estimating CATEs. This introduces a new perspective on CATE estimation, reframing it as a problem of profit optimization rather than prediction accuracy. We establish the theoretical properties of the proposed method and demonstrate its performance and trade-offs using synthetic data.
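The point about the decision boundary can be illustrated with a small sketch. This is not the paper's estimator: it assumes a simple plug-in rule that treats a customer whenever the estimated CATE exceeds a threshold, and the CATE values, the error sizes, and the function names `targeting_policy` and `policy_value` are hypothetical choices made only for illustration.

```python
import numpy as np

def targeting_policy(cate_estimates, threshold=0.0):
    """Treat a customer when the estimated CATE exceeds the threshold."""
    return np.asarray(cate_estimates) > threshold

def policy_value(true_cate, treat):
    """Total incremental profit from treating the selected customers."""
    return float(np.sum(np.asarray(true_cate)[treat]))

# Hypothetical true CATEs: two far from the boundary, two close to it.
true_cate = np.array([-5.0, -0.1, 0.1, 5.0])

# Hypothetical estimates with the same absolute error (0.3) for every customer.
noisy_cate = true_cate + np.array([0.3, 0.3, -0.3, -0.3])

oracle = targeting_policy(true_cate)      # decisions under the true CATEs
estimated = targeting_policy(noisy_cate)  # decisions under the noisy estimates

# Only the customers near the boundary have their decision flipped.
flipped = oracle != estimated

# Profit lost by acting on the noisy estimates instead of the truth.
regret = policy_value(true_cate, oracle) - policy_value(true_cate, estimated)

print("flipped decisions:", flipped)
print("profit regret:", round(regret, 3))
```

In this toy setup the same estimation error leaves the decisions for the customers with extreme effects unchanged and flips only the two customers near zero, which is the intuition the abstract describes.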

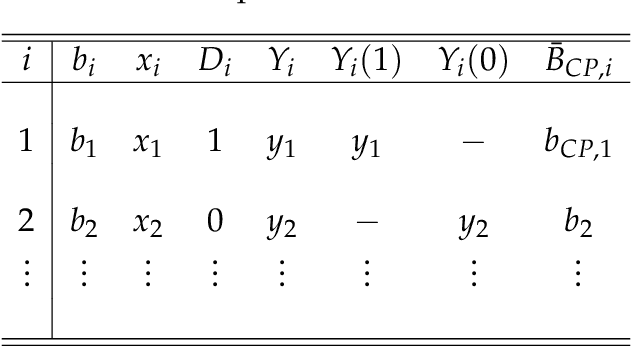

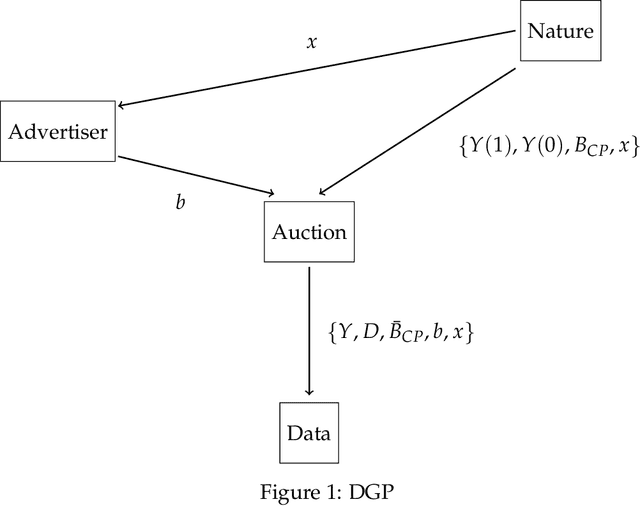

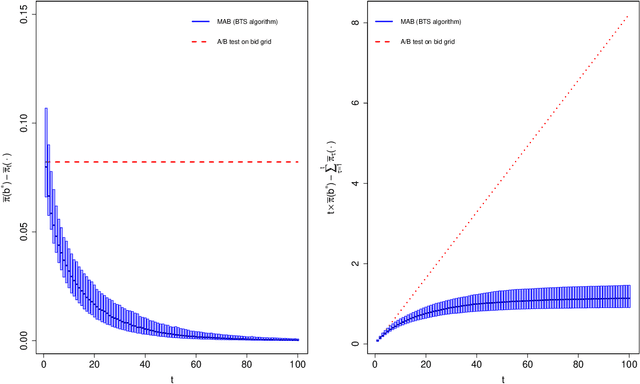

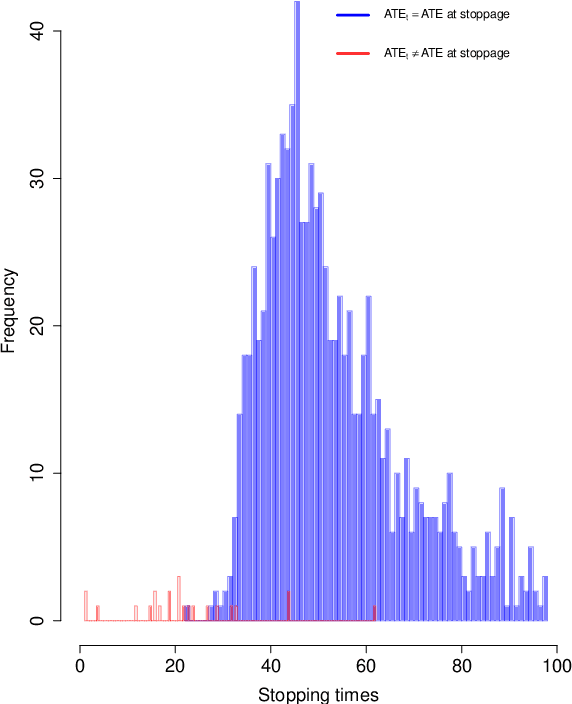

Online Inference for Advertising Auctions

Aug 22, 2019

Abstract: Advertisers that engage in real-time bidding (RTB) to display their ads commonly have two goals: learning their optimal bidding policy and estimating the expected effect of exposing users to their ads. Typical strategies to accomplish one of these goals tend to ignore the other, creating an apparent tension between the two. This paper exploits the economic structure of the bid optimization problem faced by advertisers to show that these two objectives can actually be perfectly aligned. By framing the advertiser's problem as a multi-armed bandit (MAB) problem, we propose a modified Thompson Sampling (TS) algorithm that concurrently learns the optimal bidding policy and estimates the expected effect of displaying the ad while minimizing economic losses from potential sub-optimal bidding. Simulations show that the proposed method not only accomplishes the advertiser's goals but also does so at a much lower cost than more conventional experimentation policies aimed at performing causal inference.
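For readers unfamiliar with Thompson Sampling, a minimal sketch of the standard Beta-Bernoulli version is given here. It is the textbook algorithm, not the modified variant proposed in the paper, and it does not model auctions: the arms stand in for hypothetical candidate bid levels, rewards are assumed to be binary, and the function name `thompson_sampling` and the success probabilities are illustrative assumptions.

```python
import numpy as np

def thompson_sampling(true_probs, n_rounds=2000, seed=0):
    """Standard Beta-Bernoulli Thompson Sampling over a fixed set of arms."""
    rng = np.random.default_rng(seed)
    k = len(true_probs)
    successes = np.ones(k)  # Beta(1, 1) prior: alpha parameters
    failures = np.ones(k)   # Beta(1, 1) prior: beta parameters
    pulls = np.zeros(k, dtype=int)

    for _ in range(n_rounds):
        # Draw one sample per arm from its posterior and play the best draw.
        samples = rng.beta(successes, failures)
        arm = int(np.argmax(samples))

        # Observe a binary reward from the (hypothetical) environment.
        reward = float(rng.random() < true_probs[arm])

        # Conjugate posterior update for the arm that was played.
        successes[arm] += reward
        failures[arm] += 1.0 - reward
        pulls[arm] += 1

    posterior_mean = successes / (successes + failures)
    return pulls, posterior_mean

# Hypothetical success probabilities for three candidate arms.
pulls, posterior_mean = thompson_sampling([0.2, 0.5, 0.8], n_rounds=2000)
print("pulls per arm:", pulls)
print("posterior means:", np.round(posterior_mean, 3))
```

The sketch shows the property the abstract builds on: the algorithm concentrates its pulls on the best arm while still producing posterior estimates for each arm, so learning and estimation happen in the same loop.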
