Atia Cortés

Explaining Autonomous Vehicles with Intention-aware Policy Graphs

May 13, 2025

Abstract: The potential to improve road safety, reduce human driving error, and promote environmental sustainability has enabled the field of autonomous driving to progress rapidly over recent decades. The performance of autonomous vehicles has significantly improved thanks to advancements in Artificial Intelligence, particularly Deep Learning. Nevertheless, the opacity of their decision-making, rooted in the use of accurate yet complex AI models, has created barriers to societal trust and regulatory acceptance, raising the need for explainability. We propose a post-hoc, model-agnostic solution to provide teleological explanations for the behaviour of an autonomous vehicle in urban environments. Building on Intention-aware Policy Graphs, our approach enables the extraction of interpretable and reliable explanations of vehicle behaviour in the nuScenes dataset from global and local perspectives. We demonstrate the potential of these explanations to assess whether the vehicle operates within acceptable legal boundaries and to identify possible vulnerabilities in autonomous driving datasets and models.

Artificial Intelligence across Europe: A Study on Awareness, Attitude and Trust

Aug 19, 2023

Abstract: This paper presents the results of an extensive study investigating the opinions on Artificial Intelligence (AI) of a sample of 4,006 European citizens from eight distinct countries (France, Germany, Italy, Netherlands, Poland, Romania, Spain, and Sweden). The aim of the study is to gain a better understanding of people's views and perceptions within the European context, which is already marked by important policy actions and regulatory processes. To survey the perceptions of the citizens of Europe, we design and validate a new questionnaire (PAICE) structured around three dimensions: people's awareness, attitude, and trust. We observe that while awareness is characterized by a low level of self-assessed competency, the attitude toward AI is very positive for more than half of the population. Reflecting upon the collected results, we highlight implicit contradictions and identify trends that may interfere with the creation of an ecosystem of trust and the development of inclusive AI policies. The introduction of rules that ensure legal and ethical standards, the activity of high-level educational entities, and the promotion of AI literacy are identified as key factors in supporting a trustworthy AI ecosystem. We make some recommendations for AI governance focused on the European context and conclude with suggestions for future work.

European Strategy on AI: Are we truly fostering social good?

Nov 25, 2020

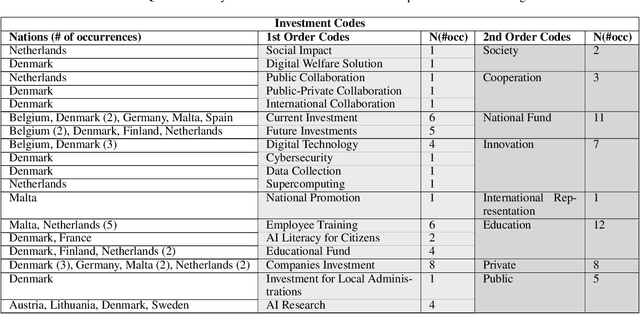

Abstract: Artificial intelligence (AI) is already part of our daily lives and is playing a key role in defining the economic and social shape of the future. In 2018, the European Commission introduced its AI strategy, intended to compete in the coming years with world powers such as China and the US while relying on respect for European values and fundamental rights. As a result, most of the Member States have published their own National Strategy with the aim of working on a coordinated plan for Europe. In this paper, we present an ongoing study on how European countries are approaching the field of Artificial Intelligence, with its promises and risks, through the lens of their national AI strategies. In particular, we aim to investigate how European countries are investing in AI and to what extent the stated plans can contribute to the benefit of the whole society. This paper reports the main findings of a qualitative analysis of the investment plans reported in 15 European National Strategies
