Arjun Lakshmipathy

PICO: Reconstructing 3D People In Contact with Objects

Apr 24, 2025

Abstract: Recovering 3D Human-Object Interaction (HOI) from single color images is challenging due to depth ambiguities, occlusions, and the huge variation in object shape and appearance. Thus, past work requires controlled settings such as known object shapes and contacts, and tackles only limited object classes. Instead, we need methods that generalize to natural images and novel object classes. We tackle this in two main ways: (1) We collect PICO-db, a new dataset of natural images uniquely paired with dense 3D contact on both body and object meshes. To this end, we use images from the recent DAMON dataset that are paired with contacts, but these contacts are only annotated on a canonical 3D body. In contrast, we seek contact labels on both the body and the object. To infer these given an image, we retrieve an appropriate 3D object mesh from a database by leveraging vision foundation models. Then, we project DAMON's body contact patches onto the object via a novel method needing only 2 clicks per patch. This minimal human input establishes rich contact correspondences between bodies and objects. (2) We exploit our new dataset of contact correspondences in a novel render-and-compare fitting method, called PICO-fit, to recover 3D body and object meshes in interaction. PICO-fit infers contact for the SMPL-X body, retrieves a likely 3D object mesh and contact from PICO-db for that object, and uses the contact to iteratively fit the 3D body and object meshes to image evidence via optimization. Uniquely, PICO-fit works well for many object categories that no existing method can tackle. This is crucial to enable HOI understanding to scale in the wild. Our data and code are available at https://pico.is.tue.mpg.de.

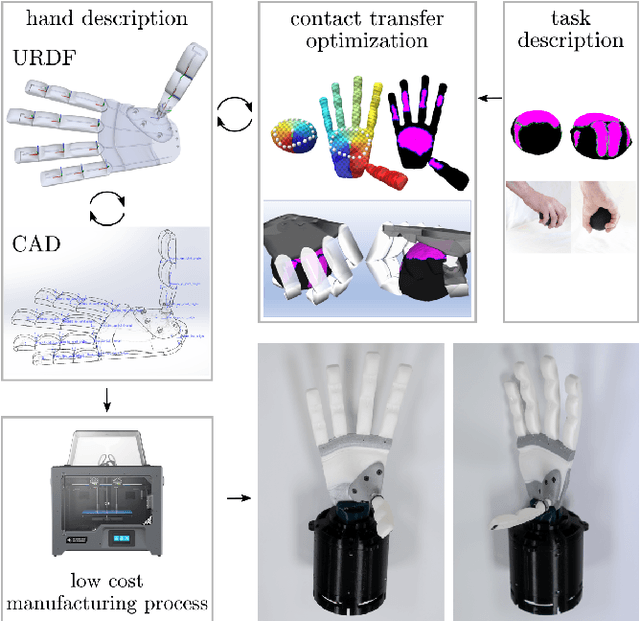

Towards Very Low-Cost Iterative Prototyping for Fully Printable Dexterous Soft Robotic Hands

Nov 02, 2021

Abstract: The design and fabrication of soft robot hands is still a time-consuming and difficult process. Advances in rapid prototyping have accelerated the fabrication process significantly while introducing new complexities into the design process. In this work, we present an approach that utilizes novel low-cost fabrication techniques in conjunction with design tools helping soft hand designers to systematically take advantage of multi-material 3D printing to create dexterous soft robotic hands. While very low cost and lightweight, we show that generated designs are highly durable, surprisingly strong, and capable of dexterous grasping.

Contact Transfer: A Direct, User-Driven Method for Human to Robot Transfer of Grasps and Manipulations

Oct 29, 2021

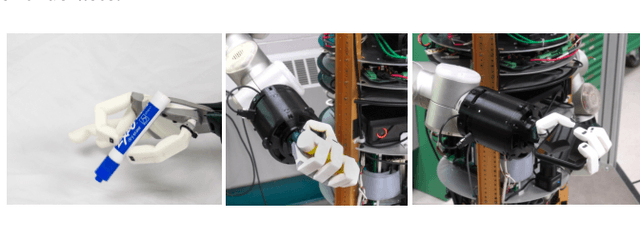

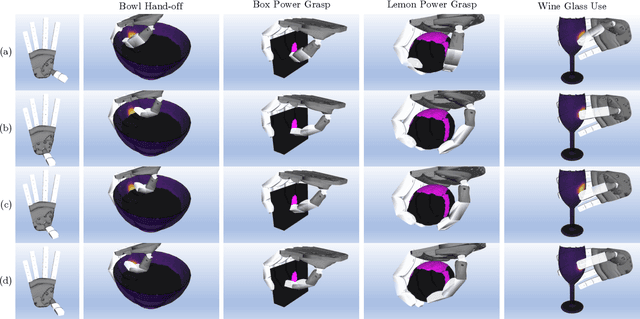

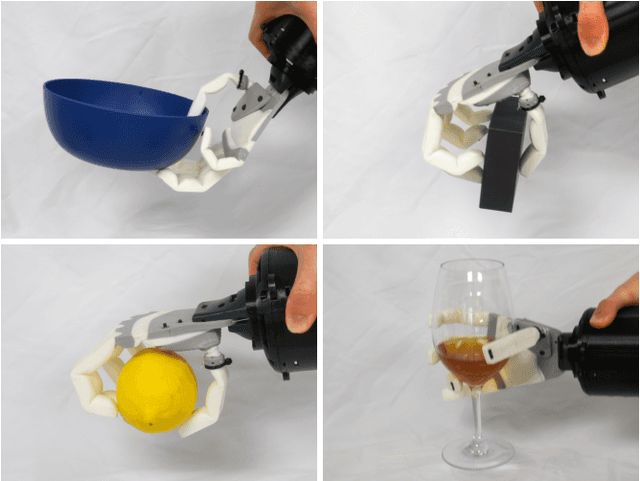

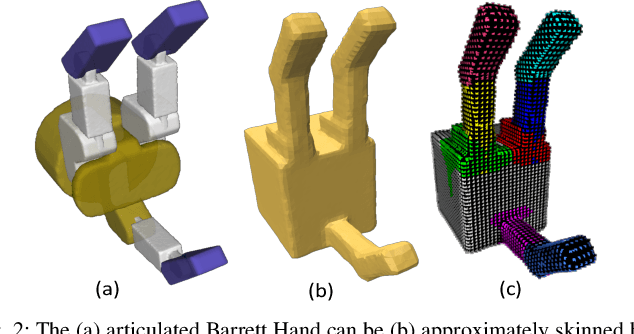

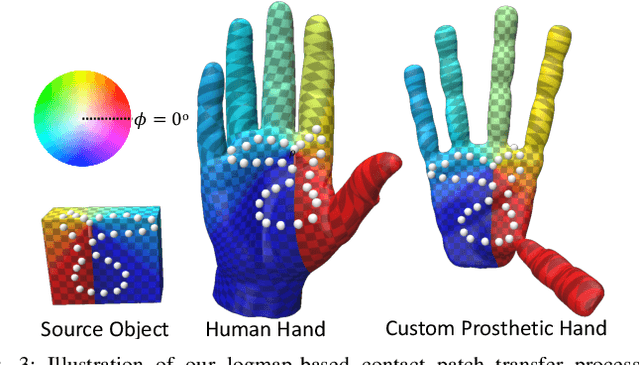

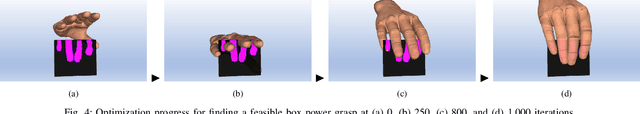

Abstract: We present a novel method for the direct transfer of grasps and manipulations between objects and hands through utilization of contact areas. Our method fully preserves contact shapes, and in contrast to existing techniques, is not dependent on grasp families, requires no model training or grasp sampling, makes no assumptions about manipulator morphology or kinematics, and allows user control over both transfer parameters and solution optimization. Despite these accommodations, we show that our method is capable of synthesizing kinematically feasible whole hand poses in seconds even for poor initializations or hard to reach contacts. We additionally highlight the method's benefits in both response to design alterations as well as fast approximation over in-hand manipulation sequences. Finally, we demonstrate a solution generated by our method on a physical, custom designed prosthetic hand.
