Arip Asadulaev

Zero-Shot Off-Policy Learning

Feb 02, 2026

Abstract:Off-policy learning methods seek to derive an optimal policy directly from a fixed dataset of prior interactions. This objective presents significant challenges, primarily due to the inherent distributional shift and value function overestimation bias. These issues become even more noticeable in zero-shot reinforcement learning, where an agent trained on reward-free data must adapt to new tasks at test time without additional training. In this work, we address the off-policy problem in a zero-shot setting by discovering a theoretical connection between successor measures and stationary density ratios. Using this insight, our algorithm can infer optimal importance sampling ratios, effectively performing a stationary distribution correction with an optimal policy for any task on the fly. We benchmark our method on motion tracking tasks on SMPL Humanoid, continuous control on ExoRL, and long-horizon OGBench tasks. Our technique integrates seamlessly into forward-backward representation frameworks and enables fast adaptation to new tasks in a training-free regime. More broadly, this work bridges off-policy learning and zero-shot adaptation, offering benefits to both research areas.

Expert or not? assessing data quality in offline reinforcement learning

Oct 14, 2025

Abstract:Offline reinforcement learning (RL) learns exclusively from static datasets, without further interaction with the environment. In practice, such datasets vary widely in quality, often mixing expert, suboptimal, and even random trajectories. The choice of algorithm therefore depends on dataset fidelity. Behavior cloning can suffice on high-quality data, whereas mixed- or low-quality data typically benefits from offline RL methods that stitch useful behavior across trajectories. Yet in the wild it is difficult to assess dataset quality a priori because the data's provenance and skill composition are unknown. We address the problem of estimating offline dataset quality without training an agent. We study a spectrum of proxies, from simple cumulative rewards to learned value-based estimators, and introduce the Bellman Wasserstein distance (BWD), a value-aware optimal transport score that measures how dissimilar a dataset's behavioral policy is from a random reference policy. BWD is computed from a behavioral critic and a state-conditional OT formulation, requiring no environment interaction or full policy optimization. Across D4RL MuJoCo tasks, BWD strongly correlates with an oracle performance score that aggregates multiple offline RL algorithms, enabling efficient prediction of how well standard agents will perform on a given dataset. Beyond prediction, integrating BWD as a regularizer during policy optimization explicitly pushes the learned policy away from random behavior and improves returns. These results indicate that value-aware, distributional signals such as BWD are practical tools for triaging offline RL datasets and for policy optimization.

Rethinking Optimal Transport in Offline Reinforcement Learning

Oct 17, 2024

Abstract:We propose a novel algorithm for offline reinforcement learning using optimal transport. Typically, in offline reinforcement learning, the data is provided by various experts, some of whom can be sub-optimal. To extract an efficient policy, it is necessary to $\textit{stitch}$ the best behaviors from the dataset. To address this problem, we rethink offline reinforcement learning as an optimal transportation problem. Based on this, we present an algorithm that aims to find a policy that maps states to a $\textit{partial}$ distribution of the best expert actions for each given state. We evaluate the performance of our algorithm on continuous control problems from the D4RL suite and demonstrate improvements over existing methods.

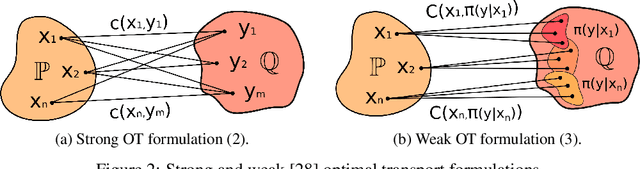

Inverse Entropic Optimal Transport Solves Semi-supervised Learning via Data Likelihood Maximization

Oct 03, 2024

Abstract:Learning conditional distributions $\pi^*(\cdot|x)$ is a central problem in machine learning, which is typically approached via supervised methods with paired data $(x,y) \sim \pi^*$. However, acquiring paired data samples is often challenging, especially in problems such as domain translation. This necessitates the development of $\textit{semi-supervised}$ models that utilize both limited paired data and additional unpaired i.i.d. samples $x \sim \pi^*_x$ and $y \sim \pi^*_y$ from the marginal distributions. The usage of such combined data is complex and often relies on heuristic approaches. To tackle this issue, we propose a new learning paradigm that integrates both paired and unpaired data $\textbf{seamlessly}$ through the data likelihood maximization techniques. We demonstrate that our approach also connects intriguingly with inverse entropic optimal transport (OT). This finding allows us to apply recent advances in computational OT to establish a $\textbf{light}$ learning algorithm to get $\pi^*(\cdot|x)$. Furthermore, we demonstrate through empirical tests that our method effectively learns conditional distributions using paired and unpaired data simultaneously.

Adversarial Training Improves Joint Energy-Based Generative Modelling

Jul 18, 2022

Abstract:We propose a novel framework for generative modelling using hybrid energy-based models. In our method, we combine the interpretable input gradients of a robust classifier with Langevin dynamics for sampling. Using adversarial training, we improve not only the training stability but also the robustness and generative modelling of joint energy-based models.

Multi-step domain adaptation by adversarial attack to $\mathcal{H} \Delta \mathcal{H}$-divergence

Jul 18, 2022

Abstract:Adversarial examples are transferable between different models. In our paper, we propose to use this property for multi-step domain adaptation. In unsupervised domain adaptation settings, we demonstrate that replacing the source domain with adversarial examples to $\mathcal{H} \Delta \mathcal{H}$-divergence can improve source classifier accuracy on the target domain. Our method can be connected to most domain adaptation techniques. We conducted a range of experiments and achieved improvement in accuracy on Digits and Office-Home datasets.

Easy Batch Normalization

Jul 18, 2022

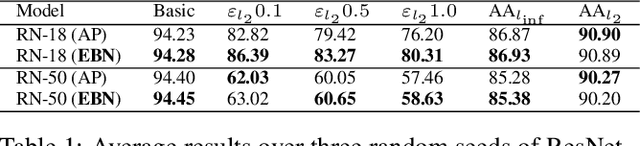

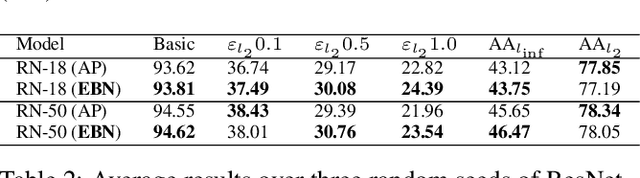

Abstract:It has been shown that adversarial examples improve object recognition. But what about their opposite, easy examples? Easy examples are samples that the machine learning model classifies correctly with high confidence. In our paper, we take a first step toward exploring the potential benefits of using easy examples in the training procedure of neural networks. We propose to use an auxiliary batch normalization for easy examples to improve both standard and robust accuracy.
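The definition of easy examples given in this abstract can be illustrated with a minimal sketch. It is not the paper's implementation: the function name `select_easy_examples` and the confidence threshold of 0.9 are assumptions made for illustration, since the abstract does not state how "high confidence" is quantified.

```python
def select_easy_examples(probs, labels, threshold=0.9):
    """Flag samples that are classified correctly with high confidence.

    probs:     list of per-class probability lists, one per sample
    labels:    list of true class indices
    threshold: hypothetical confidence cutoff (an assumption, not from the paper)
    """
    mask = []
    for row, label in zip(probs, labels):
        confidence = max(row)            # probability of the predicted class
        predicted = row.index(confidence)  # index of the predicted class
        mask.append(predicted == label and confidence >= threshold)
    return mask


# Toy predictions for four samples of a two-class problem.
probs = [
    [0.97, 0.03],  # correct and confident
    [0.55, 0.45],  # correct but not confident
    [0.08, 0.92],  # correct and confident
    [0.95, 0.05],  # confident but wrong
]
labels = [0, 0, 1, 1]
easy_mask = select_easy_examples(probs, labels)
```

In a setup like the one the abstract describes, a mask of this kind could decide which samples are routed through the auxiliary batch normalization; that routing is not shown here.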

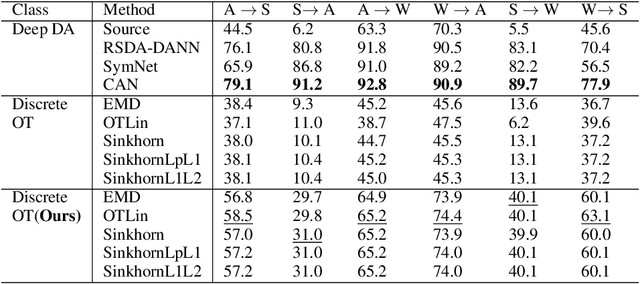

Connecting adversarial attacks and optimal transport for domain adaptation

Jun 04, 2022

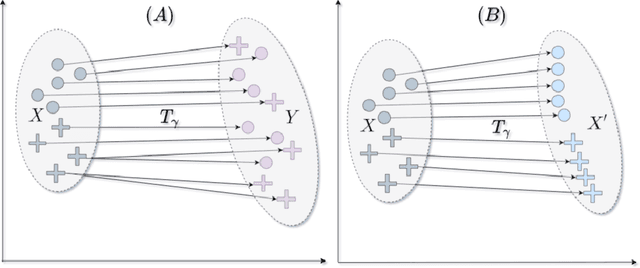

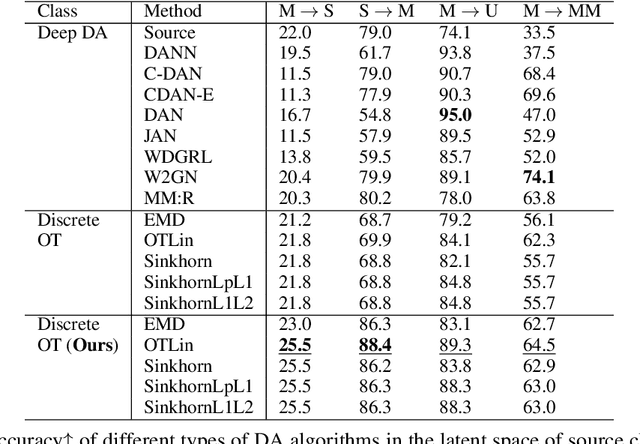

Abstract:We present a novel algorithm for domain adaptation using optimal transport. In domain adaptation, the goal is to adapt a classifier trained on the source domain samples to the target domain. In our method, we use optimal transport to map target samples to the domain named source fiction. This domain differs from the source but is accurately classified by the source domain classifier. Our main idea is to generate a source fiction by c-cyclically monotone transformation over the target domain. If samples with the same labels in two domains are c-cyclically monotone, the optimal transport map between these domains preserves the class-wise structure, which is the main goal of domain adaptation. To generate a source fiction domain, we propose an algorithm that is based on our finding that adversarial attacks are a c-cyclically monotone transformation of the dataset. We conduct experiments on Digits and Modern Office-31 datasets and achieve improvement in performance for simple discrete optimal transport solvers for all adaptation tasks.
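For readers unfamiliar with the term, the standard textbook definition of c-cyclical monotonicity is sketched below. The notation ($\Gamma$, $X$, $Y$, $\sigma$) is chosen here for illustration and is not taken from the paper. A set $\Gamma \subset X \times Y$ is c-cyclically monotone for a cost $c$ if, for every finite collection $(x_1, y_1), \dots, (x_n, y_n) \in \Gamma$ and every permutation $\sigma$ of $\{1, \dots, n\}$,

$$\sum_{i=1}^{n} c(x_i, y_i) \le \sum_{i=1}^{n} c(x_i, y_{\sigma(i)}).$$

A transformation $T$ is then called c-cyclically monotone when its graph $\{(x, T(x))\}$ satisfies this condition, meaning that no reassignment of the paired points lowers the total cost.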

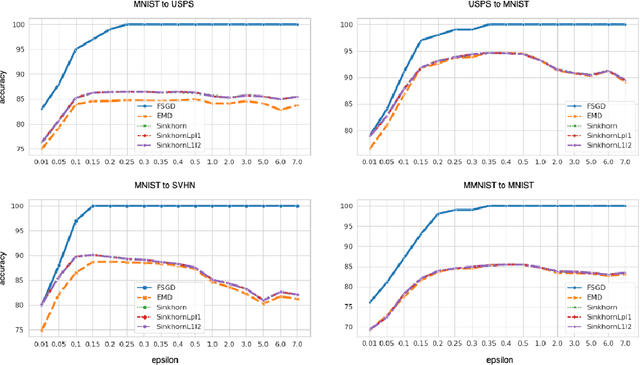

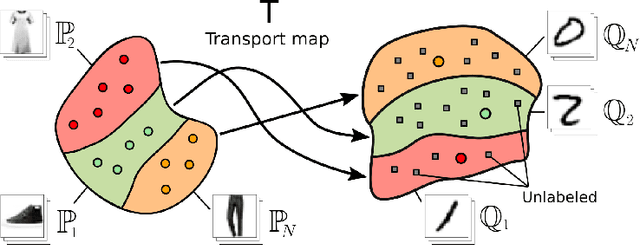

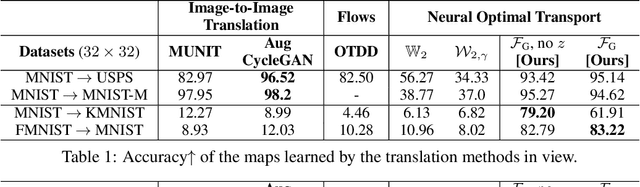

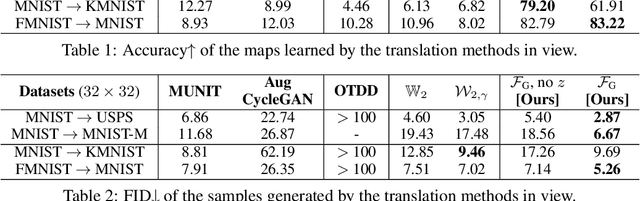

Neural Optimal Transport with General Cost Functionals

May 30, 2022

Abstract:We present a novel neural-network-based algorithm to compute optimal transport (OT) plans and maps for general cost functionals. The algorithm is based on a saddle point reformulation of the OT problem and generalizes prior OT methods for weak and strong cost functionals. As an application, we construct a functional to map data distributions while preserving the class-wise structure of the data.

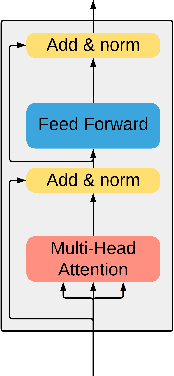

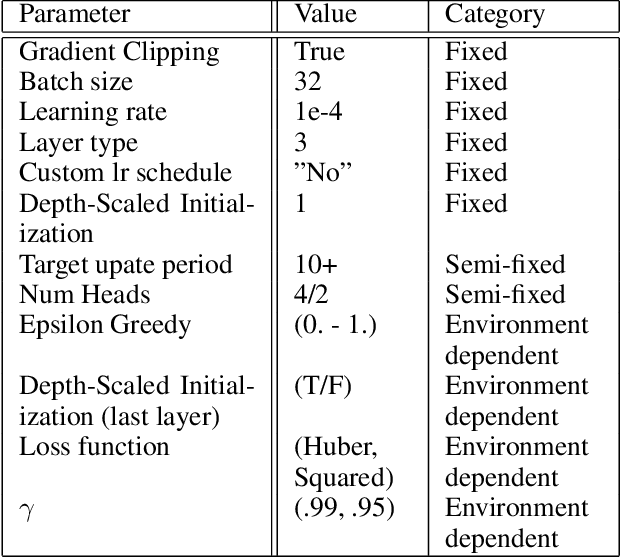

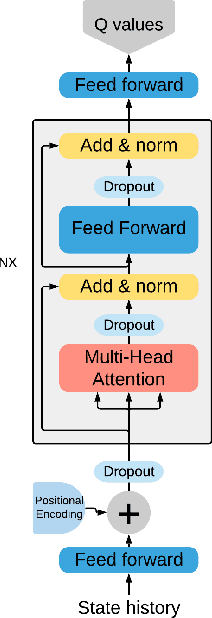

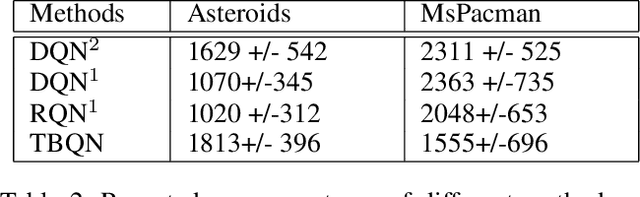

Stabilizing Transformer-Based Action Sequence Generation For Q-Learning

Oct 23, 2020

Abstract:Since the publication of the original Transformer architecture (Vaswani et al. 2017), Transformers have revolutionized the field of Natural Language Processing. This is mainly due to their ability to capture temporal dependencies better than competing RNN-based architectures. Surprisingly, this architecture change has not affected the field of Reinforcement Learning (RL), even though RNNs are quite popular in RL and temporal dependencies are very common there. Recently, Parisotto et al. (2019) conducted the first promising research on Transformers in RL. To support the findings of that work, this paper seeks to provide an additional example of a Transformer-based RL method. Specifically, the goal is a simple Transformer-based Deep Q-Learning method that is stable over several environments. Due to the unstable nature of Transformers and RL, an extensive method search was conducted to arrive at a final method that leverages developments around Transformers as well as Q-learning. The proposed method can match the performance of classic Q-learning on control environments while showing potential on some selected Atari benchmarks. Furthermore, it was critically evaluated to give additional insights into the relation between Transformers and RL.
