Anil K. Jain

End-to-End Pore Extraction and Matching in Latent Fingerprints: Going Beyond Minutiae

May 30, 2019

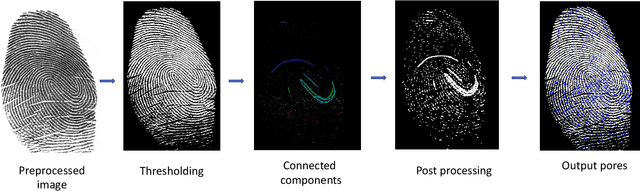

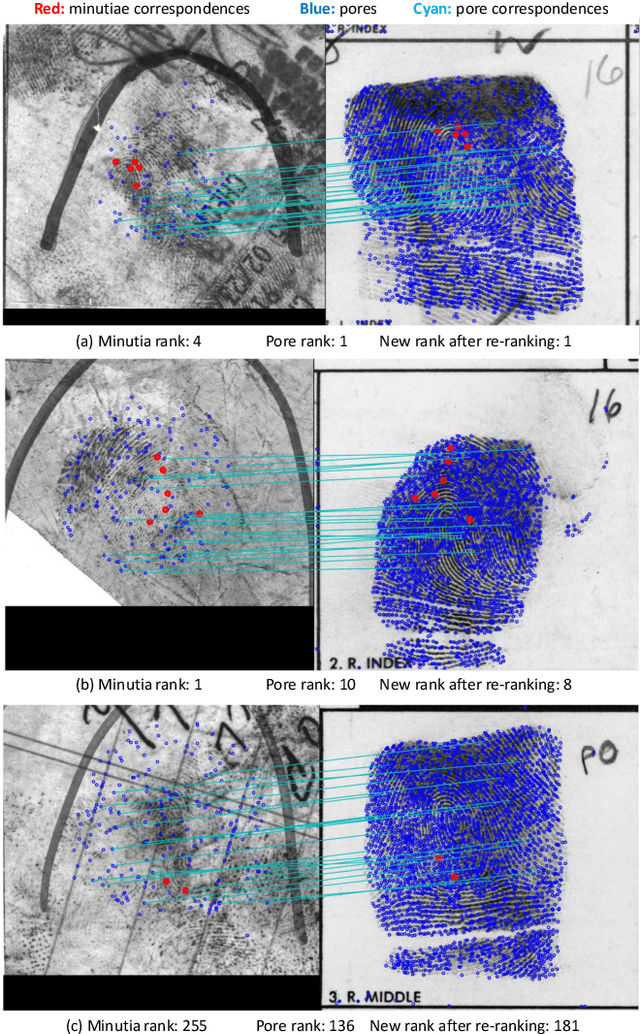

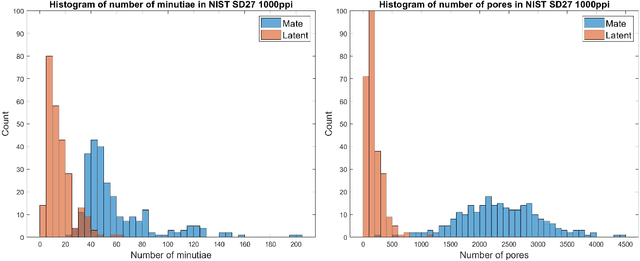

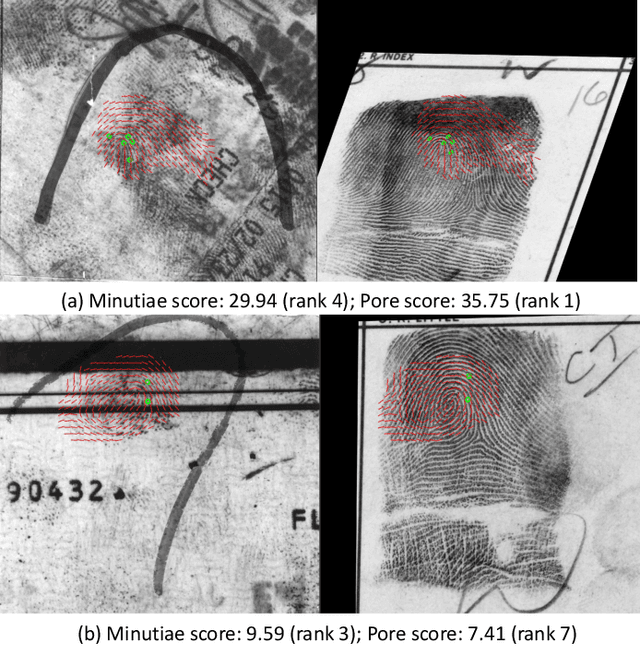

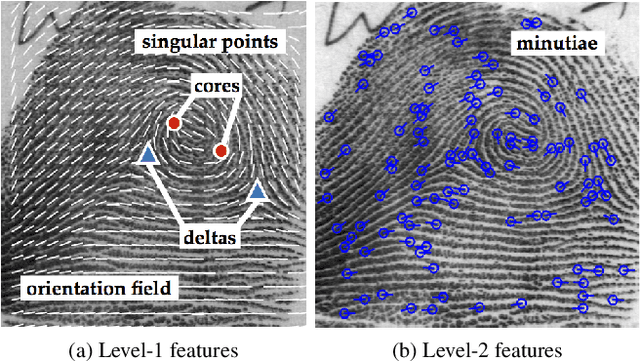

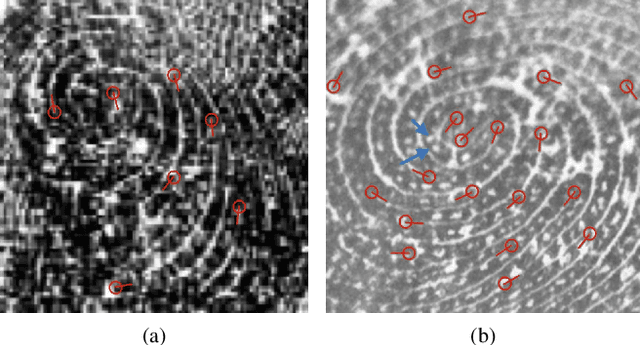

Abstract: Latent fingerprint recognition is not a new topic, but it has attracted a lot of attention from researchers in both academia and industry over the past 50 years. With the rapid development of pattern recognition techniques, automated fingerprint identification systems (AFIS) have become more and more ubiquitous. However, most AFIS are used for live-scan or rolled/slap prints, while only a few systems can work on latent fingerprints with reasonable accuracy. Whether higher-resolution scans of latent fingerprints and their rolled/slap mate prints could help improve identification accuracy remains an open question in the forensic community. Because pores are one of the most reliable features besides minutiae for identifying latent fingerprints, we propose an end-to-end automatic pore extraction and matching system to analyze the utility of pores in latent fingerprint identification. Hence, this paper answers two questions in the latent fingerprint domain: (i) does the incorporation of pores as level-3 features improve the system performance significantly? and (ii) does the 1,000 ppi image resolution improve the recognition results? We believe that our proposed end-to-end pore extraction and matching system will be a concrete baseline for future latent AFIS development.

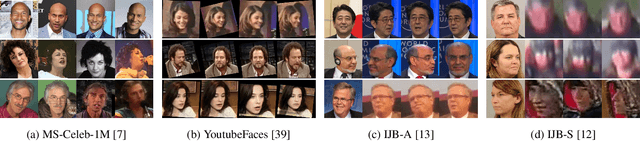

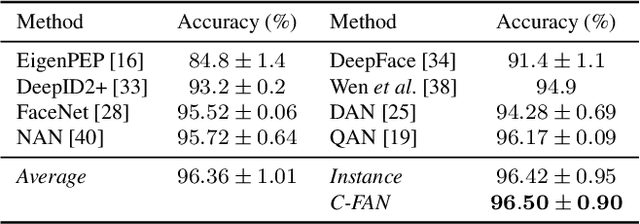

Recurrent Embedding Aggregation Network for Video Face Recognition

Apr 26, 2019

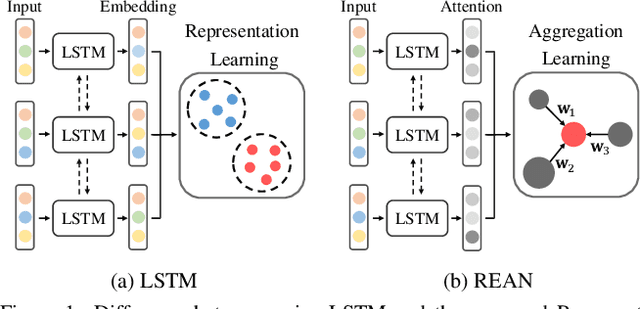

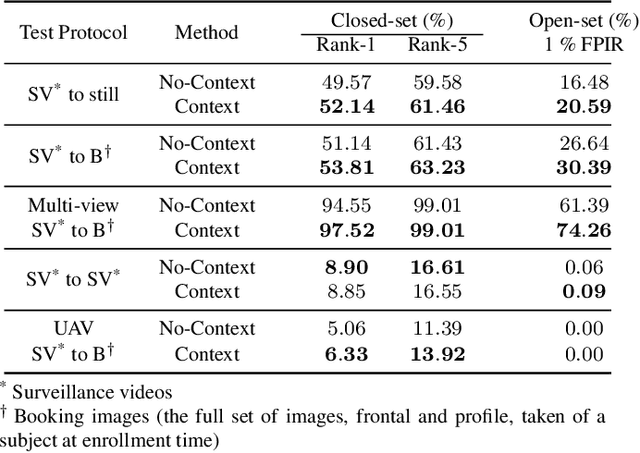

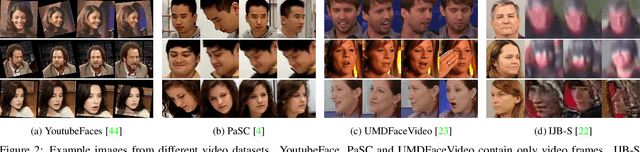

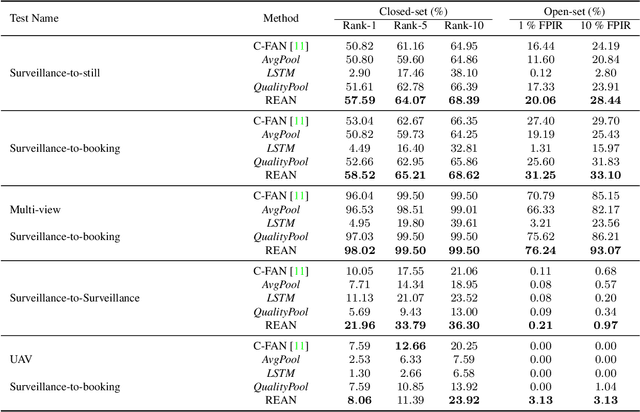

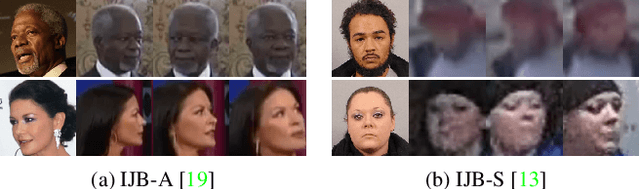

Abstract: Recurrent networks have been successful in analyzing temporal data and have been widely used for video analysis. However, for video face recognition, where the base CNNs trained on large-scale data already provide discriminative features, using Long Short-Term Memory (LSTM), a popular recurrent network, for feature learning could lead to overfitting and degrade the performance instead. We propose a Recurrent Embedding Aggregation Network (REAN) for set-to-set face recognition. Compared with LSTM, REAN is robust against overfitting because it only learns how to aggregate the pre-trained embeddings rather than learning representations from scratch. Compared with quality-aware aggregation methods, REAN can take advantage of the context information to circumvent the noise introduced by redundant video frames. Empirical results on three public domain video face recognition datasets, IJB-S, YTF, and PaSC, show that the proposed REAN significantly outperforms a naive CNN-LSTM structure and quality-aware aggregation methods.

Probabilistic Face Embeddings

Apr 25, 2019

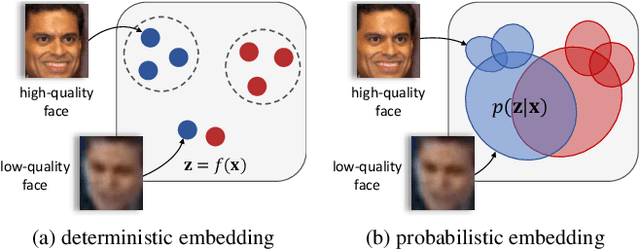

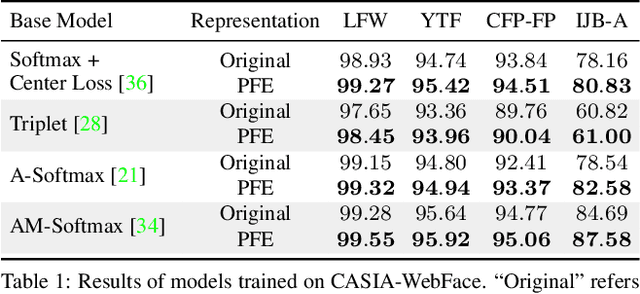

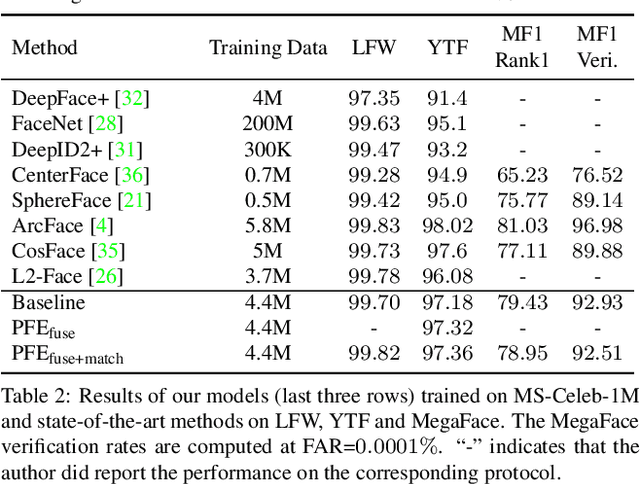

Abstract: Embedding methods have achieved success in face recognition by comparing facial features in a latent semantic space. However, in a fully unconstrained face setting, the features learned by the embedding model could be ambiguous or may not even be present in the input face, leading to noisy representations. We propose Probabilistic Face Embeddings (PFEs), which represent each face image as a Gaussian distribution in the latent space. The mean of the distribution estimates the most likely feature values while the variance shows the uncertainty in the feature values. Probabilistic solutions can then be naturally derived for matching and fusing PFEs using the uncertainty information. Empirical evaluation on different baseline models, training datasets and benchmarks shows that the proposed method can improve the face recognition performance of deterministic embeddings by converting them into PFEs. The uncertainties estimated by PFEs also serve as good indicators of the potential matching accuracy, which are important for a risk-controlled recognition system.
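The matching and fusion steps mentioned in the abstract can be illustrated with a minimal sketch. It assumes diagonal-covariance Gaussians, a log-likelihood style matching score, and precision-weighted fusion; the abstract does not spell out these formulas, and the function names `mutual_likelihood_score` and `fuse` are hypothetical.

```python
import math

def mutual_likelihood_score(mu1, var1, mu2, var2):
    """Log-likelihood that two diagonal Gaussians share one latent code.

    Assumption: each embedding is a (mean, variance) pair per dimension.
    """
    total = 0.0
    for m1, v1, m2, v2 in zip(mu1, var1, mu2, var2):
        v = v1 + v2  # combined uncertainty of the two embeddings
        total += (m1 - m2) ** 2 / v + math.log(v) + math.log(2 * math.pi)
    return -0.5 * total

def fuse(mus, variances):
    """Precision-weighted fusion of several embeddings of the same identity."""
    dim = len(mus[0])
    fused_mu, fused_var = [], []
    for d in range(dim):
        # More certain (low-variance) observations get a larger weight.
        precision = sum(1.0 / v[d] for v in variances)
        mean = sum(m[d] / v[d] for m, v in zip(mus, variances)) / precision
        fused_mu.append(mean)
        fused_var.append(1.0 / precision)
    return fused_mu, fused_var
```

Under these assumptions, two embeddings with the same mean score higher when both variances are small, and fusing more observations always shrinks the fused variance.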

Fingerprints: Fixed Length Representation via Deep Networks and Domain Knowledge

Apr 01, 2019

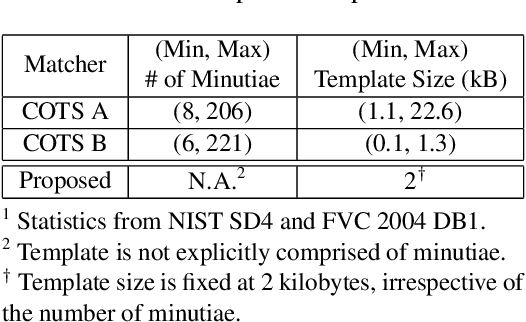

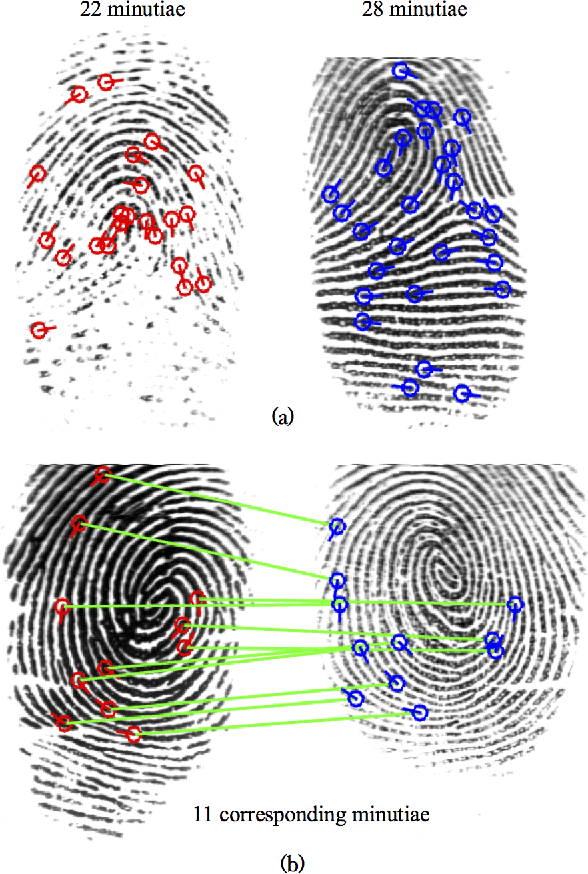

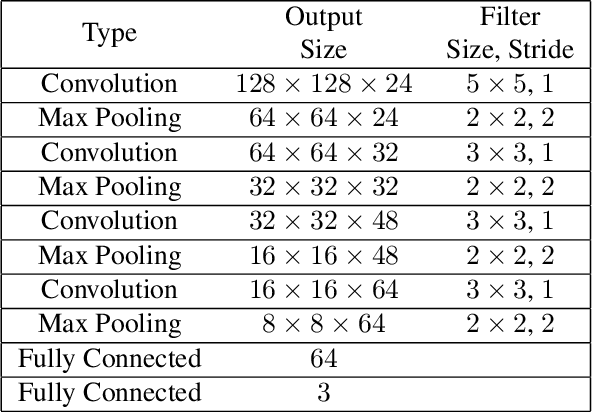

Abstract: We learn a discriminative fixed-length feature representation of fingerprints, which stands in contrast to commonly used unordered, variable-length sets of minutiae points. To arrive at this fixed-length representation, we embed fingerprint domain knowledge into a multitask deep convolutional neural network architecture. Empirical results on two public-domain fingerprint databases (NIST SD4 and FVC 2004 DB1) show that, compared to minutiae representations extracted by two state-of-the-art commercial matchers (Verifinger v6.3 and Innovatrics v2.0.3), our fixed-length representations provide (i) higher search accuracy: Rank-1 accuracy of 97.9% vs. 97.3% on NIST SD4 against a gallery size of 2000 and (ii) significantly faster, large-scale search: 682,594 matches per second vs. 22 matches per second for commercial matchers on an i5 3.3 GHz processor with 8 GB of RAM.
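To illustrate why a fixed-length representation makes large-scale search fast, here is a minimal sketch of rank-1 search over fixed-length vectors. Each comparison is a single dot product rather than an alignment of two variable-length minutiae sets. The use of cosine similarity and the function names are assumptions for illustration; the abstract does not state the similarity measure.

```python
import math

def l2_normalize(vec):
    """Scale a vector to unit length so a dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]

def rank1_search(probe, gallery):
    """Return (index, score) of the gallery template most similar to the probe."""
    p = l2_normalize(probe)
    best_idx, best_score = -1, -2.0  # cosine similarity is never below -1
    for idx, template in enumerate(gallery):
        g = l2_normalize(template)
        score = sum(a * b for a, b in zip(p, g))  # one dot product per match
        if score > best_score:
            best_idx, best_score = idx, score
    return best_idx, best_score
```

In practice the gallery would be normalized once and stored, so each search reduces to a matrix-vector product.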

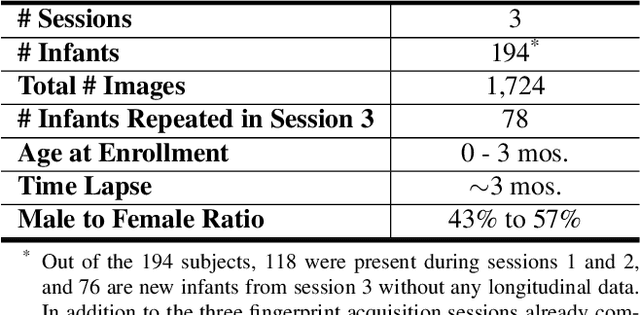

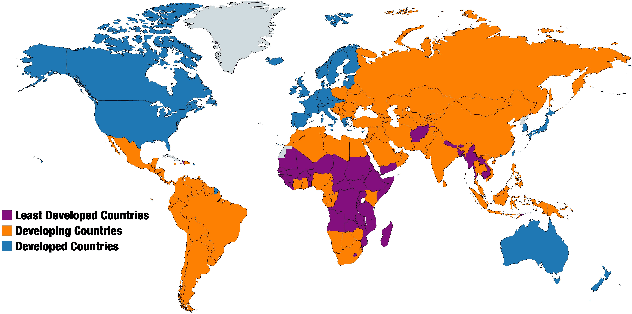

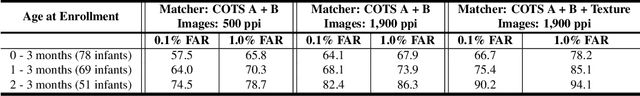

Infant-Prints: Fingerprints for Reducing Infant Mortality

Apr 01, 2019

Abstract: In developing countries around the world, a multitude of infants continue to suffer and die from vaccine-preventable diseases and malnutrition. Lamentably, the lack of any official identification documentation makes it exceedingly difficult to prevent these infant deaths. To solve this global crisis, we propose Infant-Prints, which comprises (i) a custom, compact, low-cost (85 USD), high-resolution (1,900 ppi) fingerprint reader, (ii) a high-resolution fingerprint matcher, and (iii) a mobile application for search and verification of infant fingerprints. Using Infant-Prints, we have collected a longitudinal database of infant fingerprints and demonstrate its ability to perform accurate and reliable recognition of infants enrolled at the ages of 0-3 months, in time for effective delivery of critical vaccinations and nutritional supplements (TAR = 90% @ FAR = 0.1% for infants older than 8 weeks).
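The "TAR @ FAR" figure quoted above is a standard verification metric: the true accept rate measured at the decision threshold that yields a given false accept rate. A minimal sketch of one way to compute it from similarity scores follows; the function name and the strict-threshold convention are illustrative assumptions, not the authors' evaluation code.

```python
def tar_at_far(genuine, impostor, far):
    """True accept rate at the threshold admitting at most `far` of impostors.

    `genuine` and `impostor` are lists of similarity scores (higher = more similar).
    """
    ranked = sorted(impostor, reverse=True)
    allowed = int(far * len(ranked))  # impostor scores permitted to pass
    # Accept only scores strictly above the (allowed + 1)-th highest impostor score.
    threshold = ranked[allowed] if allowed < len(ranked) else float("-inf")
    accepted = sum(1 for s in genuine if s > threshold)
    return accepted / len(genuine)
```

For example, with 1,000 impostor comparisons and `far=0.001`, the threshold is set so that at most one impostor score is accepted, and the function reports the fraction of genuine comparisons that still pass.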

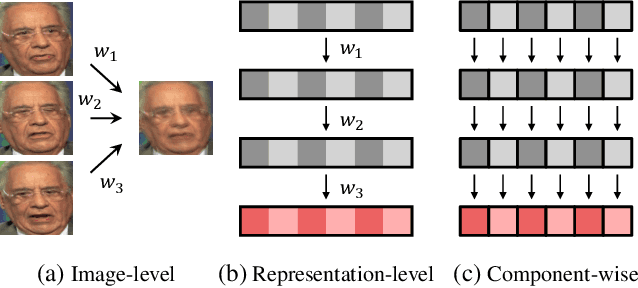

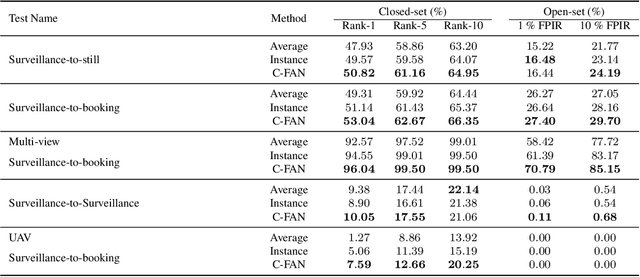

Video Face Recognition: Component-wise Feature Aggregation Network (C-FAN)

Feb 21, 2019

Abstract: We propose a new approach to video face recognition. Our component-wise feature aggregation network (C-FAN) accepts a set of face images of a subject as an input, and outputs a single feature vector as the face representation of the set for the recognition task. The whole network is trained in two steps: (i) train a base CNN for still image face recognition; (ii) add an aggregation module to the base network to learn the quality value for each feature component, which adaptively aggregates deep feature vectors into a single vector to represent the face in a video. C-FAN automatically learns to retain salient face features with high quality scores while suppressing features with low quality scores. The experimental results on three benchmark datasets, YouTube Faces, IJB-A, and IJB-S, show that the proposed C-FAN network is capable of generating a compact feature vector with 512 dimensions for a video sequence by efficiently aggregating feature vectors of all the video frames to achieve state-of-the-art performance.
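The component-wise aggregation described in the abstract can be sketched as follows. The sketch assumes that the per-component quality values are normalized with a softmax across frames before the weighted sum; in C-FAN the quality values come from a learned module, whereas here they are simply passed in. The function name `aggregate` is hypothetical.

```python
import math

def aggregate(features, qualities):
    """Fuse per-frame feature vectors into one vector, component by component.

    features[t][d]  : value of component d in frame t
    qualities[t][d] : quality value of component d in frame t (assumed given)
    """
    dim = len(features[0])
    fused = []
    for d in range(dim):
        q = [frame_q[d] for frame_q in qualities]
        q_max = max(q)  # subtract the max for numerical stability
        weights = [math.exp(x - q_max) for x in q]
        total = sum(weights)
        # Weighted average of component d over all frames.
        fused.append(sum(w / total * f[d] for w, f in zip(weights, features)))
    return fused
```

Because each component has its own weights, a frame can contribute strongly to some components while being suppressed in others, unlike a single per-frame quality score.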

Actions Speak Louder Than (Pass)words: Passive Authentication of Smartphone Users via Deep Temporal Features

Jan 16, 2019

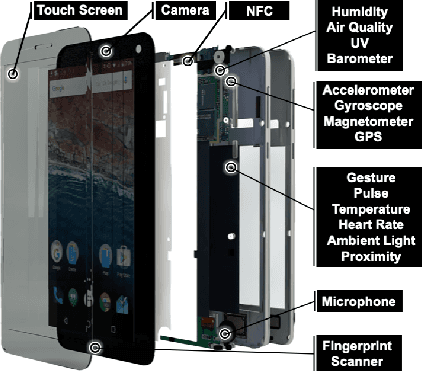

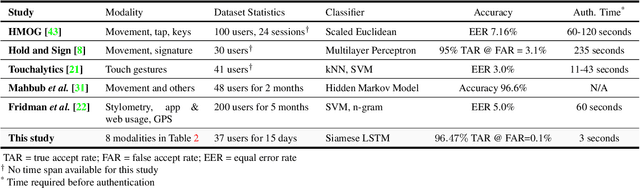

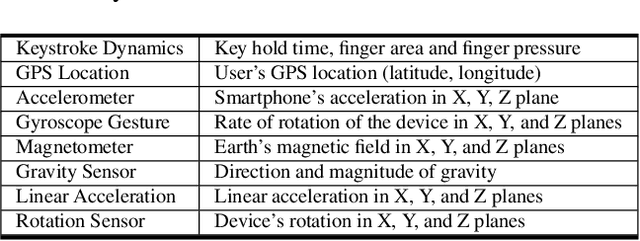

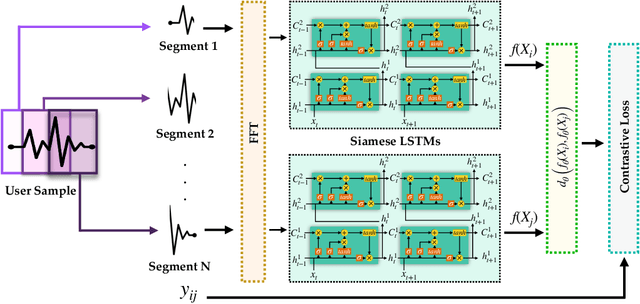

Abstract: Prevailing user authentication schemes on smartphones rely on explicit user interaction, where a user types in a passcode or presents a biometric cue such as face, fingerprint, or iris. In addition to being cumbersome and obtrusive to the users, such authentication mechanisms pose security and privacy concerns. Passive authentication systems can tackle these challenges by frequently and unobtrusively monitoring the user's interaction with the device. In this paper, we propose a Siamese Long Short-Term Memory network architecture for passive authentication, where users can be verified without requiring any explicit authentication step. We acquired a dataset comprising measurements from 30 smartphone sensor modalities for 37 users. We evaluate our approach on 8 dominant modalities, namely, keystroke dynamics, GPS location, accelerometer, gyroscope, magnetometer, linear accelerometer, gravity, and rotation sensors. Experimental results show that, within 3 seconds, a genuine user can be correctly verified 97.15% of the time at a false accept rate of 0.1%.

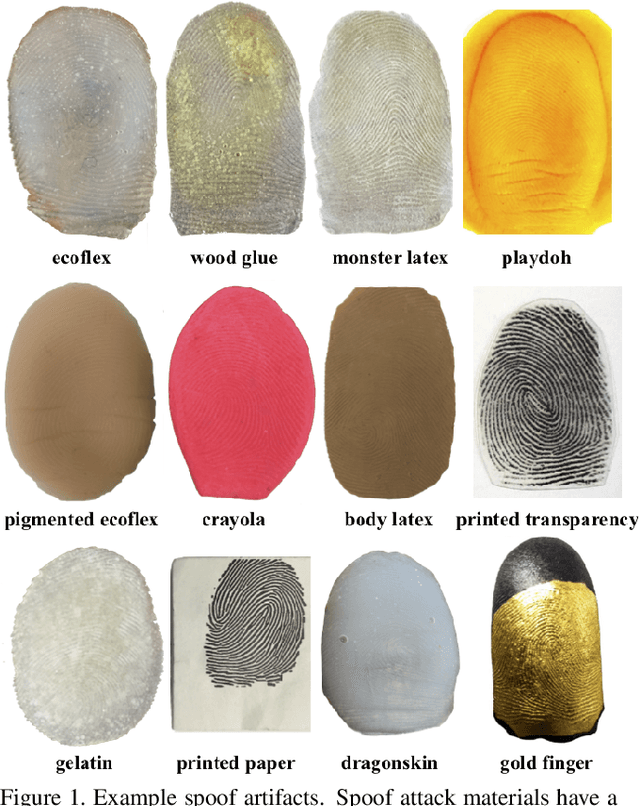

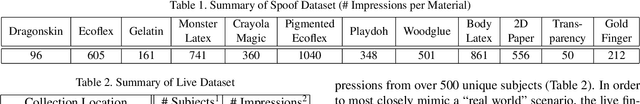

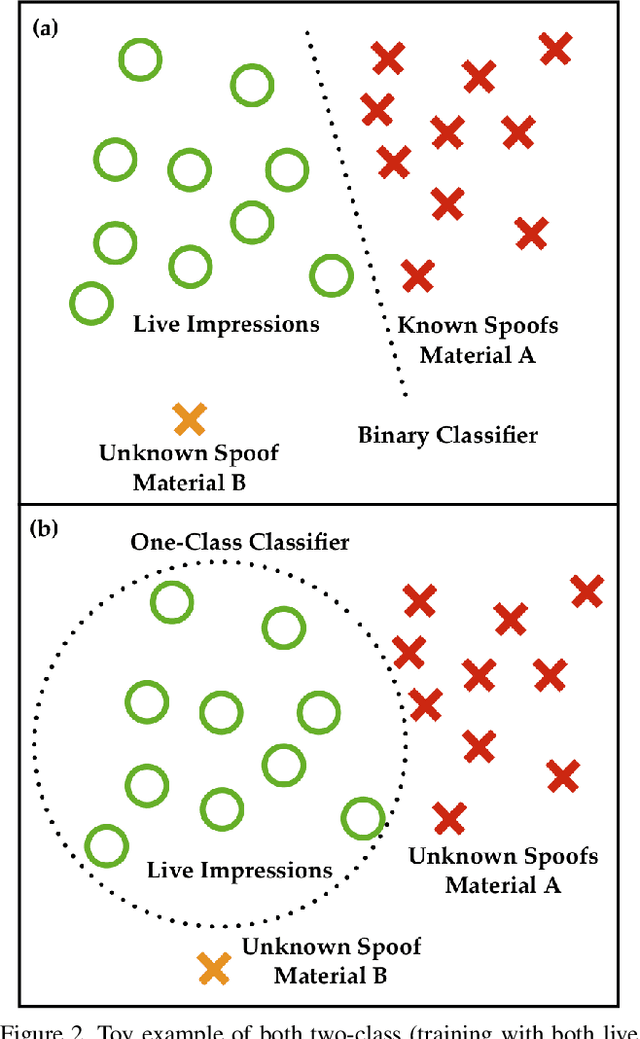

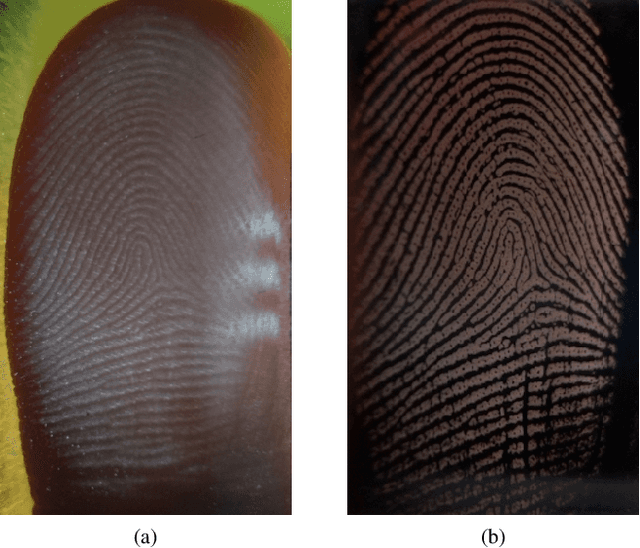

Generalizing Fingerprint Spoof Detector: Learning a One-Class Classifier

Jan 13, 2019

Abstract: Prevailing fingerprint recognition systems are vulnerable to spoof attacks. To mitigate these attacks, automated spoof detectors are trained to distinguish a set of live or bona fide fingerprints from a set of known spoof fingerprints. However, spoof detectors remain vulnerable when exposed to attacks from spoofs made with materials not seen during training of the detector. To alleviate this shortcoming, we approach spoof detection as a one-class classification problem. The goal is to train a spoof detector on only the live fingerprints such that once the concept of "live" has been learned, spoofs of any material can be rejected. We accomplish this through training multiple generative adversarial networks (GANs) on live fingerprint images acquired with the open source, dual-camera, 1900 ppi RaspiReader fingerprint reader. Our experimental results, conducted on 5.5K spoof images (from 12 materials) and 11.8K live images, show that the proposed approach improves the cross-material spoof detection performance over state-of-the-art one-class and binary class spoof detectors: TDR = 84.4% vs. TDR = 40.3% (both @ FDR = 0.2%).

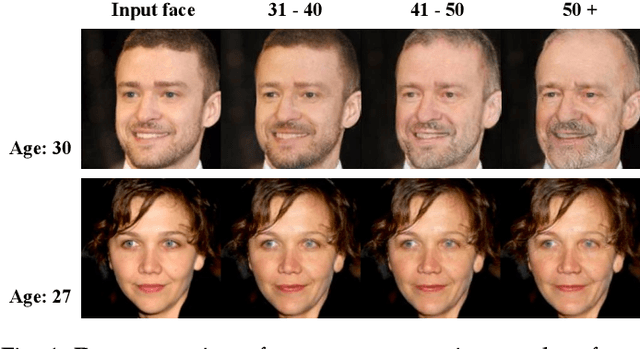

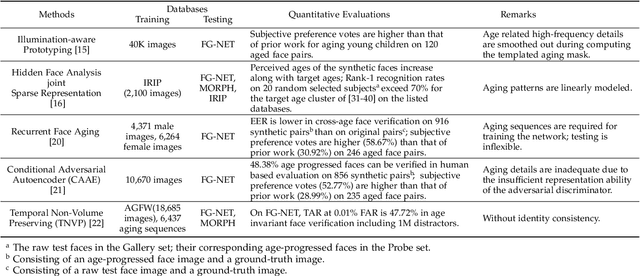

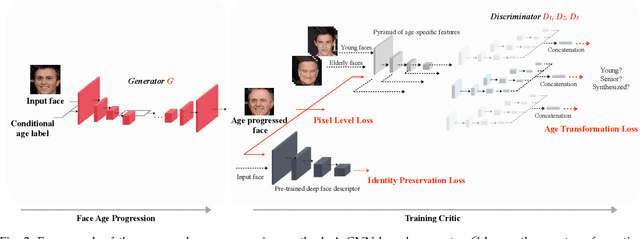

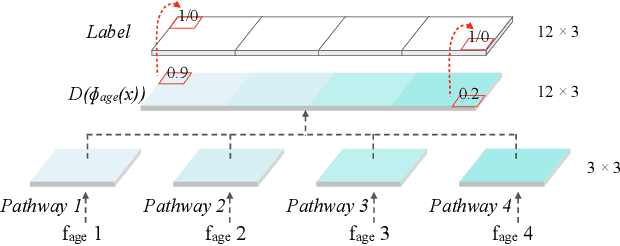

Learning Continuous Face Age Progression: A Pyramid of GANs

Jan 10, 2019

Abstract: The two underlying requirements of face age progression, i.e. aging accuracy and identity permanence, are not well studied in the literature. This paper presents a novel generative adversarial network based approach to address the issues in a coupled manner. It separately models the constraints for the intrinsic subject-specific characteristics and the age-specific facial changes with respect to the elapsed time, ensuring that the generated faces present desired aging effects while simultaneously keeping personalized properties stable. To ensure photo-realistic facial details, high-level age-specific features conveyed by the synthesized face are estimated by a pyramidal adversarial discriminator at multiple scales, which simulates the aging effects with finer details. Further, an adversarial learning scheme is introduced to simultaneously train a single generator and multiple parallel discriminators, resulting in smooth continuous face aging sequences. The proposed method is applicable even in the presence of variations in pose, expression, makeup, etc., achieving remarkably vivid aging effects. Quantitative evaluations by a COTS face recognition system demonstrate that the target age distributions are accurately recovered, and 99.88% and 99.98% of age-progressed faces can be correctly verified at 0.001% FAR after age transformations of approximately 28 and 23 years elapsed time on the MORPH and CACD databases, respectively. Both visual and quantitative assessments show that the approach advances the state-of-the-art.

Fingerprint Presentation Attack Detection: Generalization and Efficiency

Dec 30, 2018

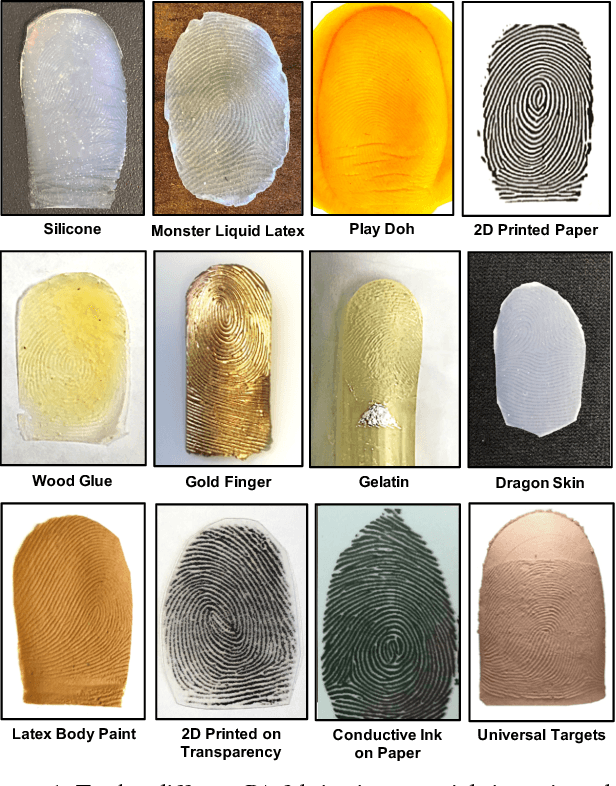

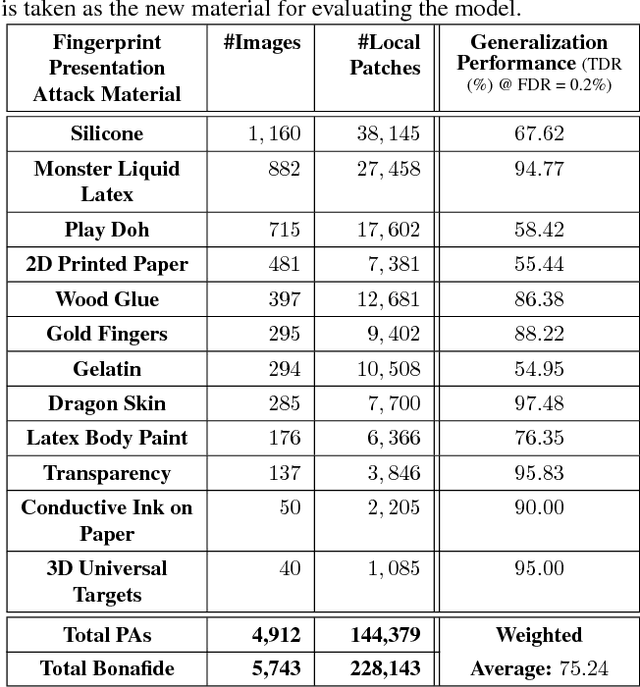

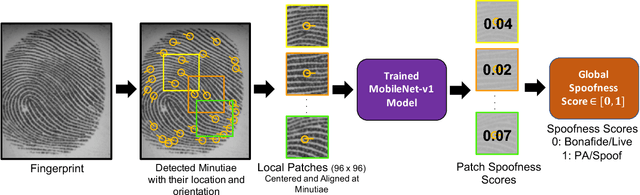

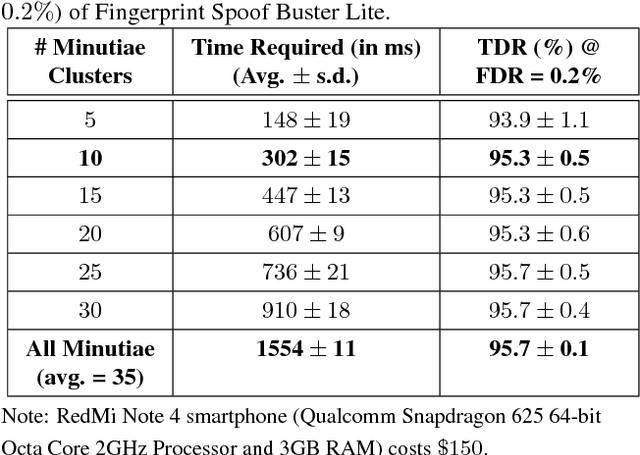

Abstract: We study the problem of fingerprint presentation attack detection (PAD) under unknown PA materials not seen during PAD training. A dataset of 5,743 bona fide and 4,912 PA images of 12 different materials is used to evaluate a state-of-the-art PAD, namely Fingerprint Spoof Buster. We utilize 3D t-SNE visualization and clustering of material characteristics to identify a representative set of PA materials that covers most of the PA feature space. We observe that a set of six PA materials, namely Silicone, 2D Paper, Play Doh, Gelatin, Latex Body Paint and Monster Liquid Latex, provides a good representative set that should be included in training to achieve generalization of PAD. We also propose an optimized Android app of Fingerprint Spoof Buster that can run on a commodity smartphone (Xiaomi Redmi Note 4) and make a PA prediction in less than 300 ms without a significant drop in PAD performance (from TDR = 95.7% to 95.3% @ FDR = 0.2%).