Aniesh Chawla

A Decompilation-Driven Framework for Malware Detection with Large Language Models

Jan 14, 2026

Abstract:The parallel evolution of Large Language Models (LLMs) with advanced code-understanding capabilities and the increasing sophistication of malware presents a new frontier for cybersecurity research. This paper evaluates the efficacy of state-of-the-art LLMs in classifying executable code as either benign or malicious. We introduce an automated pipeline that first decompiles Windows executables into C code using the Ghidra disassembler and then leverages LLMs to perform the classification. Our evaluation reveals that while standard LLMs show promise, they are not yet robust enough to replace traditional anti-virus software. We demonstrate that a fine-tuned model, trained on curated malware and benign datasets, significantly outperforms its vanilla counterpart. However, the performance of even this specialized model degrades notably when encountering newer malware. This finding demonstrates the critical need for continuous fine-tuning with emerging threats to maintain model effectiveness against the changing coding patterns and behaviors of malicious software.

Proactively Detecting Threats: A Novel Approach Using LLMs

Jan 13, 2026

Abstract:Enterprise security faces escalating threats from sophisticated malware, compounded by expanding digital operations. This paper presents the first systematic evaluation of large language models (LLMs) for proactively identifying indicators of compromise (IOCs) from unstructured web-based threat intelligence sources, which distinguishes it from reactive malware detection approaches. We developed an automated system that pulls IOCs from 15 web-based threat report sources to evaluate six LLMs (Gemini, Qwen, and Llama variants). Our evaluation of 479 webpages containing 2,658 IOCs (711 IPv4 addresses, 502 IPv6 addresses, 1,445 domains) reveals significant performance variations. Gemini 1.5 Pro achieved 0.958 precision and 0.788 specificity for malicious IOC identification, while demonstrating perfect recall (1.0) for actual threats.
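For readers comparing the three metrics quoted in this abstract, the following is a minimal sketch of how precision, recall, and specificity are derived from a confusion matrix. The `Confusion` class, the helper names, and the counts in the usage example are hypothetical illustrations and are not taken from the paper's data.

```python
from dataclasses import dataclass


@dataclass
class Confusion:
    """Counts for a binary 'malicious vs. benign' IOC classifier (hypothetical)."""
    tp: int  # malicious IOCs flagged as malicious
    fp: int  # benign IOCs flagged as malicious
    tn: int  # benign IOCs flagged as benign
    fn: int  # malicious IOCs flagged as benign


def _ratio(numerator: int, denominator: int) -> float:
    # Return NaN instead of raising when a class is absent from the sample.
    return numerator / denominator if denominator else float("nan")


def precision(c: Confusion) -> float:
    # Of everything flagged malicious, the fraction that really was.
    return _ratio(c.tp, c.tp + c.fp)


def recall(c: Confusion) -> float:
    # Of all truly malicious IOCs, the fraction that was flagged.
    return _ratio(c.tp, c.tp + c.fn)


def specificity(c: Confusion) -> float:
    # Of all truly benign IOCs, the fraction that was left unflagged.
    return _ratio(c.tn, c.tn + c.fp)


# Illustrative counts only: no false negatives, so recall is exactly 1.0.
example = Confusion(tp=8, fp=2, tn=6, fn=0)
print(precision(example), recall(example), specificity(example))
```

A recall of 1.0 only requires that no malicious IOC is missed, so it can coexist with lower specificity when some benign IOCs are also flagged.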

Automated Discovery of Real-Time Network Camera Data From Heterogeneous Web Pages

Mar 23, 2021

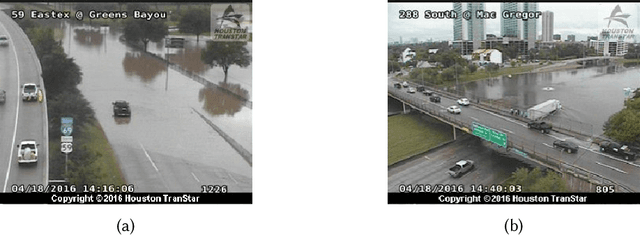

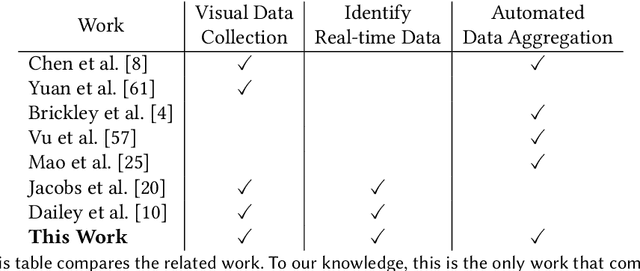

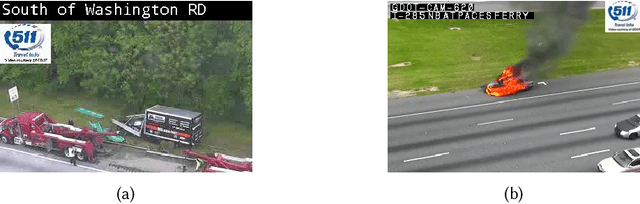

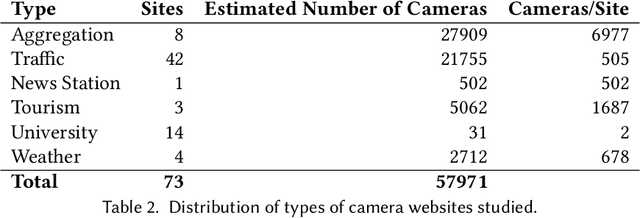

Abstract:Reduction in the cost of Network Cameras along with a rise in connectivity enables entities all around the world to deploy vast arrays of camera networks. Network cameras offer real-time visual data that can be used for studying traffic patterns, emergency response, security, and other applications. Although many sources of Network Camera data are available, collecting the data remains difficult due to variations in programming interfaces and website structures. Previous solutions rely on manually parsing the target website, taking many hours to complete. We create a general and automated solution for aggregating Network Camera data spread across thousands of uniquely structured web pages. We analyze heterogeneous web page structures and identify common characteristics among 73 sample Network Camera websites (each website has multiple web pages). These characteristics are then used to build an automated camera discovery module that crawls and aggregates Network Camera data. Our system successfully extracts 57,364 Network Cameras from 237,257 unique web pages.

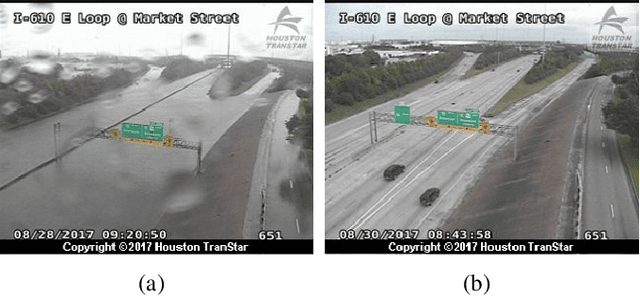

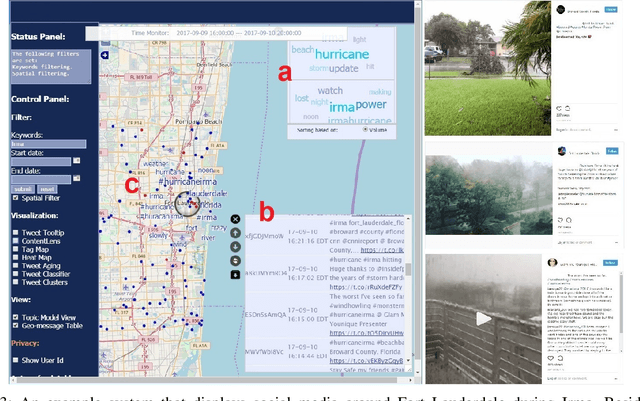

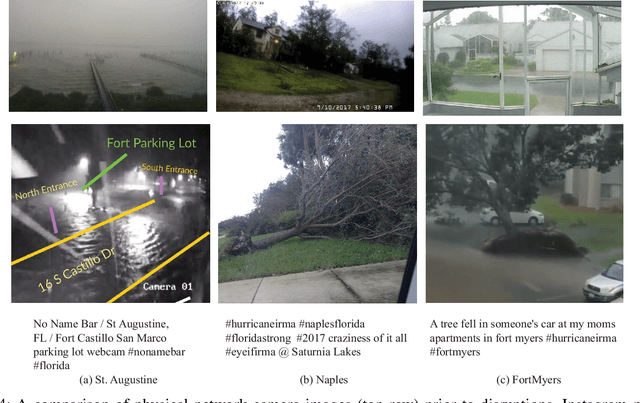

Cross-referencing Social Media and Public Surveillance Camera Data for Disaster Response

Jan 19, 2019

Abstract:Physical media (like surveillance cameras) and social media (like Instagram and Twitter) may both be useful in attaining on-the-ground information during an emergency or disaster situation. However, the intersection and reliability of both surveillance cameras and social media during a natural disaster are not fully understood. To address this gap, we tested whether social media was of utility when physical surveillance cameras went off-line during Hurricane Irma in 2017. Specifically, we collected and compared geo-tagged Instagram and Twitter posts in the state of Florida during times and in areas where public surveillance cameras went off-line. We report social media content and frequency to determine its utility for emergency managers or first responders during a natural disaster.
