Angela Chao

X-Sim: Cross-Embodiment Learning via Real-to-Sim-to-Real

May 15, 2025

Abstract: Human videos offer a scalable way to train robot manipulation policies, but lack the action labels needed by standard imitation learning algorithms. Existing cross-embodiment approaches try to map human motion to robot actions, but often fail when the embodiments differ significantly. We propose X-Sim, a real-to-sim-to-real framework that uses object motion as a dense and transferable signal for learning robot policies. X-Sim starts by reconstructing a photorealistic simulation from an RGBD human video and tracking object trajectories to define object-centric rewards. These rewards are used to train a reinforcement learning (RL) policy in simulation. The learned policy is then distilled into an image-conditioned diffusion policy using synthetic rollouts rendered with varied viewpoints and lighting. To transfer to the real world, X-Sim introduces an online domain adaptation technique that aligns real and simulated observations during deployment. Importantly, X-Sim does not require any robot teleoperation data. We evaluate it across 5 manipulation tasks in 2 environments and show that it: (1) improves task progress by 30% on average over hand-tracking and sim-to-real baselines, (2) matches behavior cloning with 10x less data collection time, and (3) generalizes to new camera viewpoints and test-time changes. Code and videos are available at https://portal-cornell.github.io/X-Sim/.
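The abstract does not specify the functional form of the object-centric reward. As a rough illustration only, here is a minimal Python sketch. It assumes the tracked object trajectory is a list of 3D positions, one per timestep, and that the reward decays exponentially with the distance between the simulated object and the reference waypoint at the same timestep. `object_centric_reward` and `scale` are hypothetical names, not X-Sim's code.

```python
import math
from typing import Sequence, Tuple

Position = Tuple[float, float, float]


def object_centric_reward(
    sim_object_pos: Position,
    tracked_trajectory: Sequence[Position],
    t: int,
    scale: float = 10.0,
) -> float:
    """Hypothetical dense reward: how closely the simulated object follows the
    object trajectory tracked from the human video at timestep t.

    Returns exp(-scale * distance), so the reward lies in (0, 1] and equals 1.0
    when the object sits exactly on the reference waypoint.
    """
    # Clamp t so the final waypoint is used once the reference trajectory ends.
    target = tracked_trajectory[min(t, len(tracked_trajectory) - 1)]
    # Euclidean distance between the simulated and reference object positions.
    dist = math.dist(sim_object_pos, target)
    return math.exp(-scale * dist)


# Usage: a reference trajectory that lifts the object 0.2 m along z.
trajectory = [(0.0, 0.0, 0.0), (0.0, 0.0, 0.1), (0.0, 0.0, 0.2)]
print(object_centric_reward((0.0, 0.0, 0.1), trajectory, t=1))
```

Because the reward is defined on the object rather than on the hand or the robot's joints, the same signal applies to any embodiment that moves the object along the reference path. This matches the abstract's motivation for using object motion as a transferable signal.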

MOSAIC: A Modular System for Assistive and Interactive Cooking

Feb 29, 2024

Abstract: We present MOSAIC, a modular architecture for home robots to perform complex collaborative tasks, such as cooking with everyday users. MOSAIC tightly collaborates with humans, interacts with users using natural language, coordinates multiple robots, and manages an open vocabulary of everyday objects. At its core, MOSAIC employs modularity: it leverages multiple large-scale pre-trained models for general tasks like language and image recognition, while using streamlined modules designed for task-specific control. We extensively evaluate MOSAIC on 60 end-to-end trials where two robots collaborate with a human user to cook a combination of 6 recipes. We also test individual modules with 180 episodes of visuomotor picking, 60 episodes of human motion forecasting, and 46 online user evaluations of the task planner. We show that MOSAIC is able to efficiently collaborate with humans by running the overall system end-to-end with a real human user, completing 68.3% (41/60) of collaborative cooking trials across 6 different recipes with a subtask completion rate of 91.6%. Finally, we discuss the limitations of the current system and exciting open challenges in this domain. The project's website is at https://portal-cornell.github.io/MOSAIC/

The feasibility of automated identification of six algae types using neural networks and fluorescence-based spectral-morphological features

May 03, 2018

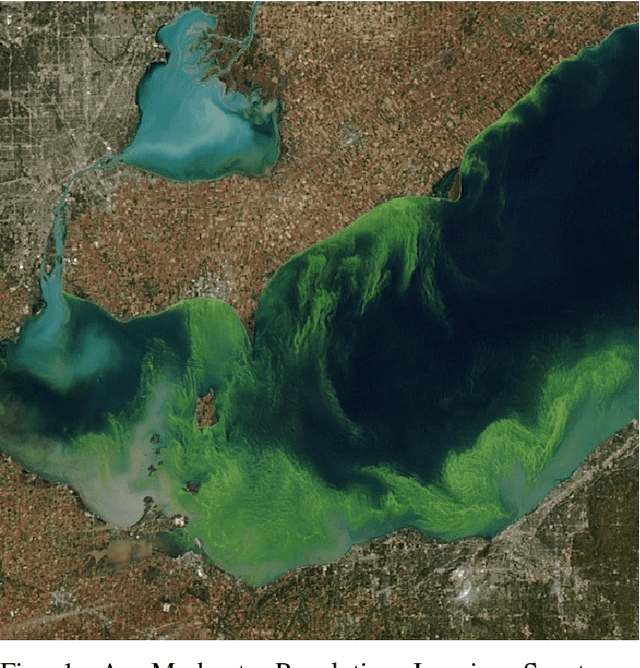

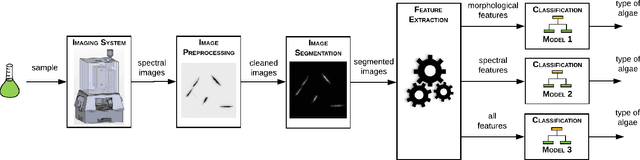

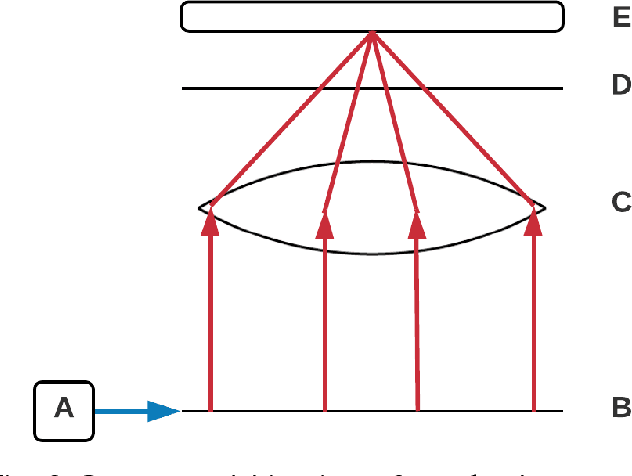

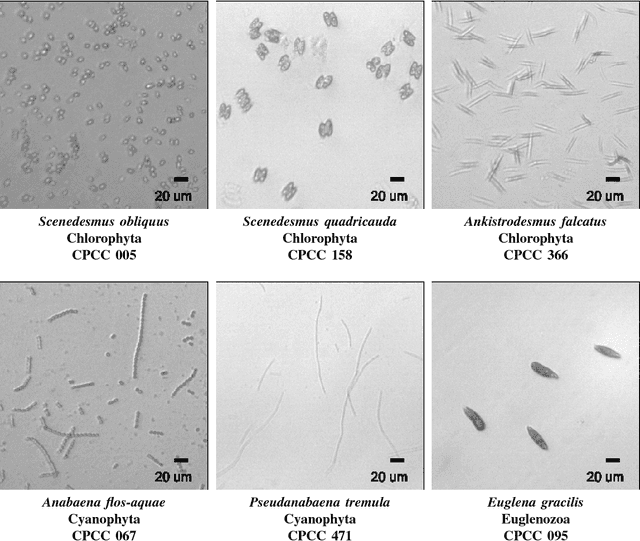

Abstract: Harmful algae blooms (HABs), which produce lethal toxins, are a growing global concern since they degrade the quality of drinking water and have a major negative impact on wildlife, the fishing industry, tourism, and recreational water use. In this study, we investigate the feasibility of leveraging machine learning and fluorescence-based spectral-morphological features to identify six different algae types in an automated fashion. More specifically, a custom multi-band fluorescence imaging microscope is used to capture fluorescence imaging data of a water sample at six different excitation wavelengths ranging from 405 nm to 530 nm. A number of morphological and spectral fluorescence features are then extracted from the isolated micro-organism imaging data and used to train neural network classification models that identify which of the six algae types an isolated micro-organism belongs to. Experimental results using three different neural network classification models showed that training on either fluorescence-based spectral features or fluorescence-based spectral-morphological features led to statistically significant improvements in identification accuracy compared with training on morphological features alone (average identification accuracies of 95.7% ± 3.5% and 96.1% ± 1.5%, respectively). These preliminary results are quite promising, given that the identification accuracy of human taxonomists typically ranges from 67% to 83%, and they illustrate the feasibility of leveraging machine learning and fluorescence-based spectral-morphological features as a viable method for automated identification of different algae types.
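The abstract names the two feature families but does not list the individual features. The Python sketch below shows one way such a combined spectral-morphological vector could be assembled. It assumes each isolated organism is described by its pixel intensities under each excitation band, plus its segmented area and perimeter. The function name and the choice of mean intensity and circularity as features are illustrative assumptions, not the study's feature set.

```python
import math
from typing import List, Sequence


def spectral_morphological_features(
    band_intensities: Sequence[Sequence[float]],
    area: float,
    perimeter: float,
) -> List[float]:
    """Hypothetical feature vector for one isolated micro-organism.

    band_intensities: one list of pixel intensities per excitation band
        (six bands in the study), restricted to the organism's pixels.
    area, perimeter: shape measurements of the segmented organism.
    """
    # Spectral part: mean fluorescence intensity under each excitation band.
    spectral = [sum(pixels) / len(pixels) for pixels in band_intensities]
    # Morphological part: size plus a circularity score (1.0 for a perfect disk).
    circularity = 4.0 * math.pi * area / perimeter ** 2
    return spectral + [area, perimeter, circularity]


# Usage: six bands with two pixels each, and the shape of a unit disk.
features = spectral_morphological_features(
    [[1.0, 3.0]] * 6, area=math.pi, perimeter=2 * math.pi
)
print(len(features))
```

Concatenating the two groups into one vector reflects the abstract's comparison. Dropping the spectral part leaves a morphology-only input, and dropping the shape terms leaves a spectral-only input, so the same classifier can be trained on each of the three feature sets.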
