Andrea Bandini

Multimodal Assessment of Speech Impairment in ALS Using Audio-Visual and Machine Learning Approaches

May 27, 2025

Abstract:The analysis of speech in individuals with amyotrophic lateral sclerosis is a powerful tool to support clinicians in the assessment of bulbar dysfunction. However, current methods used in clinical practice consist of subjective evaluations or expensive instrumentation. This study investigates different approaches combining audio-visual analysis and machine learning to predict the speech impairment evaluation performed by clinicians. Using a small dataset of acoustic and kinematic features extracted from audio and video recordings of speech tasks, we trained and tested several regression models. The best performance was achieved using the extreme boosting machine regressor with multimodal features, which resulted in a root mean squared error of 0.93 on a scale ranging from 5 to 25. Results suggest that integrating audio-video analysis enhances speech impairment assessment, providing an objective tool for early detection and monitoring of bulbar dysfunction, including in home settings.
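For readers unfamiliar with the reported metric, the root mean squared error is the square root of the mean squared difference between predicted and clinician-assigned scores. The sketch below is illustrative only: the function name and the example scores are made up and are not data or code from the study.

```python
import math

def rmse(y_true, y_pred):
    """Root mean squared error between two equal-length score lists."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    squared_errors = [(t - p) ** 2 for t, p in zip(y_true, y_pred)]
    return math.sqrt(sum(squared_errors) / len(squared_errors))

# Hypothetical clinician scores (5 to 25 scale) and model predictions.
clinician_scores = [5, 10, 25]
predicted_scores = [6, 10, 23]
error = rmse(clinician_scores, predicted_scores)
```

An error of 0.93 on a 5 to 25 scale means predictions deviate from the clinician score by roughly one point in the root-mean-square sense.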

Wearable Technologies for Monitoring Upper Extremity Functions During Daily Life in Neurologically Impaired Individuals

Nov 21, 2023

Abstract:Neurological disorders, including stroke, spinal cord injuries, multiple sclerosis, and Parkinson's disease, generally lead to diminished upper extremity (UE) function, impacting individuals' independence and quality of life. Traditional assessments predominantly focus on standardized clinical tasks, offering limited insights into real-life UE performance. In this context, this review focuses on wearable technologies as a promising solution to monitor UE function in neurologically impaired individuals during daily life activities. Our primary objective is to categorize the different sensors, data collection and data processing approaches employed. We found that the majority of studies involved stroke survivors and predominantly employed inertial measurement units and accelerometers to collect kinematics. Most analyses in these studies were performed offline, focusing on activity duration and frequency as key metrics. Although wearable technology shows potential in monitoring UE function in real-life scenarios, an ideal solution that combines non-intrusiveness, lightweight design, detailed hand and finger movement capture, contextual information, extended recording duration, ease of use, and privacy protection remains an elusive goal. Furthermore, there is a growing need for a multimodal approach to capturing comprehensive data on UE function during real-life activities, in order to enhance the personalization of rehabilitation strategies and ultimately improve outcomes for these individuals.

Video-Based Hand Pose Estimation for Remote Assessment of Bradykinesia in Parkinson's Disease

Aug 28, 2023

Abstract:There is a growing interest in using pose estimation algorithms for video-based assessment of Bradykinesia in Parkinson's Disease (PD) to facilitate remote disease assessment and monitoring. However, the accuracy of pose estimation algorithms in videos from video streaming services during Telehealth appointments has not been studied. In this study, we used seven off-the-shelf hand pose estimation models to estimate the movement of the thumb and index fingers in videos of the finger-tapping (FT) test recorded from Healthy Controls (HC) and participants with PD under two different conditions: streaming (videos recorded during a live Zoom meeting) and on-device (videos recorded locally with high-quality cameras). The accuracy and reliability of the models were estimated by comparing the models' output with manual results. Three of the seven models demonstrated good accuracy for on-device recordings, but accuracy decreased significantly for streaming recordings. We observed a negative correlation between movement speed and the models' accuracy for the streaming recordings. Additionally, we evaluated the reliability of ten movement features related to bradykinesia extracted from video recordings of PD patients performing the FT test. While most of the features demonstrated excellent reliability for on-device recordings, most demonstrated poor to moderate reliability for streaming recordings. Our findings highlight the limitations of pose estimation algorithms when applied to video recordings obtained during Telehealth visits, and demonstrate that on-device recordings can be used for automatic video-assessment of bradykinesia in PD.

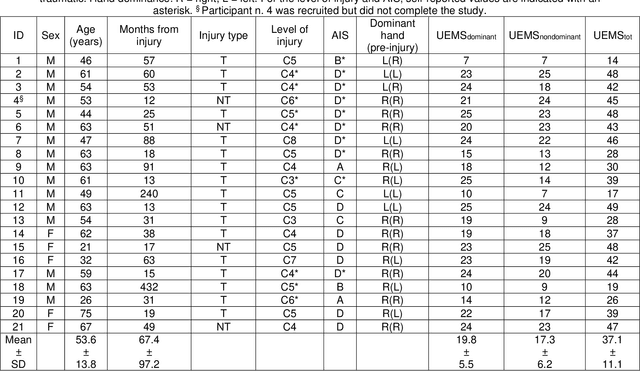

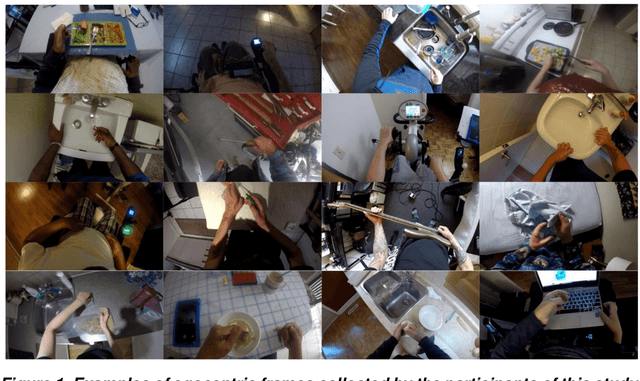

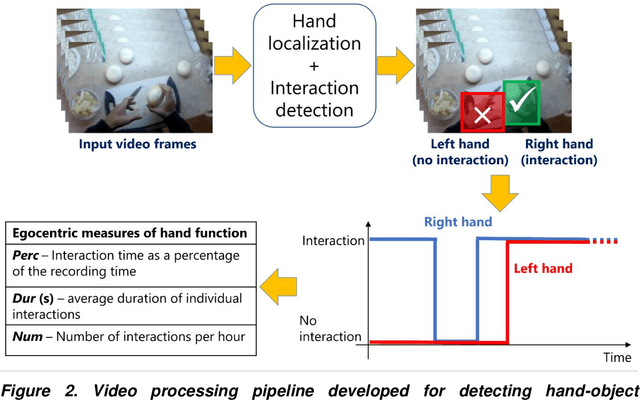

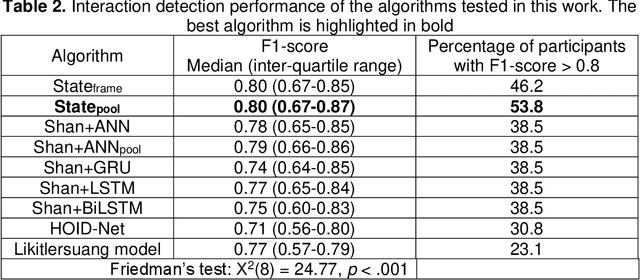

Measuring hand use in the home after cervical spinal cord injury using egocentric video

Mar 31, 2022

Abstract:Background: Egocentric video has recently emerged as a potential solution for monitoring hand function in individuals living with tetraplegia in the community, especially for its ability to detect functional use in the home environment. Objective: To develop and validate a wearable vision-based system for measuring hand use in the home among individuals living with tetraplegia. Methods: Several deep learning algorithms for detecting functional hand-object interactions were developed and compared. The most accurate algorithm was used to extract measures of hand function from 65 hours of unscripted video recorded at home by 20 participants with tetraplegia. These measures were: the percentage of interaction time over total recording time (Perc); the average duration of individual interactions (Dur); and the number of interactions per hour (Num). To demonstrate the clinical validity of the technology, egocentric measures were correlated with validated clinical assessments of hand function and independence (Graded Redefined Assessment of Strength, Sensibility and Prehension - GRASSP, Upper Extremity Motor Score - UEMS, and Spinal Cord Independence Measure - SCIM). Results: Hand-object interactions were automatically detected with a median F1-score of 0.80 (0.67-0.87). Our results demonstrated that higher UEMS and better prehension were related to greater time spent interacting, whereas higher SCIM and better hand sensation were associated with a higher number of interactions performed during the egocentric video recordings. Conclusions: For the first time, measures of hand function automatically estimated in an unconstrained environment in individuals with tetraplegia have been validated against internationally accepted measures of hand function. Future work will necessitate a formal evaluation of the reliability and responsiveness of the egocentric-based performance measures for hand use.
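The three measures defined in the Methods (Perc, Dur, Num) can be illustrated with a short sketch. It assumes the detector emits one binary label per video frame (1 for a hand-object interaction, 0 otherwise) and that the frame rate is known. The function name, input format, and example values are hypothetical and not taken from the paper.

```python
from itertools import groupby

def hand_use_measures(frame_labels, fps):
    """Compute Perc, Dur and Num from per-frame 0/1 interaction labels.

    frame_labels: sequence of 0/1 labels, one per frame (assumed format).
    fps: frames per second of the recording.
    """
    labels = list(frame_labels)
    if not labels or fps <= 0:
        raise ValueError("need at least one frame and a positive frame rate")

    # Length (in frames) of each run of consecutive interaction frames.
    runs = [sum(1 for _ in group) for key, group in groupby(labels) if key == 1]

    total_seconds = len(labels) / fps
    interaction_seconds = sum(runs) / fps

    perc = 100.0 * interaction_seconds / total_seconds        # % of recording time
    dur = interaction_seconds / len(runs) if runs else 0.0    # mean seconds per interaction
    num = len(runs) * 3600.0 / total_seconds                  # interactions per hour
    return {"Perc": perc, "Dur": dur, "Num": num}

# Toy example: 10 frames at 1 frame per second, two interactions (2 s and 4 s).
measures = hand_use_measures([0, 1, 1, 0, 0, 1, 1, 1, 1, 0], fps=1)
# measures == {"Perc": 60.0, "Dur": 3.0, "Num": 720.0}
```

In this sketch an interaction is simply a maximal run of consecutive positive frames; a real pipeline would likely smooth the per-frame predictions before counting runs.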

Automated pharyngeal phase detection and bolus localization in videofluoroscopic swallowing study: Killing two birds with one stone?

Nov 08, 2021

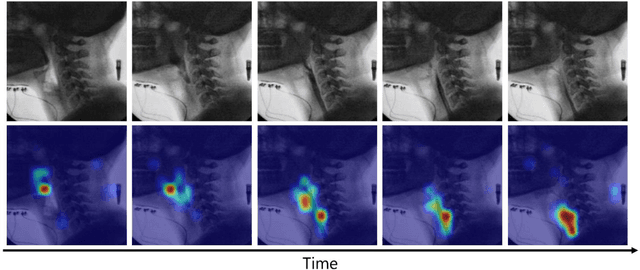

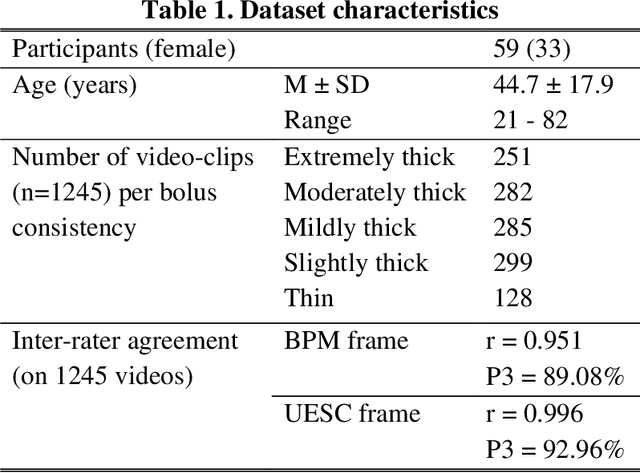

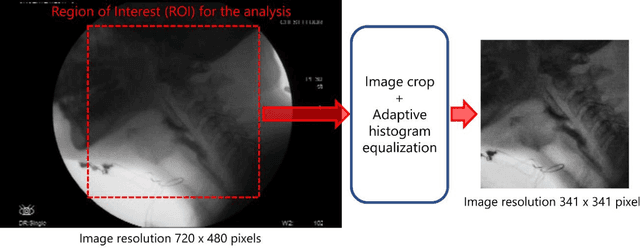

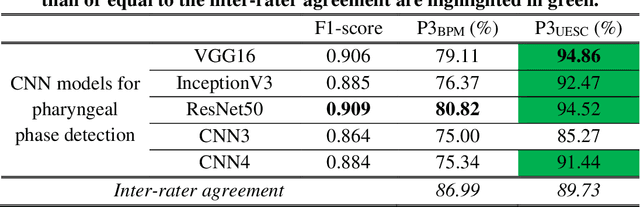

Abstract:The videofluoroscopic swallowing study (VFSS) is a gold-standard imaging technique for assessing swallowing, but analysis and rating of VFSS recordings is time-consuming and requires specialized training and expertise. Researchers have demonstrated that it is possible to automatically detect the pharyngeal phase of swallowing and to localize the bolus in VFSS recordings via computer vision approaches, fostering the development of novel techniques for automatic VFSS analysis. However, training of algorithms to perform these tasks requires large amounts of annotated data that are seldom available. We demonstrate that the challenges of pharyngeal phase detection and bolus localization can be solved together using a single approach. We propose a deep-learning framework that jointly tackles pharyngeal phase detection and bolus localization in a weakly-supervised manner, requiring only the initial and final frames of the pharyngeal phase as ground truth annotations for the training. Our approach stems from the observation that bolus presence in the pharynx is the most prominent visual feature upon which to infer whether individual VFSS frames belong to the pharyngeal phase. We conducted extensive experiments with multiple convolutional neural networks (CNNs) on a dataset of 1245 VFSS clips from 59 healthy subjects. We demonstrated that the pharyngeal phase can be detected with an F1-score higher than 0.9. Moreover, by processing the class activation maps of the CNNs, we were able to localize the bolus with promising results, obtaining correlations with ground truth trajectories higher than 0.9, without any manual annotations of bolus location used for training purposes. Once validated on a larger sample of participants with swallowing disorders, our framework will pave the way for the development of intelligent tools for VFSS analysis to support clinicians in swallowing assessment.
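The abstract does not say how the class activation maps are processed. As a hedged illustration only, one simple way to turn an activation map into a location estimate is an activation-weighted centroid over the strongest responses. The function name, the thresholding rule, and the toy map below are assumptions, not the authors' method.

```python
def cam_centroid(cam, threshold=0.5):
    """Activation-weighted centroid (row, col) of a 2D class activation map.

    cam: list of rows of non-negative activation values (assumed format).
    threshold: fraction of the peak activation below which cells are ignored.
    Returns None when the map has no positive activation.
    """
    peak = max(max(row) for row in cam)
    if peak <= 0:
        return None

    total = weighted_row = weighted_col = 0.0
    for r, row in enumerate(cam):
        for c, value in enumerate(row):
            if value >= threshold * peak:
                total += value
                weighted_row += r * value
                weighted_col += c * value
    return (weighted_row / total, weighted_col / total)

# Toy 3x3 map with two equally active cells in the middle row.
toy_cam = [
    [0.0, 0.0, 0.0],
    [0.0, 1.0, 1.0],
    [0.0, 0.0, 0.0],
]
location = cam_centroid(toy_cam)
# location == (1.0, 1.5)
```

Applying such an estimate frame by frame would yield a trajectory that could then be correlated with a ground truth trajectory, which is the kind of comparison the abstract reports.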

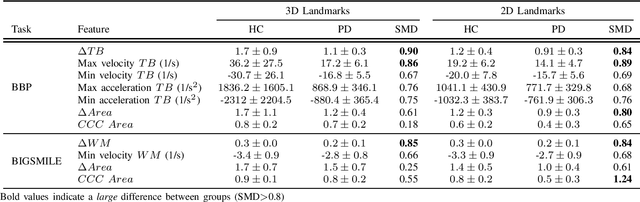

Estimation of Orofacial Kinematics in Parkinson's Disease: Comparison of 2D and 3D Markerless Systems for Motion Tracking

Mar 18, 2020

Abstract:Orofacial deficits are common in people with Parkinson's disease (PD) and their evolution might represent an important biomarker of disease progression. We are developing an automated system for assessment of orofacial function in PD that can be used in-home or in-clinic and can provide useful and objective clinical information that informs disease management. Our current approach relies on color and depth cameras for the estimation of 3D facial movements. However, depth cameras are not commonly available, might be expensive, and require specialized software for control and data processing. The objective of this paper was to evaluate whether depth cameras are needed to differentiate between healthy controls and PD patients based on features extracted from orofacial kinematics. Results indicate that 2D features, extracted from color cameras only, are as informative as 3D features, extracted from color and depth cameras, for differentiating healthy controls from PD patients. These results pave the way for the development of a universal system for automatic and objective assessment of orofacial function in PD.

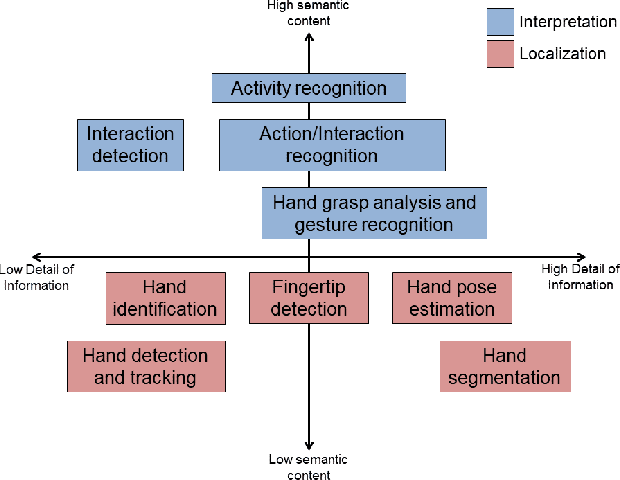

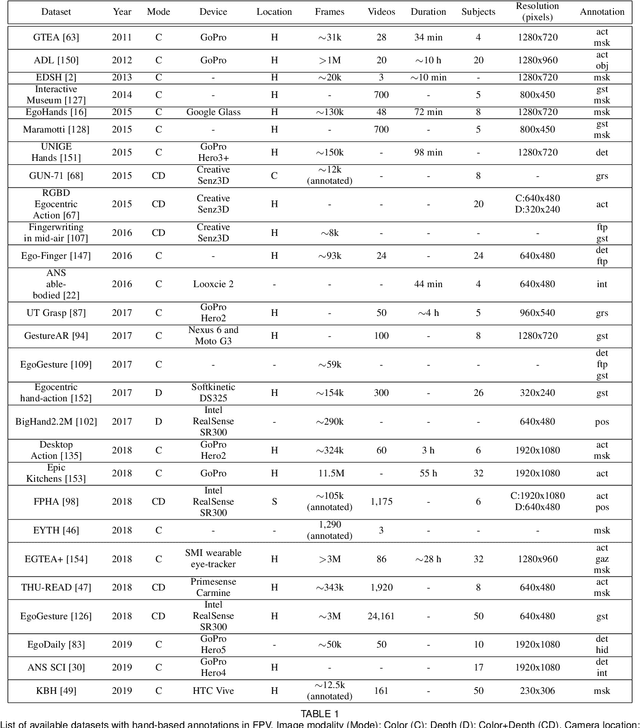

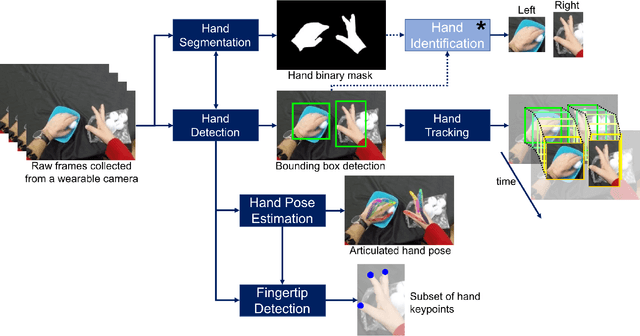

Analysis of the hands in egocentric vision: A survey

Dec 23, 2019

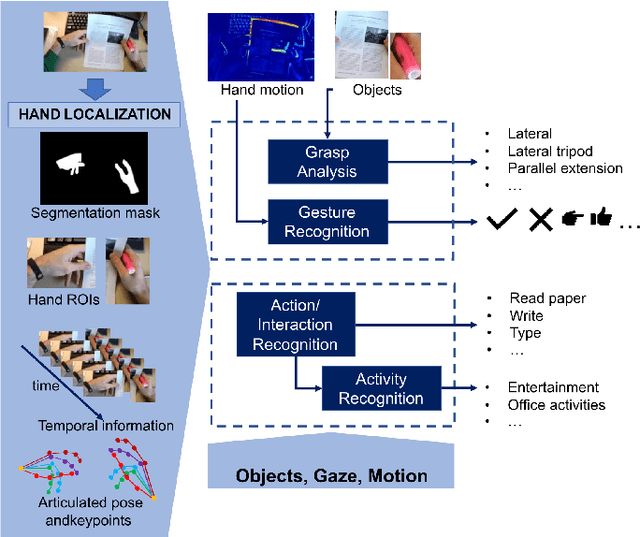

Abstract:Egocentric vision (a.k.a. first-person vision - FPV) applications have thrived over the past few years, thanks to the availability of affordable wearable cameras and large annotated datasets. The position of the wearable camera (usually mounted on the head) allows recording exactly what the camera wearers have in front of them, in particular hands and manipulated objects. This intrinsic advantage enables the study of the hands from multiple perspectives: localizing hands and their parts within the images; understanding what actions and activities the hands are involved in; and developing human-computer interfaces that rely on hand gestures. In this survey, we review the literature that focuses on the hands using egocentric vision, categorizing the existing approaches into: localization (where are the hands or part of them?); interpretation (what are the hands doing?); and application (e.g., systems that used egocentric hand cues for solving a specific problem). Moreover, a list of the most prominent datasets with hand-based annotations is provided.
