Amarsagar Reddy Ramapuram Matavalam

Machine Learning for Physical Simulation Challenge Results and Retrospective Analysis: Power Grid Use Case

May 02, 2025

Abstract: This paper addresses the growing computational challenges of power grid simulations, particularly with the increasing integration of renewable energy sources like wind and solar. As grid operators must analyze significantly more scenarios in near real-time to prevent failures and ensure stability, traditional physics-based simulations become computationally impractical. To tackle this, a competition was organized to develop AI-driven methods that accelerate power flow simulations by at least an order of magnitude while maintaining operational reliability. The competition used a regional-scale grid model with a 30% renewable energy mix, mirroring the anticipated near-future composition of the French power grid. A key contribution of this work is the use of LIPS (Learning Industrial Physical Systems), a benchmarking framework that evaluates solutions along four critical dimensions: machine learning performance, physical compliance, industrial readiness, and generalization to out-of-distribution scenarios. The paper provides a comprehensive overview of the Machine Learning for Physical Simulation (ML4PhySim) competition, detailing the benchmark suite, analyzing top-performing solutions that outperformed traditional simulation methods, and sharing key organizational insights and best practices for running large-scale AI competitions. Given the promising results achieved, the study aims to inspire further research into more efficient, scalable, and sustainable power network simulation methodologies.

Curriculum Based Reinforcement Learning of Grid Topology Controllers to Prevent Thermal Cascading

Dec 18, 2021

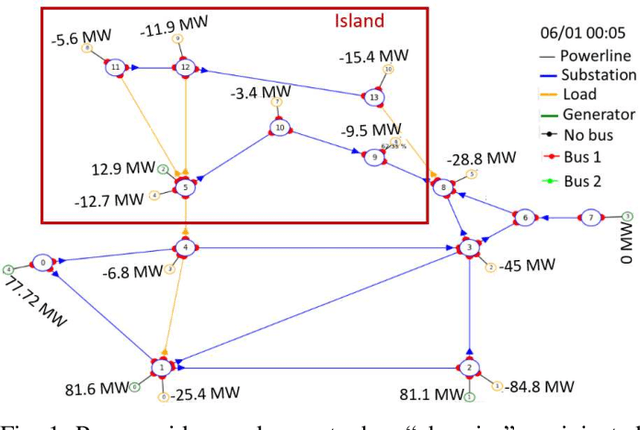

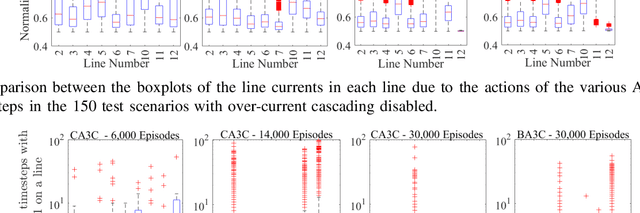

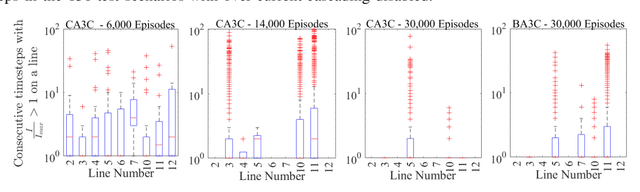

Abstract: This paper describes how the domain knowledge of power system operators can be integrated into reinforcement learning (RL) frameworks to effectively train agents that control the grid's topology to prevent thermal cascading. Typical RL-based topology controllers perform poorly because of the large search/optimization space. Here, we propose an actor-critic-based agent to address the problem's combinatorial nature and train the agent using the RL environment developed by RTE, the French TSO. To address the challenge of the large optimization space, a curriculum-based approach with reward tuning is incorporated into the training procedure by modifying the environment using network physics for enhanced agent learning. Further, a parallel training approach on multiple scenarios is employed to avoid biasing the agent toward a few scenarios and to make it robust to the natural variability in grid operations. Without these modifications to the training procedure, the RL agent failed on most test scenarios, illustrating the importance of properly integrating domain knowledge of physical systems for real-world RL. The agent was tested by RTE for the 2019 Learning to Run a Power Network challenge and was awarded 2nd place in accuracy and 1st place in speed. The developed code is open-sourced for public use.

Sparse evolutionary Deep Learning with over one million artificial neurons on commodity hardware

Jan 26, 2019

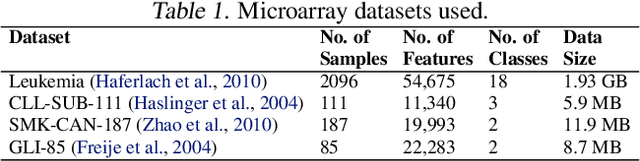

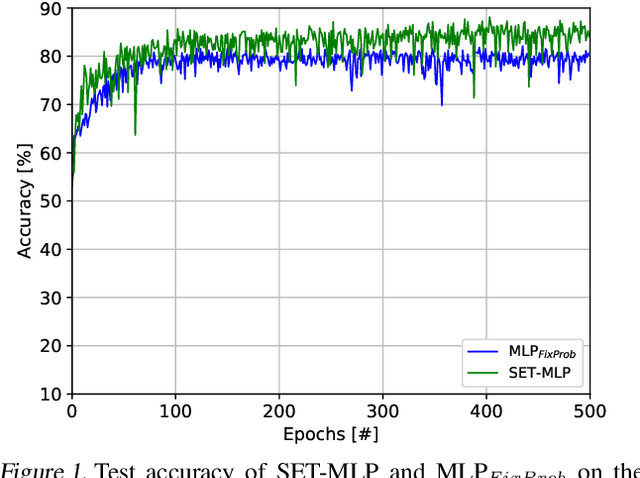

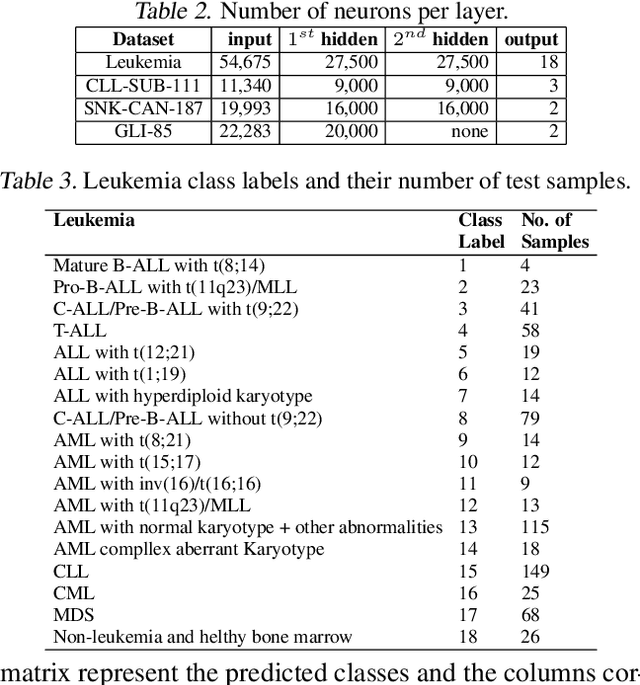

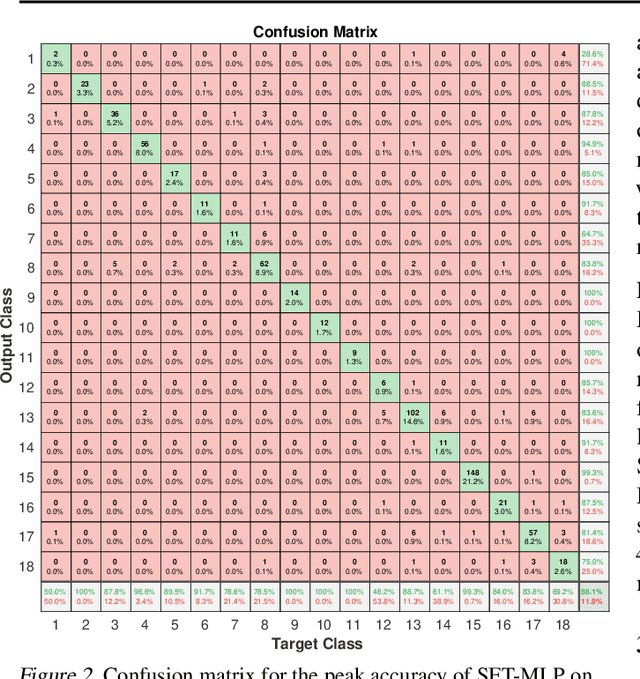

Abstract: Microarray gene expression has attracted wide attention as an efficient tool for cancer diagnosis and classification. However, the very high dimensionality and the small number of samples make it difficult for traditional machine learning algorithms to address this problem, because of the large amount of computation required and the risk of overfitting. So far, the existing approaches to processing microarray datasets are still far from satisfactory, and they employ two phases: feature selection (or extraction) followed by a machine learning algorithm. In this paper, we show that MultiLayer Perceptrons (MLPs) with adaptive sparse connectivity can handle this problem directly, without feature selection. Tested on four datasets, our novel results demonstrate that deep learning methods can also be applied directly to high-dimensional non-grid-like data, while learning from a small number of labeled examples with imbalanced classes and achieving better accuracy than the traditional two-phase approach. Moreover, we have been able to create sparse MLP models with over one million neurons and to train them on a typical laptop without a GPU. This is two orders of magnitude more than the largest MLPs that can currently run on commodity hardware.
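The abstract does not spell out how the sparse connectivity adapts, so the following is only a minimal sketch of one plausible scheme, assuming a magnitude-based prune-and-regrow step applied between training epochs. The function names, the dictionary representation, the 0.3 pruning fraction, and the layer sizes are all hypothetical choices for illustration, not taken from the paper.

```python
import random


def init_sparse_layer(n_in, n_out, n_connections, rng):
    """Create a sparse layer stored as {(input_idx, output_idx): weight}."""
    if n_connections > n_in * n_out:
        raise ValueError("more connections requested than positions available")
    weights = {}
    while len(weights) < n_connections:
        pos = (rng.randrange(n_in), rng.randrange(n_out))
        if pos not in weights:
            weights[pos] = rng.gauss(0.0, 0.1)
    return weights


def prune_and_regrow(weights, n_in, n_out, fraction, rng):
    """Drop the weakest connections and add the same number of random new ones.

    Assumed rule (hypothetical): rank connections by absolute weight,
    remove the lowest `fraction`, then regrow at unused positions so the
    total number of connections stays constant.
    """
    target = len(weights)
    n_prune = int(fraction * target)
    ranked = sorted(weights.items(), key=lambda kv: abs(kv[1]))
    kept = dict(ranked[n_prune:])
    while len(kept) < target:
        pos = (rng.randrange(n_in), rng.randrange(n_out))
        if pos not in kept:
            kept[pos] = rng.gauss(0.0, 0.1)
    return kept


rng = random.Random(0)
layer = init_sparse_layer(1000, 1000, 5000, rng)
layer = prune_and_regrow(layer, 1000, 1000, 0.3, rng)
print(len(layer))  # connection count is unchanged: 5000
```

Because only existing connections are stored, memory grows with the number of connections rather than with the product of the layer widths, which is the property that makes very wide layers feasible on modest hardware.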
