Alyssa Goodman

AstroLLaMA: Towards Specialized Foundation Models in Astronomy

Sep 12, 2023

Abstract: Large language models excel in many human-language tasks but often falter in highly specialized domains like scholarly astronomy. To bridge this gap, we introduce AstroLLaMA, a 7-billion-parameter model fine-tuned from LLaMA-2 using over 300,000 astronomy abstracts from arXiv. Optimized for traditional causal language modeling, AstroLLaMA achieves a 30% lower perplexity than LLaMA-2, showing marked domain adaptation. Our model produces more insightful and scientifically relevant text completions and embeddings than state-of-the-art foundation models, despite having significantly fewer parameters. AstroLLaMA serves as a robust, domain-specific model with broad fine-tuning potential. Its public release aims to spur astronomy-focused research, including automatic paper summarization and conversational agent development.

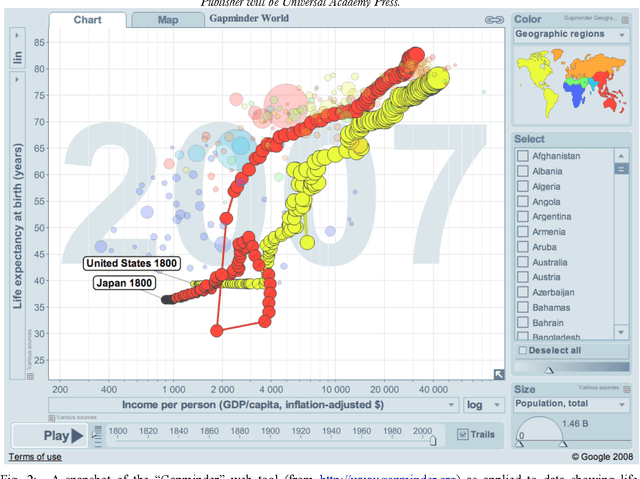

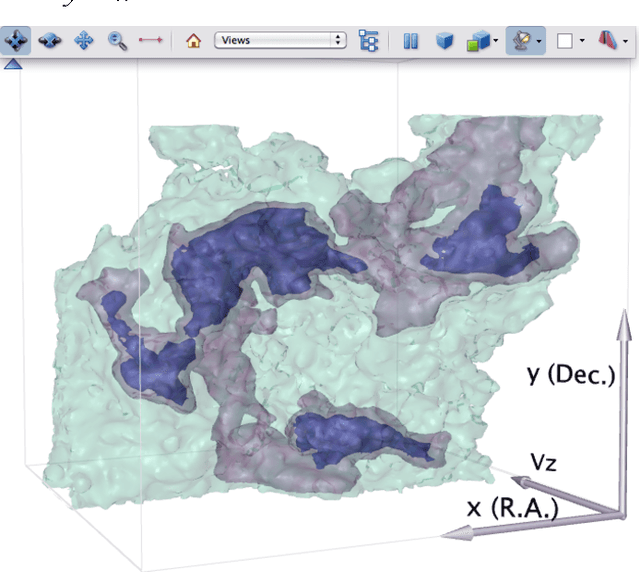

Seeing Science

Nov 17, 2009

Abstract: The ability to represent scientific data and concepts visually is becoming increasingly important due to the unprecedented exponential growth of computational power during the present digital age. The data sets and simulations scientists in all fields can now create are literally thousands of times as large as those created just 20 years ago. Historically successful methods for data visualization can, and should, be applied to today's huge data sets, but new approaches, also enabled by technology, are needed as well. Increasingly, "modular craftsmanship" will be applied, as relevant functionality from the graphically and technically best tools for a job is combined as needed, without low-level programming.
