Alwin Hoffmann

XITASO GmbH

SUNSET -- A Sensor-fUsioN based semantic SegmEnTation exemplar for ROS-based self-adaptation

Jan 20, 2026

Abstract: The fact that robots are getting deployed more often in dynamic environments, together with the increasing complexity of their software systems, raises the need for self-adaptive approaches. In these environments robotic software systems increasingly operate amid (1) uncertainties, where symptoms are easy to observe but root causes are ambiguous, or (2) multiple uncertainties appear concurrently. We present SUNSET, a ROS2-based exemplar that enables rigorous, repeatable evaluation of architecture-based self-adaptation in such conditions. It implements a sensor fusion semantic-segmentation pipeline driven by a trained Machine Learning (ML) model whose input preprocessing can be perturbed to induce realistic performance degradations. The exemplar exposes five observable symptoms, where each can be caused by different root causes and supports concurrent uncertainties spanning self-healing and self-optimisation. SUNSET includes the segmentation pipeline, a trained ML model, uncertainty-injection scripts, a baseline controller, and step-by-step integration and evaluation documentation to facilitate reproducible studies and fair comparison.

Combining Quality of Service and System Health Metrics in MAPE-K based ROS Systems through Behavior Trees

Apr 29, 2025

Abstract: In recent years, the field of robotics has witnessed a significant shift from operating in structured environments to handling dynamic and unpredictable settings. To tackle these challenges, methodologies from the field of self-adaptive systems enabling these systems to react to unforeseen circumstances during runtime have been applied. The Monitoring-Analysis-Planning-Execution over Knowledge (MAPE-K) feedback loop model is a popular approach, often implemented in a managing subsystem, responsible for monitoring and adapting a managed subsystem. This work explores the implementation of the MAPE-K feedback loop based on Behavior Trees (BTs) within the Robot Operating System 2 (ROS2) framework. By delineating the managed and managing subsystems, our approach enhances the flexibility and adaptability of ROS-based systems, ensuring they not only meet Quality-of-Service (QoS), but also system health metric requirements, namely availability of ROS nodes and communication channels. Our implementation allows for the application of the method to new managed subsystems without needing custom BT nodes as the desired behavior can be configured within a specific rule set. We demonstrate the effectiveness of our method through various experiments on a system showcasing an aerial perception use case. By evaluating different failure cases, we show both an increased perception quality and a higher system availability. Our code is open source.
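As a rough illustration of the rule-driven MAPE-K loop described in this abstract, the following sketch runs one monitor, analyze, plan, execute pass over a shared knowledge store. It is a plain-Python approximation, not the paper's Behavior Tree or ROS2 implementation; the metric names, thresholds, and action labels are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Rule:
    metric: str     # name of the monitored value (hypothetical)
    minimum: float  # the rule is violated when the value drops below this
    action: str     # label of the adaptation to trigger (hypothetical)


@dataclass
class Knowledge:
    rules: list
    metrics: dict = field(default_factory=dict)
    log: list = field(default_factory=list)


def monitor(knowledge, readings):
    # Store the latest readings from the managed subsystem.
    knowledge.metrics.update(readings)


def analyze(knowledge):
    # A metric that was never reported counts as violated.
    return [
        rule for rule in knowledge.rules
        if knowledge.metrics.get(rule.metric, float("-inf")) < rule.minimum
    ]


def plan(violations):
    # One action per violated rule, de-duplicated in rule order.
    return list(dict.fromkeys(rule.action for rule in violations))


def execute(knowledge, actions):
    # A real system would reconfigure nodes here; this sketch only records.
    knowledge.log.extend(actions)


def mape_k_step(knowledge, readings):
    monitor(knowledge, readings)
    actions = plan(analyze(knowledge))
    execute(knowledge, actions)
    return actions


rules = [
    Rule("segmentation_quality", 0.6, "switch_sensor_input"),  # QoS rule
    Rule("camera_node_alive", 1.0, "restart_camera_node"),     # health rule
]
kb = Knowledge(rules=rules)
healthy = mape_k_step(kb, {"segmentation_quality": 0.8, "camera_node_alive": 1.0})
degraded = mape_k_step(kb, {"segmentation_quality": 0.4, "camera_node_alive": 0.0})
print(healthy)   # []
print(degraded)  # ['switch_sensor_input', 'restart_camera_node']
```

Keeping the rules as data rather than code mirrors the abstract's point that new managed subsystems can be supported by editing a rule set instead of writing custom nodes.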

Masked Autoencoder Self Pre-Training for Defect Detection in Microelectronics

Apr 14, 2025

Abstract: Whereas in general computer vision, transformer-based architectures have quickly become the gold standard, microelectronics defect detection still heavily relies on convolutional neural networks (CNNs). We hypothesize that this is due to the fact that a) transformers have an increased need for data and b) labelled image generation procedures for microelectronics are costly, and labelled data is therefore sparse. Whereas in other domains, pre-training on large natural image datasets can mitigate this problem, in microelectronics transfer learning is hindered due to the dissimilarity of domain data and natural images. Therefore, we evaluate self pre-training, where models are pre-trained on the target dataset, rather than another dataset. We propose a vision transformer (ViT) pre-training framework for defect detection in microelectronics based on masked autoencoders (MAE). In MAE, a large share of image patches is masked and reconstructed by the model during pre-training. We perform pre-training and defect detection using a dataset of less than 10,000 scanning acoustic microscopy (SAM) images labelled using transient thermal analysis (TTA). Our experimental results show that our approach leads to substantial performance gains compared to a) supervised ViT, b) ViT pre-trained on natural image datasets, and c) state-of-the-art CNN-based defect detection models used in the literature. Additionally, interpretability analysis reveals that our self pre-trained models, in comparison to ViT baselines, correctly focus on defect-relevant features such as cracks in the solder material. This demonstrates that our approach yields fault-specific feature representations, making our self pre-trained models viable for real-world defect detection in microelectronics.
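The masking step mentioned in this abstract can be sketched as a uniform random split of patch indices into a visible set, which the encoder would see, and a masked set, which the model would have to reconstruct. This is a minimal sketch only: the mask ratio of 0.75, the image size, and the patch size are illustrative assumptions, not values stated in the abstract.

```python
import random


def random_patch_mask(num_patches, mask_ratio=0.75, seed=None):
    """Split patch indices into (visible, masked) by uniform random sampling."""
    if not 0.0 <= mask_ratio < 1.0:
        raise ValueError("mask_ratio must be in [0, 1)")
    rng = random.Random(seed)
    indices = list(range(num_patches))
    rng.shuffle(indices)
    num_masked = int(num_patches * mask_ratio)
    masked = sorted(indices[:num_masked])    # hidden from the encoder
    visible = sorted(indices[num_masked:])   # the only patches the encoder sees
    return visible, masked


# Assumed example: a 224x224 image cut into 16x16 patches gives 14 * 14 = 196 patches.
visible, masked = random_patch_mask(num_patches=196, mask_ratio=0.75, seed=0)
print(len(visible), len(masked))  # 49 147
```

Because the encoder only processes the visible patches, a high mask ratio also reduces pre-training cost, which matters when the target dataset is small.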

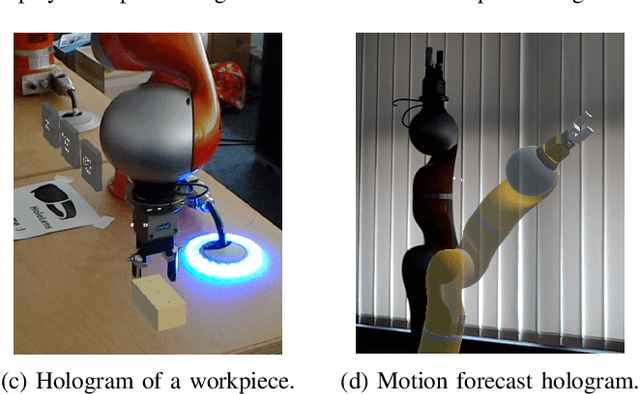

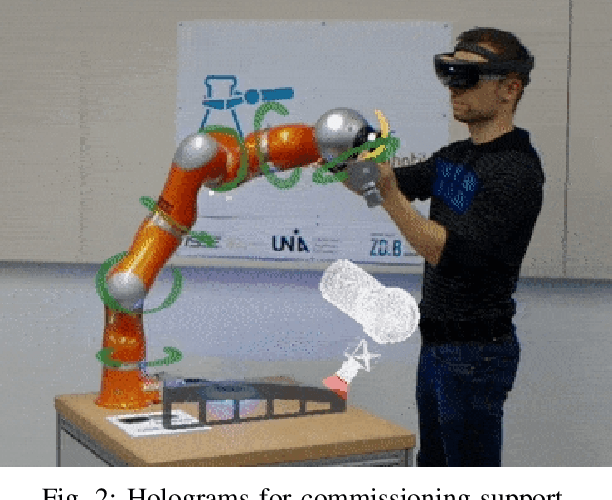

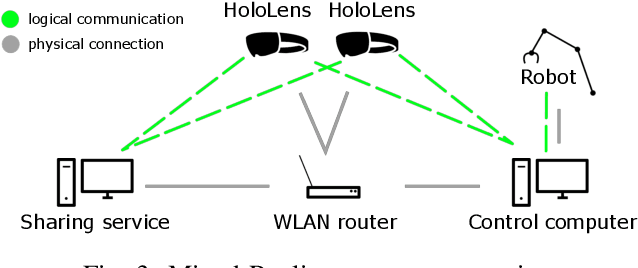

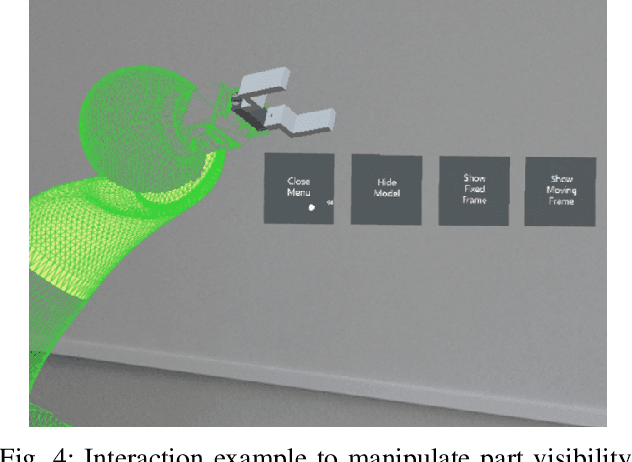

Opportunities and Limitations of Mixed Reality Holograms in Industrial Robotics

Jan 22, 2020

Abstract: This paper introduces two case studies combining the field of industrial robotics with Mixed Reality (MR). The goal of those case studies is to get a better understanding of how MR can be useful and what its limitations are. The first case study describes an approach to visualize the digital twin of a robot arm. The second case study aims at facilitating the commissioning of industrial robots. Furthermore, this paper reports the experiences gained by implementing those two scenarios and discusses the limitations.

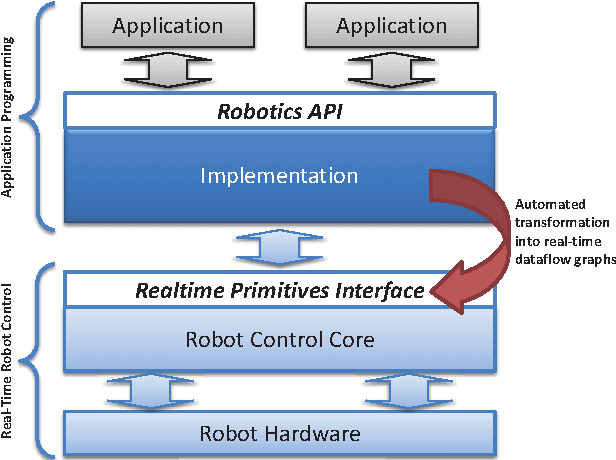

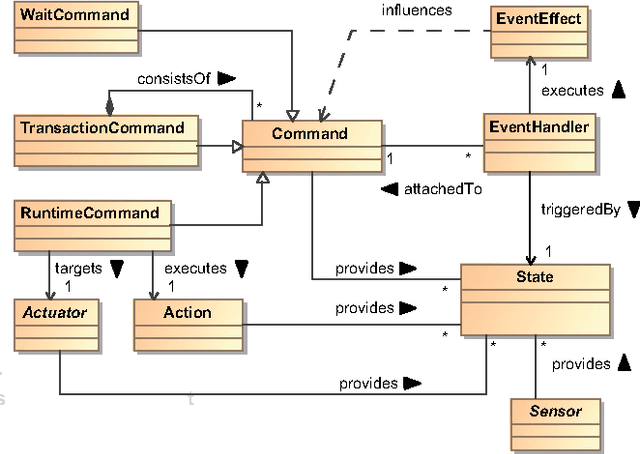

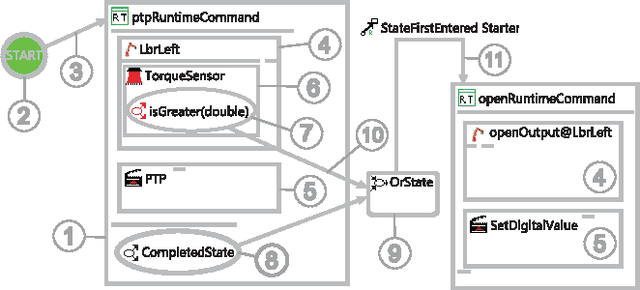

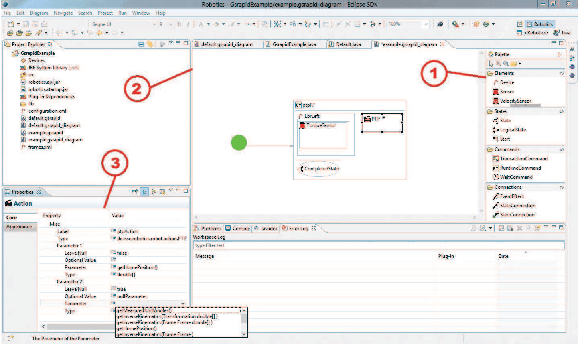

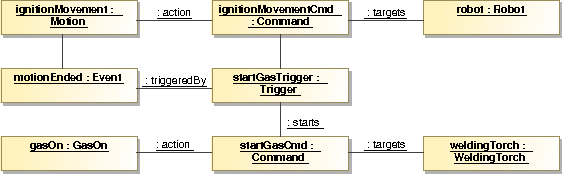

A Graphical Language for Real-Time Critical Robot Commands

Mar 27, 2013

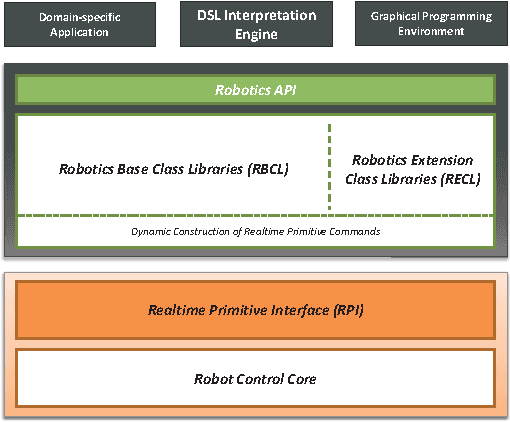

Abstract: Industrial robotics is characterized by sophisticated mechanical components and highly-developed real-time control algorithms. However, the efficient use of robotic systems is very much limited by existing proprietary programming methods. In the research project SoftRobot, a software architecture was developed that enables the programming of complex real-time critical robot tasks with an object-oriented general purpose language. On top of this architecture, a graphical language was developed to ease the specification of complex robot commands, which can then be used as part of robot application workflows. This paper gives an overview of the design and implementation of this graphical language and illustrates its usefulness with some examples.

On reverse-engineering the KUKA Robot Language

Sep 25, 2010

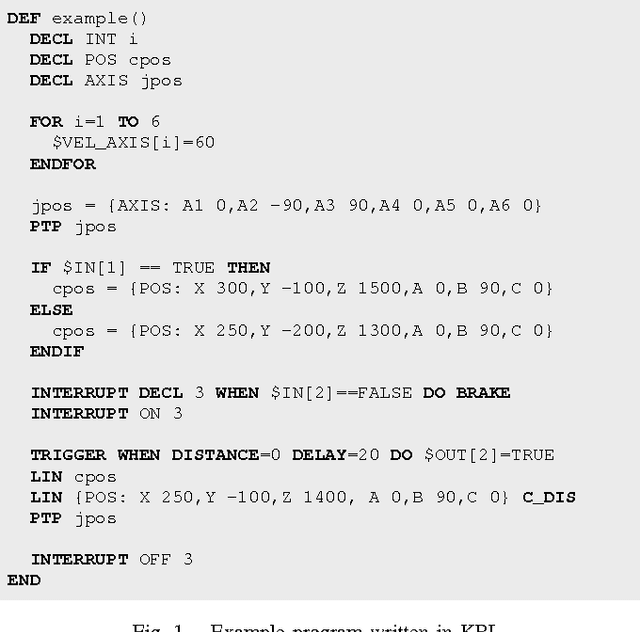

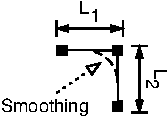

Abstract: Most commercial manufacturers of industrial robots require their robots to be programmed in a proprietary language tailored to the domain - a typical domain-specific language (DSL). However, these languages oftentimes suffer from shortcomings such as controller-specific design, limited expressiveness and a lack of extensibility. For that reason, we developed the extensible Robotics API for programming industrial robots on top of a general-purpose language. Although this is a very flexible approach to programming industrial robots, a fully-fledged language can be too complex for simple tasks. Additionally, legacy support for code written in the original DSL has to be maintained. For these reasons, we present a lightweight implementation of a typical robotic DSL, the KUKA Robot Language (KRL), on top of our Robotics API. This work deals with the challenges in reverse-engineering the language and mapping its specifics to the Robotics API. We introduce two different approaches of interpreting and executing KRL programs: tree-based and bytecode-based interpretation.
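The difference between the two interpretation strategies named in this abstract can be illustrated on a toy arithmetic language. The sketch below is not KRL and not the Robotics API; the tuple-based tree format and the `PUSH`/`ADD`/`MUL` instruction set are invented for illustration. The tree-based interpreter evaluates the syntax tree directly by recursion, while the bytecode-based one first flattens the tree into linear instructions for a small stack machine.

```python
# Toy syntax tree: ("num", value) or ("add" | "mul", left, right).

def eval_tree(node):
    """Tree-based interpretation: recurse over the syntax tree."""
    kind = node[0]
    if kind == "num":
        return node[1]
    left, right = eval_tree(node[1]), eval_tree(node[2])
    if kind == "add":
        return left + right
    if kind == "mul":
        return left * right
    raise ValueError(f"unknown node kind: {kind}")


def compile_tree(node, code=None):
    """Flatten the tree into postfix stack-machine instructions."""
    code = [] if code is None else code
    if node[0] == "num":
        code.append(("PUSH", node[1]))
    else:
        compile_tree(node[1], code)
        compile_tree(node[2], code)
        code.append((node[0].upper(), None))
    return code


def run_bytecode(code):
    """Bytecode-based interpretation: execute instructions on a stack."""
    stack = []
    for op, arg in code:
        if op == "PUSH":
            stack.append(arg)
            continue
        right = stack.pop()
        left = stack.pop()
        if op == "ADD":
            stack.append(left + right)
        elif op == "MUL":
            stack.append(left * right)
        else:
            raise ValueError(f"unknown opcode: {op}")
    return stack.pop()


program = ("add", ("num", 2), ("mul", ("num", 3), ("num", 4)))  # 2 + 3 * 4
bytecode = compile_tree(program)
print(eval_tree(program))      # 14
print(run_bytecode(bytecode))  # 14
```

Both paths give the same result; the trade-off is that the tree walker is simpler to write, whereas the compiled form separates parsing from execution and can be stored or re-run without revisiting the tree.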
