Allen Hao Huang

Apertus: Democratizing Open and Compliant LLMs for Global Language Environments

Sep 17, 2025

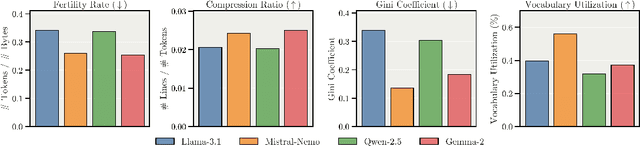

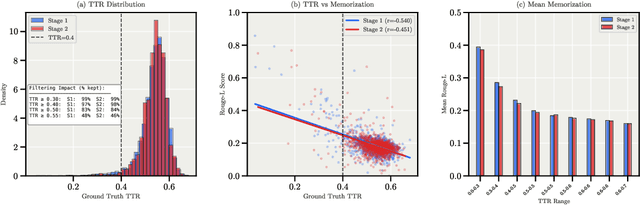

Abstract: We present Apertus, a fully open suite of large language models (LLMs) designed to address two systemic shortcomings in today's open model ecosystem: data compliance and multilingual representation. Unlike many prior models that release weights without reproducible data pipelines or regard for content-owner rights, Apertus models are pretrained exclusively on openly available data, retroactively respecting robots.txt exclusions and filtering for non-permissive, toxic, and personally identifiable content. To mitigate risks of memorization, we adopt the Goldfish objective during pretraining, strongly suppressing verbatim recall of data while retaining downstream task performance. The Apertus models also expand multilingual coverage, training on 15T tokens from over 1800 languages, with ~40% of pretraining data allocated to non-English content. Released at 8B and 70B scales, Apertus approaches state-of-the-art results among fully open models on multilingual benchmarks, rivalling or surpassing open-weight counterparts. Beyond model weights, we release all scientific artifacts from our development cycle with a permissive license, including data preparation scripts, checkpoints, evaluation suites, and training code, enabling transparent audit and extension.
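The Goldfish objective mentioned above can be illustrated with a small sketch. The abstract does not describe how Apertus configures it, so the version below assumes the simplest variant of the idea: a fixed subset of token positions (here every `k`-th one) is excluded from the next-token loss, so the model never receives a training signal for reproducing a passage in full. The function names and the drop interval `k=4` are hypothetical choices made for illustration only.

```python
def goldfish_mask(num_tokens, k=4):
    """Return one boolean per token: True means the token counts toward the loss.

    Assumed static variant: every k-th position is dropped.
    """
    return [(i + 1) % k != 0 for i in range(num_tokens)]


def masked_nll(token_log_probs, mask):
    """Average negative log-likelihood over the kept tokens only."""
    kept = [-lp for lp, keep in zip(token_log_probs, mask) if keep]
    return sum(kept) / len(kept)


# Per-token log-probabilities as a language model might produce them.
log_probs = [-1.0, -2.0, -3.0, -100.0]
mask = goldfish_mask(len(log_probs), k=4)
loss = masked_nll(log_probs, mask)  # the fourth token is ignored
```

In this toy input the fourth token has a very poor log-probability, yet it does not affect the loss because its position is masked out.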

Deriving Activation Functions via Integration

Nov 20, 2024

Abstract: Activation functions play a crucial role in introducing non-linearities to deep neural networks. We propose a novel approach to designing activation functions by focusing on their gradients and deriving the corresponding functions through integration. Our work introduces the Expanded Integral of the Exponential Linear Unit (xIELU), a trainable piecewise activation function derived by integrating trainable affine transformations applied to the ELU activation function. xIELU combines two key gradient properties: a trainable and linearly increasing gradient for positive inputs, similar to ReLU$^2$, and a trainable negative gradient flow for negative inputs, akin to xSiLU. Conceptually, xIELU can be viewed as extending ReLU$^2$ to effectively handle negative inputs. In experiments with 1.1B-parameter Llama models trained on 126B tokens of FineWeb Edu, xIELU achieves lower perplexity than both ReLU$^2$ and SwiGLU when matched for the same compute cost and parameter count.
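The integration step described above can be sketched in a few lines. The sketch assumes the gradient is an affine transformation of ELU on each side of zero, namely `2 * alpha_p * x + beta` for positive inputs and `alpha_n * (exp(x) - 1) + beta` otherwise, and integrates it with the constant chosen so that the function is zero and continuous at the origin. The parameter names, their default values, and the use of plain floats instead of trainable parameters are assumptions for illustration; the abstract does not specify the exact parameterization.

```python
import math


def xielu_grad(x, alpha_p=0.8, alpha_n=0.8, beta=0.5):
    """Assumed gradient: an affine transformation of ELU on each side of zero."""
    if x > 0:
        return 2.0 * alpha_p * x + beta  # linearly increasing, as in ReLU^2
    return alpha_n * math.expm1(x) + beta  # non-zero gradient for negative inputs


def xielu(x, alpha_p=0.8, alpha_n=0.8, beta=0.5):
    """Integral of xielu_grad with the integration constant fixed so xielu(0) == 0."""
    if x > 0:
        return alpha_p * x * x + beta * x
    # The integral of alpha_n * (exp(x) - 1) is alpha_n * (exp(x) - x) + C, with C = -alpha_n.
    return alpha_n * (math.expm1(x) - x) + beta * x


# A central finite difference should agree with the analytic gradient.
h = 1e-6
numeric = (xielu(-1.0 + h) - xielu(-1.0 - h)) / (2.0 * h)
analytic = xielu_grad(-1.0)
```

Both branches give a gradient of `beta` at zero, so the function and its first derivative are continuous there under these assumptions.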

Expanded Gating Ranges Improve Activation Functions

May 25, 2024

Abstract: Activation functions are core components of all deep learning architectures. Currently, the most popular activation functions are smooth ReLU variants like GELU and SiLU. These are self-gated activation functions where the range of the gating function is between zero and one. In this paper, we explore the viability of using arctan as a gating mechanism. A self-gated activation function that uses arctan as its gating function has a monotonically increasing first derivative. To make this activation function competitive, it is necessary to introduce a trainable parameter for every MLP block to expand the range of the gating function beyond zero and one. We find that this technique also improves existing self-gated activation functions. We conduct an empirical evaluation of Expanded ArcTan Linear Unit (xATLU), Expanded GELU (xGELU), and Expanded SiLU (xSiLU) and show that they outperform existing activation functions within a transformer architecture. Additionally, expanded gating ranges show promising results in improving first-order Gated Linear Units (GLU).
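A minimal sketch of the expanded-gating idea follows. It assumes one natural way to widen a gate: a gate value `g` in (0, 1) is mapped linearly to the interval (-alpha, 1 + alpha) by `g * (1 + 2 * alpha) - alpha`, where `alpha` stands in for the trainable per-block parameter. The rescaling of arctan into (0, 1), the helper names, and the use of a plain float for `alpha` are assumptions for illustration, not details taken from the abstract.

```python
import math


def sigmoid(x):
    """Numerically stable logistic function, the gate used by SiLU."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def arctan_gate(x):
    """arctan shifted and scaled into (0, 1) so it can act as a gate (assumed form)."""
    return (math.atan(x) + math.pi / 2.0) / math.pi


def expand_gate(g, alpha):
    """Map a gate value in (0, 1) to (-alpha, 1 + alpha)."""
    return g * (1.0 + 2.0 * alpha) - alpha


def xsilu(x, alpha):
    """Self-gated unit with an expanded sigmoid gate."""
    return x * expand_gate(sigmoid(x), alpha)


def xatlu(x, alpha):
    """Self-gated unit with an expanded arctan gate."""
    return x * expand_gate(arctan_gate(x), alpha)
```

With `alpha = 0` the expanded gate reduces to the original one, so `xsilu(x, 0.0)` equals plain SiLU. With a positive `alpha` the gate can go below zero, which lets the unit pass a negative gradient for strongly negative inputs.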
