Alice Le Brigant

UP1

On the Wasserstein Geodesic Principal Component Analysis of probability measures

Jun 04, 2025

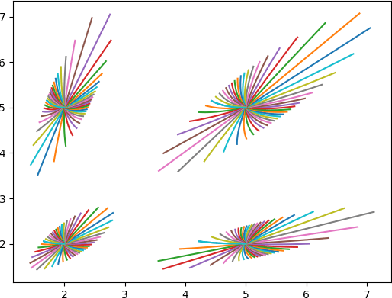

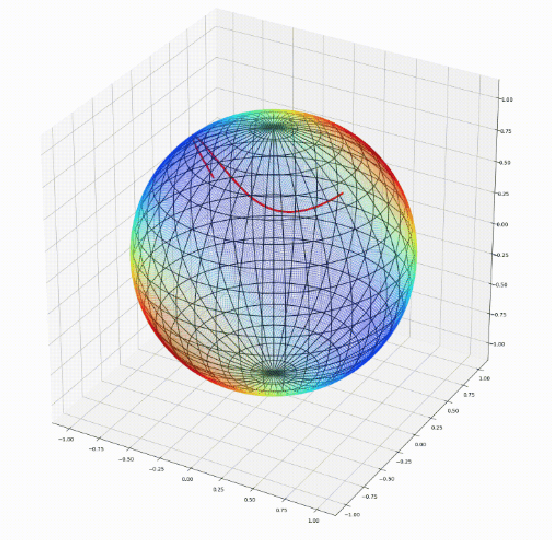

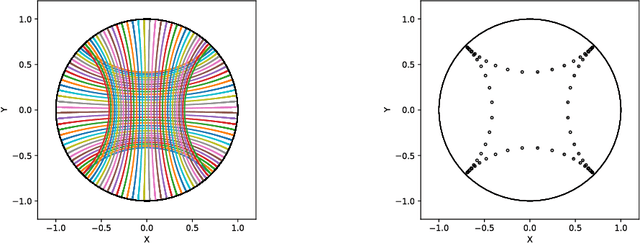

Abstract: This paper focuses on Geodesic Principal Component Analysis (GPCA) on a collection of probability distributions using the Otto-Wasserstein geometry. The goal is to identify geodesic curves in the space of probability measures that best capture the modes of variation of the underlying dataset. We first address the case of a collection of Gaussian distributions, and show how to lift the computations to the space of invertible linear maps. For the more general setting of absolutely continuous probability measures, we leverage a novel approach to parameterizing geodesics in Wasserstein space with neural networks. Finally, we compare to classical tangent PCA through various examples and provide illustrations on real-world datasets.
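
To make the notion of a Wasserstein geodesic between Gaussians concrete, here is a minimal sketch restricted to the one-dimensional case, where the 2-Wasserstein distance and geodesic have a simple closed form. It is not the paper's GPCA method; the function names `w2_gaussian_1d` and `geodesic_gaussian_1d` are hypothetical and chosen only for illustration.

```python
import math


def w2_gaussian_1d(m1, s1, m2, s2):
    """2-Wasserstein distance between N(m1, s1^2) and N(m2, s2^2).

    In one dimension: W2^2 = (m1 - m2)^2 + (s1 - s2)^2.
    """
    return math.hypot(m1 - m2, s1 - s2)


def geodesic_gaussian_1d(m1, s1, m2, s2, t):
    """(mean, std) of the Gaussian at time t in [0, 1] on the W2 geodesic.

    In one dimension the mean and the standard deviation both
    interpolate linearly along the geodesic.
    """
    return ((1 - t) * m1 + t * m2, (1 - t) * s1 + t * s2)


# Illustrative values only: go from N(0, 1^2) to N(3, 5^2).
total = w2_gaussian_1d(0.0, 1.0, 3.0, 5.0)
mid = geodesic_gaussian_1d(0.0, 1.0, 3.0, 5.0, 0.5)
half = w2_gaussian_1d(0.0, 1.0, *mid)
```

On a geodesic, the midpoint should sit at half the total distance from the start, which is what `half` and `total` let one check.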

Parametric information geometry with the package Geomstats

Nov 21, 2022

Abstract: We introduce the information geometry module of the Python package Geomstats. The module first implements Fisher-Rao Riemannian manifolds of widely used parametric families of probability distributions, such as normal, gamma, beta, Dirichlet distributions, and more. The module further gives the Fisher-Rao Riemannian geometry of any parametric family of distributions of interest, given a parameterized probability density function as input. The implemented Riemannian geometry tools allow users to compare, average, and interpolate between distributions inside a given family. Importantly, such capabilities open the door to statistics and machine learning on probability distributions. We present the object-oriented implementation of the module along with illustrative examples and show how it can be used to perform learning on manifolds of parametric probability distributions.
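
As an illustration of what comparing distributions under the Fisher-Rao geometry means, the sketch below evaluates the closed-form Fisher-Rao distance for the univariate normal family, whose metric in coordinates (mu, sigma) is ds^2 = (dmu^2 + 2 dsigma^2) / sigma^2. It deliberately does not call Geomstats, so it makes no claim about that package's API; `fisher_rao_normal` is a hypothetical helper name.

```python
import math


def fisher_rao_normal(mu1, sigma1, mu2, sigma2):
    """Fisher-Rao distance between N(mu1, sigma1^2) and N(mu2, sigma2^2).

    After rescaling mu by 1/sqrt(2), the metric is twice the Poincare
    half-plane metric, hence the hyperbolic arccosh formula below.
    """
    if sigma1 <= 0 or sigma2 <= 0:
        raise ValueError("standard deviations must be positive")
    num = 0.5 * (mu1 - mu2) ** 2 + (sigma1 - sigma2) ** 2
    return math.sqrt(2.0) * math.acosh(1.0 + num / (2.0 * sigma1 * sigma2))


# With equal means the distance reduces to sqrt(2) * |log(sigma2 / sigma1)|.
d_scale = fisher_rao_normal(0.0, 1.0, 0.0, math.e)
```

One consequence visible in the formula: a fixed shift of the mean counts for less when both standard deviations are large, which a Euclidean distance on (mu, sigma) would not capture.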

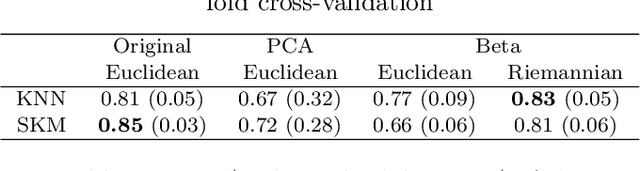

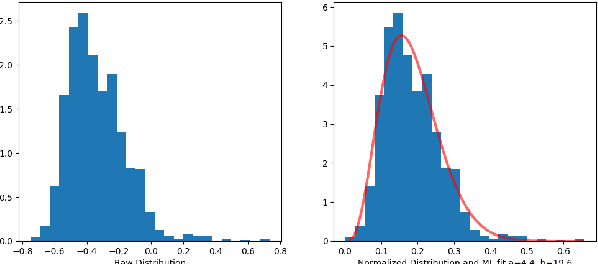

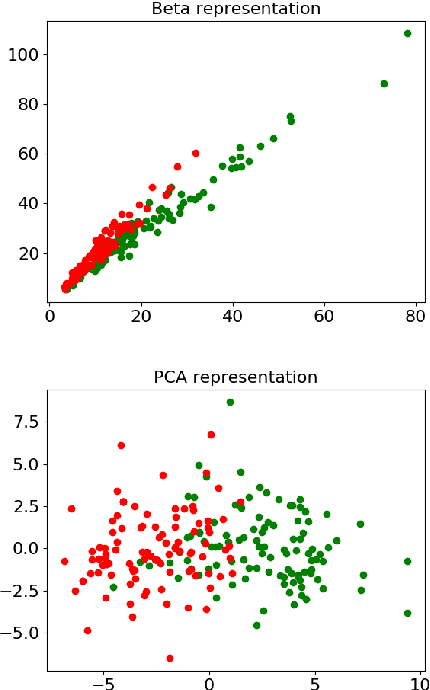

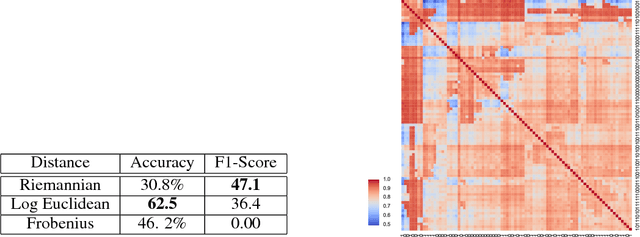

Classifying histograms of medical data using information geometry of beta distributions

Jun 03, 2020

Abstract: In this paper, we use tools of information geometry to compare, average and classify histograms. Beta distributions are fitted to the histograms and the corresponding Fisher information geometry is used for comparison. We show that this geometry is negatively curved, which guarantees uniqueness of the notion of mean, and makes it suitable to classify histograms through the popular K-means algorithm. We illustrate the use of these geometric tools in supervised and unsupervised classification procedures on two medical datasets: cardiac shape deformations for the detection of pulmonary hypertension, and brain cortical thickness for the diagnosis of Alzheimer's disease.
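
The fitting step can be sketched as follows. The abstract does not say which estimator is used, so the method of moments below is an assumption made purely for illustration, and `fit_beta_moments` is a hypothetical name. The input is a list of raw values in (0, 1) underlying one histogram.

```python
from statistics import fmean, pvariance


def fit_beta_moments(samples):
    """Method-of-moments estimate of Beta(a, b) parameters.

    Uses mean m and variance v of the samples:
        a = m * (m * (1 - m) / v - 1)
        b = (1 - m) * (m * (1 - m) / v - 1)
    """
    m = fmean(samples)
    v = pvariance(samples, mu=m)
    # A beta distribution requires 0 < m < 1 and 0 < v < m * (1 - m).
    if not 0.0 < m < 1.0 or not 0.0 < v < m * (1.0 - m):
        raise ValueError("sample moments are not compatible with a beta law")
    common = m * (1.0 - m) / v - 1.0
    return m * common, (1.0 - m) * common


# Illustrative data only: mean 0.5 and variance 0.05 give a = b = 2.
a, b = fit_beta_moments([0.2, 0.4, 0.6, 0.8])
```

Each histogram is then represented by a single point (a, b), and it is on these points that a Fisher information geometry can be used for comparison and clustering.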

Geomstats: A Python Package for Riemannian Geometry in Machine Learning

Apr 07, 2020

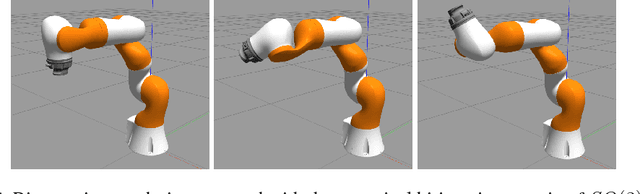

Abstract: We introduce Geomstats, an open-source Python toolbox for computations and statistics on nonlinear manifolds, such as hyperbolic spaces, spaces of symmetric positive definite matrices, Lie groups of transformations, and many more. We provide object-oriented and extensively unit-tested implementations. Among others, manifolds come equipped with families of Riemannian metrics, with associated exponential and logarithmic maps, geodesics and parallel transport. Statistics and learning algorithms provide methods for estimation, clustering and dimension reduction on manifolds. All associated operations are vectorized for batch computation and provide support for different execution backends, namely NumPy, PyTorch and TensorFlow, enabling GPU acceleration. This paper presents the package, compares it with related libraries and provides relevant code examples. We show that Geomstats provides reliable building blocks to foster research in differential geometry and statistics, and to democratize the use of Riemannian geometry in machine learning applications. The source code is freely available under the MIT license at geomstats.ai.
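
For readers unfamiliar with exponential and logarithmic maps, the following is a minimal pure-Python sketch of both on the unit sphere with its usual round metric. It does not use Geomstats and is not meant to mirror its API; `sphere_exp` and `sphere_log` are hypothetical names, and points and vectors are plain tuples.

```python
import math


def sphere_exp(base, tangent):
    """Exponential map: walk from `base` along the great circle
    with initial velocity `tangent` (assumed orthogonal to `base`)."""
    norm = math.sqrt(sum(v * v for v in tangent))
    if norm < 1e-12:
        return tuple(base)
    return tuple(
        math.cos(norm) * b + math.sin(norm) * v / norm
        for b, v in zip(base, tangent)
    )


def sphere_log(base, point):
    """Logarithmic map: tangent vector at `base` pointing to `point`,
    with length equal to the geodesic (great-circle) distance."""
    inner = max(-1.0, min(1.0, sum(b * p for b, p in zip(base, point))))
    angle = math.acos(inner)
    # Component of `point` orthogonal to `base`.
    proj = [p - inner * b for b, p in zip(base, point)]
    norm = math.sqrt(sum(c * c for c in proj))
    if norm < 1e-12:
        if inner < 0:
            raise ValueError("log map is not unique for antipodal points")
        return tuple(0.0 for _ in base)
    return tuple(angle * c / norm for c in proj)


# Illustrative round trip starting at the north pole.
north = (0.0, 0.0, 1.0)
velocity = (math.pi / 2, 0.0, 0.0)
end = sphere_exp(north, velocity)
recovered = sphere_log(north, end)
```

The round trip `sphere_log(north, sphere_exp(north, velocity))` should return `velocity` up to floating-point error, which is the defining relation between the two maps away from antipodal points.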
