Alexander Hadjiivanov

Energy efficiency analysis of Spiking Neural Networks for space applications

May 16, 2025

Abstract: While the exponential growth of the space sector and new operational concepts call for greater spacecraft autonomy, the development of AI-assisted space systems has so far been hindered by the limited power and energy typically available in space applications. In this context, Spiking Neural Networks (SNN) are highly attractive because of their theoretically superior energy efficiency, which stems from the inherently sparse activity induced by neurons communicating by means of binary spikes. Nevertheless, whether SNN can reach such efficiency on real-world tasks has yet to be demonstrated in practice. To evaluate the feasibility of utilizing SNN onboard spacecraft, this work presents a numerical analysis and comparison of different SNN techniques applied to scene classification on the EuroSAT dataset. Such tasks are of primary importance for space applications and constitute a valuable test case given the abundance of competitive methods available to establish a benchmark. Particular emphasis is placed on models based on temporal coding, where crucial information is encoded in the timing of neuron spikes. These models promise even greater efficiency, as they maximize the sparsity inherent in SNN. A reliable metric capable of comparing different architectures in a hardware-agnostic way is developed to establish a clear theoretical dependence between architecture parameters and the energy consumption that can be expected onboard the spacecraft. The potential of this novel method and its flexibility in describing specific hardware platforms are demonstrated by applying it to predict the energy consumption of a BrainChip Akida AKD1000 neuromorphic processor.
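To make the idea of a hardware-agnostic energy estimate concrete, the Python sketch below uses a common first-order approximation rather than the metric developed in this work: a dense (non-spiking) layer performs one multiply-accumulate (MAC) per connection per inference, while a spiking layer performs one accumulate (AC) per synapse only when an input spike arrives, summed over the simulation time steps. The `Layer` description, the per-operation energies `e_mac` and `e_ac`, and the firing rates are hypothetical parameters that a real analysis would take from measurements or hardware datasheets.

```python
from dataclasses import dataclass


@dataclass
class Layer:
    """Hypothetical description of one fully connected layer."""
    fan_in: int          # inputs per neuron
    neurons: int         # neurons in the layer
    input_rate: float    # mean spikes per input per time step (spiking case only)


def ann_energy(layers, e_mac):
    """Dense network: one MAC per connection per inference."""
    macs = sum(layer.fan_in * layer.neurons for layer in layers)
    return macs * e_mac


def snn_energy(layers, timesteps, e_ac):
    """Spiking network: one accumulate per synapse per incoming spike."""
    acs = sum(layer.fan_in * layer.neurons * layer.input_rate * timesteps
              for layer in layers)
    return acs * e_ac


if __name__ == "__main__":
    # Illustrative numbers only (arbitrary energy units, not measured values).
    net = [Layer(fan_in=100, neurons=10, input_rate=0.25)]
    print(ann_energy(net, e_mac=5.0), snn_energy(net, timesteps=4, e_ac=1.0))
```

Comparing the two estimates shows how sparsity (a low `input_rate`) and short simulations (few `timesteps`) decide whether the spiking network comes out ahead. This simple model ignores memory access and neuron-update costs, which can dominate on real hardware.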

Wandering around: A bioinspired approach to visual attention through object motion sensitivity

Feb 10, 2025

Abstract: Active vision enables dynamic visual perception, offering an alternative to static feedforward architectures in computer vision, which rely on large datasets and high computational resources. Biological selective attention mechanisms allow agents to focus on salient Regions of Interest (ROIs), reducing computational demand while maintaining real-time responsiveness. Event-based cameras, inspired by the mammalian retina, enhance this capability by capturing asynchronous scene changes, enabling efficient low-latency processing. To distinguish moving objects while the event-based camera is itself in motion, the agent requires an object motion segmentation mechanism to accurately detect targets and center them in the visual field (fovea). Integrating event-based sensors with neuromorphic algorithms represents a paradigm shift, using Spiking Neural Networks to parallelize computation and adapt to dynamic environments. This work presents a bioinspired attention system based on a Spiking Convolutional Neural Network for selective attention through object motion sensitivity. The system generates events via fixational eye movements using a Dynamic Vision Sensor integrated into the Speck neuromorphic hardware and mounted on a Pan-Tilt unit, in order to identify the ROI and saccade toward it. The system, characterized using ideal gratings and benchmarked against the Event Camera Motion Segmentation Dataset, reaches a mean IoU of 82.2% and a mean SSIM of 96% in multi-object motion segmentation. The detection of salient objects reaches 88.8% accuracy in office scenarios and 89.8% in low-light conditions on the Event-Assisted Low-Light Video Object Segmentation Dataset. A real-time demonstrator shows the system's 0.12 s response time to dynamic scenes. Its learning-free design ensures robustness across perceptual scenes, making it a reliable foundation for real-time robotic applications and a basis for more complex architectures.

Asteroid Mining: ACT&Friends' Results for the GTOC 12 Problem

Oct 28, 2024

Abstract: In 2023, the 12th edition of the Global Trajectory Optimization Competition (GTOC) was organised around a problem called "Sustainable Asteroid Mining". This paper reports the developments that led to the solution proposed by ESA's Advanced Concepts Team. Although the proposed approach did not rank higher than fourth on the final competition leaderboard, several innovative fundamental methodologies were developed that have broader application. In particular, new methods based on machine learning, as well as on manipulating the fundamental laws of astrodynamics, were developed and were able to bridge, with remarkable accuracy, the gap between full low-thrust trajectories and their representation as impulsive Lambert transfers. A novel technique was devised to formulate the optimal selection of a subset from a repository of pre-existing optimal mining trajectories as an integer linear programming problem. Finally, the fundamental problem of searching for single optimal mining trajectories (mining and collecting all resources), albeit ignoring the possibility of intra-ship collaboration and thus sub-optimal in the case of the GTOC12 problem, was efficiently solved by means of a novel search based on a look-ahead score, which ensures that the selected asteroids have a chance of being revisited later on.
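The abstract does not spell out the integer linear program, so the block below is only one plausible formulation, stated as an assumption: each candidate trajectory i = 1, ..., n in the repository has a score c_i (for instance, the mass it returns) and mines the set of asteroids A_i; a binary variable x_i selects it; each asteroid in the full asteroid set (written \mathcal{A} below) may be mined by at most one selected trajectory; and at most K ships may be used. The symbols c_i, A_i, \mathcal{A} and K are hypothetical.

```latex
\begin{aligned}
\max_{x \in \{0,1\}^n} \quad & \sum_{i=1}^{n} c_i \, x_i \\
\text{subject to} \quad & \sum_{i \,:\, a \in A_i} x_i \le 1 \qquad \forall\, a \in \mathcal{A}, \\
& \sum_{i=1}^{n} x_i \le K .
\end{aligned}
```

With the objective and constraints linear in the binary variables x_i, this is a weighted set-packing problem with a cardinality constraint, which a standard ILP solver can handle.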

On the Generation of a Synthetic Event-Based Vision Dataset for Navigation and Landing

Aug 01, 2023

Abstract: An event-based camera outputs an event whenever a change in scene brightness of a preset magnitude is detected at a particular pixel location in the sensor plane. The resulting sparse and asynchronous output, coupled with the high dynamic range and temporal resolution of this novel camera, motivates the study of event-based cameras for navigation and landing applications. However, the lack of real-world and synthetic datasets to support this line of research has limited its consideration for onboard use. This paper presents a methodology and a software pipeline for generating event-based vision datasets from optimal landing trajectories during the approach of a target body. We construct sequences of photorealistic images of the lunar surface with the Planet and Asteroid Natural Scene Generation Utility at different viewpoints along a set of optimal descent trajectories obtained by varying the boundary conditions. The generated image sequences are then converted into event streams by means of an event-based camera emulator. We demonstrate that the pipeline can generate realistic event-based representations of surface features by constructing a dataset of 500 trajectories, complete with event streams and motion field ground truth data. We anticipate that novel event-based vision datasets can be generated using this pipeline to support various spacecraft pose reconstruction problems given events as input, and we hope that the proposed methodology will attract the attention of researchers working at the intersection of neuromorphic vision and guidance, navigation and control.
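The triggering rule in the first sentence can be illustrated with an idealized emulator. The Python sketch below assumes the common model in which a pixel fires when its log intensity changes by a contrast threshold C relative to the level at its last event; it ignores noise, refractory periods and sub-frame timing, and it is not the emulator used in this paper. The function name and the default threshold are hypothetical.

```python
import math


def frames_to_events(frames, timestamps, threshold=0.2, eps=1e-6):
    """Idealized event-camera emulation from a sequence of intensity frames.

    frames: list of 2-D lists (rows of pixel intensities), all the same shape.
    timestamps: one timestamp per frame.
    threshold: contrast threshold C on the log-intensity change (hypothetical default).
    Returns a list of (t, x, y, polarity) tuples.
    """
    # Reference log intensity per pixel, initialised from the first frame.
    ref = [[math.log(v + eps) for v in row] for row in frames[0]]
    events = []
    for frame, t in zip(frames[1:], timestamps[1:]):
        for y, row in enumerate(frame):
            for x, value in enumerate(row):
                delta = math.log(value + eps) - ref[y][x]
                # One event per full threshold crossing; a large change gives several.
                n = int(abs(delta) // threshold)
                if n:
                    polarity = 1 if delta > 0 else -1
                    events.extend([(t, x, y, polarity)] * n)
                    # Move the reference by the amount that was "spent" on events.
                    ref[y][x] += polarity * n * threshold
    return events


if __name__ == "__main__":
    # A single pixel that brightens threefold between the second and third frame.
    print(frames_to_events([[[1.0]], [[1.0]], [[3.0]]], [0.0, 1.0, 2.0], threshold=0.5))
```

Dedicated emulators usually interpolate between frames to assign sub-frame timestamps; here every event simply inherits the timestamp of the frame that triggered it.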

Neuromorphic Computing and Sensing in Space

Dec 17, 2022

Abstract: The term "neuromorphic" refers to systems that closely resemble the architecture and/or the dynamics of biological neural networks. Typical examples are novel computer chips designed to mimic the architecture of a biological brain, or sensors that draw inspiration from, e.g., the visual or olfactory systems of insects and mammals to acquire information about the environment. This approach is not without ambition, as it promises to enable engineered devices able to reproduce the level of performance observed in biological organisms, the main immediate advantage being the efficient use of scarce resources, which translates into low power requirements. The emphasis on low power and energy efficiency of neuromorphic devices is a perfect match for space applications. Spacecraft, especially miniaturized ones, have strict energy constraints, as they need to operate in an environment that is both resource-scarce and extremely hostile. In this work, we present an overview of early attempts made at the European Space Agency's (ESA) Advanced Concepts Team (ACT) to study a neuromorphic approach in a space context.

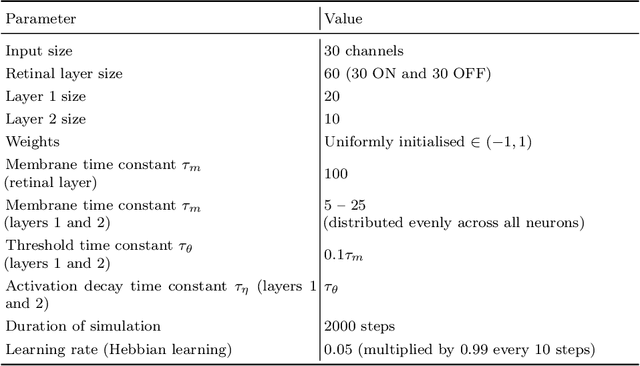

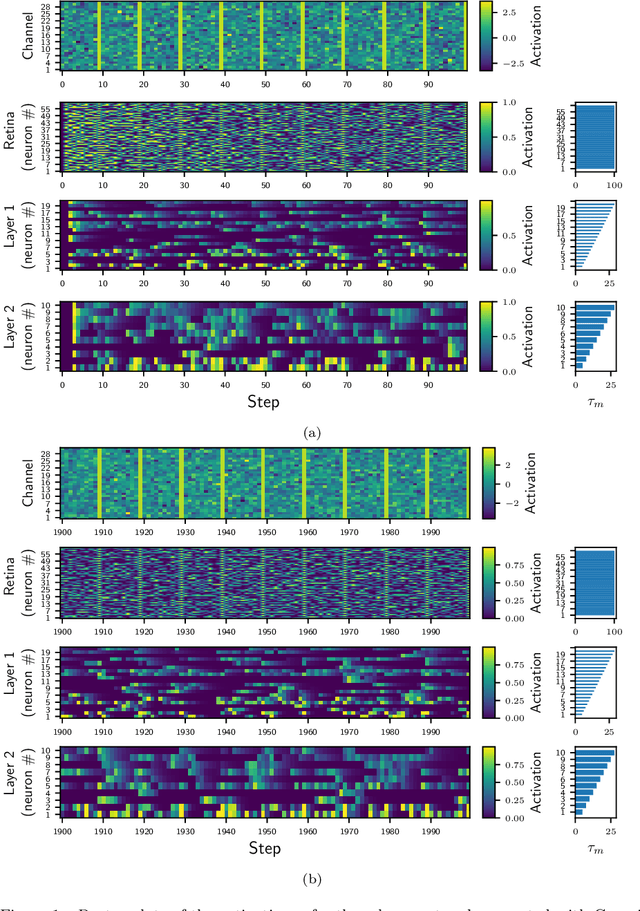

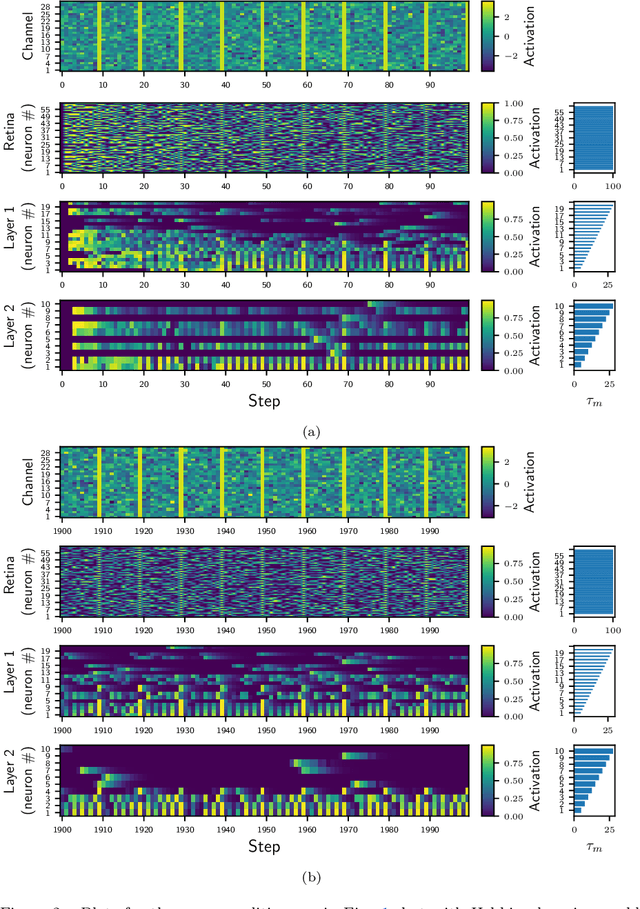

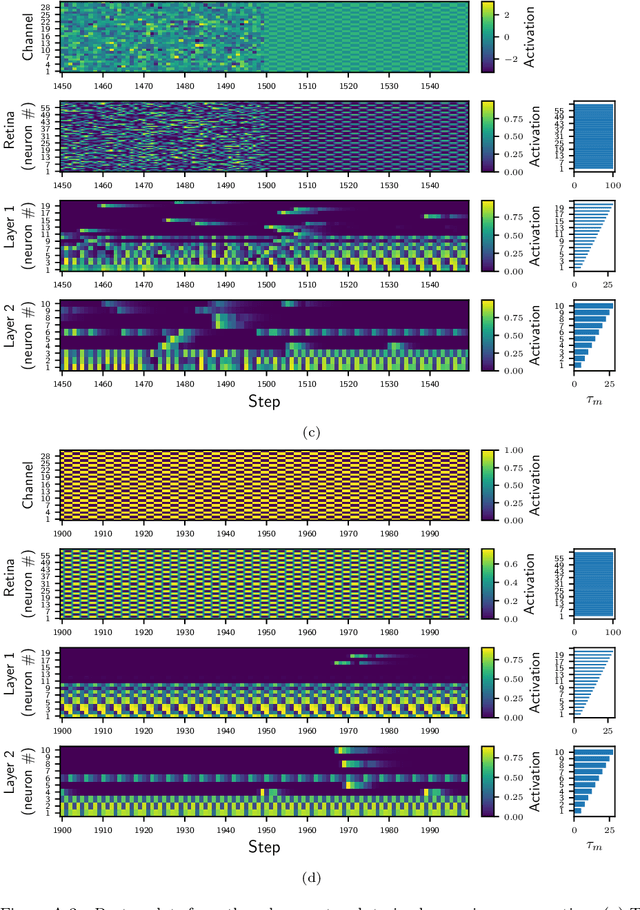

Continuous Learning and Adaptation with Membrane Potential and Activation Threshold Homeostasis

May 08, 2021

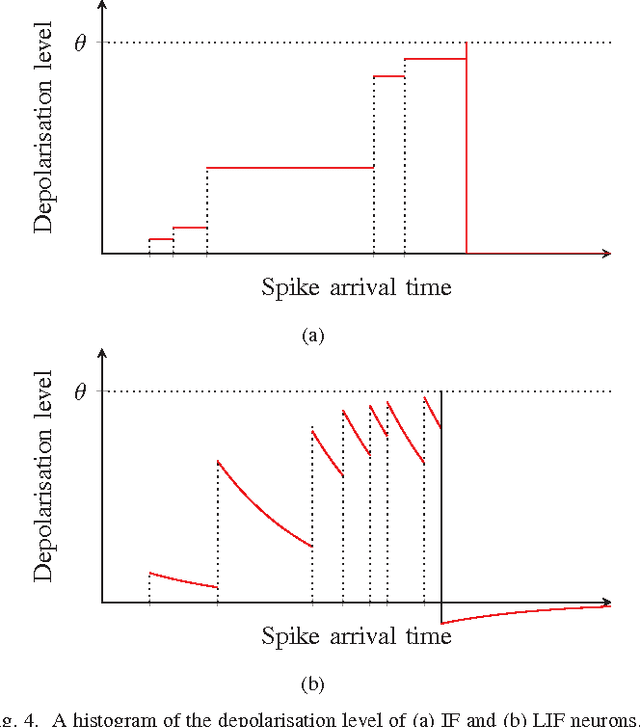

Abstract: Most classical (non-spiking) neural network models disregard internal neuron dynamics and treat neurons as simple input integrators. However, biological neurons have an internal state governed by complex dynamics that plays a crucial role in learning, adaptation and the overall network activity and behaviour. This paper presents the Membrane Potential and Activation Threshold Homeostasis (MPATH) neuron model, which combines several biologically inspired mechanisms to efficiently simulate internal neuron dynamics with a single parameter analogous to the membrane time constant in biological neurons. The model allows neurons to maintain a form of dynamic equilibrium by automatically regulating their activity when presented with fluctuating input. One consequence of the MPATH model is that it imbues neurons with a sense of time without recurrent connections, paving the way for modelling processes that depend on temporal aspects of neuron activity. Experiments demonstrate the model's ability to adapt to and continually learn from its input.

Epigenetic evolution of deep convolutional models

Apr 12, 2021

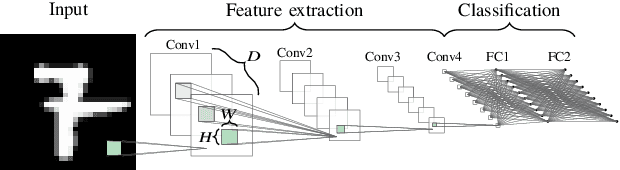

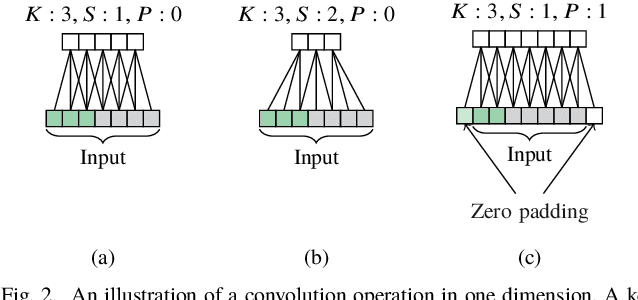

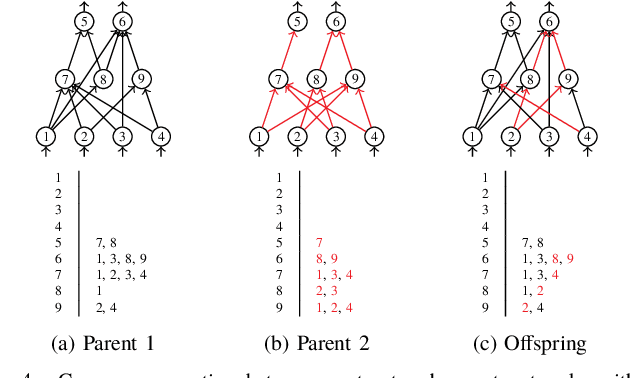

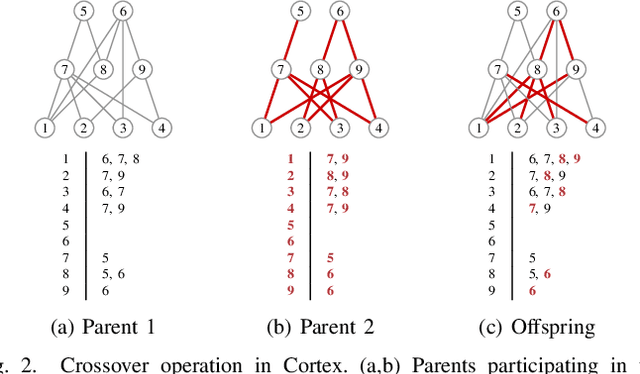

Abstract: In this study, we build upon a previously proposed neuroevolution framework to evolve deep convolutional models. Specifically, the genome encoding and the crossover operator are extended to make them applicable to layered networks. We also propose a convolutional layer layout which allows kernels of different shapes and sizes to coexist within the same layer, and present an argument as to why this may be beneficial. The proposed layout enables the size and shape of individual kernels within a convolutional layer to be evolved with a corresponding new mutation operator. The proposed framework employs a hybrid optimisation strategy involving structural changes through epigenetic evolution and weight updates through backpropagation in a population-based setting. Experiments on several image classification benchmarks demonstrate that the crossover operator is sufficiently robust to produce increasingly performant offspring even when the parents are trained on only a small random subset of the training dataset in each epoch, thus providing direct confirmation that learned features and behaviour can be successfully transferred from parent networks to offspring in the next generation.

* 8 pages

Adaptive conversion of real-valued input into spike trains

Apr 12, 2021

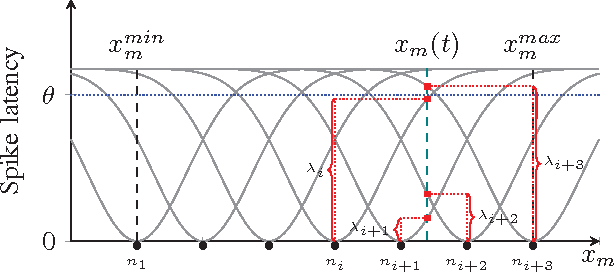

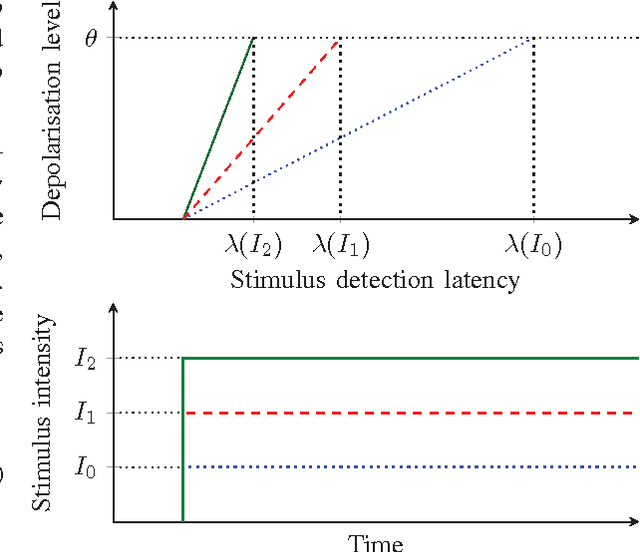

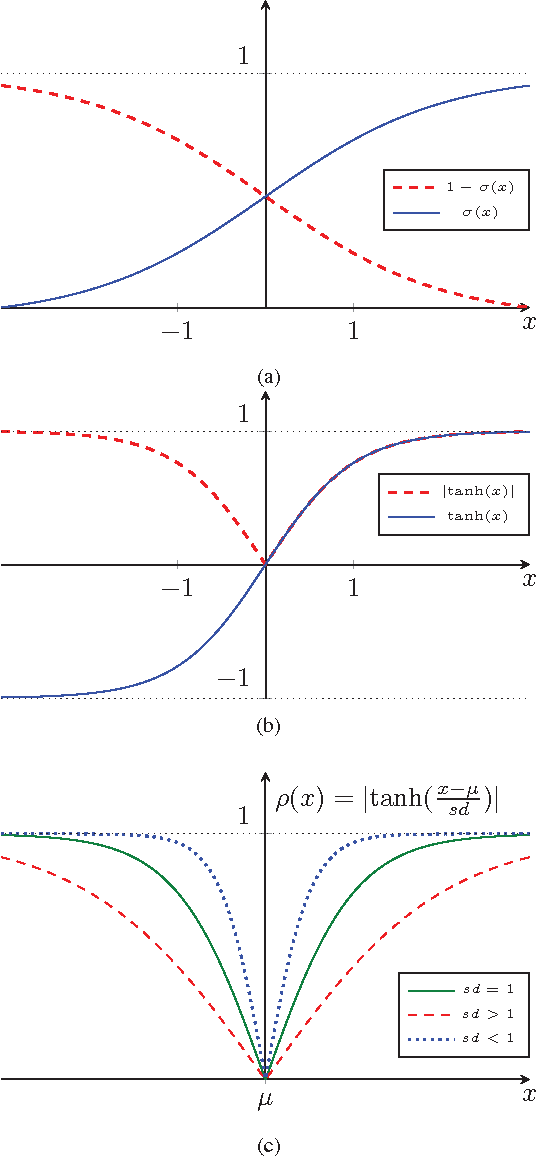

Abstract: This paper presents a biologically plausible method for converting real-valued input into spike trains for processing with spiking neural networks. The proposed method mimics the adaptive behaviour of retinal ganglion cells and allows input neurons to adapt their response to changes in the statistics of the input. Thus, rather than passively receiving values and forwarding them to the hidden and output layers, the input layer acts as a self-regulating filter which emphasises deviations from the average while allowing the input neurons to become effectively desensitised to the average itself. Another merit of the proposed method is that it requires only one input neuron per variable, rather than an entire population of neurons as in the case of the commonly used conversion method based on Gaussian receptive fields. In addition, since the statistics of the input emerge naturally over time, it becomes unnecessary to pre-process the data before feeding it to the network. This enables spiking neural networks to process raw, non-normalised streaming data. A proof-of-concept experiment is performed to demonstrate that the proposed method operates as expected.
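The behaviour described above (one neuron per variable, desensitisation to the average, no need to normalise the input) can be illustrated with a generic sketch that is not the method proposed in this paper: exponentially weighted estimates of the mean and variance are updated online, and the neuron emits a signed spike whenever the current value deviates from the running mean by more than k standard deviations. The class name, the adaptation rate `alpha` and the factor `k` are hypothetical.

```python
import math


class AdaptiveEncoder:
    """One input neuron per variable that spikes on deviations from a running average.

    Generic sketch (not the paper's method): exponentially weighted estimates of
    the mean and variance let the neuron desensitise to the average level, so
    raw, non-normalised values can be fed in directly.
    """

    def __init__(self, alpha=0.05, k=2.0, eps=1e-9):
        self.alpha = alpha   # adaptation rate (hypothetical default)
        self.k = k           # spike when |x - mean| > k * std (hypothetical default)
        self.eps = eps       # avoids a zero standard deviation
        self.mean = None
        self.var = 0.0

    def step(self, x):
        """Return +1 (upward deviation), -1 (downward deviation) or 0 (no spike)."""
        if self.mean is None:
            # The first sample only initialises the statistics.
            self.mean = x
            return 0
        dev = x - self.mean
        std = math.sqrt(self.var) + self.eps
        spike = 0
        if abs(dev) > self.k * std:
            spike = 1 if dev > 0 else -1
        # Exponentially weighted mean and variance, updated after the spike decision.
        self.mean += self.alpha * dev
        self.var = (1 - self.alpha) * (self.var + self.alpha * dev * dev)
        return spike


if __name__ == "__main__":
    enc = AdaptiveEncoder()
    signal = [100.0] * 20 + [200.0] * 20   # raw values with a step change
    print([enc.step(v) for v in signal])
```

Fed a constant raw value, the encoder stays silent; after the step change it emits a short burst of spikes and then falls silent again as its estimate of the mean adapts, without the data being normalised beforehand.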

* 8 pages

Complexity-based speciation and genotype representation for neuroevolution

Oct 11, 2020

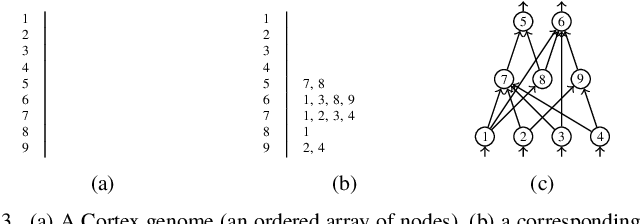

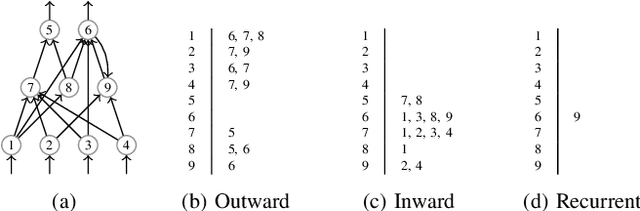

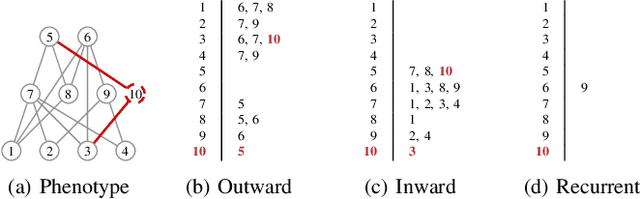

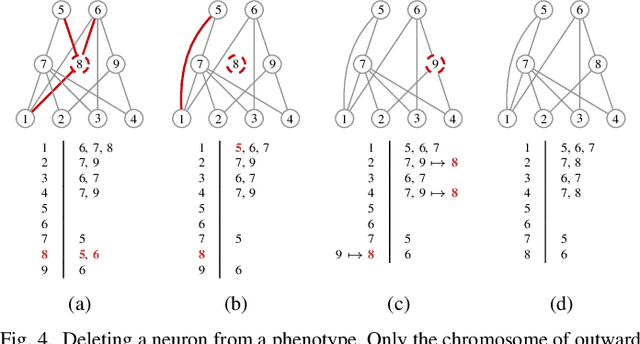

Abstract: This paper introduces a speciation principle for neuroevolution where evolving networks are grouped into species based on the number of hidden neurons, which is indicative of the complexity of the search space. This speciation principle is inseparably coupled with a novel genotype representation which is characterised by zero genome redundancy, high resilience to bloat, explicit marking of recurrent connections, as well as an efficient and reproducible stack-based evaluation procedure for networks with arbitrary topology. Furthermore, the proposed speciation principle is employed in several techniques designed to promote and preserve diversity within species and in the ecosystem as a whole. The competitive performance of the proposed framework, named Cortex, is demonstrated experimentally. A highly customisable software platform which implements the concepts proposed in this study is also introduced in the hope that it will serve as a useful and reliable tool for experimentation in the field of neuroevolution.
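The speciation principle in the first sentence reduces to grouping networks by a single integer. The Python sketch below shows only that grouping step, plus picking the fittest member of each species; the `Genome` fields and the function names are hypothetical and are not taken from the Cortex platform, whose genotype representation carries far more information.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Genome:
    """Hypothetical minimal genome holding only what speciation needs."""
    name: str
    hidden_neurons: int
    fitness: float = 0.0


def speciate(population):
    """Group genomes into species keyed by their number of hidden neurons."""
    species = defaultdict(list)
    for genome in population:
        species[genome.hidden_neurons].append(genome)
    # Order species by complexity so that simpler ones come first.
    return dict(sorted(species.items()))


def champions(species):
    """Return the fittest genome of each species."""
    return {size: max(members, key=lambda g: g.fitness)
            for size, members in species.items()}


if __name__ == "__main__":
    pop = [Genome("a", 0, 0.5), Genome("b", 2, 0.9), Genome("c", 0, 0.7)]
    groups = speciate(pop)
    print({size: [g.name for g in members] for size, members in groups.items()})
    print({size: g.name for size, g in champions(groups).items()})
```

Because the species key is an exact count rather than a distance, this grouping needs no tuned compatibility threshold of the kind used in NEAT; a new species appears as soon as a mutation adds or removes a hidden neuron.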
