Alejandro Murillo-Gonzalez

Situationally-Aware Dynamics Learning

May 26, 2025

Abstract: Autonomous robots operating in complex, unstructured environments face significant challenges due to latent, unobserved factors that obscure their understanding of both their internal state and the external world. Addressing this challenge would enable robots to develop a more profound grasp of their operational context. To tackle this, we propose a novel framework for online learning of hidden state representations, with which robots can adapt in real time to uncertain and dynamic conditions that would otherwise be ambiguous and result in suboptimal or erroneous behaviors. Our approach is formalized as a Generalized Hidden Parameter Markov Decision Process, which explicitly models the influence of unobserved parameters on both transition dynamics and reward structures. Our core innovation lies in learning online the joint distribution of state transitions, which serves as an expressive representation of latent ego and environmental factors. This probabilistic approach supports identifying and adapting to different operational situations, improving robustness and safety. Through a multivariate extension of Bayesian Online Changepoint Detection, our method segments changes in the underlying data-generating process governing the robot's dynamics. The robot's transition model is then informed with a symbolic representation of the current situation derived from the joint distribution of the latest state transitions, enabling adaptive and context-aware decision-making. To showcase its real-world effectiveness, we validate our approach in the challenging task of unstructured terrain navigation, where unmodeled and unmeasured terrain characteristics can significantly impact the robot's motion. Extensive experiments in both simulation and the real world reveal significant improvements in data efficiency, policy performance, and the emergence of safer, adaptive navigation strategies.
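As a rough illustration of the changepoint-detection idea mentioned in the abstract, the sketch below implements the standard univariate Bayesian Online Changepoint Detection recursion, not the multivariate extension the paper proposes. It assumes Gaussian observations with known variance, a Gaussian prior on the unknown mean, and a constant hazard rate; the function names and all default parameter values are hypothetical choices made for this example.

```python
import math


def gaussian_pdf(x, mean, var):
    """Density of a univariate Gaussian with the given mean and variance."""
    return math.exp(-0.5 * (x - mean) ** 2 / var) / math.sqrt(2.0 * math.pi * var)


def bocpd_map_run_lengths(data, hazard=0.05, mu0=0.0, var0=1.0, obs_var=0.1):
    """Return the most probable run length after each observation.

    Assumptions (illustrative only): known observation variance `obs_var`,
    Gaussian prior N(mu0, var0) on the segment mean, constant hazard rate.
    """
    probs = [1.0]        # probs[r] = P(run length = r | data so far)
    means = [mu0]        # posterior mean of the segment mean, per run length
    variances = [var0]   # posterior variance of the segment mean, per run length
    map_run_lengths = []
    for x in data:
        # Predictive density of x under each run-length hypothesis.
        pred = [gaussian_pdf(x, m, v + obs_var) for m, v in zip(means, variances)]
        # Growth: the current run continues, so its length increases by one.
        growth = [p * q * (1.0 - hazard) for p, q in zip(probs, pred)]
        # Changepoint: mass from every hypothesis collapses to run length zero.
        change = sum(p * q * hazard for p, q in zip(probs, pred))
        new_probs = [change] + growth
        total = sum(new_probs)
        probs = [p / total for p in new_probs]
        # Conjugate Gaussian update; index 0 restarts from the prior.
        new_means, new_vars = [mu0], [var0]
        for m, v in zip(means, variances):
            post_var = 1.0 / (1.0 / v + 1.0 / obs_var)
            new_means.append(post_var * (m / v + x / obs_var))
            new_vars.append(post_var)
        means, variances = new_means, new_vars
        map_run_lengths.append(max(range(len(probs)), key=probs.__getitem__))
    return map_run_lengths


# Example: the mean of the signal jumps from 0 to 5 halfway through.
run_lengths = bocpd_map_run_lengths([0.0] * 20 + [5.0] * 20)
```

A sudden drop in the most probable run length marks a likely change in the data-generating process; in the example, the estimate grows steadily over the first segment and resets when the jump occurs.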

Action Flow Matching for Continual Robot Learning

Apr 25, 2025

Abstract: Continual learning in robotics seeks systems that can constantly adapt to changing environments and tasks, mirroring human adaptability. A key challenge is refining dynamics models, essential for planning and control, while addressing issues such as safe adaptation, catastrophic forgetting, outlier management, data efficiency, and balancing exploration with exploitation -- all within task and onboard resource constraints. Towards this goal, we introduce a generative framework leveraging flow matching for online robot dynamics model alignment. Rather than executing actions based on a misaligned model, our approach refines planned actions to better match those the robot would take if its model were well aligned. We find that by transforming the actions themselves rather than exploring with a misaligned model -- as is traditionally done -- the robot collects informative data more efficiently, thereby accelerating learning. Moreover, we validate that the method can handle an evolving and possibly imperfect model while reducing, if desired, the dependency on replay buffers or legacy model snapshots. We validate our approach using two platforms: an unmanned ground vehicle and a quadrotor. The results highlight the method's adaptability and efficiency, with a record 34.2% higher task success rate, demonstrating its potential towards enabling continual robot learning. Code: https://github.com/AlejandroMllo/action_flow_matching.
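To make the flow-matching idea concrete, here is a deliberately tiny sketch. It is not the paper's implementation (see the linked repository for that). It assumes the common straight-line conditional path between a planned action and a corrected action, and it replaces the neural velocity model with a single constant vector fitted by least squares. All function names and the example action pairs are hypothetical.

```python
import random


def make_training_pair(planned, corrected, t):
    """Point on the straight path from planned to corrected, plus its velocity target."""
    x_t = [(1.0 - t) * p + t * c for p, c in zip(planned, corrected)]
    target_velocity = [c - p for p, c in zip(planned, corrected)]
    return x_t, target_velocity


def fit_constant_velocity(pairs, samples_per_pair=8, seed=0):
    """Least-squares fit of a constant velocity field, i.e. the mean of the targets.

    A constant model ignores x_t and t; a learned network would take both as input.
    """
    rng = random.Random(seed)
    dim = len(pairs[0][0])
    total = [0.0] * dim
    count = 0
    for planned, corrected in pairs:
        for _ in range(samples_per_pair):
            _, velocity = make_training_pair(planned, corrected, rng.random())
            total = [s + v for s, v in zip(total, velocity)]
            count += 1
    return [s / count for s in total]


def refine_action(planned, velocity, steps=10):
    """Euler-integrate dx/dt = velocity from t = 0 to t = 1, starting at the planned action."""
    x = list(planned)
    dt = 1.0 / steps
    for _ in range(steps):
        x = [xi + dt * vi for xi, vi in zip(x, velocity)]
    return x


# Hypothetical (planned, corrected) action pairs sharing the same offset.
pairs = [([0.0, 0.0], [0.5, -0.2]), ([1.0, 1.0], [1.5, 0.8])]
velocity = fit_constant_velocity(pairs)
refined = refine_action([2.0, 2.0], velocity)
```

Because both example pairs differ by the same offset, the fitted field recovers that offset and the refined action is the planned action shifted by it. A realistic model would condition the velocity on the current point, the time, and the robot state.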

Data-Assisted Vision-Based Hybrid Control for Robust Stabilization with Obstacle Avoidance via Learning of Perception Maps

Sep 04, 2022

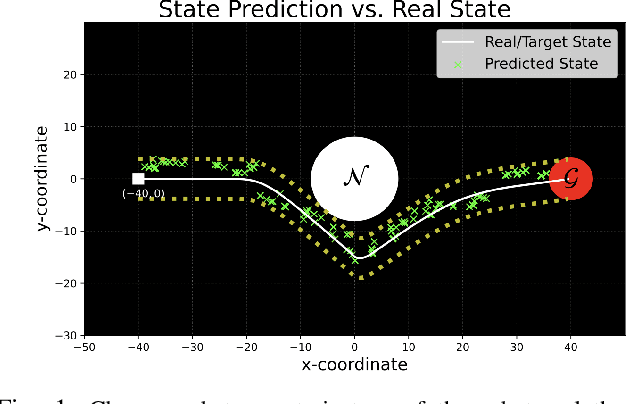

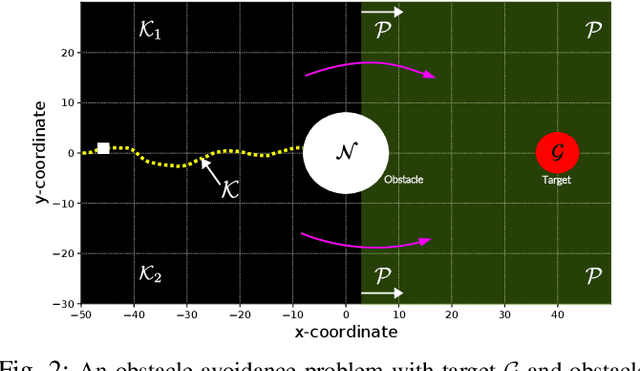

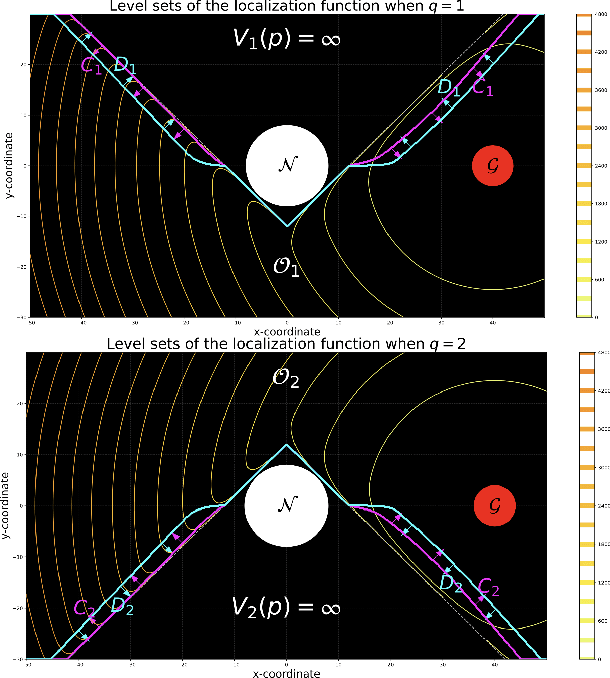

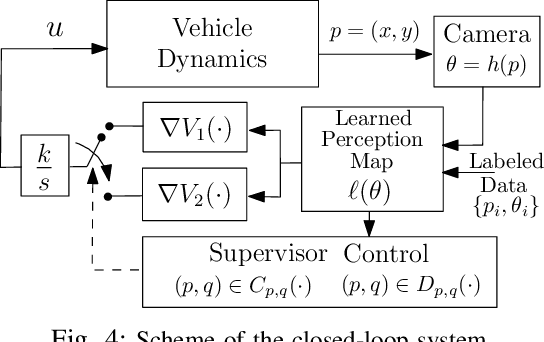

Abstract: We study the problem of target stabilization with robust obstacle avoidance in robots and vehicles that have access only to vision-based sensors for the purpose of real-time localization. This problem is particularly challenging due to the topological obstructions induced by the obstacle, which preclude the existence of smooth feedback controllers able to achieve simultaneous stabilization and robust obstacle avoidance. To overcome this issue, we develop a vision-based hybrid controller that switches between two different feedback laws depending on the current position of the vehicle using a hysteresis mechanism and a data-assisted supervisor. The main innovation of the paper is the incorporation of suitable perception maps into the hybrid controller. These maps can be learned from data obtained from cameras on the vehicles and trained via convolutional neural networks (CNNs). Under suitable assumptions on this perception map, we establish theoretical guarantees for the trajectories of the vehicle in terms of convergence and obstacle avoidance. Moreover, the proposed vision-based hybrid controller is numerically tested under different scenarios, including noisy data, sensors with failures, and cameras with occlusions.
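The hysteresis mechanism mentioned in the abstract can be sketched in a few lines. This is a generic illustration, not the paper's controller: it omits the perception map and the data-assisted supervisor, and it assumes the switching decision depends on a single lateral coordinate `x`. The names `next_mode`, `center`, and `band` are hypothetical.

```python
def next_mode(mode, x, center=0.0, band=0.5):
    """Hysteresis switch between two feedback laws (modes 1 and 2).

    Mode 1 stays active until x drops below center - band; mode 2 stays
    active until x rises above center + band. Inside the overlap region
    the current mode is kept, so small measurement noise cannot cause
    rapid back-and-forth switching.
    """
    if mode == 1 and x < center - band:
        return 2
    if mode == 2 and x > center + band:
        return 1
    return mode


def run_supervisor(xs, initial_mode=1):
    """Apply the switching rule along a sequence of position estimates."""
    modes = []
    mode = initial_mode
    for x in xs:
        mode = next_mode(mode, x)
        modes.append(mode)
    return modes


# Noisy estimates near the center keep the mode fixed; only a clear
# excursion past the band triggers a switch.
modes = run_supervisor([0.1, -0.1, 0.2, -0.3, -0.6, 0.3, 0.6])
```

Each mode would select a different feedback law, for example steering around the obstacle on one side or the other. The width of the band trades robustness to noise against how promptly the controller reacts.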
