Achilleas Kourtellis

DARTS: A Drone-Based AI-Powered Real-Time Traffic Incident Detection System

Oct 29, 2025

Abstract: Rapid and reliable incident detection is critical for reducing crash-related fatalities, injuries, and congestion. However, conventional methods, such as closed-circuit television, dashcam footage, and sensor-based detection, separate detection from verification, suffer from limited flexibility, and require dense infrastructure or high penetration rates, restricting adaptability and scalability to shifting incident hotspots. To overcome these challenges, we developed DARTS, a drone-based, AI-powered real-time traffic incident detection system. DARTS integrates drones' high mobility and aerial perspective for adaptive surveillance, thermal imaging for better low-visibility performance and privacy protection, and a lightweight deep learning framework for real-time vehicle trajectory extraction and incident detection. The system achieved 99% detection accuracy on a self-collected dataset and supports simultaneous online visual verification, severity assessment, and incident-induced congestion propagation monitoring via a web-based interface. In a field test on Interstate 75 in Florida, DARTS detected and verified a rear-end collision 12 minutes earlier than the local transportation management center and monitored incident-induced congestion propagation, suggesting potential to support faster emergency response and enable proactive traffic control to reduce congestion and secondary crash risk. Crucially, DARTS's flexible deployment architecture reduces dependence on frequent physical patrols, indicating potential scalability and cost-effectiveness for use in remote areas and resource-constrained settings. This study presents a promising step toward a more flexible and integrated real-time traffic incident detection system, with significant implications for the operational efficiency and responsiveness of modern transportation management.

Comparison of Object Detection Algorithms Using Video and Thermal Images Collected from a UAS Platform: An Application of Drones in Traffic Management

Sep 27, 2021

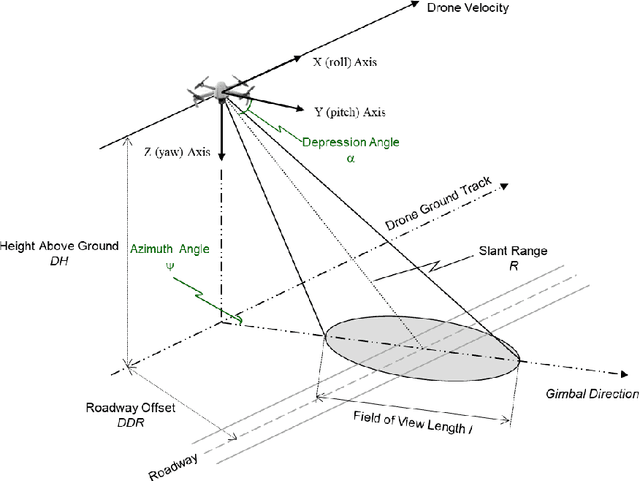

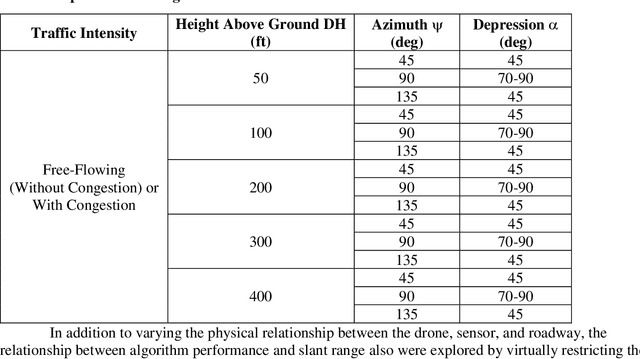

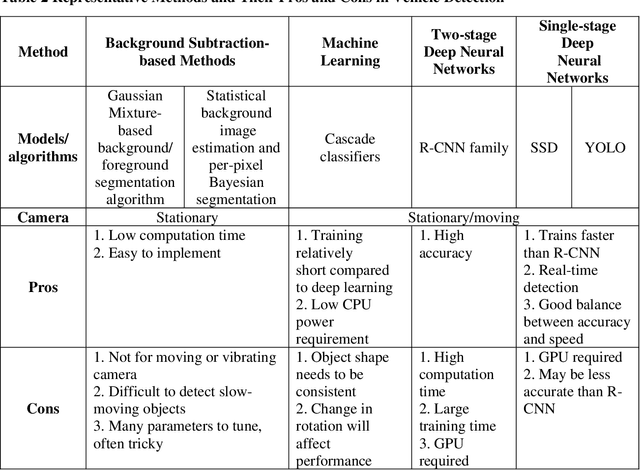

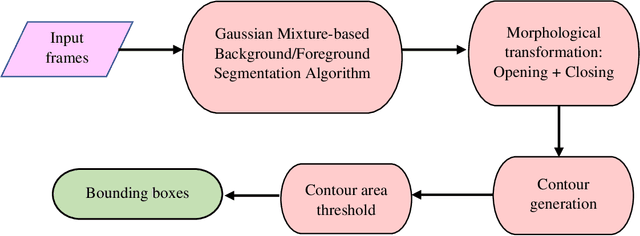

Abstract: There is a rapid growth of applications of Unmanned Aerial Vehicles (UAVs) in traffic management, such as traffic surveillance, monitoring, and incident detection. However, the existing literature lacks solutions to real-time incident detection while addressing privacy issues in practice. This study explored real-time vehicle detection algorithms on both visual and infrared cameras and conducted experiments comparing their performance. Red Green Blue (RGB) videos and thermal images were collected from a UAS platform along highways in the Tampa, Florida, area. Experiments were designed to quantify the performance of a real-time background subtraction-based method in vehicle detection from a stationary camera on hovering UAVs under free-flow conditions. Several parameters were set in the experiments based on the geometry of the drone and sensor relative to the roadway. The results show that a background subtraction-based method can achieve good detection performance on RGB images (F1 scores around 0.9 for most cases), and a more varied performance is seen on thermal images with different azimuth angles. The results of these experiments will help inform the development of protocols, standards, and guidance for the use of drones to detect highway congestion and provide input for the development of incident detection algorithms.
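
For readers unfamiliar with the terms in this abstract, the sketch below illustrates the general idea of background subtraction from a stationary camera and how an F1 score is computed from detection counts. It is not the authors' implementation: the running-average background model, the function names, and the `alpha` and `threshold` values are all assumptions chosen for illustration, and frames are represented as plain nested lists of grayscale intensities.

```python
from typing import List

Frame = List[List[float]]  # grayscale image as rows of pixel intensities


def update_background(background: Frame, frame: Frame, alpha: float = 0.05) -> Frame:
    """Blend the new frame into a running-average background model.

    alpha is a hypothetical learning rate, not a value from the paper.
    """
    return [
        [(1.0 - alpha) * b + alpha * f for b, f in zip(b_row, f_row)]
        for b_row, f_row in zip(background, frame)
    ]


def foreground_mask(background: Frame, frame: Frame, threshold: float = 30.0) -> List[List[bool]]:
    """Mark pixels whose intensity differs from the background by more than threshold.

    threshold is a hypothetical value, not one reported in the paper.
    """
    return [
        [abs(f - b) > threshold for b, f in zip(b_row, f_row)]
        for b_row, f_row in zip(background, frame)
    ]


def f1_score(true_positives: int, false_positives: int, false_negatives: int) -> float:
    """F1 = 2*TP / (2*TP + FP + FN), the harmonic mean of precision and recall."""
    denominator = 2 * true_positives + false_positives + false_negatives
    return 0.0 if denominator == 0 else 2 * true_positives / denominator


# Toy scene: a uniform road surface with one bright two-pixel "vehicle".
background = [[10.0] * 4 for _ in range(3)]
frame = [row[:] for row in background]
frame[1][1] = 200.0
frame[1][2] = 200.0

mask = foreground_mask(background, frame)
foreground_pixels = sum(sum(row) for row in mask)
background = update_background(background, frame)
```

In this toy scene only the two bright pixels exceed the threshold, so they form the foreground. As a worked example of the metric, 9 correct detections with 1 false alarm and 1 missed vehicle give `f1_score(9, 1, 1)` = 18 / 20 = 0.9, the level the abstract reports for most RGB cases.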
