RetroMAE: Pre-training Retrieval-oriented Transformers via Masked Auto-Encoder

May 24, 2022

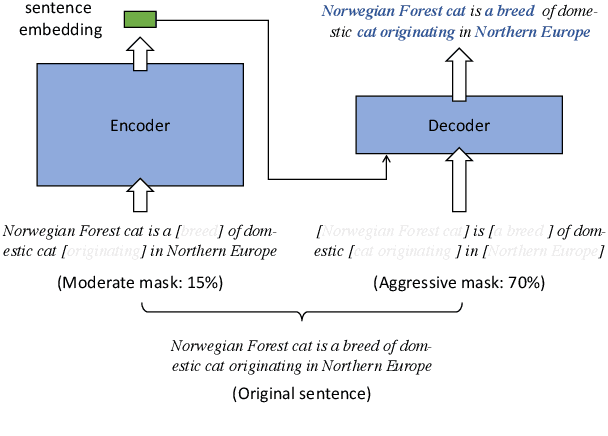

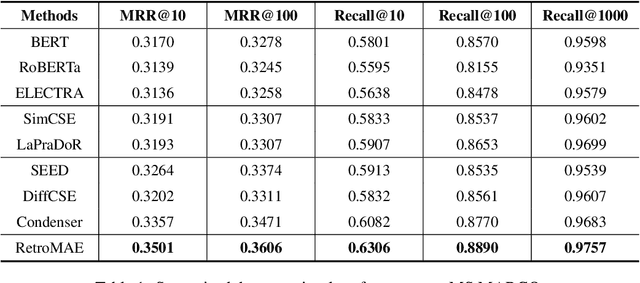

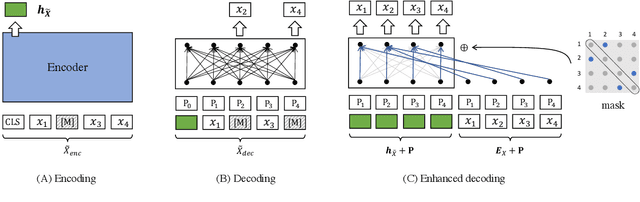

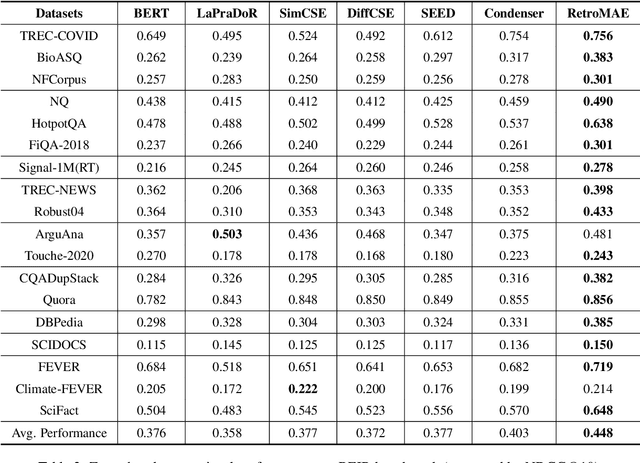

Pre-trained models have demonstrated superior power on many important tasks. However, designing effective pre-training strategies that improve the models' usability for dense retrieval remains an open problem. In this paper, we propose RetroMAE, a novel pre-training framework for dense retrieval based on the Masked Auto-Encoder (MAE). The framework rests on three critical designs: 1) an MAE-based pre-training workflow, where the input sentence is polluted with different masks on the encoder side and the decoder side, and the original sentence is reconstructed from both the sentence embedding and the masked sentence; 2) asymmetric model architectures, with a large-scale, expressive transformer for sentence encoding and an extremely simplified transformer for sentence reconstruction; 3) asymmetric masking ratios, with a moderate masking ratio on the encoder side (15%) and an aggressive masking ratio on the decoder side (50-90%). We pre-train a BERT-like encoder on English Wikipedia and BookCorpus, and it notably outperforms existing pre-trained models on a wide range of dense retrieval benchmarks, such as MS MARCO, Open-domain Question Answering, and BEIR.
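The asymmetric masking described above can be illustrated with a minimal sketch. This is not the authors' implementation: the helper `mask_tokens`, the placeholder tokens, and the choice of 70% for the decoder side (one value inside the stated 50-90% range) are assumptions made for illustration only. The sketch covers just the input corruption step, where the same sentence is masked independently and at different ratios for the encoder and the decoder; the encoder, the sentence embedding, and the reconstruction loss are left out.

```python
import random

MASK = "[MASK]"  # placeholder mask symbol, assumed for this sketch


def mask_tokens(tokens, ratio, rng):
    """Replace round(ratio * len(tokens)) randomly chosen tokens with MASK."""
    n_mask = max(1, round(ratio * len(tokens)))
    positions = set(rng.sample(range(len(tokens)), n_mask))
    masked = [MASK if i in positions else tok for i, tok in enumerate(tokens)]
    return masked, sorted(positions)


rng = random.Random(0)
tokens = [f"w{i}" for i in range(20)]  # stand-in for a tokenized sentence

# Encoder side: moderate masking (15%), as stated in the abstract.
enc_input, enc_positions = mask_tokens(tokens, 0.15, rng)

# Decoder side: aggressive masking, drawn independently of the encoder mask.
# 0.70 is an assumed value within the 50-90% range given in the abstract.
dec_input, dec_positions = mask_tokens(tokens, 0.70, rng)

print(len(enc_positions), "of", len(tokens), "tokens masked for the encoder")
print(len(dec_positions), "of", len(tokens), "tokens masked for the decoder")
```

Because the decoder sees far fewer of the original tokens than the encoder does, reconstruction has to lean on the sentence embedding produced by the encoder, which is the motivation the abstract gives for the aggressive decoder-side ratio.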
