On-device Large Multi-modal Agent for Human Activity Recognition

Dec 17, 2025

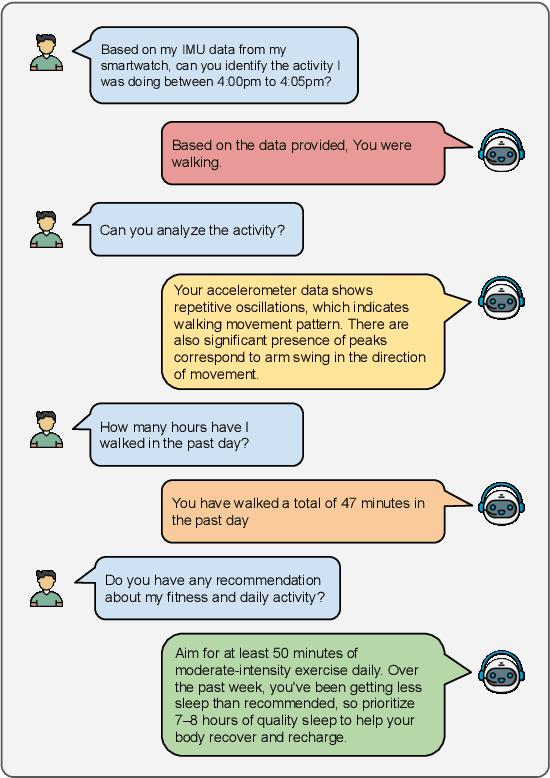

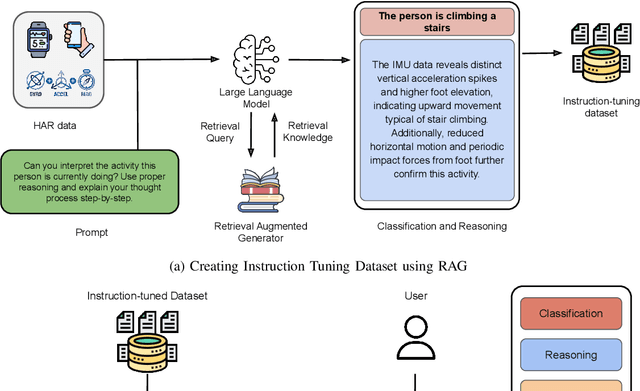

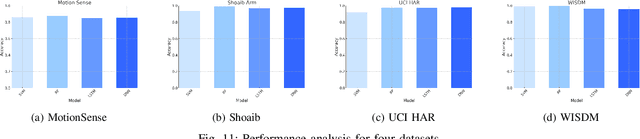

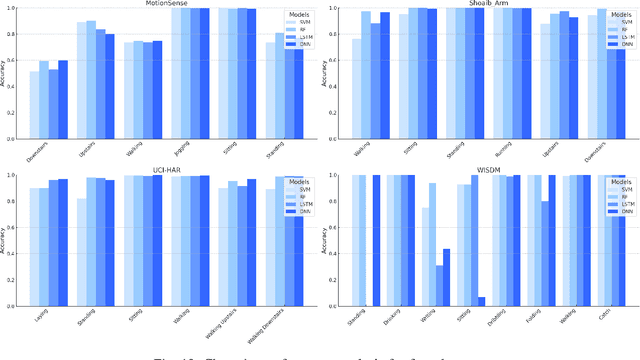

Human Activity Recognition (HAR) is an active area of research, with applications ranging from healthcare to smart environments. Recent advances in Large Language Models (LLMs) have opened new possibilities for HAR, enabling not only activity classification but also interpretability and human-like interaction. In this paper, we present a Large Multi-Modal Agent designed for HAR that integrates LLMs to improve both performance and user engagement. Beyond classifying activities, the proposed framework bridges the gap between technical outputs and user-friendly insights through its reasoning and question-answering (Q&A) capabilities. We conduct extensive evaluations on widely adopted HAR datasets, including HHAR, Shoaib, and MotionSense, to assess the performance of our framework. The results demonstrate that our model achieves classification accuracy comparable to state-of-the-art methods while significantly improving interpretability through its reasoning and Q&A capabilities.
