HitNet: a neural network with capsules embedded in a Hit-or-Miss layer, extended with hybrid data augmentation and ghost capsules

Jun 18, 2018

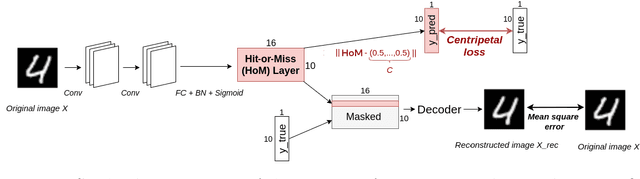

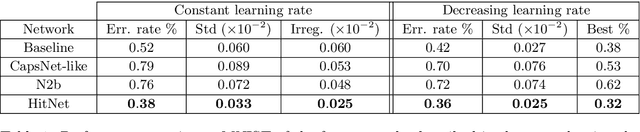

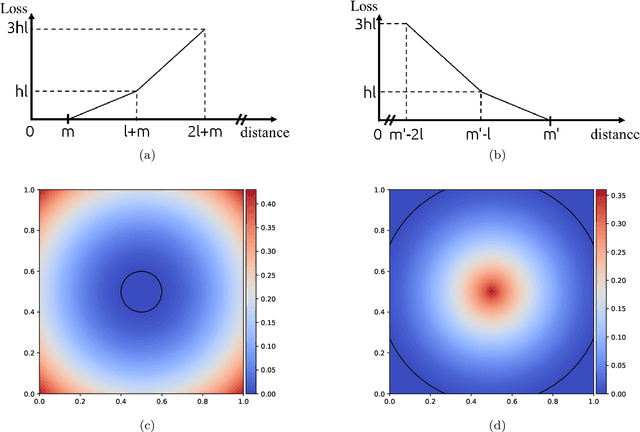

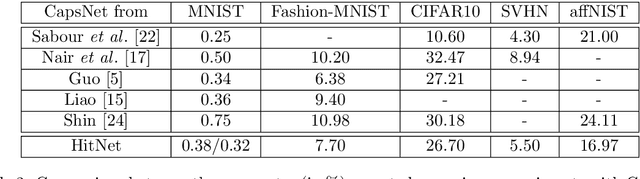

Neural networks designed for classification have become a commodity in recent years. Many works aim to develop better networks, which makes their architectures more complex: more layers, multiple sub-networks, or even combinations of multiple classifiers. In this paper, we show how to redesign a simple network, by replacing a layer with a Hit-or-Miss layer, so that it reaches excellent performance, better than the results reproduced with CapsNet on several datasets. This layer contains activated vectors, called capsules, that we train to hit or miss a central capsule by tailoring a specific centripetal loss function. We also show how our network, named HitNet, can synthesize a representative sample of the images of a given class by including a reconstruction network. This capability makes it possible to develop a data augmentation step that combines information from the data space and the feature space, resulting in a hybrid data augmentation process. In addition, we introduce the possibility for HitNet to adopt an alternative to the true target when needed, using the new concept of ghost capsules, which is used here to detect potentially mislabeled images in the training data.
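To make the hit-or-miss idea more concrete, the following is a minimal NumPy sketch, not the authors' implementation. It assumes one capsule per class with components in [0, 1], a central capsule at 0.5 in every component, and a squared hinge penalty. The function names and the `hit_radius` and `miss_radius` values are hypothetical and chosen only for illustration; the abstract does not specify the exact form of the centripetal loss.

```python
import numpy as np


def distances_to_center(capsules, center_value=0.5):
    """Euclidean distance of each class capsule to the central capsule.

    capsules: array of shape (num_classes, dim), values assumed in [0, 1].
    center_value: assumed coordinate of the central capsule (hypothetical).
    """
    center = np.full(capsules.shape[1], center_value)
    return np.linalg.norm(capsules - center, axis=1)


def centripetal_loss_sketch(capsules, target, hit_radius=0.1, miss_radius=0.9):
    """Illustrative hit-or-miss loss (assumed form, not the paper's formula).

    The target capsule is penalized when it lies farther than hit_radius
    from the center (it should "hit"); every other capsule is penalized
    when it lies closer than miss_radius (it should "miss").
    """
    dist = distances_to_center(capsules)
    is_target = np.arange(capsules.shape[0]) == target
    hit_penalty = np.maximum(0.0, dist - hit_radius) ** 2
    miss_penalty = np.maximum(0.0, miss_radius - dist) ** 2
    return float(np.sum(np.where(is_target, hit_penalty, miss_penalty)))


def predict(capsules):
    """Predict the class whose capsule is closest to the central capsule."""
    return int(np.argmin(distances_to_center(capsules)))


# Three classes, four-dimensional capsules: class 0 sits on the center,
# classes 1 and 2 sit at opposite corners of the unit hypercube.
example = np.array([
    [0.5, 0.5, 0.5, 0.5],
    [0.0, 0.0, 0.0, 0.0],
    [1.0, 1.0, 1.0, 1.0],
])
loss_correct = centripetal_loss_sketch(example, target=0)
loss_wrong = centripetal_loss_sketch(example, target=1)
predicted_class = predict(example)
```

In this toy configuration the loss is zero when the labeled class is the one whose capsule hits the center, and positive otherwise, which is the behavior a centripetal objective is meant to encourage.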
