D$\textbf{S}^3$L: Deep Self-Semi-Supervised Learning for Image Recognition

May 23, 2019

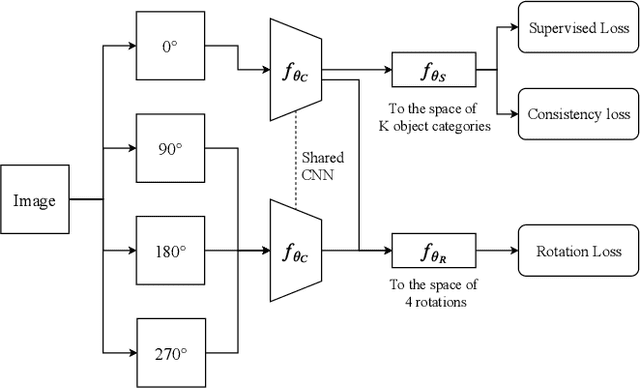

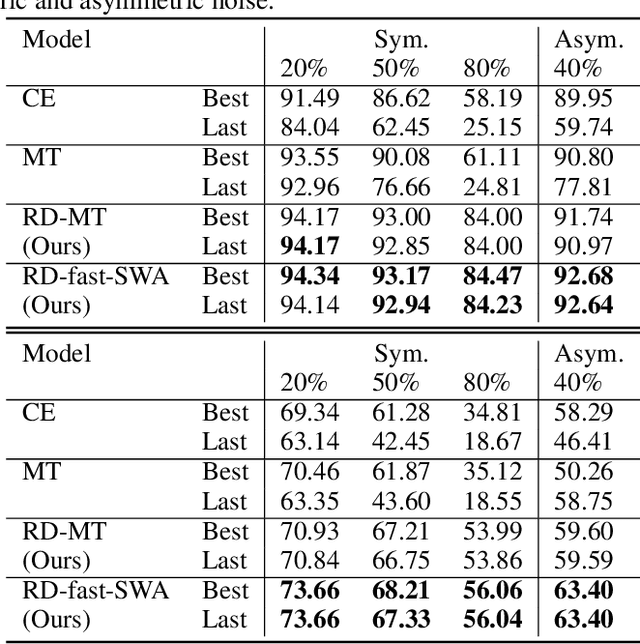

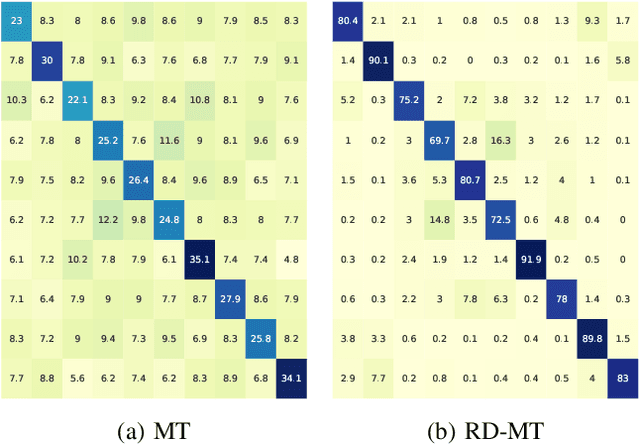

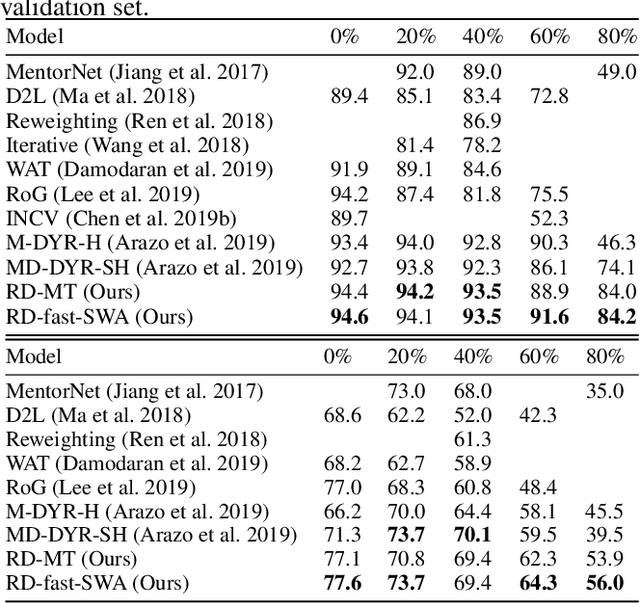

Despite recent progress in deep semi-supervised learning (Semi-SL), the number of labels still plays a dominant role. The success of self-supervised learning (Self-SL) hints at a promising direction for exploiting vast amounts of unlabeled data by leveraging an additional set of deterministic labels. In this paper, we propose Deep Self-Semi-Supervised Learning (D$\textbf{S}^3$L), a flexible multi-task framework with shared parameters that integrates the rotation task from Self-SL with the consistency-based methods of deep Semi-SL. Our method is easy to implement and is complementary to all consistency-based approaches. The experiments demonstrate that our method significantly improves over the published state-of-the-art methods on several standard benchmarks, especially when fewer labels are available.
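
To make the multi-task idea concrete, the following is a minimal sketch rather than the authors' implementation. It assumes the usual four-way rotation task (0, 90, 180, 270 degrees), in which the rotation index serves as the deterministic label, and it assumes a mean-squared-error consistency term and a plain weighted sum of the three losses. The function names and the weights `w_cons` and `w_rot` are hypothetical and do not come from the paper.

```python
import math

def rotate90(img, k):
    """Rotate a 2D list image counter-clockwise by k * 90 degrees."""
    img = [list(row) for row in img]  # copy so the input is not mutated
    for _ in range(k % 4):
        # transpose, then reverse the row order: one 90-degree CCW turn
        img = [list(row) for row in zip(*img)][::-1]
    return img

def rotation_batch(images):
    """Build the self-supervised batch: 4 rotated copies per image,
    each labeled with its rotation index k in {0, 1, 2, 3}."""
    rotated, labels = [], []
    for img in images:
        for k in range(4):
            rotated.append(rotate90(img, k))
            labels.append(k)
    return rotated, labels

def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(logits, label):
    """Cross-entropy of one example; used here for both the supervised
    class labels and the rotation labels."""
    return -math.log(softmax(logits)[label])

def consistency(logits_a, logits_b):
    """Assumed consistency term: mean squared error between the predicted
    distributions for two perturbed views of the same unlabeled image."""
    pa, pb = softmax(logits_a), softmax(logits_b)
    return sum((x - y) ** 2 for x, y in zip(pa, pb)) / len(pa)

def combined_loss(sup_loss, cons_loss, rot_loss, w_cons=1.0, w_rot=1.0):
    """Assumed multi-task objective: weighted sum of the three terms.
    w_cons and w_rot are hypothetical hyperparameters."""
    return sup_loss + w_cons * cons_loss + w_rot * rot_loss
```

In a real model, the class logits and the rotation logits would come from two heads on top of one shared backbone, which is what "shared parameters" refers to in the abstract. The rotation term needs no human annotation, so it can be computed on labeled and unlabeled images alike.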
