Zhenqiang Ye

FairGSE: Fairness-Aware Graph Neural Network without High False Positive Rates

Nov 15, 2025

Abstract: Graph neural networks (GNNs) have emerged as the mainstream paradigm for graph representation learning due to their effective message aggregation. However, this advantage also amplifies biases inherent in graph topology, raising fairness concerns. Existing fairness-aware GNNs perform well on fairness metrics such as Statistical Parity and Equal Opportunity while maintaining acceptable accuracy trade-offs. Unfortunately, we observe that this pursuit of fairness metrics neglects the GNN's ability to predict negative labels, which leaves their predictions with extremely high False Positive Rates (FPR) and causes harm in high-risk scenarios. To this end, we advocate that classification performance should be carefully calibrated while improving fairness, rather than simply constraining accuracy loss. Furthermore, we propose Fair GNN via Structural Entropy (FairGSE), a novel framework that maximizes two-dimensional structural entropy (2D-SE) to improve fairness without neglecting false positives. Experiments on several real-world datasets show that FairGSE reduces FPR by 39% compared with state-of-the-art fairness-aware GNNs, with comparable fairness improvement.
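The tension described in the abstract above can be made concrete with a small sketch. The code below is not taken from the paper; it uses the commonly cited definitions of Statistical Parity difference, Equal Opportunity difference, and FPR for a binary classifier with a binary sensitive attribute. The function name `fairness_report` and the toy arrays are illustrative assumptions.

```python
import numpy as np

def fairness_report(y_true, y_pred, sensitive):
    """Compute group-fairness gaps and the overall false positive rate.

    Assumes binary labels, binary predictions, and a binary sensitive
    attribute, with at least one positive-label node in each group.
    """
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    s = np.asarray(sensitive)
    g0, g1 = (s == 0), (s == 1)
    pos = (y_true == 1)
    neg = (y_true == 0)

    # Statistical Parity gap: |P(y_hat=1 | s=0) - P(y_hat=1 | s=1)|
    sp = abs(y_pred[g0].mean() - y_pred[g1].mean())
    # Equal Opportunity gap: |P(y_hat=1 | y=1, s=0) - P(y_hat=1 | y=1, s=1)|
    eo = abs(y_pred[g0 & pos].mean() - y_pred[g1 & pos].mean())
    # False Positive Rate: FP / (FP + TN), i.e. P(y_hat=1 | y=0)
    fpr = y_pred[neg].mean()

    return {
        "statistical_parity": float(sp),
        "equal_opportunity": float(eo),
        "fpr": float(fpr),
    }

# Toy case: a degenerate classifier that predicts "positive" for every node.
y_true = [1, 0, 1, 0]
sensitive = [0, 0, 1, 1]
y_pred = [1, 1, 1, 1]
print(fairness_report(y_true, y_pred, sensitive))
```

In this toy case both fairness gaps are zero while every negative example is misclassified, so the FPR is 1. This is the failure mode the abstract warns about: fairness metrics alone do not reveal a collapse in negative-label prediction.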

Temporal Fuzzy Utility Maximization with Remaining Measure

Aug 26, 2022

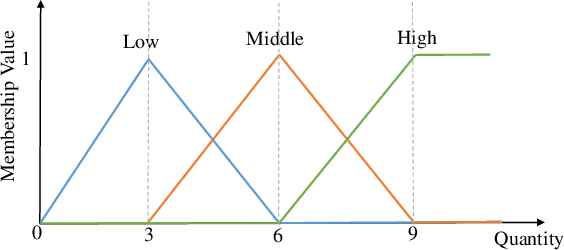

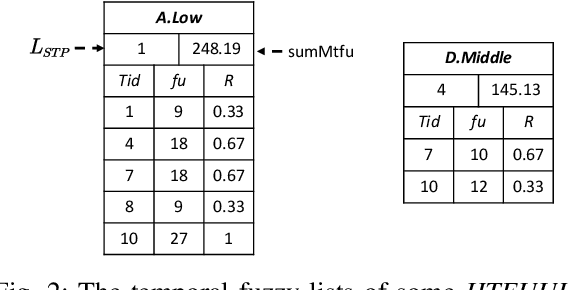

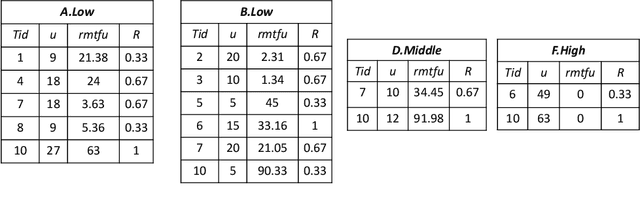

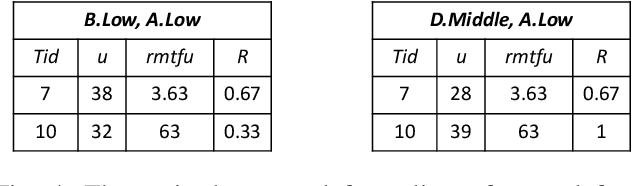

Abstract: High utility itemset mining approaches discover hidden patterns from large amounts of temporal data. However, an inescapable problem of high utility itemset mining is that its results hide the quantities of the patterns, which causes poor interpretability. The results only reflect the shopping trends of customers and cannot help decision makers quantify the collected information. In linguistic terms, computers use mathematical or programming languages that are precisely formalized, whereas the language used by humans is always ambiguous. In this paper, we propose a novel one-phase temporal fuzzy utility itemset mining approach called TFUM. It revises temporal fuzzy-lists to keep less, but essential, information about potential high temporal fuzzy utility itemsets in memory, and then discovers the complete set of truly interesting patterns in a short time. In particular, this paper is the first to adopt the remaining measure in the temporal fuzzy utility itemset mining domain. The remaining maximal temporal fuzzy utility is a tighter and stronger upper bound than those adopted in previous studies. Hence, it plays an important role in pruning the search space in TFUM. Finally, we evaluate the efficiency and effectiveness of TFUM on various datasets. Extensive experimental results indicate that TFUM outperforms the state-of-the-art algorithms in terms of runtime cost, memory usage, and scalability. In addition, the experiments confirm that the remaining measure can significantly prune unnecessary candidates during mining.
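The abstract does not give TFUM's definitions, so the sketch below does not implement the remaining maximal temporal fuzzy utility. As a stand-in, it shows the classic (crisp, non-temporal) remaining-utility bound used by utility-list miners, which conveys the general idea of pruning with a remaining measure. The helper names `utility_list` and `can_prune`, the item order, and the toy transactions are all hypothetical.

```python
def utility_list(itemset, transactions, order):
    """Build [(tid, iutil, rutil)] entries for an itemset.

    transactions: list of dicts mapping item -> utility in that transaction.
    order: list fixing the processing order of all items.
    iutil: utility of the itemset in the transaction.
    rutil: utility of items that come after the itemset in `order`.
    """
    rank = {item: i for i, item in enumerate(order)}
    last = max(rank[i] for i in itemset)
    entries = []
    for tid, t in enumerate(transactions):
        if all(i in t for i in itemset):
            iutil = sum(t[i] for i in itemset)
            rutil = sum(u for item, u in t.items() if rank[item] > last)
            entries.append((tid, iutil, rutil))
    return entries

def can_prune(entries, min_util):
    """True if no extension of the itemset can reach min_util.

    The sum of iutil + rutil over all entries upper-bounds the utility
    of every extension that appends items later in the order.
    """
    return sum(iu + ru for _, iu, ru in entries) < min_util

# Toy data (hypothetical): three transactions over items a, b, c.
transactions = [{"a": 5, "b": 2, "c": 1}, {"a": 3, "c": 4}, {"b": 6}]
order = ["a", "b", "c"]
entries = utility_list(("a",), transactions, order)
print(entries, can_prune(entries, 16))
```

For the itemset `("a",)` the bound is (5 + 3) + (3 + 4) = 15, so a minimum utility threshold of 16 lets the miner skip every extension of `a`. A tighter bound of this kind prunes more candidates, which is the role the abstract assigns to the remaining measure in TFUM.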
