Yueyang Wu

Is Intermediate Fusion All You Need for UAV-based Collaborative Perception?

Apr 30, 2025

Abstract: Collaborative perception enhances environmental awareness through inter-agent communication and is regarded as a promising solution for intelligent transportation systems. However, existing collaborative methods for Unmanned Aerial Vehicles (UAVs) overlook the unique characteristics of the UAV perspective, resulting in substantial communication overhead. To address this issue, we propose a novel communication-efficient collaborative perception framework based on late-intermediate fusion, dubbed LIF. The core concept is to exchange informative and compact detection results and shift the fusion stage to the feature representation level. In particular, we leverage vision-guided positional embedding (VPE) and box-based virtual augmented feature (BoBEV) to effectively integrate complementary information from various agents. Additionally, we introduce an uncertainty-driven communication mechanism that uses uncertainty evaluation to select high-quality and reliable shared areas. Experimental results demonstrate that LIF achieves superior performance with minimal communication bandwidth, demonstrating its effectiveness and practicality. Code and models are available at https://github.com/uestchjw/LIF.

Attention module improves both performance and interpretability of 4D fMRI decoding neural network

Oct 03, 2021

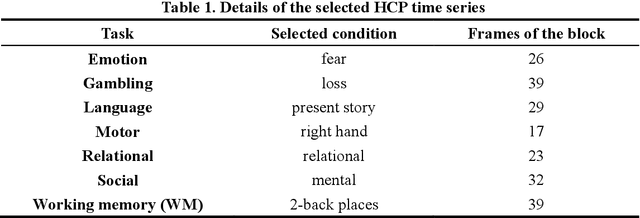

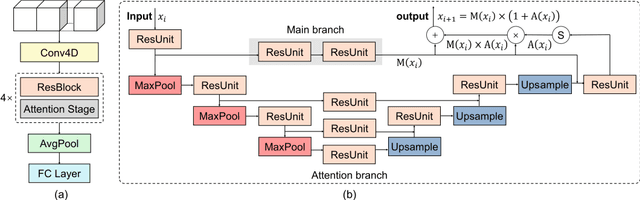

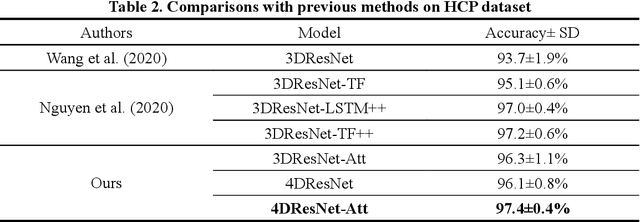

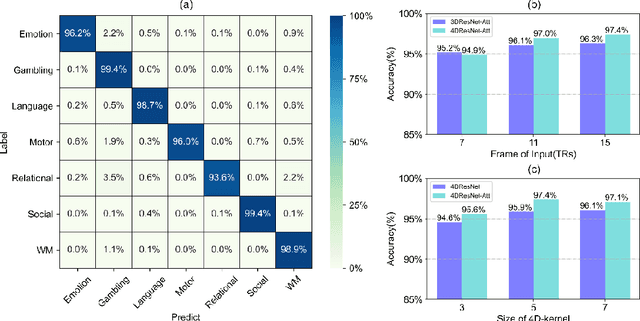

Abstract: Decoding brain cognitive states from neuroimaging signals is an important topic in neuroscience. In recent years, deep neural networks (DNNs) have been applied to decoding multiple brain states and have achieved good performance. However, the question of how to interpret the DNN black box remains open. Capitalizing on advances in machine learning, we integrated attention modules into brain decoders to facilitate an in-depth interpretation of DNN channels. A 4D convolution operation was also included to extract temporo-spatial interactions within the fMRI signal. The experiments showed that the proposed model obtains a very high accuracy (97.4%) and outperforms previous work on the 7 different task benchmarks from the Human Connectome Project (HCP) dataset. The visualization analysis further illustrated the hierarchical emergence of task-specific masks with depth. Finally, the model was retrained to regress individual traits within the HCP dataset and to classify viewed images from the BOLD5000 dataset. Transfer learning also achieved good performance on both tasks. A further visualization analysis showed that, after transfer learning, low-level attention masks remained similar to those of the source domain, whereas high-level attention masks changed adaptively. In conclusion, the proposed 4D model with attention modules performed well and facilitated interpretation of DNNs, which is helpful for subsequent research.
