Yeojoon Youn

Randomized Quantization is All You Need for Differential Privacy in Federated Learning

Jun 20, 2023

Abstract: Federated learning (FL) is a common and practical framework for training a machine learning model in a decentralized fashion. A primary motivation behind this decentralized approach is data privacy: the learner never sees the raw data held by each local source. Federated learning nevertheless comes with two major challenges: one is handling potentially complex model updates between a server and a large number of data sources; the other is that decentralization may, in fact, be insufficient for privacy, as the local updates themselves can reveal information about the sources' data. To address these issues, we consider an approach to federated learning that combines quantization and differential privacy. Absent privacy, federated learning often relies on quantization to reduce communication complexity. We build upon this approach and develop a new algorithm called the Randomized Quantization Mechanism (RQM), which obtains privacy through two levels of randomization. More precisely, we randomly sub-sample feasible quantization levels, then employ a randomized rounding procedure using these sub-sampled discrete levels. We establish that our mechanism preserves "Rényi differential privacy" (Rényi DP). We empirically study the performance of our algorithm and demonstrate that, compared to previous work, it yields improved privacy-accuracy trade-offs for DP federated learning. To the best of our knowledge, this is the first study that relies solely on randomized quantization, without incorporating explicit discrete noise, to achieve Rényi DP guarantees in federated learning systems.
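The two levels of randomization described in the abstract can be illustrated with a minimal sketch for a single scalar. This is not the authors' implementation: the abstract does not say how levels are sub-sampled, so the function name `randomized_quantize`, the independent keep probability `keep_prob`, the evenly spaced grid, and the choice to always retain the two endpoint levels are all assumptions made for illustration. The sketch makes no claim about the resulting privacy guarantee.

```python
import random


def randomized_quantize(x, lo, hi, num_levels, keep_prob, rng):
    """Quantize scalar x in [lo, hi] with two levels of randomness.

    Hypothetical illustration only; parameter names and the sampling
    rule are assumptions, not taken from the paper.
    """
    if num_levels < 2:
        raise ValueError("need at least two quantization levels")
    if not lo <= x <= hi:
        raise ValueError("x must lie in [lo, hi]")

    # Evenly spaced candidate levels; pin the last one to hi to avoid
    # floating-point drift.
    step = (hi - lo) / (num_levels - 1)
    levels = [lo + i * step for i in range(num_levels)]
    levels[-1] = hi

    # Randomization 1: sub-sample the interior levels independently.
    # Assumption: the endpoints are always kept so x stays bracketed.
    interior = [v for v in levels[1:-1] if rng.random() < keep_prob]
    kept = [levels[0]] + interior + [levels[-1]]

    # Nearest kept levels on either side of x.
    below = max(v for v in kept if v <= x)
    above = min(v for v in kept if v >= x)
    if below == above:
        return below

    # Randomization 2: randomized rounding between the two neighbours,
    # rounding up with probability proportional to the distance from
    # `below`, which is unbiased given the kept levels.
    p_up = (x - below) / (above - below)
    return above if rng.random() < p_up else below


if __name__ == "__main__":
    rng = random.Random(0)
    samples = [randomized_quantize(0.3, -1.0, 1.0, 5, 0.5, rng) for _ in range(10)]
    print(samples)
```

Every output is one of the discrete levels, so a client would only need to transmit a level index. Under the assumptions above, the rounding step is unbiased for any fixed set of kept levels, so averaging many quantized values recovers the input in expectation.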

Online Kernel based Generative Adversarial Networks

Jun 19, 2020

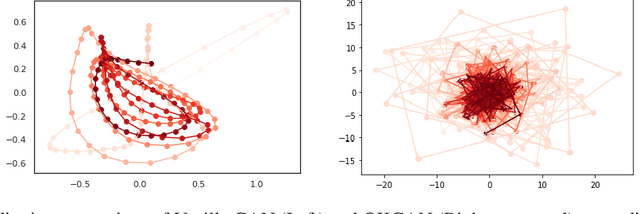

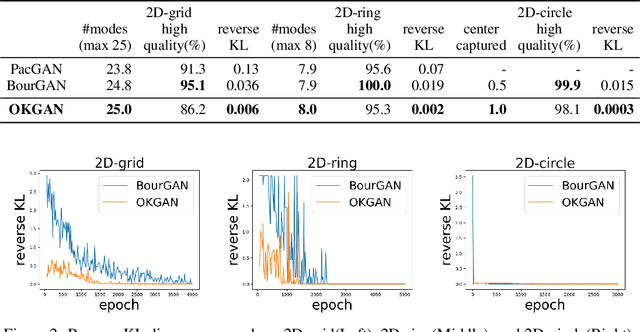

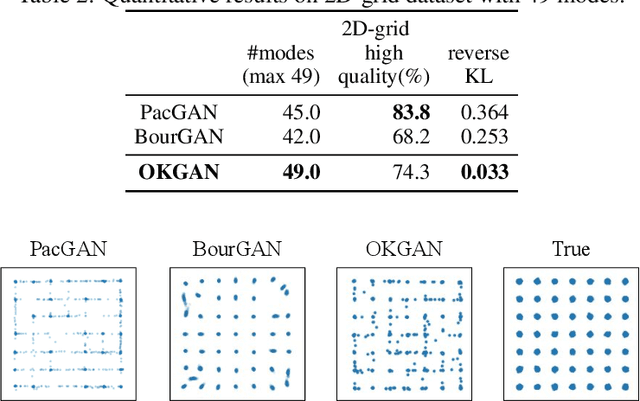

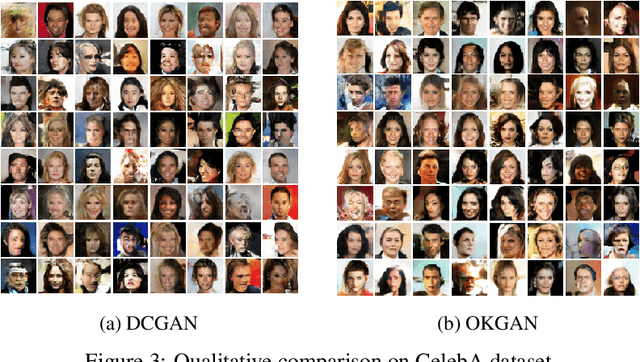

Abstract: One of the major breakthroughs in deep learning over the past five years has been the Generative Adversarial Network (GAN), a neural network-based generative model which aims to mimic some underlying distribution given a dataset of samples. In contrast to many supervised problems, where one tries to minimize a simple objective function of the parameters, GAN training is formulated as a min-max problem over a pair of network parameters. While GANs have shown impressive empirical success in several domains, researchers have been puzzled by unusual training behavior, including cycling and so-called mode collapse. In this paper, we begin by providing a quantitative method to explore some of the challenges in GAN training, and we show empirically how these relate fundamentally to the parametric nature of the discriminator network. We propose a novel approach that resolves many of these issues by relying on a kernel-based non-parametric discriminator that is highly amenable to online training; we call this approach Online Kernel-based Generative Adversarial Networks (OKGAN). We show empirically that OKGANs mitigate a number of training issues, including mode collapse and cycling, and are much more amenable to theoretical guarantees. OKGANs empirically perform dramatically better, with respect to reverse KL-divergence, than other GAN formulations on synthetic data; on classical vision datasets such as MNIST, SVHN, and CelebA, they show comparable performance.
