Yangfu Li

Why 1 + 1 < 1 in Visual Token Pruning: Beyond Naive Integration via Multi-Objective Balanced Covering

May 15, 2025

Abstract:Existing visual token pruning methods target prompt alignment and visual preservation with static strategies, overlooking the varying relative importance of these objectives across tasks, which leads to inconsistent performance. To address this, we derive the first closed-form error bound for visual token pruning based on the Hausdorff distance, uniformly characterizing the contributions of both objectives. Moreover, leveraging $\epsilon$-covering theory, we reveal an intrinsic trade-off between these objectives and quantify their optimal attainment levels under a fixed budget. To practically handle this trade-off, we propose Multi-Objective Balanced Covering (MoB), which reformulates visual token pruning as a bi-objective covering problem. In this framework, the attainment trade-off reduces to budget allocation via greedy radius trading. MoB offers a provable performance bound and linear scalability with respect to the number of input visual tokens, enabling adaptation to challenging pruning scenarios. Extensive experiments show that MoB preserves 96.4% of performance for LLaVA-1.5-7B using only 11.1% of the original visual tokens and accelerates LLaVA-Next-7B by 1.3-1.5$\times$ with negligible performance loss. Additionally, evaluations on Qwen2-VL and Video-LLaVA confirm that MoB integrates seamlessly into advanced MLLMs and diverse vision-language tasks.
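
The covering idea in this abstract can be illustrated with a minimal sketch. This is not MoB and not its greedy radius trading; it is the generic farthest-point heuristic for a single covering objective, with hypothetical names (`greedy_cover`, `covering_radius`) and toy 2-D points standing in for token embeddings. Because the kept tokens are a subset of the original tokens, the Hausdorff distance between the two sets equals the covering radius computed here.

```python
import math

def greedy_cover(points, budget):
    """Pick `budget` indices by farthest-point selection (illustrative only)."""
    selected = [0]  # assumption: start from the first point
    # nearest[i] = distance from point i to its closest selected point
    nearest = [math.dist(p, points[0]) for p in points]
    while len(selected) < budget:
        # the point that is currently worst covered becomes a new center
        far = max(range(len(points)), key=nearest.__getitem__)
        selected.append(far)
        nearest = [min(d, math.dist(p, points[far]))
                   for d, p in zip(nearest, points)]
    return selected

def covering_radius(points, selected):
    """Largest distance from any point to its nearest selected point.

    For a subset of `points`, this equals the Hausdorff distance
    between the subset and the full set.
    """
    return max(min(math.dist(p, points[i]) for i in selected)
               for p in points)

tokens = [(0.0, 0.0), (1.0, 0.0), (10.0, 0.0), (11.0, 0.0)]
kept = greedy_cover(tokens, budget=2)
radius = covering_radius(tokens, kept)
```

Each selection step scans every point once, so the sketch costs time proportional to the number of points times the budget. A larger budget can only shrink the covering radius, which is the single-objective version of the budget trade-off the abstract describes.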

Dual-stream Time-Delay Neural Network with Dynamic Global Filter for Speaker Verification

Mar 20, 2023

Abstract:The time-delay neural network (TDNN) is one of the state-of-the-art models for text-independent speaker verification. However, it is difficult for a conventional TDNN to capture global context, which many recent works have shown to be critical for robust speaker representations and long-duration speaker verification. Moreover, common solutions such as self-attention have quadratic complexity in the number of input tokens, which makes them computationally unaffordable when applied to the large feature maps in a TDNN. To address these issues, we propose the Global Filter for TDNN, which applies log-linear complexity FFT/IFFT and a set of differentiable frequency-domain filters to efficiently model the long-term dependencies in speech. In addition, a dynamic filtering strategy and a sparse regularization method are specially designed to enhance the performance of the global filter and prevent it from overfitting. Furthermore, we construct a dual-stream TDNN (DS-TDNN), which splits the basic channels for complexity reduction and employs the global filter to increase recognition performance. Experiments on the VoxCeleb and SITW databases show that the DS-TDNN achieves approximately 10% improvement while reducing complexity and parameters by over 28% and 15%, respectively, compared with the ECAPA-TDNN. It also has the best trade-off between efficiency and effectiveness compared with other popular baseline systems when facing long-duration speech. Finally, visualizations and a detailed ablation study further reveal the advantages of the DS-TDNN.
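
The frequency-domain filtering step named in this abstract can be sketched in a few lines of NumPy. This is an assumption-laden illustration, not the DS-TDNN implementation: the function name `global_filter`, the `(channels, frames)` layout, and the fixed filter array are all hypothetical, and the dynamic filtering and sparse regularization described in the abstract are omitted. In a trained model the filter would be a learnable parameter rather than a constant.

```python
import numpy as np

def global_filter(x, freq_filter):
    """Filter each channel over the whole time axis via FFT -> multiply -> IFFT.

    x:           real array of shape (channels, frames)
    freq_filter: complex array of shape (channels, frames // 2 + 1),
                 one weight per channel and frequency bin (assumed layout)
    """
    spec = np.fft.rfft(x, axis=-1)       # O(T log T) per channel
    filtered = spec * freq_filter        # element-wise frequency weighting
    return np.fft.irfft(filtered, n=x.shape[-1], axis=-1)

channels, frames = 4, 16
x = np.arange(channels * frames, dtype=float).reshape(channels, frames)

# An all-ones filter leaves the signal unchanged.
identity = np.ones((channels, frames // 2 + 1), dtype=complex)
y_same = global_filter(x, identity)

# Keeping only the DC bin replaces every frame with the channel mean,
# showing that a single multiply mixes information from all frames.
dc_only = np.zeros_like(identity)
dc_only[:, 0] = 1.0
y_mean = global_filter(x, dc_only)
```

Multiplying in the frequency domain corresponds to a circular convolution over the full sequence, so every output frame depends on every input frame at the cost of two FFTs instead of a quadratic pairwise comparison.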

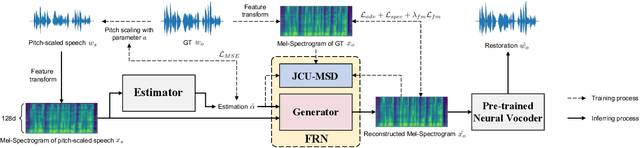

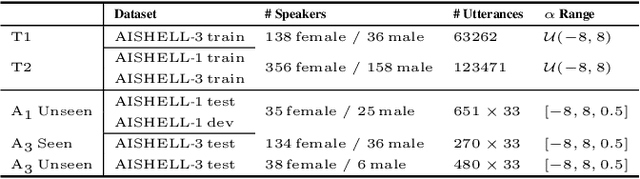

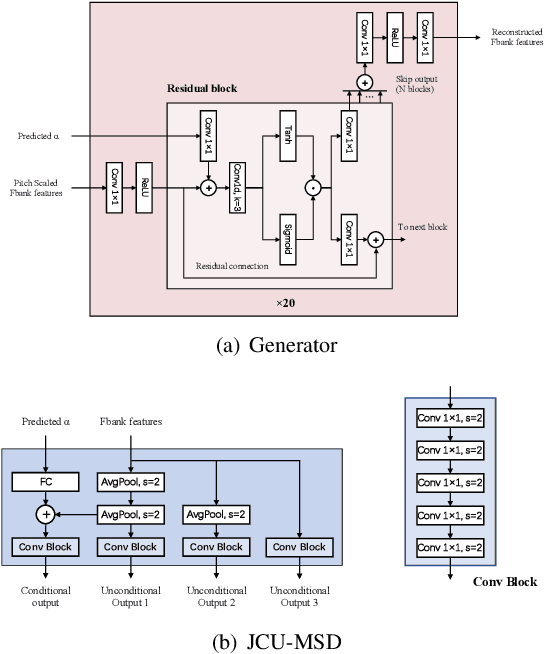

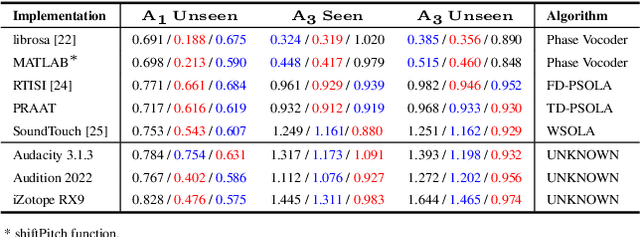

PSVRF: Learning to restore Pitch-Shifted Voice without reference

Oct 06, 2022

Abstract:Pitch scaling algorithms have a significant impact on the security of Automatic Speaker Verification (ASV) systems. Although numerous anti-spoofing algorithms have been proposed to identify pitch-shifted voice and even restore it to the original version, they either perform poorly or require the original voice as a reference, which limits their practical application. In this paper, we propose a no-reference approach termed PSVRF for high-quality restoration of pitch-shifted voice. Experiments on AISHELL-1 and AISHELL-3 demonstrate that PSVRF can restore voice disguised by various pitch-scaling techniques, which markedly enhances the robustness of ASV systems to pitch-scaling attacks. Furthermore, the performance of PSVRF even surpasses that of the state-of-the-art reference-based approach.
