Xu Xuesong

Building a Question Answering System for the Manufacturing Domain

Nov 19, 2021

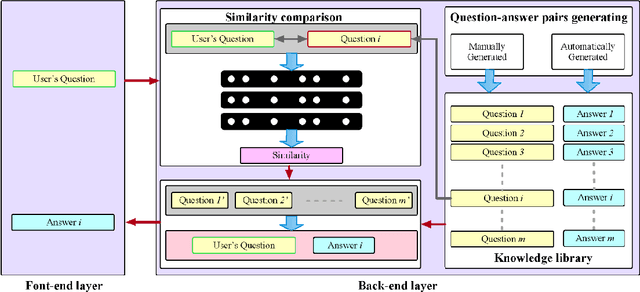

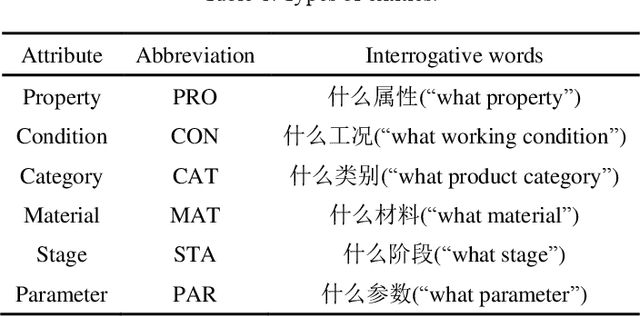

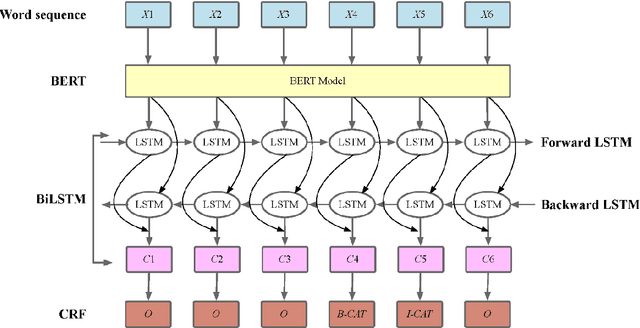

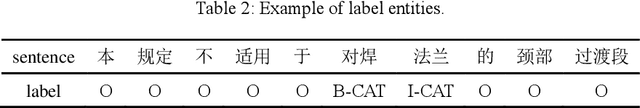

Abstract: The design or simulation analysis of special equipment products must follow national standards, so designers may need to consult the contents of these standards repeatedly during the design process. However, traditional question answering systems based on keyword retrieval have difficulty giving accurate answers to technical questions. We therefore use natural language processing techniques to design a question answering system that supports decision-making in pressure vessel design. To address the shortage of training data for technical question answering, we propose a method that generates questions from a declarative sentence along several different dimensions, so that multiple question-answer pairs can be obtained from a single declarative sentence. In addition, we design an interactive attention model based on a bidirectional long short-term memory (BiLSTM) network to improve the similarity comparison between two question sentences. Finally, we test the performance of the question answering system on public and technical-domain datasets.
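The abstract does not give the model's equations. The sketch below therefore shows one generic form of interactive (cross-sentence) attention for comparing two questions, and it should not be read as the authors' model. It assumes each question has already been encoded into per-token vectors, which stand in for BiLSTM hidden states and are not computed here. Each token attends over the other question with dot-product attention. The score is the average cosine agreement between each token and its soft-aligned counterpart. All function names are hypothetical.

```python
import math
from typing import List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def softmax(scores: List[float]) -> List[float]:
    # Subtract the max score for numerical stability
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(queries: List[Vector], keys: List[Vector]) -> List[Vector]:
    """For each query token, return the attention-weighted sum of the key tokens."""
    dim = len(keys[0])
    aligned = []
    for q in queries:
        weights = softmax([dot(q, k) for k in keys])
        aligned.append([sum(w * k[d] for w, k in zip(weights, keys)) for d in range(dim)])
    return aligned


def cosine(a: Vector, b: Vector) -> float:
    na, nb = math.sqrt(dot(a, a)), math.sqrt(dot(b, b))
    return dot(a, b) / (na * nb) if na and nb else 0.0


def match_score(tokens: List[Vector], aligned: List[Vector]) -> float:
    # Average agreement between each token and its soft-aligned counterpart
    return sum(cosine(t, a) for t, a in zip(tokens, aligned)) / len(tokens)


def interactive_similarity(h1: List[Vector], h2: List[Vector]) -> float:
    """Symmetric similarity in [-1, 1] between two encoded questions.

    h1, h2: per-token vectors standing in for BiLSTM hidden states
    (assumed precomputed; the encoder itself is not part of this sketch).
    """
    a1 = attend(h1, h2)  # each token of question 1 attends over question 2
    a2 = attend(h2, h1)  # each token of question 2 attends over question 1
    return 0.5 * (match_score(h1, a1) + match_score(h2, a2))


q = [[1.0, 0.0], [0.0, 1.0]]
opposite = [[-1.0, 0.0], [0.0, -1.0]]
print(interactive_similarity(q, q))
print(interactive_similarity(q, opposite))
```

With these toy encodings, a question compared with itself scores close to 1. The "opposite" encoding scores below zero. In a trained system, the encoder and any scoring layer would be learned rather than fixed as they are here.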

MLPerf Tiny Benchmark

Jun 28, 2021

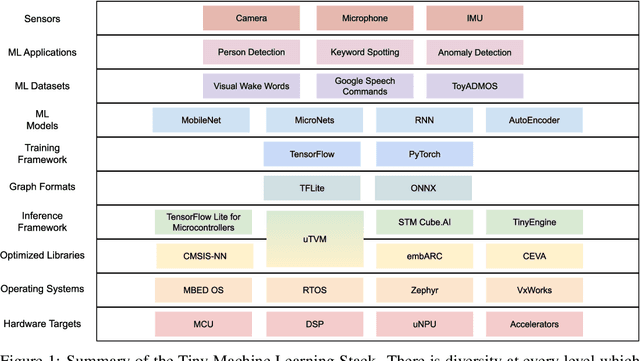

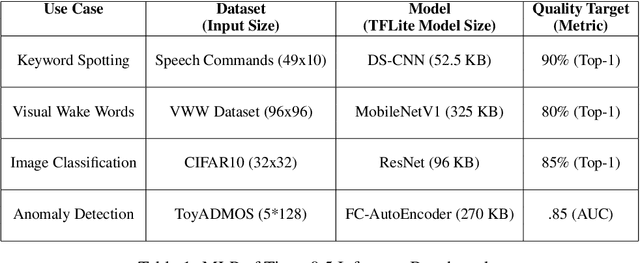

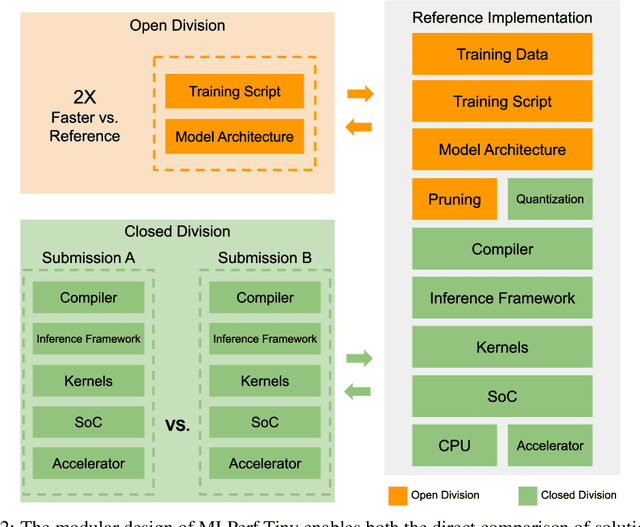

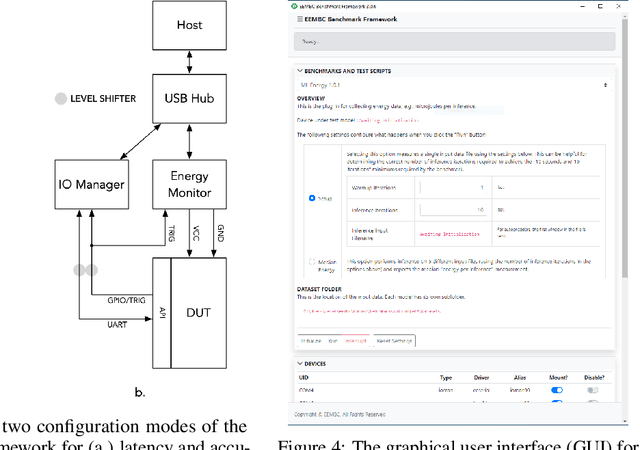

Abstract: Advancements in ultra-low-power tiny machine learning (TinyML) systems promise to unlock an entirely new class of smart applications. However, continued progress is limited by the lack of a widely accepted and easily reproducible benchmark for these systems. To meet this need, we present MLPerf Tiny, the first industry-standard benchmark suite for ultra-low-power tiny machine learning systems. The benchmark suite is the collaborative effort of more than 50 organizations from industry and academia and reflects the needs of the community. MLPerf Tiny measures the accuracy, latency, and energy of machine learning inference to properly evaluate the tradeoffs between systems. Additionally, MLPerf Tiny implements a modular design that enables benchmark submitters to show the benefits of their product, regardless of where it falls on the ML deployment stack, in a fair and reproducible manner. The suite features four benchmarks: keyword spotting, visual wake words, image classification, and anomaly detection.
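To make the latency and energy metrics concrete, the sketch below derives both from a power trace. It assumes the trace was recorded while a device ran a fixed number of back-to-back inferences. It is not the MLPerf Tiny measurement harness. The trace format and the `summarize_run` function are hypothetical. Energy is the integral of power over time, approximated here with the trapezoidal rule, and each metric is then divided by the inference count.

```python
from typing import List, Tuple


def summarize_run(samples: List[Tuple[float, float]], inferences: int) -> Tuple[float, float]:
    """Return (mean latency in ms, energy per inference in microjoules).

    samples: (time_s, power_w) readings taken during `inferences`
    back-to-back inferences (a hypothetical trace format).
    """
    if len(samples) < 2 or inferences <= 0:
        raise ValueError("need at least two samples and one inference")
    # Trapezoidal integration of power over time gives total energy in joules
    energy_j = sum(
        0.5 * (p0 + p1) * (t1 - t0)
        for (t0, p0), (t1, p1) in zip(samples, samples[1:])
    )
    duration_s = samples[-1][0] - samples[0][0]
    latency_ms = duration_s / inferences * 1e3
    energy_uj = energy_j / inferences * 1e6
    return latency_ms, energy_uj


# A constant 2 mW draw over 1 s spanning 100 inferences
trace = [(0.0, 0.002), (0.5, 0.002), (1.0, 0.002)]
latency_ms, energy_uj = summarize_run(trace, inferences=100)
print(f"{latency_ms:.1f} ms, {energy_uj:.1f} uJ per inference")
```

For this constant 2 mW trace, the run prints `10.0 ms, 20.0 uJ per inference`. The example shows why latency and energy are reported together: at a fixed power draw, halving the latency also halves the energy per inference.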
