Xingyi Liu

The Rise of Small Language Models in Healthcare: A Comprehensive Survey

Apr 23, 2025

Abstract:Despite substantial progress in healthcare applications driven by large language models (LLMs), concerns around data privacy and limited resources are growing; small language models (SLMs) offer a scalable and clinically viable solution for efficient performance in resource-constrained environments for next-generation healthcare informatics. Our comprehensive survey presents a taxonomic framework to identify and categorize them for healthcare professionals and informaticians. The timeline of healthcare SLM contributions establishes a foundational framework for analyzing models across three dimensions: NLP tasks, stakeholder roles, and the continuum of care. We present a taxonomic framework to identify the architectural foundations for building models from scratch; adapting SLMs to clinical precision through prompting, instruction fine-tuning, and reasoning; and accessibility and sustainability through compression techniques. Our primary objective is to offer a comprehensive survey for healthcare professionals, introducing recent innovations in model optimization and equipping them with curated resources to support future research and development in the field. Aiming to showcase the groundbreaking advancements in SLMs for healthcare, we present a comprehensive compilation of experimental results across widely studied NLP tasks in healthcare to highlight the transformative potential of SLMs in healthcare. The updated repository is available on GitHub.

Tensor Decomposition for Model Reduction in Neural Networks: A Review

Apr 26, 2023

Abstract:Modern neural networks have revolutionized the fields of computer vision (CV) and Natural Language Processing (NLP). They are widely used for solving complex CV tasks and NLP tasks such as image classification, image generation, and machine translation. Most state-of-the-art neural networks are over-parameterized and require a high computational cost. One straightforward solution is to replace the layers of the networks with their low-rank tensor approximations using different tensor decomposition methods. This paper reviews six tensor decomposition methods and illustrates their ability to compress model parameters of convolutional neural networks (CNNs), recurrent neural networks (RNNs) and Transformers. The accuracy of some compressed models can be higher than the original versions. Evaluations indicate that tensor decompositions can achieve significant reductions in model size, run-time and energy consumption, and are well suited for implementing neural networks on edge devices.
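To make the idea of replacing a layer with a low-rank approximation concrete, here is a minimal sketch of the parameter arithmetic for the simplest case, a plain rank-r matrix factorization of one dense layer. This is an illustration under stated assumptions, not one of the six methods the paper reviews (the abstract does not name them); the function names and the layer sizes are hypothetical.

```python
# Illustrative only: parameter counts for a dense layer W (m x n)
# versus a rank-r factorization W ~= A @ B with A (m x r) and B (r x n).
# Function names and sizes are hypothetical, chosen for this sketch.


def dense_params(m: int, n: int) -> int:
    """Number of weights in a dense m x n matrix (bias ignored)."""
    return m * n


def low_rank_params(m: int, n: int, r: int) -> int:
    """Number of weights in the two factors A (m x r) and B (r x n)."""
    return r * (m + n)


def compression_ratio(m: int, n: int, r: int) -> float:
    """How many times smaller the factorized layer is than the dense one."""
    return dense_params(m, n) / low_rank_params(m, n, r)


if __name__ == "__main__":
    m, n, r = 1024, 1024, 64  # assumed layer shape and rank
    print(dense_params(m, n))          # 1048576
    print(low_rank_params(m, n, r))    # 131072
    print(compression_ratio(m, n, r))  # 8.0
```

The factorization only saves parameters when r is smaller than mn / (m + n); how much accuracy survives at a given rank depends on the model and is not something this arithmetic can show.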

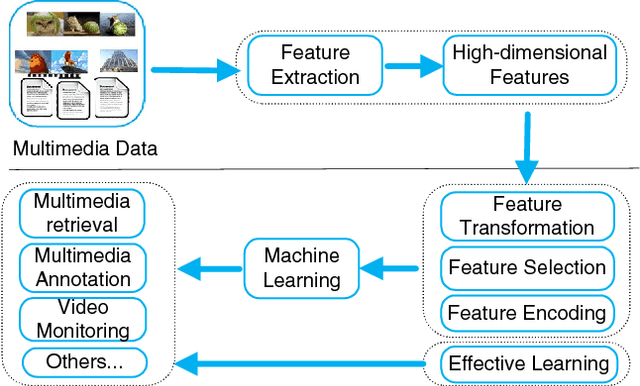

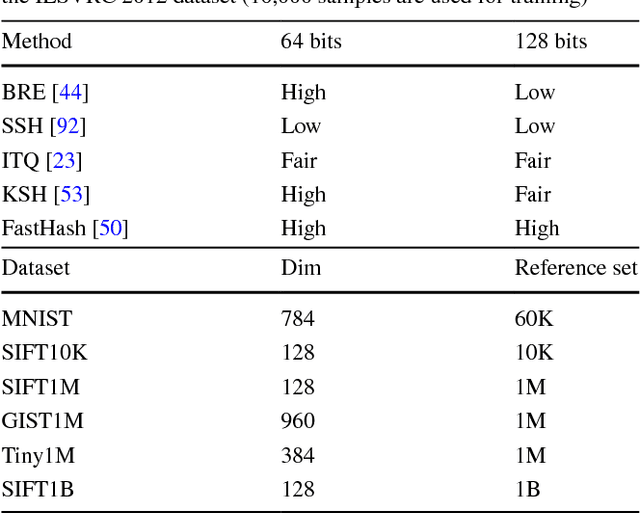

Learning in High-Dimensional Multimedia Data: The State of the Art

Jul 10, 2017

Abstract:During the last decade, the deluge of multimedia data has impacted a wide range of research areas, including multimedia retrieval, 3D tracking, database management, data mining, machine learning, social media analysis, medical imaging, and so on. Machine learning is heavily involved in multimedia applications that build models for tasks such as classification and regression, and the learning principle consists in designing the models based on the information contained in the multimedia dataset. While many paradigms exist and are widely used in the context of machine learning, most of them suffer from the "curse of dimensionality", which means that some strange phenomena appear when data are represented in a high-dimensional space. Given the high dimensionality and the high complexity of multimedia data, it is important to investigate new machine learning algorithms to facilitate multimedia data analysis. To deal with the impact of high dimensionality, an intuitive way is to reduce the dimensionality. On the other hand, some researchers have devoted themselves to designing effective learning schemes for high-dimensional data. In this survey, we cover feature transformation, feature selection and feature encoding, three approaches to fighting the consequences of the curse of dimensionality. Next, we briefly introduce some recent progress in effective learning algorithms. Finally, promising future trends in multimedia learning are envisaged.
