Xiangheng Xie

HetEmotionNet: Two-Stream Heterogeneous Graph Recurrent Neural Network for Multi-modal Emotion Recognition

Aug 07, 2021

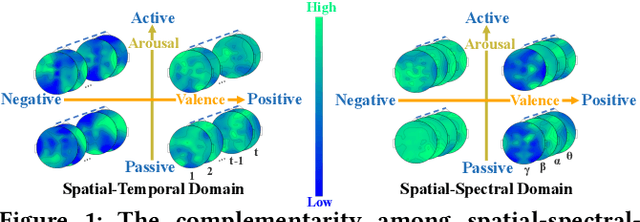

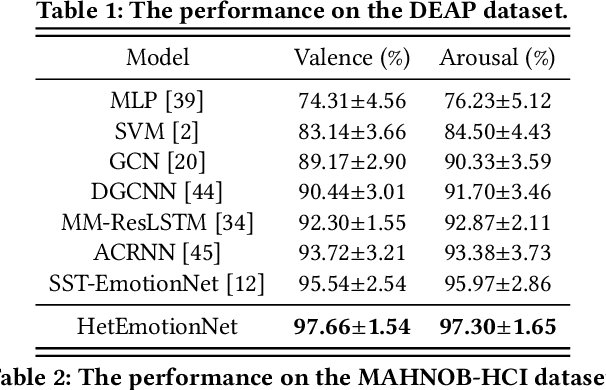

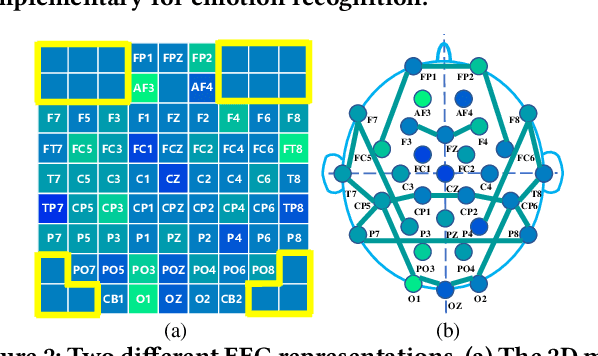

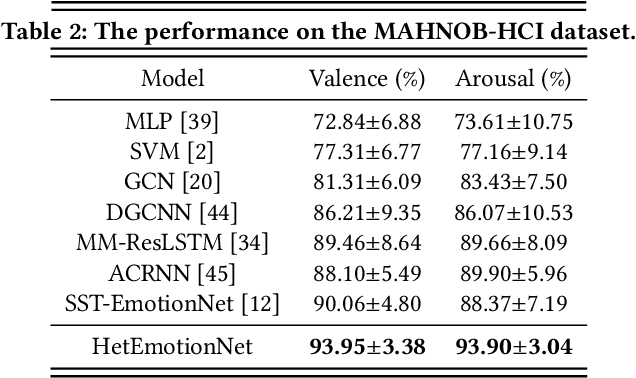

Abstract:The research on human emotion under multimedia stimulation based on physiological signals is an emerging field, and important progress has been achieved for emotion recognition based on multi-modal signals. However, it is challenging to make full use of the complementarity among spatial-spectral-temporal domain features for emotion recognition, as well as model the heterogeneity and correlation among multi-modal signals. In this paper, we propose a novel two-stream heterogeneous graph recurrent neural network, named HetEmotionNet, fusing multi-modal physiological signals for emotion recognition. Specifically, HetEmotionNet consists of the spatial-temporal stream and the spatial-spectral stream, which can fuse spatial-spectral-temporal domain features in a unified framework. Each stream is composed of the graph transformer network for modeling the heterogeneity, the graph convolutional network for modeling the correlation, and the gated recurrent unit for capturing the temporal domain or spectral domain dependency. Extensive experiments on two real-world datasets demonstrate that our proposed model achieves better performance than state-of-the-art baselines.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge