Weiguang Huo

K2MUSE: A human lower limb multimodal dataset under diverse conditions for facilitating rehabilitation robotics

Apr 20, 2025

Abstract: The natural interaction and control performance of lower limb rehabilitation robots are closely linked to biomechanical information from various human locomotion activities. Multidimensional human motion data significantly deepen the understanding of the complex mechanisms governing neuromuscular alterations, thereby facilitating the development and application of rehabilitation robots in multifaceted real-world environments. However, currently available lower limb datasets are inadequate for supplying the essential multimodal data and large-scale gait samples necessary for effective data-driven approaches, and they neglect the significant effects of acquisition interference in real applications. To fill this gap, we present the K2MUSE dataset, which includes a comprehensive collection of multimodal data, comprising kinematic, kinetic, amplitude-mode ultrasound (AUS), and surface electromyography (sEMG) measurements. The proposed dataset includes lower limb multimodal data from 30 able-bodied participants walking under different inclines (0$^\circ$, $\pm$5$^\circ$, and $\pm$10$^\circ$), various speeds (0.5 m/s, 1.0 m/s, and 1.5 m/s), and different nonideal acquisition conditions (muscle fatigue, electrode shifts, and inter-day differences). The kinematic and ground reaction force data were collected via a Vicon motion capture system and an instrumented treadmill with embedded force plates, whereas the sEMG and AUS data were synchronously recorded for thirteen muscles on the bilateral lower limbs. This dataset offers a new resource for designing control frameworks for rehabilitation robots and conducting biomechanical analyses of lower limb locomotion. The dataset is available at https://k2muse.github.io/.

Model Predictive Control for Human-Centred Lower Limb Robotic Assistance

Nov 10, 2020

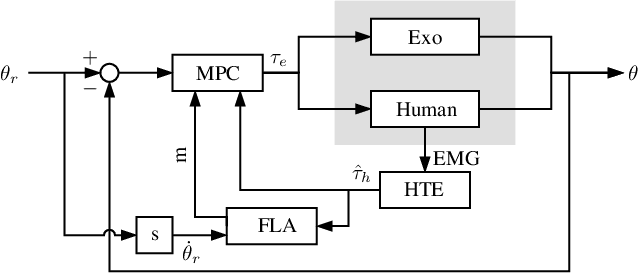

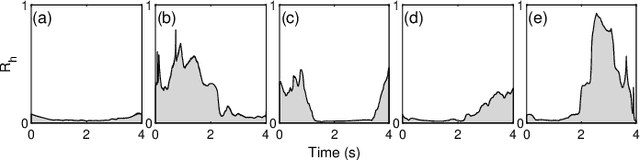

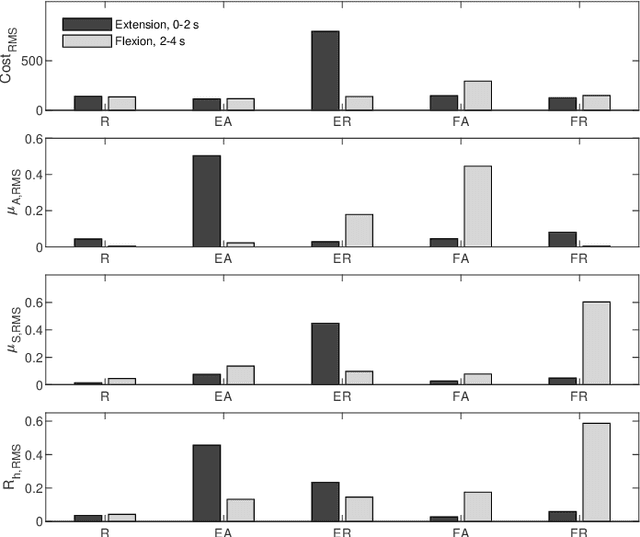

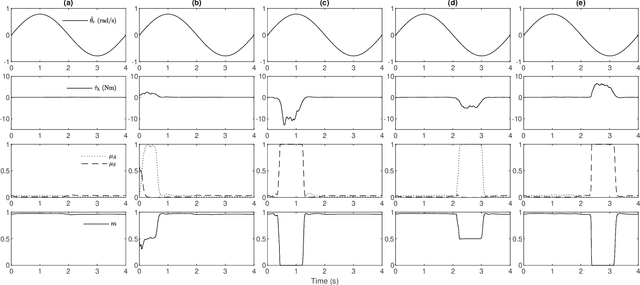

Abstract: Loss of mobility or balance resulting from neural trauma is a critical public health concern. Robotic exoskeletons hold great potential for rehabilitation and assisted movement, yet optimal assist-as-needed (AAN) control remains unresolved given the pathological variance among patients. We introduce a model predictive control (MPC) architecture for lower limb exoskeletons centred on a fuzzy logic algorithm (FLA) that identifies the mode of assistance based on human involvement. The assistance modes are: 1) passive, when the human is relaxed and the robot is dominant; 2) active-assist, when the human cooperates with the task; and 3) safety, when the human resists the robot. Human torque is estimated from electromyography (EMG) signals, which precede joint motion, enabling the MPC to predict torque in advance and the FLA to select the assistance mode. The controller is demonstrated in hardware with three subjects on a 1-DOF knee exoskeleton tracking a sinusoidal trajectory with the human relaxed, assistive, and resistive. Experimental results show quick and appropriate transitions among the assistance modes and satisfactory assistive performance in each mode. The results illustrate an objective approach to lower limb robotic assistance through on-the-fly transitions between modes of movement, providing a new level of human-robot synergy for mobility assistance and rehabilitation.
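To make the three-mode selection more concrete, here is a minimal, hypothetical sketch of fuzzy mode selection. It is not the authors' FLA. The inputs (an EMG-estimated knee torque `tau_h` and the sign of the desired joint velocity), the linear membership function, the thresholds `tau_low`/`tau_high`, and all function names are illustrative assumptions. Torque magnitude is fuzzified, while the alignment between human torque and desired motion is kept crisp for simplicity.

```python
"""Hypothetical sketch of fuzzy assistance-mode selection.

Assumptions (not from the paper): inputs are an EMG-estimated human knee
torque tau_h (N*m) and the desired joint velocity; membership shapes and
thresholds are illustrative only.
"""
from enum import Enum


class Mode(Enum):
    PASSIVE = "passive"              # human relaxed, robot dominant
    ACTIVE_ASSIST = "active-assist"  # human cooperating with the task
    SAFETY = "safety"                # human resisting the robot


def ramp_up(x: float, a: float, b: float) -> float:
    """Membership rising linearly from 0 at x <= a to 1 at x >= b."""
    if x <= a:
        return 0.0
    if x >= b:
        return 1.0
    return (x - a) / (b - a)


def select_mode(tau_h: float, desired_velocity: float,
                tau_low: float = 1.0, tau_high: float = 3.0):
    """Return the winning mode and the firing strength of each rule."""
    # Degree to which the human is actively producing torque.
    active = ramp_up(abs(tau_h), tau_low, tau_high)
    relaxed = 1.0 - active
    # Crisp alignment: 1 if human torque pushes along the desired motion.
    aligned = 1.0 if tau_h * desired_velocity > 0 else 0.0
    degrees = {
        Mode.PASSIVE: relaxed,
        # Fuzzy AND implemented as the minimum of memberships.
        Mode.ACTIVE_ASSIST: min(active, aligned),
        Mode.SAFETY: min(active, 1.0 - aligned),
    }
    # Pick the rule with the highest firing strength (max-membership).
    best = max(degrees, key=degrees.get)
    return best, degrees


if __name__ == "__main__":
    for tau in (0.2, 5.0, -5.0):
        mode, _ = select_mode(tau, desired_velocity=1.0)
        print(tau, mode.value)
```

Choosing the rule with the highest firing strength is one simple way to map fuzzy degrees to a discrete mode; a hardware controller would likely also need hysteresis or smoothing to avoid chattering between modes near the thresholds.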
