Wayne E. Mackey

Cross-View World Models

Feb 07, 2026

Abstract: World models enable agents to plan by imagining future states, but existing approaches operate from a single viewpoint, typically egocentric, even when other perspectives would make planning easier; navigation, for instance, benefits from a bird's-eye view. We introduce Cross-View World Models (XVWM), trained with a cross-view prediction objective: given a sequence of frames from one viewpoint, predict the future state from the same or a different viewpoint after an action is taken. Enforcing cross-view consistency acts as geometric regularization: because the input and output views may share little or no visual overlap, to predict across viewpoints, the model must learn view-invariant representations of the environment's 3D structure. We train on synchronized multi-view gameplay data from Aimlabs, an aim-training platform providing precisely aligned multi-camera recordings with high-frequency action labels. The resulting model gives agents parallel imagination streams across viewpoints, enabling planning in whichever frame of reference best suits the task while executing from the egocentric view. Our results show that multi-view consistency provides a strong learning signal for spatially grounded representations. Finally, predicting the consequences of one's actions from another viewpoint may offer a foundation for perspective-taking in multi-agent settings.
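The cross-view prediction objective described in this abstract can be illustrated with a minimal data-sampling sketch. It only shows how one training example might be assembled from synchronized multi-view recordings: context frames from a source view, the action taken, and the next frame from a target view that may be the same or a different view. The names `CrossViewSample` and `make_cross_view_sample`, the dictionary layout, and the uniform choice of views are all assumptions made for illustration; the abstract does not describe the authors' actual pipeline.

```python
import random
from dataclasses import dataclass
from typing import Any, Dict, List, Sequence


@dataclass
class CrossViewSample:
    """One hypothetical training example for cross-view prediction."""
    source_view: str    # view the context frames come from
    target_view: str    # view the prediction target comes from
    context: List[Any]  # past frames from source_view, ending at time t
    action: Any         # action taken at time t
    target: Any         # frame at time t + 1 from target_view


def make_cross_view_sample(
    frames: Dict[str, Sequence[Any]],  # view name -> time-aligned frames
    actions: Sequence[Any],            # actions[t] is taken at time t
    t: int,
    context_len: int,
    rng: random.Random,
) -> CrossViewSample:
    """Pick source and target views (possibly identical) and slice the data.

    Assumes all views are synchronized, so index t refers to the same
    instant in every view.
    """
    views = sorted(frames)
    n_frames = min(len(frames[v]) for v in views)
    if context_len < 1 or t - context_len + 1 < 0:
        raise ValueError("not enough history for the requested context")
    if t + 1 >= n_frames or t >= len(actions):
        raise ValueError("no future frame or action available at time t")

    source_view = rng.choice(views)
    target_view = rng.choice(views)  # same-view prediction is allowed
    context = list(frames[source_view][t - context_len + 1 : t + 1])
    return CrossViewSample(
        source_view=source_view,
        target_view=target_view,
        context=context,
        action=actions[t],
        target=frames[target_view][t + 1],
    )
```

In this sketch a model would be trained to map `(context, action, target_view)` to `target`. When the source and target views differ, the input and target frames may share little visual content, which is the situation the abstract credits with encouraging view-invariant representations.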

ORGaNICs: A Theory of Working Memory in Brains and Machines

May 25, 2018

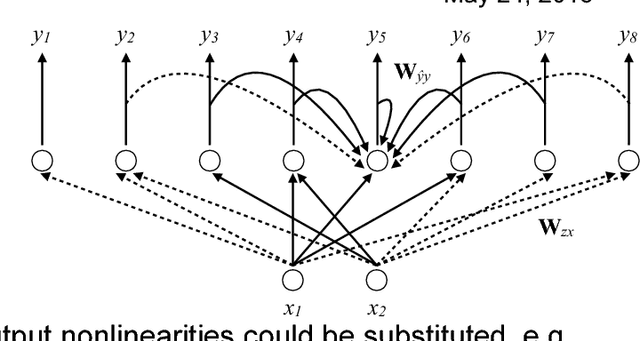

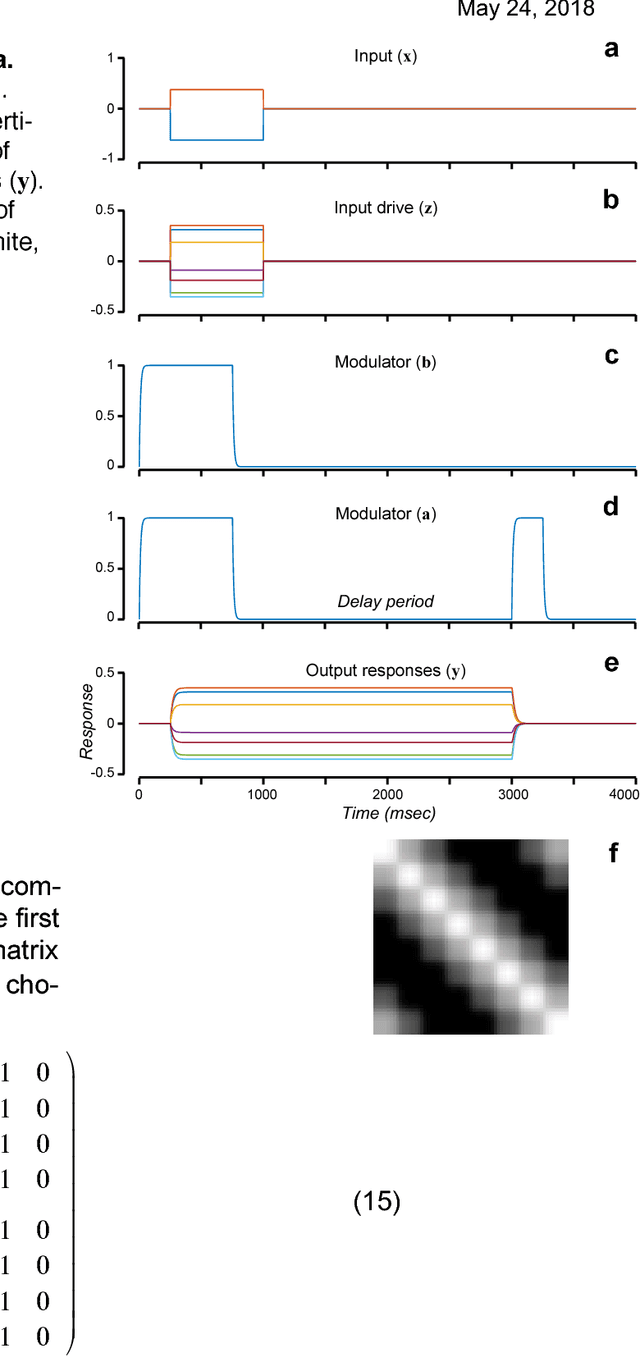

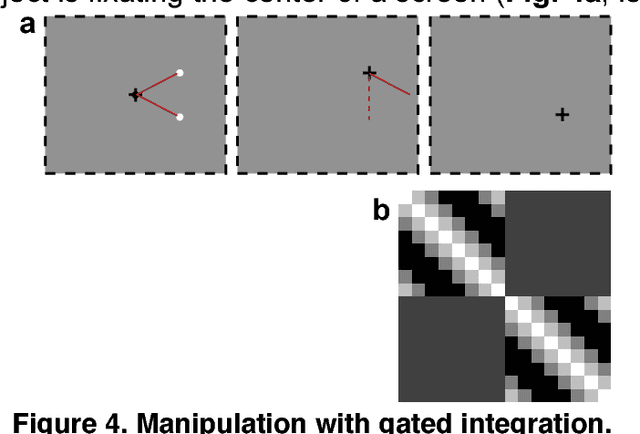

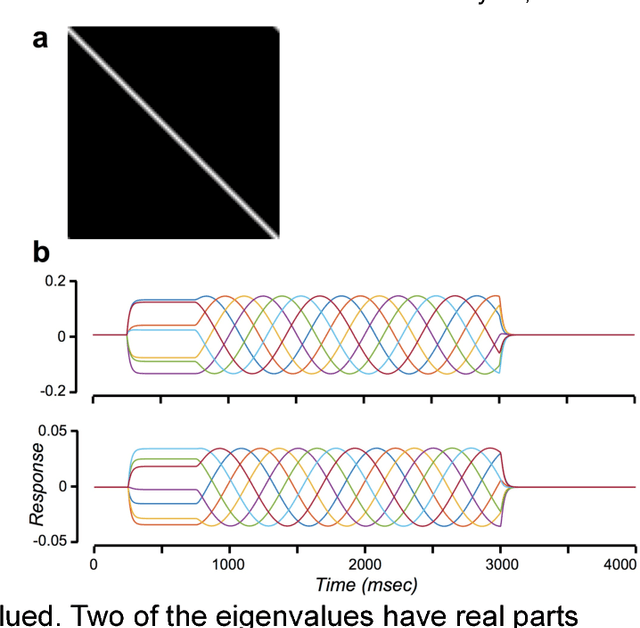

Abstract: Working memory is a cognitive process that is responsible for temporarily holding and manipulating information. Most of the empirical neuroscience research on working memory has focused on measuring sustained activity in prefrontal cortex (PFC) and/or parietal cortex during simple delayed-response tasks, and most of the models of working memory have been based on neural integrators. But working memory means much more than just holding a piece of information online. We describe a new theory of working memory, based on a recurrent neural circuit that we call ORGaNICs (Oscillatory Recurrent GAted Neural Integrator Circuits). ORGaNICs are a variety of Long Short Term Memory units (LSTMs), imported from machine learning and artificial intelligence. ORGaNICs can be used to explain the complex dynamics of delay-period activity in prefrontal cortex (PFC) during a working memory task. The theory is analytically tractable so that we can characterize the dynamics, and the theory provides a means for reading out information from the dynamically varying responses at any point in time, in spite of the complex dynamics. ORGaNICs can be implemented with a biophysical (electrical circuit) model of pyramidal cells, combined with shunting inhibition via a thalamocortical loop. Although introduced as a computational theory of working memory, ORGaNICs are also applicable to models of sensory processing, motor preparation and motor control. ORGaNICs offer computational advantages compared to other varieties of LSTMs that are commonly used in AI applications. Consequently, ORGaNICs are a framework for canonical computation in brains and machines.
