Trung-Nghia Le

Handling Supervision Scarcity in Chest X-ray Classification: Long-Tailed and Zero-Shot Learning

Feb 13, 2026

Abstract: Chest X-ray (CXR) classification in clinical practice is often limited by imperfect supervision, arising from (i) extreme long-tailed multi-label disease distributions and (ii) missing annotations for rare or previously unseen findings. The CXR-LT 2026 challenge addresses these issues on a PadChest-based benchmark with a 36-class label space split into 30 in-distribution classes for training and 6 out-of-distribution (OOD) classes for zero-shot evaluation. We present task-specific solutions tailored to the distinct supervision regimes. For Task 1 (long-tailed multi-label classification), we adopt an imbalance-aware multi-label learning strategy to improve recognition of tail classes while maintaining stable performance on frequent findings. For Task 2 (zero-shot OOD recognition), we propose a prediction approach that produces scores for unseen disease categories without using any supervised labels or examples from the OOD classes during training. Evaluated with macro-averaged mean Average Precision (mAP), our method achieves strong performance on both tasks, ranking first on the public leaderboard of the development phase. Code and pre-trained models are available at https://github.com/hieuphamha19/CXR_LT.
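
The macro-averaged mAP named in the abstract above can be illustrated with a minimal sketch. This is not the challenge's official scorer: the function names `average_precision` and `macro_map` are hypothetical, and assigning an AP of 0 to a class with no positive samples is an assumption (some evaluators skip such classes instead).

```python
from typing import List, Sequence


def average_precision(labels: Sequence[int], scores: Sequence[float]) -> float:
    """AP for one class: mean of the precision measured at each positive's rank."""
    # Rank samples from highest to lowest predicted score.
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    hits = 0
    precision_sum = 0.0
    for rank, idx in enumerate(order, start=1):
        if labels[idx] == 1:
            hits += 1
            precision_sum += hits / rank
    # Assumption: a class without positives contributes 0 rather than being skipped.
    return precision_sum / hits if hits else 0.0


def macro_map(label_matrix: List[List[int]], score_matrix: List[List[float]]) -> float:
    """Unweighted mean of per-class AP; rows are images, columns are classes."""
    num_classes = len(label_matrix[0])
    per_class = []
    for c in range(num_classes):
        col_labels = [row[c] for row in label_matrix]
        col_scores = [row[c] for row in score_matrix]
        per_class.append(average_precision(col_labels, col_scores))
    return sum(per_class) / num_classes


# Toy data: 4 images, 2 classes (values are made up for illustration).
labels = [[1, 0], [0, 1], [1, 0], [0, 0]]
scores = [[0.9, 0.2], [0.8, 0.7], [0.3, 0.1], [0.1, 0.4]]
result = macro_map(labels, scores)
```

Because every class carries equal weight in the mean, a rare finding influences the score as much as a frequent one, which is why the metric suits long-tailed label distributions.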

GenKOL: Modular Generative AI Framework For Scalable Virtual KOL Generation

Sep 18, 2025

Abstract: Key Opinion Leaders (KOLs) play a crucial role in modern marketing by shaping consumer perceptions and enhancing brand credibility. However, collaborating with human KOLs often involves high costs and logistical challenges. To address this, we present GenKOL, an interactive system that empowers marketing professionals to efficiently generate high-quality virtual KOL images using generative AI. GenKOL enables users to dynamically compose promotional visuals through an intuitive interface that integrates multiple AI capabilities, including garment generation, makeup transfer, background synthesis, and hair editing. These capabilities are implemented as modular, interchangeable services that can be deployed flexibly on local machines or in the cloud. This modular architecture ensures adaptability across diverse use cases and computational environments. Our system can significantly streamline the production of branded content, lowering costs and accelerating marketing workflows through scalable virtual KOL creation.

Event-Enriched Image Analysis Grand Challenge at ACM Multimedia 2025

Aug 26, 2025

Abstract: The Event-Enriched Image Analysis (EVENTA) Grand Challenge, hosted at ACM Multimedia 2025, introduces the first large-scale benchmark for event-level multimodal understanding. Traditional captioning and retrieval tasks largely focus on surface-level recognition of people, objects, and scenes, often overlooking the contextual and semantic dimensions that define real-world events. EVENTA addresses this gap by integrating contextual, temporal, and semantic information to capture the who, when, where, what, and why behind an image. Built upon the OpenEvents V1 dataset, the challenge features two tracks: Event-Enriched Image Retrieval and Captioning, and Event-Based Image Retrieval. A total of 45 teams from six countries participated, with evaluation conducted through Public and Private Test phases to ensure fairness and reproducibility. The top three teams were invited to present their solutions at ACM Multimedia 2025. EVENTA establishes a foundation for context-aware, narrative-driven multimedia AI, with applications in journalism, media analysis, cultural archiving, and accessibility. Further details about the challenge are available at the official homepage: https://ltnghia.github.io/eventa/eventa-2025.

SHREC 2025: Retrieval of Optimal Objects for Multi-modal Enhanced Language and Spatial Assistance (ROOMELSA)

Aug 12, 2025

Abstract: Recent 3D retrieval systems are typically designed for simple, controlled scenarios, such as identifying an object from a cropped image or a brief description. However, real-world scenarios are more complex, often requiring the recognition of an object in a cluttered scene based on a vague, free-form description. To this end, we present ROOMELSA, a new benchmark designed to evaluate a system's ability to interpret natural language. Specifically, ROOMELSA attends to a specific region within a panoramic room image and accurately retrieves the corresponding 3D model from a large database. In addition, ROOMELSA includes over 1,600 apartment scenes, nearly 5,200 rooms, and more than 44,000 targeted queries. Empirically, while coarse object retrieval is largely solved, only one top-performing model consistently ranked the correct match first across nearly all test cases. Notably, a lightweight CLIP-based model also performed well, although it struggled with subtle variations in materials, part structures, and contextual cues, resulting in occasional errors. These findings highlight the importance of tightly integrating visual and language understanding. By bridging the gap between scene-level grounding and fine-grained 3D retrieval, ROOMELSA establishes a new benchmark for advancing robust, real-world 3D recognition systems.
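
Embedding-based retrieval of the kind a CLIP-style model performs can be sketched as ranking database items by cosine similarity to a query embedding. This is only an illustration under stated assumptions: the abstract does not describe any participant's pipeline, the helper names `cosine` and `rank_models` are hypothetical, and the two-dimensional vectors are made up rather than produced by a real encoder.

```python
import math
from typing import Dict, List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two vectors; 0.0 if either has zero norm."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def rank_models(query: Sequence[float], database: Dict[str, Sequence[float]]) -> List[str]:
    """Return database keys ordered from most to least similar to the query."""
    return sorted(database, key=lambda name: cosine(query, database[name]), reverse=True)


# Hypothetical embeddings: a query description and three candidate 3D models.
query_embedding = [1.0, 0.0]
model_embeddings = {
    "chair": [0.9, 0.1],
    "lamp": [0.0, 1.0],
    "sofa": [0.5, 0.5],
}
ranking = rank_models(query_embedding, model_embeddings)
```

A single global embedding per item captures coarse category well, which is consistent with the abstract's observation that fine distinctions in material or part structure are the harder cases.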

GenFlow: Interactive Modular System for Image Generation

Jun 26, 2025

Abstract: Generative art unlocks boundless creative possibilities, yet its full potential remains untapped due to the technical expertise required for advanced architectural concepts and computational workflows. To bridge this gap, we present GenFlow, a novel modular framework that empowers users of all skill levels to generate images with precision and ease. Featuring a node-based editor for seamless customization and an intelligent assistant powered by natural language processing, GenFlow transforms the complexity of workflow creation into an intuitive and accessible experience. By automating deployment processes and minimizing technical barriers, our framework makes cutting-edge generative art tools available to everyone. A user study demonstrated GenFlow's ability to optimize workflows, reduce task completion times, and enhance user understanding through its intuitive interface and adaptive features. These results position GenFlow as a groundbreaking solution that redefines accessibility and efficiency in the realm of generative art.

VisionGuard: Synergistic Framework for Helmet Violation Detection

Jun 26, 2025

Abstract: Enforcing helmet regulations among motorcyclists is essential for enhancing road safety and ensuring the effectiveness of traffic management systems. However, automatic detection of helmet violations faces significant challenges due to environmental variability, camera angles, and inconsistencies in the data. These factors hinder reliable detection of motorcycles and riders and disrupt consistent object classification. To address these challenges, we propose VisionGuard, a synergistic multi-stage framework designed to overcome the limitations of frame-wise detectors, especially in scenarios with class imbalance and inconsistent annotations. VisionGuard integrates two key components: Adaptive Labeling and Contextual Expander modules. The Adaptive Labeling module is a tracking-based refinement technique that enhances classification consistency by leveraging a tracking algorithm to assign persistent labels across frames and correct misclassifications. The Contextual Expander module improves recall for underrepresented classes by generating virtual bounding boxes with appropriate confidence scores, effectively addressing the impact of data imbalance. Experimental results show that VisionGuard improves overall mAP by 3.1% compared to baseline detectors, demonstrating its effectiveness and potential for real-world deployment in traffic surveillance systems, ultimately promoting safety and regulatory compliance.
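
The idea of assigning persistent labels across frames can be sketched as a per-track majority vote. This is an assumption for illustration only: the abstract does not state how Adaptive Labeling chooses the persistent label, and the function name `smooth_track_labels`, the tuple layout, and the class names are all hypothetical.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Tuple

# One detection: (frame_index, track_id, predicted_class).
Detection = Tuple[int, int, str]


def smooth_track_labels(detections: List[Detection]) -> List[Detection]:
    """Relabel every detection with the most frequent class of its track."""
    # Count how often each class was predicted within each track.
    votes: Dict[int, Counter] = defaultdict(Counter)
    for _, track_id, label in detections:
        votes[track_id][label] += 1
    # Assumption: the persistent label is the simple majority class per track.
    majority = {tid: counts.most_common(1)[0][0] for tid, counts in votes.items()}
    return [(frame, tid, majority[tid]) for frame, tid, _ in detections]


# Track 1 flickers to "no_helmet" in frame 1; the vote restores "helmet".
raw = [
    (0, 1, "helmet"),
    (1, 1, "no_helmet"),
    (2, 1, "helmet"),
    (0, 2, "no_helmet"),
]
smoothed = smooth_track_labels(raw)
```

A plain majority vote ignores detector confidence; a confidence-weighted vote would be a natural variant, though nothing in the abstract indicates which the authors use.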

Shape2Animal: Creative Animal Generation from Natural Silhouettes

Jun 25, 2025

Abstract: Humans possess a unique ability to perceive meaningful patterns in ambiguous stimuli, a cognitive phenomenon known as pareidolia. This paper introduces the Shape2Animal framework to mimic this imaginative capacity by reinterpreting natural object silhouettes, such as clouds, stones, or flames, as plausible animal forms. Our automated framework first performs open-vocabulary segmentation to extract the object silhouette and interprets semantically appropriate animal concepts using vision-language models. It then synthesizes an animal image that conforms to the input shape, leveraging a text-to-image diffusion model, and seamlessly blends it into the original scene to generate visually coherent and spatially consistent compositions. We evaluated Shape2Animal on a diverse set of real-world inputs, demonstrating its robustness and creative potential. Shape2Animal can offer new opportunities for visual storytelling, educational content, digital art, and interactive media design. Our project page is here: https://shape2image.github.io

Automated Image Recognition Framework

Jun 24, 2025

Abstract: While the efficacy of deep learning models heavily relies on data, gathering and annotating data for specific tasks, particularly when addressing novel or sensitive subjects lacking relevant datasets, poses significant time and resource challenges. In response to this, we propose a novel Automated Image Recognition (AIR) framework that harnesses the power of generative AI. AIR empowers end-users to synthesize high-quality, pre-annotated datasets, eliminating the necessity for manual labeling. It also automatically trains deep learning models on the generated datasets with robust image recognition performance. Our framework includes two main data synthesis processes, AIR-Gen and AIR-Aug. AIR-Gen enables end-users to seamlessly generate datasets tailored to their specifications. To improve image quality, we introduce a novel automated prompt engineering module that leverages the capabilities of large language models. We also introduce a distribution adjustment algorithm to eliminate duplicates and outliers, enhancing the robustness and reliability of generated datasets. AIR-Aug, on the other hand, enhances a given dataset, thereby improving the performance of deep classifier models. AIR-Aug is particularly beneficial when users have limited data for specific tasks. Through comprehensive experiments, we demonstrated the efficacy of our generated data in training deep learning models and showcased the system's potential to provide image recognition models for a wide range of objects. We also conducted a user study that achieved an impressive score of 4.4 out of 5.0, underscoring the AI community's positive perception of AIR.

FaR: Enhancing Multi-Concept Text-to-Image Diffusion via Concept Fusion and Localized Refinement

Apr 04, 2025

Abstract: Generating multiple new concepts remains a challenging problem in the text-to-image task. Current methods often overfit when trained on a small number of samples and struggle with attribute leakage, particularly for class-similar subjects (e.g., two specific dogs). In this paper, we introduce Fuse-and-Refine (FaR), a novel approach that tackles these challenges through two key contributions: the Concept Fusion technique and the Localized Refinement loss function. Concept Fusion systematically augments the training data by separating reference subjects from backgrounds and recombining them into composite images to increase diversity. This augmentation technique tackles the overfitting problem by mitigating the narrow distribution of the limited training samples. In addition, the Localized Refinement loss function is introduced to preserve subject-representative attributes by aligning each concept's attention map to its correct region. This approach effectively prevents attribute leakage by ensuring that the diffusion model distinguishes similar subjects without mixing their attention maps during the denoising process. By fine-tuning specific modules at the same time, FaR balances the learning of new concepts with the retention of previously learned knowledge. Empirical results show that FaR not only prevents overfitting and attribute leakage while maintaining photorealism, but also outperforms other state-of-the-art methods.

LookupForensics: A Large-Scale Multi-Task Dataset for Multi-Phase Image-Based Fact Verification

Jul 26, 2024

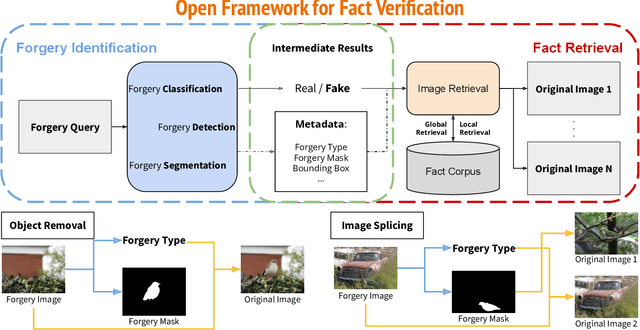

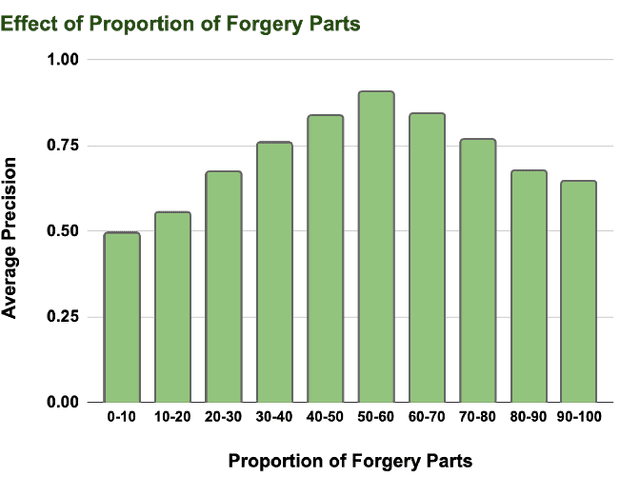

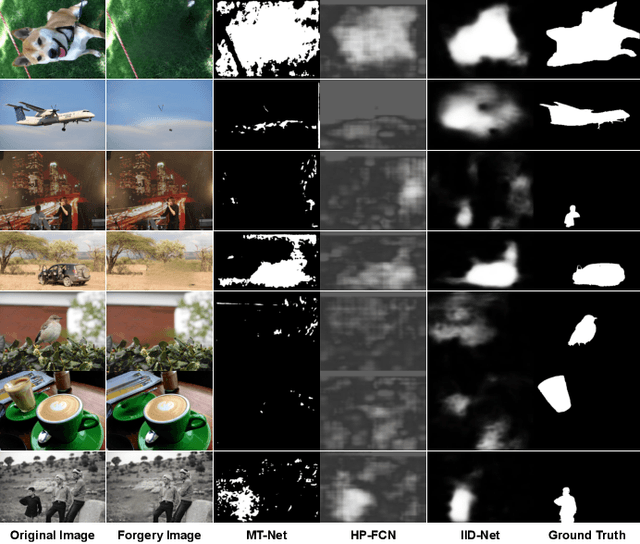

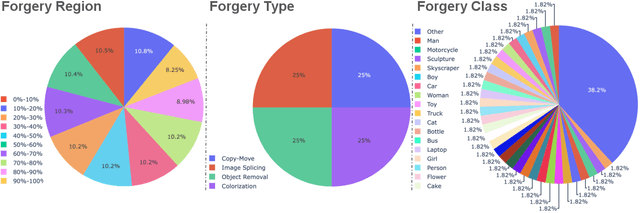

Abstract:Amid the proliferation of forged images, notably the tsunami of deepfake content, extensive research has been conducted on using artificial intelligence (AI) to identify forged content in the face of continuing advancements in counterfeiting technologies. We have investigated the use of AI to provide the original authentic image after deepfake detection, which we believe is a reliable and persuasive solution. We call this "image-based automated fact verification," a name that originated from a text-based fact-checking system used by journalists. We have developed a two-phase open framework that integrates detection and retrieval components. Additionally, inspired by a dataset proposed by Meta Fundamental AI Research, we further constructed a large-scale dataset that is specifically designed for this task. This dataset simulates real-world conditions and includes both content-preserving and content-aware manipulations that present a range of difficulty levels and have potential for ongoing research. This multi-task dataset is fully annotated, enabling it to be utilized for sub-tasks within the forgery identification and fact retrieval domains. This paper makes two main contributions: (1) We introduce a new task, "image-based automated fact verification," and present a novel two-phase open framework combining "forgery identification" and "fact retrieval." (2) We present a large-scale dataset tailored for this new task that features various hand-crafted image edits and machine learning-driven manipulations, with extensive annotations suitable for various sub-tasks. Extensive experimental results validate its practicality for fact verification research and clarify its difficulty levels for various sub-tasks.
