Tom Kempton

Emergent Bias and Fairness in Multi-Agent Decision Systems

Dec 18, 2025

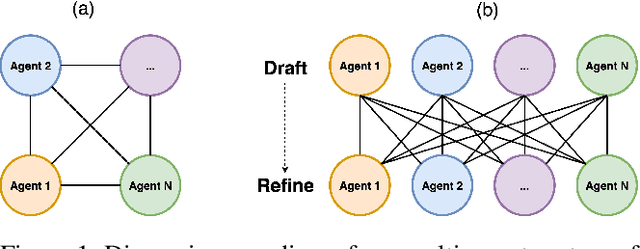

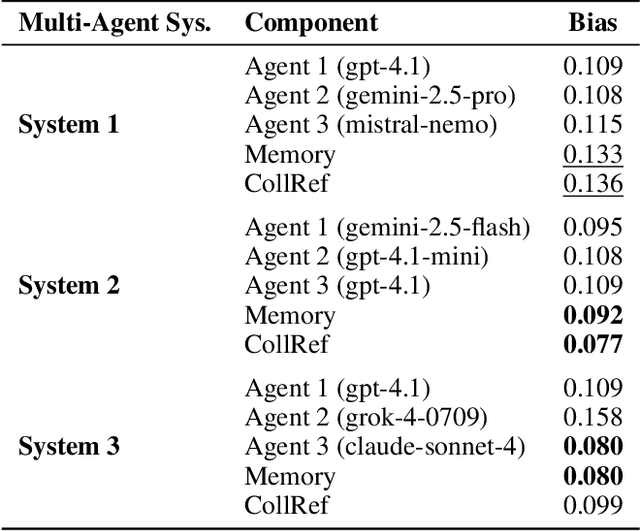

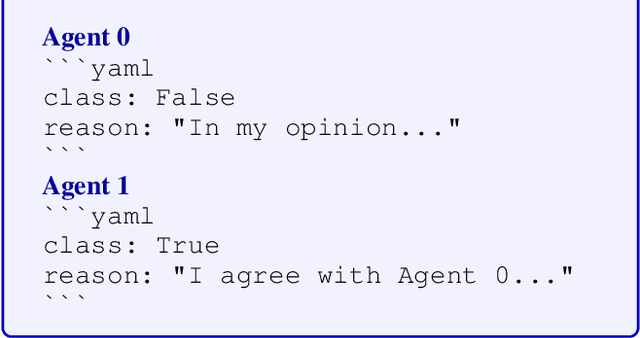

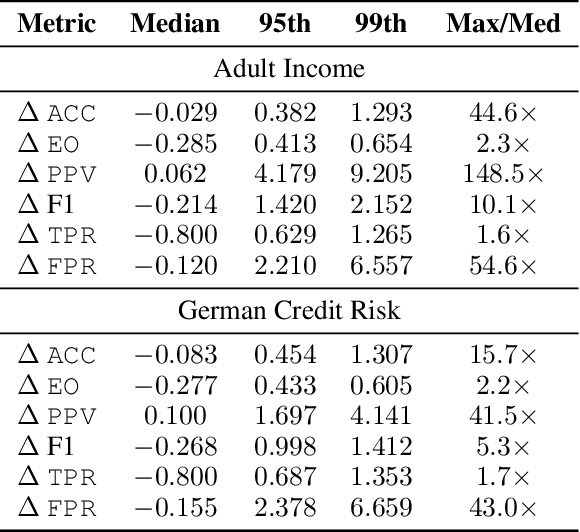

Abstract: Multi-agent systems have demonstrated the ability to improve performance on a variety of predictive tasks by leveraging collaborative decision making. However, the lack of effective evaluation methodologies has made it difficult to estimate the risk of bias, making deployment of such systems unsafe in high-stakes domains such as consumer finance, where biased decisions can translate directly into regulatory breaches and financial loss. To address this challenge, we need to develop fairness evaluation methodologies for multi-agent predictive systems and measure the fairness characteristics of these systems in the financial tabular domain. Examining fairness metrics using large-scale simulations across diverse multi-agent configurations, with varying communication and collaboration mechanisms, we reveal patterns of emergent bias in financial decision-making that cannot be traced to individual agent components, indicating that multi-agent systems may exhibit genuinely collective behaviors. Our findings highlight that fairness risks in financial multi-agent systems represent a significant component of model risk, with tangible impacts on tasks such as credit scoring and income estimation. We advocate that multi-agent decision systems must be evaluated as holistic entities rather than through reductionist analyses of their constituent components.
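The abstract does not say which fairness metrics are examined. As a purely illustrative sketch, the snippet below computes one widely used group fairness quantity, the demographic parity difference, over a system's final binary decisions. The function name, the choice of metric, and the toy data are assumptions, not taken from the paper.

```python
import numpy as np

def demographic_parity_difference(decisions, groups):
    """Gap between the highest and lowest positive-decision rate across groups.

    decisions: 0/1 outcomes produced by the whole system (hypothetical data).
    groups:    protected-attribute label for each decision.
    """
    decisions = np.asarray(decisions, dtype=float)
    groups = np.asarray(groups)
    # Positive-decision rate within each group.
    rates = [decisions[groups == g].mean() for g in np.unique(groups)]
    return max(rates) - min(rates)

# Toy example: group "a" is approved 3 times out of 4, group "b" once out of 4.
toy_decisions = [1, 1, 0, 1, 0, 0, 0, 1]
toy_groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
toy_gap = demographic_parity_difference(toy_decisions, toy_groups)
```

Applying the same function to each agent's individual outputs and to the system's final output is one way to check whether a disparity appears only at the collective level.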

Local Normalization Distortion and the Thermodynamic Formalism of Decoding Strategies for Large Language Models

Mar 27, 2025

Abstract: Advances in hardware and language model architecture have spurred a revolution in natural language generation. However, autoregressive models compute probability distributions over next-token choices, and sampling from these distributions, known as decoding, has received significantly less attention than other design choices. Existing decoding strategies are largely based on heuristics, resulting in methods that are hard to apply or improve in a principled manner. We develop the theory of decoding strategies for language models by expressing popular decoding algorithms as equilibrium states in the language of ergodic theory and stating the functions they optimize. Using this, we analyze the effect of the local normalization step of top-k, nucleus, and temperature sampling, used to make probabilities sum to one. We argue that local normalization distortion is a fundamental defect of decoding strategies and quantify the size of this distortion and its effect on mathematical proxies for the quality and diversity of generated text. Contrary to the prevailing explanation, we argue that the major cause of the under-performance of top-k sampling relative to nucleus sampling is local normalization distortion. This yields conclusions for the future design of decoding algorithms and the detection of machine-generated text.
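The local normalization step mentioned above can be illustrated with a minimal sketch, assuming the standard textbook definitions of top-k, nucleus, and temperature sampling. The function names and toy distributions are hypothetical, and this is not the paper's formalism. Each function returns the renormalized distribution together with the normalizing constant that was divided out, which differs from one context to the next.

```python
import numpy as np

def top_k_renormalize(probs, k):
    """Keep the k most probable tokens, then divide by their total mass."""
    probs = np.asarray(probs, dtype=float)
    keep = np.argsort(probs)[::-1][:k]
    mass = probs[keep].sum()              # context-dependent normalizer
    out = np.zeros_like(probs)
    out[keep] = probs[keep] / mass
    return out, mass

def nucleus_renormalize(probs, p):
    """Keep the smallest top set whose mass reaches p, then renormalize."""
    probs = np.asarray(probs, dtype=float)
    order = np.argsort(probs)[::-1]
    cum = np.cumsum(probs[order])
    # First position where the cumulative mass reaches p (clamped for safety).
    cutoff = min(int(np.searchsorted(cum, p)) + 1, len(probs))
    keep = order[:cutoff]
    mass = probs[keep].sum()
    out = np.zeros_like(probs)
    out[keep] = probs[keep] / mass
    return out, mass

def temperature_renormalize(probs, temperature):
    """Raise probabilities to the power 1/T, then renormalize."""
    probs = np.asarray(probs, dtype=float)
    scaled = probs ** (1.0 / temperature)
    mass = scaled.sum()
    return scaled / mass, mass

# Two toy next-token distributions standing in for two different contexts.
flat = [0.25, 0.25, 0.25, 0.25]
peaked = [0.7, 0.2, 0.05, 0.05]

_, flat_mass = top_k_renormalize(flat, k=2)
_, peaked_mass = top_k_renormalize(peaked, k=2)
```

With `k=2`, the retained mass is about 0.5 for the flat context and about 0.9 for the peaked one, so the surviving tokens are rescaled by different factors depending on the context.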

TempTest: Local Normalization Distortion and the Detection of Machine-generated Text

Mar 26, 2025

Abstract: Existing methods for the zero-shot detection of machine-generated text are dominated by three statistical quantities: log-likelihood, log-rank, and entropy. As language models mimic the distribution of human text ever more closely, this will limit our ability to build effective detection algorithms. To combat this, we introduce a method for detecting machine-generated text that is entirely agnostic of the generating language model. This is achieved by targeting a defect in the way that decoding strategies, such as temperature or top-k sampling, normalize conditional probability measures. This method can be rigorously theoretically justified, is easily explainable, and is conceptually distinct from existing methods for detecting machine-generated text. We evaluate our detector in the white and black box settings across various language models, datasets, and passage lengths. We also study the effect of paraphrasing attacks on our detector and the extent to which it is biased against non-native speakers. In each of these settings, the performance of our test is at least comparable to that of other state-of-the-art text detectors, and in some cases, we strongly outperform these baselines.
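For orientation, the three baseline quantities named above can be sketched as follows, assuming their usual per-token-average definitions under a scoring model. The function name, the input layout, and the toy numbers are assumptions, and this sketch is not the TempTest detector itself.

```python
import numpy as np

def zero_shot_statistics(probs, tokens):
    """Average log-likelihood, log-rank, and entropy over a passage.

    probs:  array of shape (T, V); row t is the scoring model's next-token
            distribution at position t (hypothetical input).
    tokens: length-T array with the index of the token actually observed.
    """
    probs = np.asarray(probs, dtype=float)
    tokens = np.asarray(tokens)
    positions = np.arange(len(tokens))
    p_observed = probs[positions, tokens]

    # Mean log-probability of the observed tokens.
    log_likelihood = np.mean(np.log(p_observed))

    # Rank 1 means the observed token was the most probable one.
    ranks = 1 + np.sum(probs > p_observed[:, None], axis=1)
    log_rank = np.mean(np.log(ranks))

    # Shannon entropy of each predictive distribution; zeros contribute 0.
    safe = np.where(probs > 0, probs, 1.0)
    entropy = np.mean(-np.sum(probs * np.log(safe), axis=1))

    return log_likelihood, log_rank, entropy

# Toy passage of two tokens over a three-token vocabulary.
toy_probs = [[0.5, 0.25, 0.25], [0.1, 0.8, 0.1]]
toy_tokens = [0, 1]
ll, lr, ent = zero_shot_statistics(toy_probs, toy_tokens)
```

A detector built on these quantities typically thresholds one of them or a combination. All three depend on having a scoring model whose distribution resembles that of the generator.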
