Tianhu Peng

A High-Fidelity Digital Twin for Robotic Manipulation Based on 3D Gaussian Splatting

Jan 06, 2026

Abstract: Developing high-fidelity, interactive digital twins is crucial for enabling closed-loop motion planning and reliable real-world robot execution, which are essential to advancing sim-to-real transfer. However, existing approaches often suffer from slow reconstruction, limited visual fidelity, and difficulties in converting photorealistic models into planning-ready collision geometry. We present a practical framework that constructs high-quality digital twins within minutes from sparse RGB inputs. Our system employs 3D Gaussian Splatting (3DGS) for fast, photorealistic reconstruction as a unified scene representation. We enhance 3DGS with visibility-aware semantic fusion for accurate 3D labelling and introduce an efficient, filter-based geometry conversion method to produce collision-ready models seamlessly integrated with a Unity-ROS2-MoveIt physics engine. In experiments with a Franka Emika Panda robot performing pick-and-place tasks, we demonstrate that this enhanced geometric accuracy effectively supports robust manipulation in real-world trials. These results demonstrate that 3DGS-based digital twins, enriched with semantic and geometric consistency, offer a fast, reliable, and scalable path from perception to manipulation in unstructured environments.

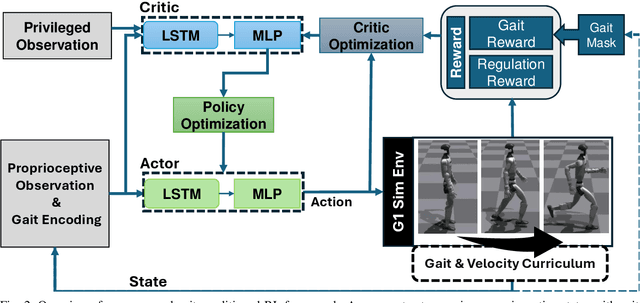

Gait-Conditioned Reinforcement Learning with Multi-Phase Curriculum for Humanoid Locomotion

May 27, 2025

Abstract: We present a unified gait-conditioned reinforcement learning framework that enables humanoid robots to perform standing, walking, running, and smooth transitions within a single recurrent policy. A compact reward routing mechanism dynamically activates gait-specific objectives based on a one-hot gait ID, mitigating reward interference and supporting stable multi-gait learning. Human-inspired reward terms promote biomechanically natural motions, such as straight-knee stance and coordinated arm-leg swing, without requiring motion capture data. A structured curriculum progressively introduces gait complexity and expands command space over multiple phases. In simulation, the policy successfully achieves robust standing, walking, running, and gait transitions. On the real Unitree G1 humanoid, we validate standing, walking, and walk-to-stand transitions, demonstrating stable and coordinated locomotion. This work provides a scalable, reference-free solution toward versatile and naturalistic humanoid control across diverse modes and environments.

Learning Bipedal Walking on a Quadruped Robot via Adversarial Motion Priors

Jul 02, 2024

Abstract: Previous studies have successfully demonstrated agile and robust locomotion in challenging terrains for quadrupedal robots. However, the bipedal locomotion mode for quadruped robots remains unverified. This paper explores the adaptation of a learning framework originally designed for quadrupedal robots to operate blind locomotion in biped mode. We leverage a framework that incorporates Adversarial Motion Priors with a teacher-student policy to enable imitation of a reference trajectory and navigation on tough terrain. Our work involves transferring and evaluating a similar learning framework on a quadruped robot in biped mode, aiming to achieve stable walking on both flat and complicated terrains. Our simulation results demonstrate that the trained policy enables the quadruped robot to navigate both flat and challenging terrains, including stairs and uneven surfaces.

Deep Reinforcement Learning for Bipedal Locomotion: A Brief Survey

Apr 25, 2024

Abstract: Bipedal robots are garnering increasing global attention due to their potential applications and advancements in artificial intelligence, particularly in Deep Reinforcement Learning (DRL). While DRL has driven significant progress in bipedal locomotion, developing a comprehensive and unified framework capable of adeptly performing a wide range of tasks remains a challenge. This survey systematically categorizes, compares, and summarizes existing DRL frameworks for bipedal locomotion, organizing them into end-to-end and hierarchical control schemes. End-to-end frameworks are assessed based on their learning approaches, whereas hierarchical frameworks are dissected into layers that utilize either learning-based methods or traditional model-based approaches. This survey provides a detailed analysis of the composition, capabilities, strengths, and limitations of each framework type. Furthermore, we identify critical research gaps and propose future directions aimed at achieving a more integrated and efficient framework for bipedal locomotion, with potential broad applications in everyday life.
