Takuya Azumi

SC-MII: Infrastructure LiDAR-based 3D Object Detection on Edge Devices for Split Computing with Multiple Intermediate Outputs Integration

Jan 12, 2026

Abstract: 3D object detection using LiDAR-based point cloud data and deep neural networks is essential in autonomous driving technology. However, deploying state-of-the-art models on edge devices presents challenges due to high computational demands and energy consumption. Additionally, single LiDAR setups suffer from blind spots. This paper proposes SC-MII, multiple infrastructure LiDAR-based 3D object detection on edge devices for Split Computing with Multiple Intermediate outputs Integration. In SC-MII, edge devices process local point clouds through the initial DNN layers and send intermediate outputs to an edge server. The server integrates these features and completes inference, reducing both latency and device load while improving privacy. Experimental results on a real-world dataset show a 2.19x speed-up and a 71.6% reduction in edge device processing time, with at most a 1.09% drop in accuracy.
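The split described in the abstract (a head on each edge device, integration and a tail on the server) can be illustrated with a toy sketch. Everything here is an assumption made for illustration: the functions `edge_head`, `server_integrate`, and `server_tail` are hypothetical, a point-count grid stands in for the initial DNN layers, and element-wise max stands in for the integration step, which the abstract does not specify.

```python
from typing import List, Tuple

Point = Tuple[float, float]
Grid = List[List[int]]


def edge_head(points: List[Point], size: int = 4, cell: float = 1.0) -> Grid:
    """Runs on an edge device: reduce a local point cloud to a small feature grid.

    Toy stand-in for the initial DNN layers (assumption, not the paper's model).
    """
    grid = [[0] * size for _ in range(size)]
    for x, y in points:
        i, j = int(x // cell), int(y // cell)
        if 0 <= i < size and 0 <= j < size:
            grid[i][j] += 1
    return grid


def server_integrate(grids: List[Grid]) -> Grid:
    """Runs on the edge server: merge intermediate outputs from all devices.

    Element-wise max is an assumed integration operator.
    """
    rows, cols = len(grids[0]), len(grids[0][0])
    return [[max(g[i][j] for g in grids) for j in range(cols)] for i in range(rows)]


def server_tail(grid: Grid, threshold: int = 2) -> List[Tuple[int, int]]:
    """Runs on the edge server: finish inference on the merged features.

    Toy stand-in for the remaining DNN layers: report cells with enough points.
    """
    return [(i, j) for i, row in enumerate(grid) for j, v in enumerate(row) if v >= threshold]


# Two LiDARs with different blind spots: each sees one object densely.
lidar_a = [(0.2, 0.3), (0.4, 0.1), (3.5, 3.5)]
lidar_b = [(3.1, 3.2), (3.6, 3.9), (2.5, 0.5)]

features = [edge_head(lidar_a), edge_head(lidar_b)]  # computed on the devices
detections = server_tail(server_integrate(features))  # computed on the server
print(detections)
```

In this sketch only the small grids cross the network, not the raw points, which loosely mirrors the reduced device load and privacy benefit claimed in the abstract.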

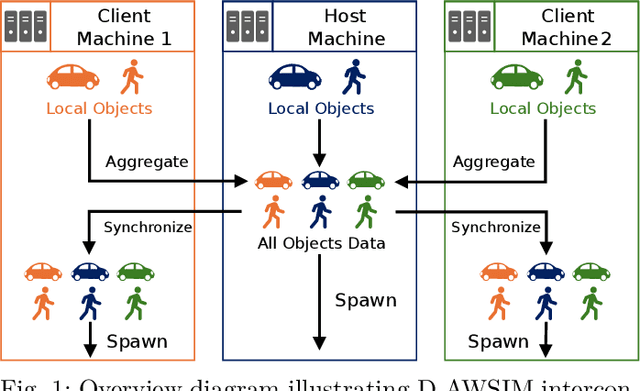

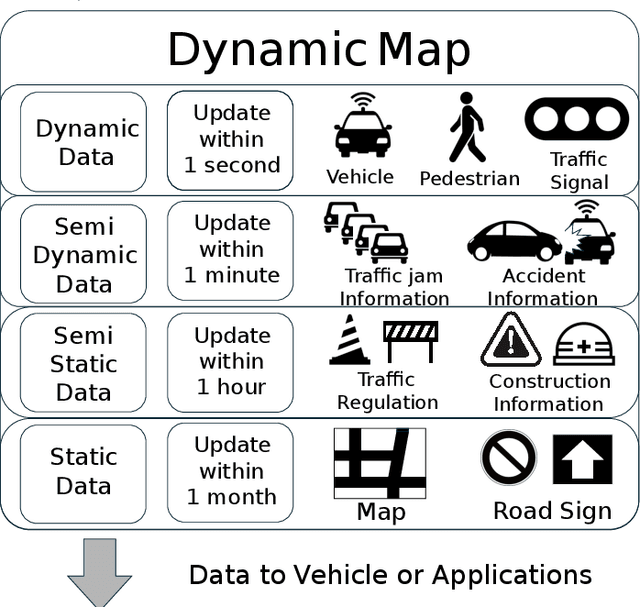

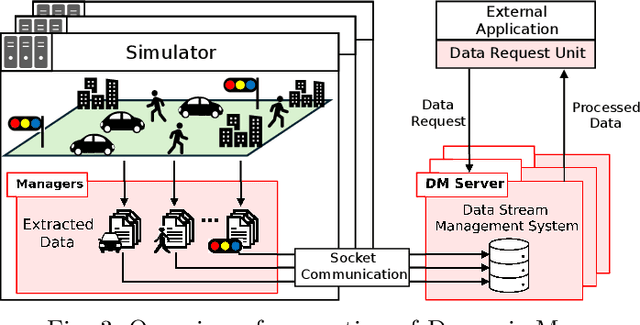

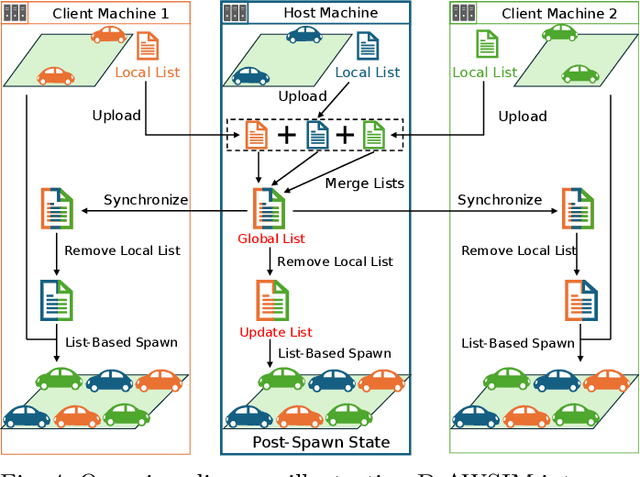

D-AWSIM: Distributed Autonomous Driving Simulator for Dynamic Map Generation Framework

Nov 12, 2025

Abstract: Autonomous driving systems have achieved significant advances, with full autonomy within defined operational design domains nearing practical deployment. Expanding these domains requires addressing safety assurance under diverse conditions. Information sharing through vehicle-to-vehicle and vehicle-to-infrastructure communication, enabled by a Dynamic Map platform built from vehicle and roadside sensor data, offers a promising solution. Real-world experiments with numerous infrastructure sensors incur high costs and regulatory challenges. Conventional single-host simulators lack the capacity for large-scale urban traffic scenarios. This paper proposes D-AWSIM, a distributed simulator that partitions its workload across multiple machines to support the simulation of extensive sensor deployment and dense traffic environments. A Dynamic Map generation framework on D-AWSIM enables researchers to explore information-sharing strategies without relying on physical testbeds. The evaluation shows that D-AWSIM increases throughput for vehicle count and LiDAR sensor processing substantially compared to a single-machine setup. Integration with Autoware demonstrates applicability for autonomous driving research.

Generalized Inequality-based Approach for Probabilistic WCET Estimation

Nov 12, 2025

Abstract: Estimating the probabilistic Worst-Case Execution Time (pWCET) is essential for ensuring the timing correctness of real-time applications, such as in robot IoT systems and autonomous driving systems. While methods based on Extreme Value Theory (EVT) can provide tight bounds, they suffer from model uncertainty due to the need to decide where the upper tail of the distribution begins. Conversely, inequality-based approaches avoid this issue but can yield pessimistic results for heavy-tailed distributions. This paper proposes a method to reduce such pessimism by incorporating saturating functions (arctangent and hyperbolic tangent) into Chebyshev's inequality, which mitigates the influence of large outliers while preserving mathematical soundness. Evaluations on synthetic and real-world data from the Autoware autonomous driving stack demonstrate that the proposed method achieves safe and tighter bounds for such distributions.
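One way to read the idea of combining a saturating function with Chebyshev's inequality is sketched below. It is not the paper's formulation: it relies only on the fact that for a strictly increasing g, P(X >= t) = P(g(X) >= g(t)), so Chebyshev's inequality applied to g(X) still bounds the exceedance probability of X. The function name `chebyshev_exceedance_bound`, the centering and scaling inside `saturate`, the Pareto test data, and the threshold `t` are all assumptions chosen for illustration.

```python
import math
import random
import statistics


def chebyshev_exceedance_bound(samples, t, transform=lambda x: x):
    """Upper-bound P(X >= t) using Chebyshev's inequality on transform(X).

    `transform` must be strictly increasing so that the event X >= t is the
    same as transform(X) >= transform(t). Moments are taken from the samples,
    so the bound is exact for the empirical distribution only.
    """
    ys = [transform(x) for x in samples]
    mean = statistics.fmean(ys)
    var = statistics.pvariance(ys, mu=mean)
    gap = transform(t) - mean
    if gap <= 0:
        return 1.0  # Chebyshev says nothing at or below the mean
    return min(1.0, var / (gap * gap))


random.seed(0)
# Hypothetical heavy-tailed "execution times" (Pareto, shape 2.5).
samples = [random.paretovariate(2.5) for _ in range(2000)]
center = statistics.median(samples)
scale = 1.0  # assumed tuning parameter: where the arctangent starts to saturate


def saturate(x):
    """Strictly increasing, bounded transform that damps large outliers."""
    return math.atan((x - center) / scale)


t = 10.0
plain_bound = chebyshev_exceedance_bound(samples, t)
saturated_bound = chebyshev_exceedance_bound(samples, t, saturate)
empirical = sum(x >= t for x in samples) / len(samples)
print(empirical, plain_bound, saturated_bound)
```

Because arctangent is bounded, a single extreme sample cannot inflate the variance of the transformed data the way it inflates the raw variance, which is the intuition behind the reduced pessimism the abstract describes. `math.tanh` could be substituted in `saturate` in the same way.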

Work in Progress: Middleware-Transparent Callback Enforcement in Commoditized Component-Oriented Real-time Systems

May 10, 2025

Abstract: Real-time scheduling in commoditized component-oriented real-time systems, such as ROS 2 systems on Linux, has been studied under nested scheduling: OS thread scheduling and middleware layer scheduling (e.g., ROS 2 Executor). However, by establishing a persistent one-to-one correspondence between callbacks and OS threads, we can ignore the middleware layer and directly apply OS scheduling parameters (e.g., scheduling policy, priority, and affinity) to individual callbacks. We propose a middleware model that enables this idea and implements CallbackIsolatedExecutor as a novel ROS 2 Executor. We demonstrate that the costs (user-kernel switches, context switches, and memory usage) of CallbackIsolatedExecutor remain lower than those of the MultiThreadedExecutor, regardless of the number of callbacks. Additionally, the cost of CallbackIsolatedExecutor relative to SingleThreadedExecutor stays within a fixed ratio (1.4x for inter-process and 5x for intra-process communication). Future ROS 2 real-time scheduling research can avoid nested scheduling, ignoring the existence of the middleware layer.
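The persistent one-to-one correspondence between callbacks and OS threads can be illustrated with a small sketch. This is not the ROS 2 API and not the CallbackIsolatedExecutor implementation: the class `IsolatedCallbackWorker` and the callback names are hypothetical, and the sketch only shows that each callback always runs on its own dedicated thread, which is the property that would let OS scheduling parameters be attached per callback.

```python
import queue
import threading


class IsolatedCallbackWorker:
    """Binds one callback to one dedicated OS thread for its whole lifetime."""

    def __init__(self, name, callback):
        self.name = name
        self._callback = callback
        self._inbox = queue.Queue()
        self.native_ids = set()  # OS thread ids observed while running the callback
        self._thread = threading.Thread(target=self._run, name=name, daemon=True)
        self._thread.start()

    def _run(self):
        # Because this thread only ever runs one callback, a real system could
        # set that callback's policy, priority and affinity on this thread once.
        while True:
            msg = self._inbox.get()
            if msg is None:  # sentinel: stop the worker
                break
            self.native_ids.add(threading.get_native_id())
            self._callback(msg)

    def dispatch(self, msg):
        """Hand a message to the callback's own thread."""
        self._inbox.put(msg)

    def shutdown(self):
        self._inbox.put(None)
        self._thread.join()


results = []
workers = [
    IsolatedCallbackWorker("lidar_cb", lambda m: results.append(("lidar", m))),
    IsolatedCallbackWorker("camera_cb", lambda m: results.append(("camera", m))),
]
for worker in workers:
    for i in range(3):
        worker.dispatch(i)
for worker in workers:
    worker.shutdown()

for worker in workers:
    print(worker.name, worker.native_ids)
```

With this layout there is no middleware-level decision about which callback a thread runs next, so the OS scheduler alone determines ordering between callbacks.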

* 4 pages, 5 figures. Accepted for IEEE RTAS 2025; this is the author-accepted manuscript. Final version in IEEE Xplore: https://doi.org/10.1109/RTAS65571.2025.00017
