Suyeon Choi

Evan

Random-phase Gaussian Wave Splatting for Computer-generated Holography

Aug 24, 2025

Abstract: Holographic near-eye displays offer ultra-compact form factors for virtual and augmented reality systems, but rely on advanced computer-generated holography (CGH) algorithms to convert 3D scenes into interference patterns that can be displayed on spatial light modulators (SLMs). Gaussian Wave Splatting (GWS) has recently emerged as a powerful CGH paradigm that allows for the conversion of Gaussians, a state-of-the-art neural 3D representation, into holograms. However, GWS assumes smooth-phase distributions over the Gaussian primitives, limiting its ability to model view-dependent effects and reconstruct accurate defocus blur, and severely under-utilizing the space-bandwidth product of the SLM. In this work, we propose random-phase GWS (GWS-RP) to improve bandwidth utilization, which has the effect of increasing eyebox size, reconstructing accurate defocus blur and parallax, and supporting time-multiplexed rendering to suppress speckle artifacts. At the core of GWS-RP are (1) a fundamentally new wavefront compositing procedure and (2) an alpha-blending scheme specifically designed for random-phase Gaussian primitives, ensuring physically correct color reconstruction and robust occlusion handling. Additionally, we present the first formally derived algorithm for applying random phase to Gaussian primitives, grounded in rigorous statistical optics analysis and validated through practical near-eye display applications. Through extensive simulations and experimental validations, we demonstrate that these advancements, together with time multiplexing, uniquely enable full-bandwidth light field CGH that supports accurate parallax and defocus, yielding state-of-the-art image quality and perceptually faithful 3D holograms for next-generation near-eye displays.

R-CAGE: A Structural Model for Emotion Output Design in Human-AI Interaction

May 11, 2025

Abstract: This paper presents R-CAGE (Rhythmic Control Architecture for Guarding Ego), a theoretical framework for restructuring emotional output in long-term human-AI interaction. While prior affective computing approaches emphasized expressiveness, immersion, and responsiveness, they often neglected the cognitive and structural consequences of repeated emotional engagement. R-CAGE instead conceptualizes emotional output not as reactive expression but as an ethical design structure requiring architectural intervention. The model is grounded in experiential observations of subtle affective symptoms such as localized head tension, interpretive fixation, and emotional lag arising from prolonged interaction with affective AI systems. These indicate a mismatch between system-driven emotion and user interpretation that cannot be fully explained by biometric data or observable behavior. R-CAGE adopts a user-centered stance prioritizing psychological recovery, interpretive autonomy, and identity continuity. The framework consists of four control blocks: (1) Control of Rhythmic Expression regulates output pacing to reduce fatigue; (2) Architecture of Sensory Structuring adjusts intensity and timing of affective stimuli; (3) Guarding of Cognitive Framing reduces semantic pressure to allow flexible interpretation; (4) Ego-Aligned Response Design supports self-reference recovery during interpretive lag. By structurally regulating emotional rhythm, sensory intensity, and interpretive affordances, R-CAGE frames emotion not as performative output but as a sustainable design unit. The goal is to protect users from oversaturation and cognitive overload while sustaining long-term interpretive agency in AI-mediated environments.

Large Étendue 3D Holographic Display with Content-adaptive Dynamic Fourier Modulation

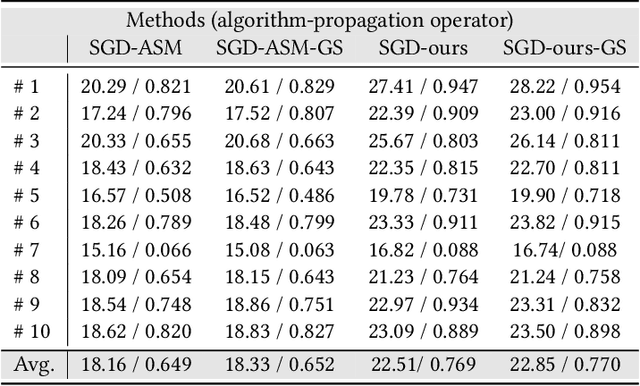

Sep 05, 2024

Abstract: Emerging holographic display technology offers unique capabilities for next-generation virtual reality systems. Current holographic near-eye displays, however, only support a small étendue, which results in a direct tradeoff between achievable field of view and eyebox size. Étendue expansion has recently been explored, but existing approaches are either fundamentally limited in the image quality that can be achieved or they require extremely high-speed spatial light modulators. We describe a new étendue expansion approach that combines multiple coherent sources with content-adaptive amplitude modulation of the hologram spectrum in the Fourier plane. To generate time-multiplexed phase and amplitude patterns for our spatial light modulators, we devise a pupil-aware gradient-descent-based computer-generated holography algorithm that is supervised by a large-baseline target light field. Compared with relevant baseline approaches, our method demonstrates significant improvements in image quality and étendue in simulation and with an experimental holographic display prototype.

Time-multiplexed Neural Holography: A flexible framework for holographic near-eye displays with fast heavily-quantized spatial light modulators

May 05, 2022

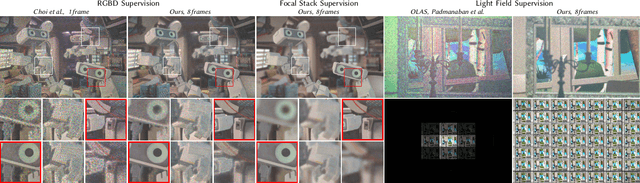

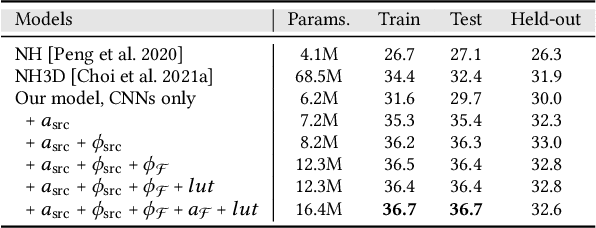

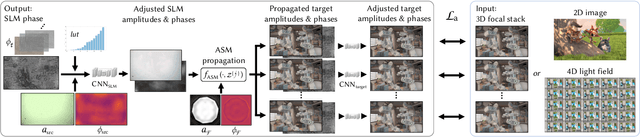

Abstract: Holographic near-eye displays offer unprecedented capabilities for virtual and augmented reality systems, including perceptually important focus cues. Although artificial intelligence-driven algorithms for computer-generated holography (CGH) have recently made much progress in improving the image quality and synthesis efficiency of holograms, these algorithms are not directly applicable to emerging phase-only spatial light modulators (SLMs) that are extremely fast but offer phase control with very limited precision. The speed of these SLMs offers time multiplexing capabilities, essentially enabling partially-coherent holographic display modes. Here we report advances in camera-calibrated wave propagation models for these types of holographic near-eye displays and we develop a CGH framework that robustly optimizes the heavily quantized phase patterns of fast SLMs. Our framework is flexible in supporting runtime supervision with different types of content, including 2D and 2.5D RGBD images, 3D focal stacks, and 4D light fields. Using our framework, we demonstrate state-of-the-art results for all of these scenarios in simulation and experiment.
