Stefano Tubaro

Splicing Detection and Localization In Satellite Imagery Using Conditional GANs

May 03, 2022

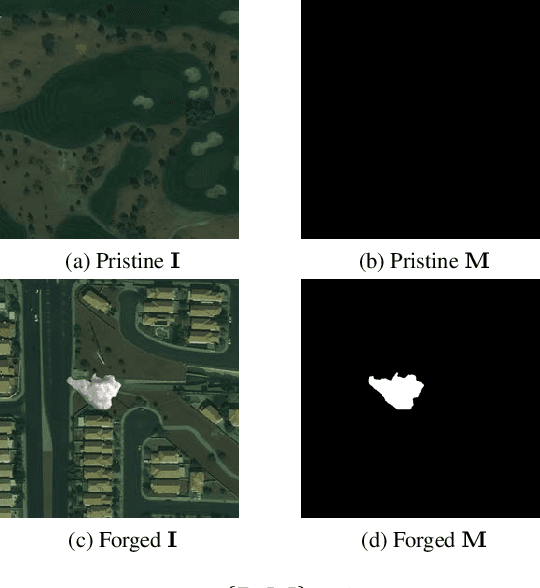

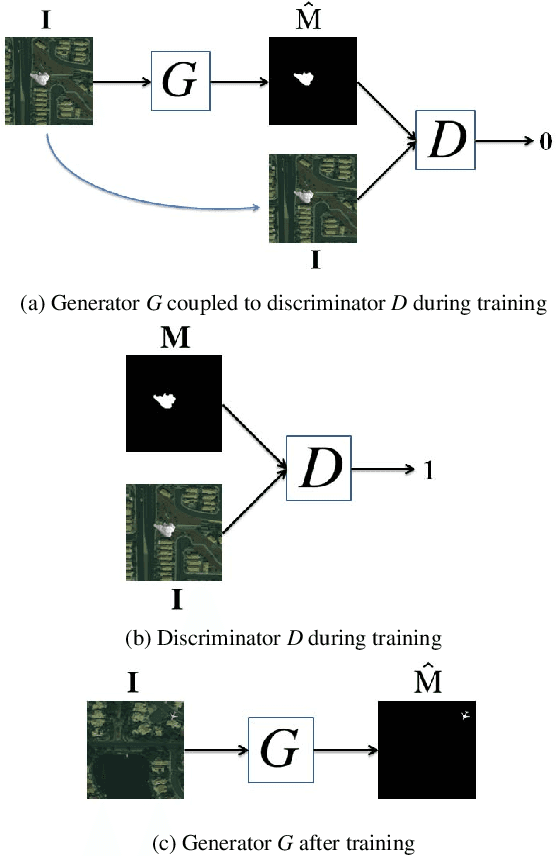

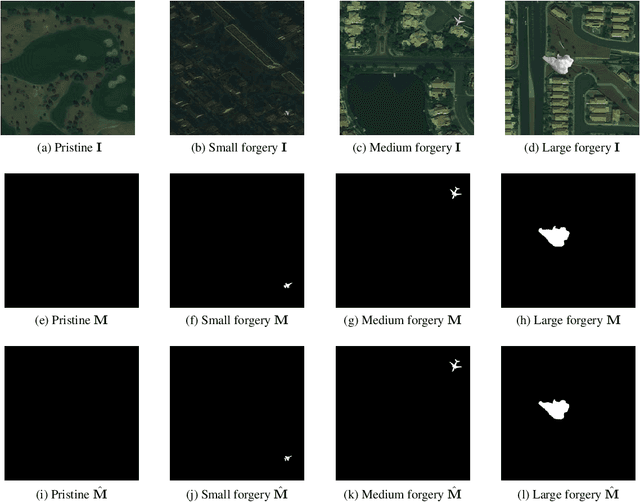

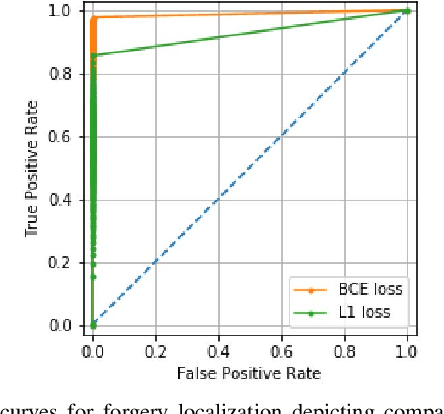

Abstract: The widespread availability of image editing tools and improvements in image processing techniques make image manipulation very easy. Oftentimes, easy-to-use yet sophisticated image manipulation tools yield distortions/changes imperceptible to the human observer. Distribution of forged images can have drastic ramifications, especially when coupled with the speed and vastness of the Internet. Therefore, verifying image integrity poses an immense and important challenge to the digital forensic community. Satellite images specifically can be modified in a number of ways, including the insertion of objects to hide existing scenes and structures. In this paper, we describe the use of a Conditional Generative Adversarial Network (cGAN) to identify the presence of such spliced forgeries within satellite images. Additionally, we identify their locations and shapes. Trained on pristine and falsified images, our method achieves high success on these detection and localization objectives.

* Accepted to the 2019 IEEE Conference on Multimedia Information Processing and Retrieval (MIPR)

Forensic Analysis and Localization of Multiply Compressed MP3 Audio Using Transformers

Mar 30, 2022

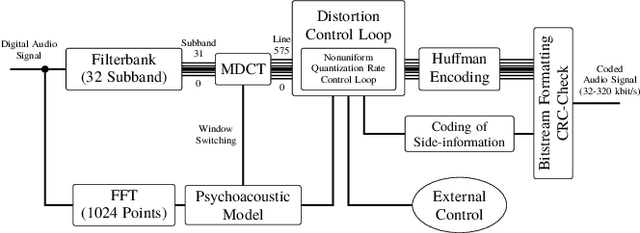

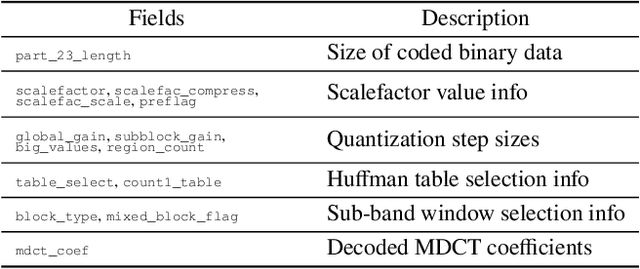

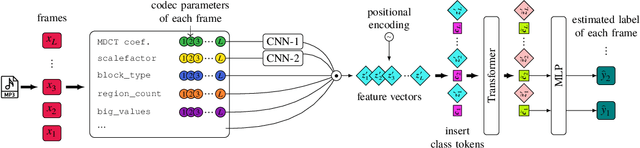

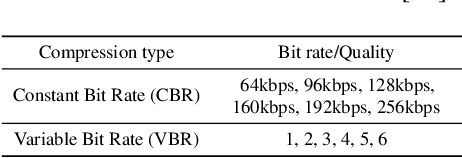

Abstract: Audio signals are often stored and transmitted in compressed formats. Among the many available audio compression schemes, MPEG-1 Audio Layer III (MP3) is very popular and widely used. Since MP3 is lossy, it leaves characteristic traces in the compressed audio, which can be used forensically to expose the history of an audio file. In this paper, we consider the scenario of audio signal manipulation done by temporal splicing of compressed and uncompressed audio signals. We propose a method to find the temporal location of the splices based on transformer networks. Our method identifies which temporal portions of an audio signal have undergone single or multiple compression at the temporal frame level, which is the smallest temporal unit of MP3 compression. We tested our method on a dataset of 486,743 MP3 audio clips. Our method achieved higher performance and demonstrated robustness with respect to different MP3 data when compared with existing methods.

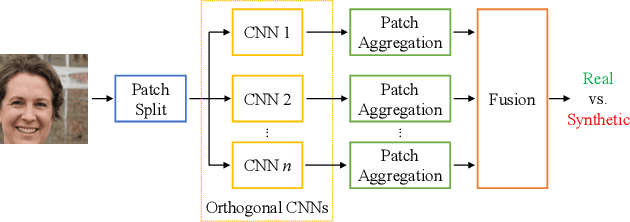

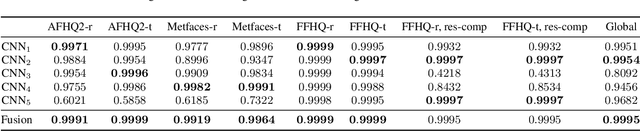

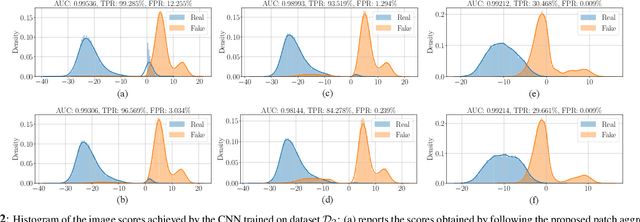

Detecting GAN-generated Images by Orthogonal Training of Multiple CNNs

Mar 04, 2022

Abstract: In the last few years, we have witnessed the rise of a series of deep learning methods to generate synthetic images that look extremely realistic. These techniques prove useful in the movie industry and for artistic purposes. However, they also prove dangerous if used to spread fake news or to generate fake online accounts. For this reason, detecting whether an image is an actual photograph or has been synthetically generated is becoming an urgent necessity. This paper proposes a detector of synthetic images based on an ensemble of Convolutional Neural Networks (CNNs). We consider the problem of detecting images generated with techniques not available at training time. This is a common scenario, given that new image generators are published more and more frequently. To solve this issue, we leverage two main ideas: (i) CNNs should provide orthogonal results to better contribute to the ensemble; (ii) original images are better defined than synthetic ones, thus they should be better trusted at testing time. Experiments show that pursuing these two ideas improves the detector accuracy on NVIDIA's newly generated StyleGAN3 images, never used in training.

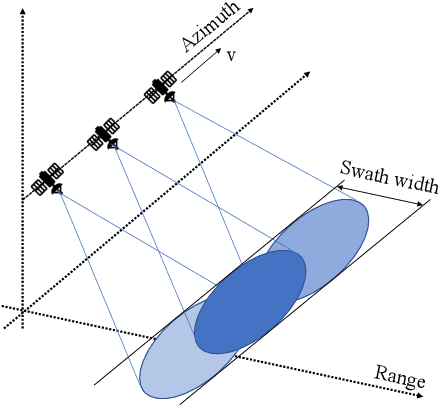

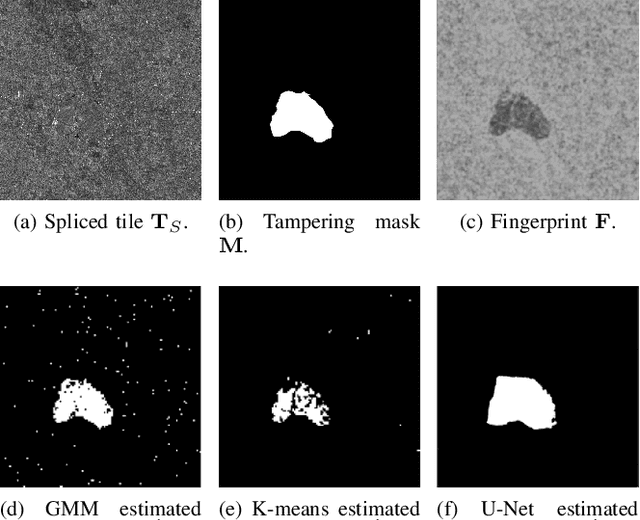

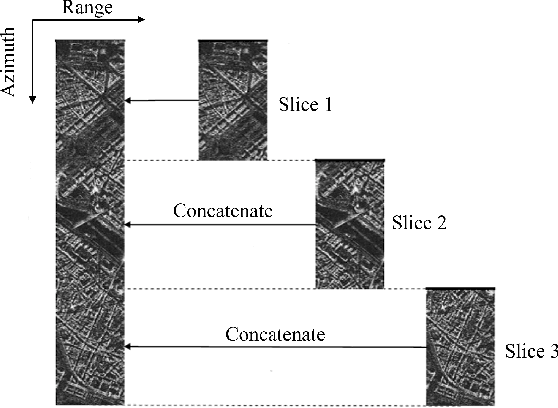

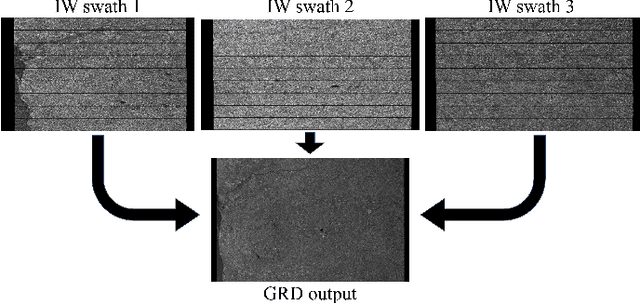

Amplitude SAR Imagery Splicing Localization

Jan 07, 2022

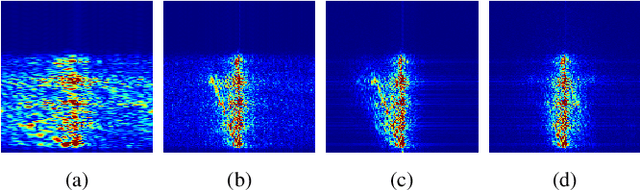

Abstract: Synthetic Aperture Radar (SAR) images are a valuable asset for a wide variety of tasks. In the last few years, many websites have been offering them for free in the form of easy-to-manage products, favoring their widespread diffusion and research work in the SAR field. The drawback of these opportunities is that such images might be exposed to forgeries and manipulations by malicious users, raising new concerns about their integrity and trustworthiness. Up to now, the multimedia forensics literature has proposed various techniques to localize manipulations in natural photographs, but the integrity assessment of SAR images has never been investigated. This task poses new challenges, since SAR images are generated with a processing chain completely different from that of natural photographs. This implies that many forensic methods developed for natural images are not guaranteed to succeed. In this paper, we investigate the problem of amplitude SAR imagery splicing localization. Our goal is to localize regions of an amplitude SAR image that have been copied and pasted from another image, possibly undergoing some kind of editing in the process. To do so, we leverage a Convolutional Neural Network (CNN) to extract a fingerprint highlighting inconsistencies in the processing traces of the analyzed input. Then, we examine this fingerprint to produce a binary tampering mask indicating the pixel region under splicing attack. Results show that our proposed method, tailored to the nature of SAR signals, provides better performance than state-of-the-art forensic tools developed for natural images.

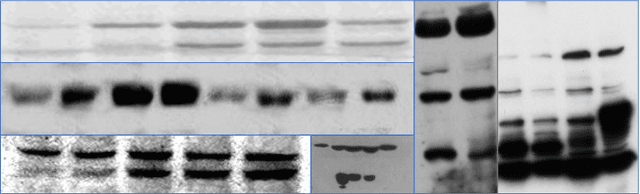

Forensic Analysis of Synthetically Generated Scientific Images

Dec 16, 2021

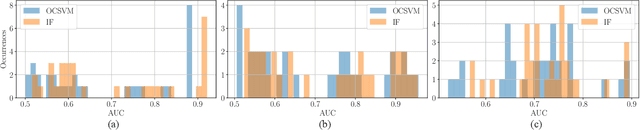

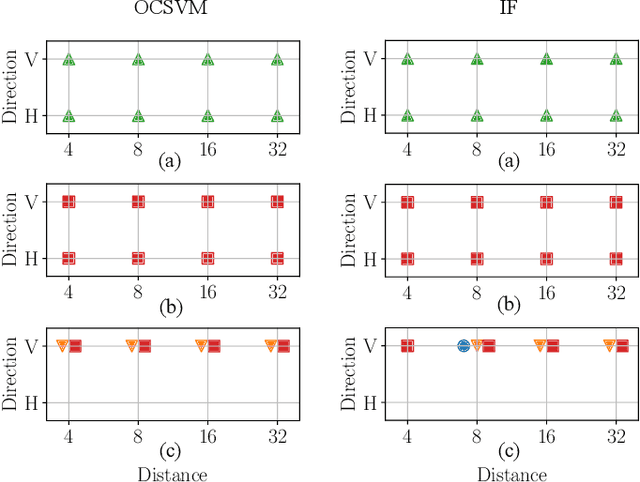

Abstract: The widespread diffusion of synthetically generated content is a serious threat that needs urgent countermeasures. The generation of synthetic content is not restricted to multimedia data like videos, photographs, or audio sequences, but covers a much broader area that can include biological images as well, such as western-blot and microscopic images. In this paper, we focus on the detection of synthetically generated western-blot images. Western-blot images are widely explored in the biomedical literature, and it has already been shown that these images can be easily counterfeited with little hope of spotting manipulations by visual inspection or by standard forensic detectors. To overcome the absence of a publicly available dataset, we create a new dataset comprising more than 14K original western-blot images and 18K synthetic western-blot images, generated by three different state-of-the-art generation methods. Then, we investigate different strategies to detect synthetic western blots, exploring binary classification methods as well as one-class detectors. In both scenarios, we never exploit synthetic western-blot images at the training stage. The achieved results show that synthetically generated western-blot images can be spotted with good accuracy, even though the exploited detectors are not optimized over synthetic versions of these scientific images.

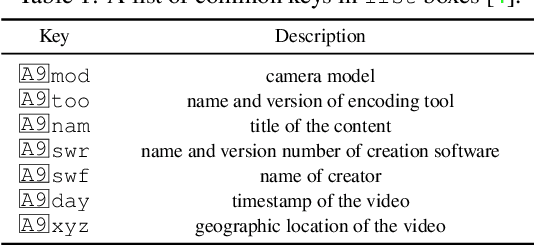

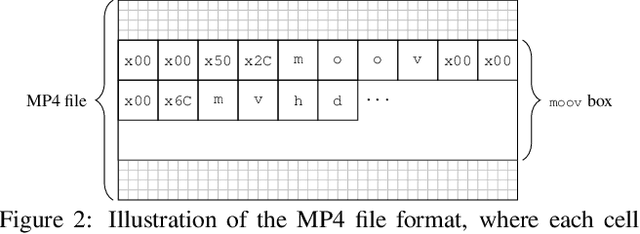

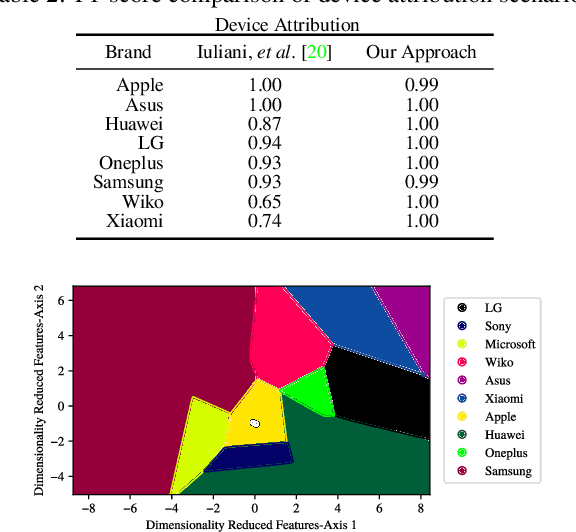

Forensic Analysis of Video Files Using Metadata

May 13, 2021

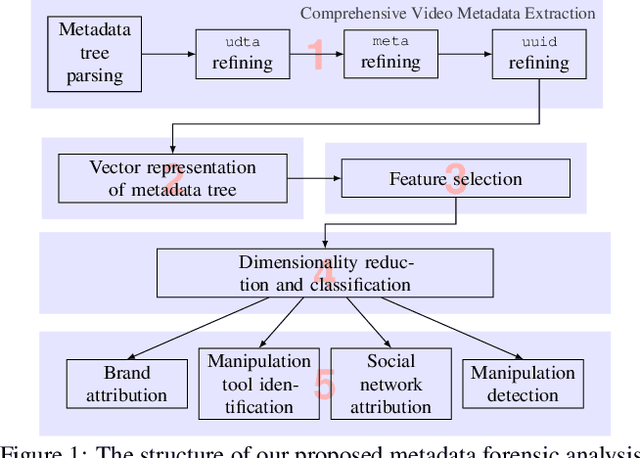

Abstract: The unprecedented ease and ability to manipulate video content has led to a rapid spread of manipulated media. The availability of video editing tools has greatly increased in recent years, allowing one to easily generate photo-realistic alterations. Such manipulations can leave traces in the metadata embedded in video files. This metadata information can be used to determine video manipulations, the brand of the video recording device, the type of video editing tool, and other important evidence. In this paper, we focus on the metadata contained in the popular MP4 video wrapper/container. We describe our metadata extraction method, which uses the MP4's tree structure. Our approach for analyzing the video metadata produces a more compact representation. We describe how we construct features from the metadata and then use dimensionality reduction and nearest neighbor classification for forensic analysis of a video file. Our approach allows one to visually inspect the distribution of metadata features and make decisions. The experimental results confirm that the performance of our approach surpasses other methods.
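To make the "tree structure" of an MP4 file concrete, here is a minimal sketch of how the box hierarchy of an ISO base media file can be walked with the Python standard library. It is not the extractor from the paper: the function names `walk_boxes` and `make_box`, the small set of container types, and the synthetic sample bytes are all assumptions made for illustration. It relies only on the general box layout (a 4-byte big-endian size followed by a 4-byte type).

```python
import struct

# Box types assumed to contain child boxes (a small, non-exhaustive set).
CONTAINER_TYPES = {b"moov", b"trak", b"mdia", b"minf", b"stbl", b"edts", b"dinf", b"udta"}

def walk_boxes(data, start=0, end=None, prefix=""):
    """Yield (path, payload_size) for every box found in data[start:end]."""
    end = len(data) if end is None else end
    pos = start
    while pos + 8 <= end:
        size, box_type = struct.unpack(">I4s", data[pos:pos + 8])
        header = 8
        if size == 1:
            # A size of 1 means a 64-bit size follows the type field.
            if pos + 16 > end:
                break
            size = struct.unpack(">Q", data[pos + 8:pos + 16])[0]
            header = 16
        elif size == 0:
            # A size of 0 means the box runs to the end of the enclosing range.
            size = end - pos
        if size < header or pos + size > end:
            break  # malformed box: stop rather than guess
        path = f"{prefix}/{box_type.decode('latin-1')}"
        yield path, size - header
        if box_type in CONTAINER_TYPES:
            # Recurse into the payload of container boxes.
            yield from walk_boxes(data, pos + header, pos + size, path)
        pos += size

def make_box(box_type, payload=b""):
    """Build a synthetic box (hypothetical helper, used only for the demo)."""
    return struct.pack(">I4s", 8 + len(payload), box_type) + payload

# Synthetic bytes that mimic a tiny MP4 layout; the payloads are placeholders.
sample = make_box(b"ftyp", b"isom") + make_box(
    b"moov",
    make_box(b"mvhd", b"\x00" * 4) + make_box(b"trak", make_box(b"tkhd", b"\x00" * 4)),
)
box_paths = [path for path, _ in walk_boxes(sample)]
print(box_paths)
```

The resulting list of box paths is one simple way a file's structure could be turned into categorical features before any dimensionality reduction or nearest neighbor step; how the paper actually builds its features is not specified here.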

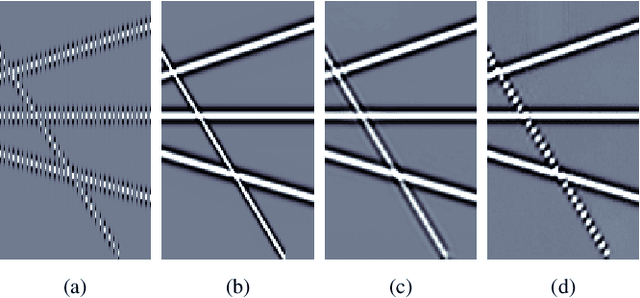

Anti-Aliasing Add-On for Deep Prior Seismic Data Interpolation

Jan 27, 2021

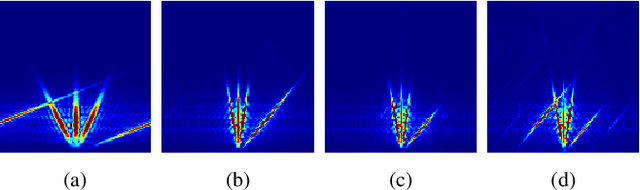

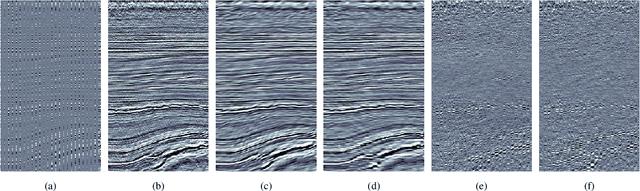

Abstract: Data interpolation is a fundamental step in any seismic processing workflow. Among machine learning techniques recently proposed to solve data interpolation as an inverse problem, the Deep Prior paradigm aims at employing a convolutional neural network to capture priors on the data in order to regularize the inversion. However, this technique lacks reconstruction precision when interpolating highly decimated data due to the presence of aliasing. In this work, we propose to improve Deep Prior inversion by adding a directional Laplacian as a regularization term to the problem. This regularizer drives the optimization towards solutions that honor the slopes estimated from the low frequencies of the interpolated data. We provide some numerical examples to showcase the methodology devised in this manuscript, showing that our results are less prone to aliasing even in the presence of noisy and corrupted data.
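As a rough illustration of the kind of objective the abstract describes, a regularized Deep Prior inversion could be written as below. This is a sketch under stated assumptions, not the formulation from the paper. All symbols are introduced here for illustration: $f_{\theta}$ is the CNN with weights $\theta$, $\mathbf{z}$ a fixed noise input, $\mathbf{S}$ the sampling operator that keeps only the acquired traces, $\mathbf{d}$ the decimated data, $\mathbf{u}$ a unit vector along the estimated local slope, and $\varepsilon$ the regularization weight. The directional Laplacian is assumed to be the second derivative taken along $\mathbf{u}$.

```latex
\hat{\theta} = \arg\min_{\theta} \;
  \left\| \mathbf{S} f_{\theta}(\mathbf{z}) - \mathbf{d} \right\|_2^2
  + \varepsilon \left\| \nabla^2_{\mathbf{u}} f_{\theta}(\mathbf{z}) \right\|_2^2,
\qquad
\nabla^2_{\mathbf{u}} g = \mathbf{u}^{\top} \left( \nabla \nabla^{\top} g \right) \mathbf{u}
```

Under these assumptions, the first term fits the acquired traces, while the second penalizes curvature along the slope direction, which discourages aliased events that cut across the estimated dips.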

DIPPAS: A Deep Image Prior PRNU Anonymization Scheme

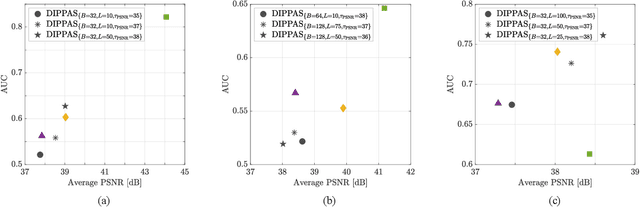

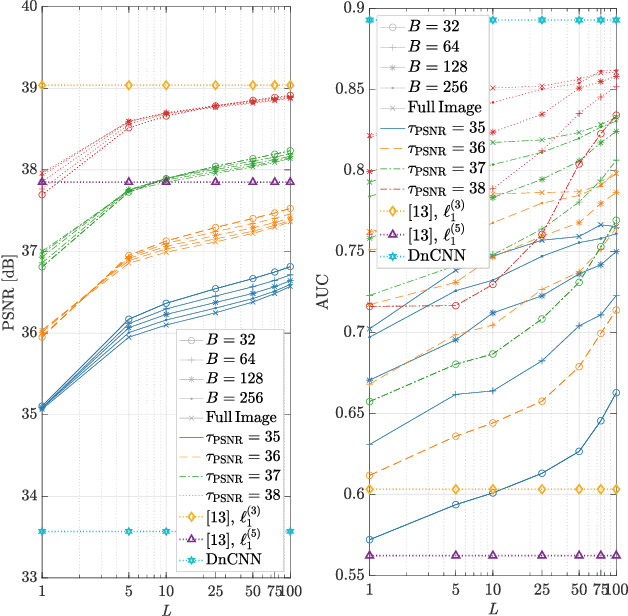

Dec 07, 2020

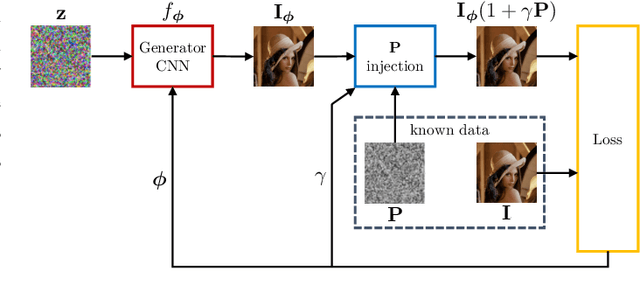

Abstract: Source device identification is an important topic in image forensics since it allows one to trace back the origin of an image. Its forensic counterpart is source device anonymization, that is, masking any trace on the image that can be useful for identifying the source device. A typical trace exploited for source device identification is the Photo Response Non-Uniformity (PRNU), a noise pattern left by the device on the acquired images. In this paper, we devise a methodology for suppressing such a trace from natural images without significant impact on image quality. Specifically, we turn PRNU anonymization into an optimization problem in a Deep Image Prior (DIP) framework. In a nutshell, a Convolutional Neural Network (CNN) acts as a generator and returns an image that is anonymized with respect to the source PRNU, while still maintaining high visual quality. In contrast to widely adopted deep learning paradigms, our proposed CNN is not trained on a set of input-target pairs of images. Instead, it is optimized to reconstruct the PRNU-free image from the original image under analysis itself. This makes the approach particularly suitable in scenarios where large heterogeneous databases are analyzed and prevents any problem due to lack of generalization. Through numerical examples on publicly available datasets, we show our methodology to be effective compared to state-of-the-art techniques.

Training Strategies and Data Augmentations in CNN-based DeepFake Video Detection

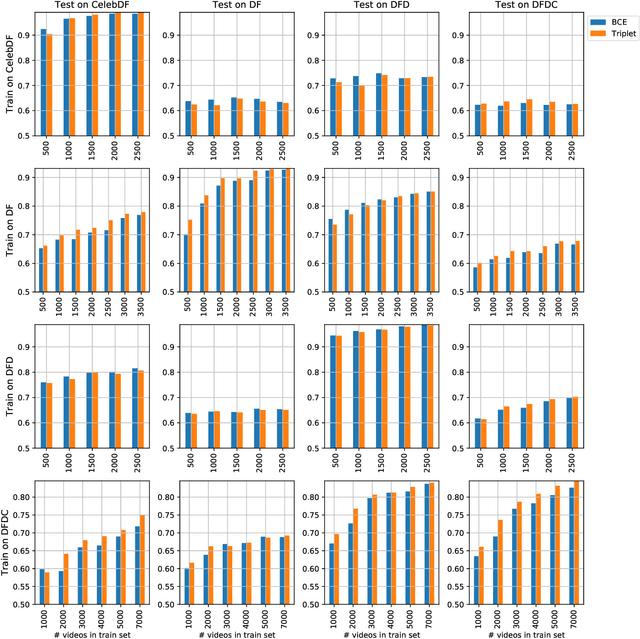

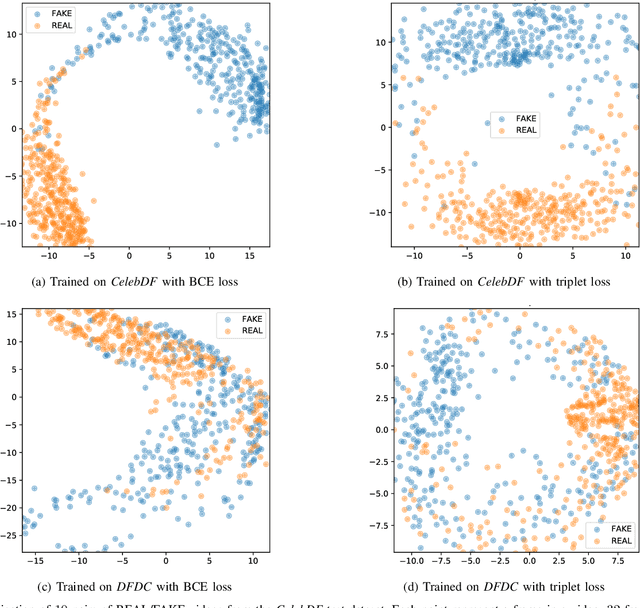

Nov 16, 2020

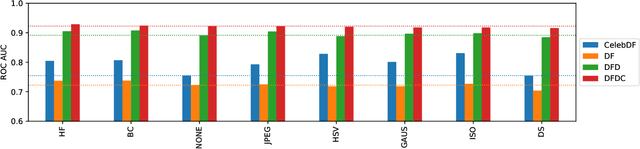

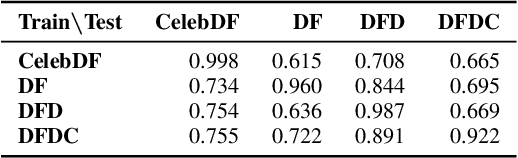

Abstract: The fast and continuous growth in the number and quality of deepfake videos calls for the development of reliable detection systems capable of automatically warning users on social media and on the Internet about the potential untruthfulness of such content. While algorithms, software, and smartphone apps are getting better every day at generating manipulated videos and swapping faces, the accuracy of automated systems for face forgery detection in videos is still quite limited and generally biased toward the dataset used to design and train a specific detection system. In this paper, we analyze how different training strategies and data augmentation techniques affect CNN-based deepfake detectors when training and testing on the same dataset or across different datasets.

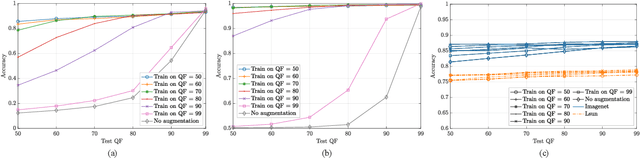

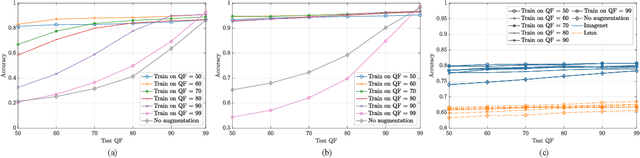

Training CNNs in Presence of JPEG Compression: Multimedia Forensics vs Computer Vision

Sep 25, 2020

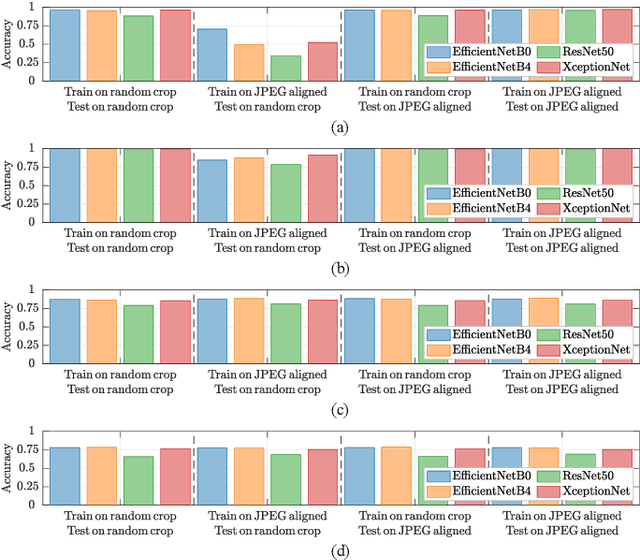

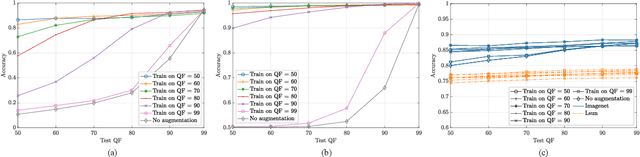

Abstract: Convolutional Neural Networks (CNNs) have proved very accurate in multiple computer vision image classification tasks that required visual inspection in the past (e.g., object recognition, face detection, etc.). Motivated by these astonishing results, researchers have also started using CNNs to cope with image forensic problems (e.g., camera model identification, tampering detection, etc.). However, in computer vision, image classification methods typically rely on visual cues easily detectable by human eyes. Conversely, forensic solutions rely on almost invisible traces that are often very subtle and lie in the fine details of the image under analysis. For this reason, training a CNN to solve a forensic task requires some special care, as common processing operations (e.g., resampling, compression, etc.) can strongly hinder forensic traces. In this work, we focus on the effect that JPEG has on CNN training considering different computer vision and forensic image classification problems. Specifically, we consider the issues that arise from JPEG compression and misalignment of the JPEG grid. We show that it is necessary to consider these effects when generating a training dataset in order to properly train a forensic detector without losing generalization capability, whereas these effects can largely be ignored for computer vision tasks.
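The notion of JPEG grid misalignment can be illustrated with a small sketch. Baseline JPEG codes an image in 8x8 pixel blocks, so a patch cropped at an arbitrary position sees the block grid shifted by the crop offset modulo 8. The helper names `grid_offset` and `aligned_origin` below are hypothetical, and the sketch assumes the block grid of the compressed image starts at pixel (0, 0). It is not the dataset generation procedure from the paper.

```python
BLOCK = 8  # baseline JPEG codes the image in 8x8 pixel blocks

def grid_offset(x, y):
    """Shift of the JPEG block grid seen by a crop whose top-left corner is (x, y)."""
    return x % BLOCK, y % BLOCK

def aligned_origin(x, y):
    """Closest top-left corner at or before (x, y) that keeps the crop on the grid."""
    return x - x % BLOCK, y - y % BLOCK

# A crop starting at (13, 24) is misaligned horizontally by 5 pixels.
example_offset = grid_offset(13, 24)
# Moving the crop origin to (8, 24) restores alignment with the block grid.
example_origin = aligned_origin(13, 24)
print(example_offset, example_origin)
```

When extracting training patches from JPEG images, one option is to snap crop origins with a helper like `aligned_origin`, or conversely to sample random offsets on purpose so that a detector sees misaligned grids during training.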
