Sofiya Garkot

Screen2AX: Vision-Based Approach for Automatic macOS Accessibility Generation

Jul 22, 2025

Abstract: Desktop accessibility metadata enables AI agents to interpret screens and supports users who depend on tools like screen readers. Yet, many applications remain largely inaccessible due to incomplete or missing metadata provided by developers - our investigation shows that only 33% of applications on macOS offer full accessibility support. While recent work on structured screen representation has primarily addressed specific challenges, such as UI element detection or captioning, none has attempted to capture the full complexity of desktop interfaces by replicating their entire hierarchical structure. To bridge this gap, we introduce Screen2AX, the first framework to automatically create real-time, tree-structured accessibility metadata from a single screenshot. Our method uses vision-language and object detection models to detect, describe, and organize UI elements hierarchically, mirroring macOS's system-level accessibility structure. To tackle the limited availability of data for macOS desktop applications, we compiled and publicly released three datasets encompassing 112 macOS applications, each annotated for UI element detection, grouping, and hierarchical accessibility metadata alongside corresponding screenshots. Screen2AX accurately infers hierarchy trees, achieving a 77% F1 score in reconstructing a complete accessibility tree. Crucially, these hierarchy trees improve the ability of autonomous agents to interpret and interact with complex desktop interfaces. We introduce Screen2AX-Task, a benchmark specifically designed for evaluating autonomous agent task execution in macOS desktop environments. Using this benchmark, we demonstrate that Screen2AX delivers a 2.2x performance improvement over native accessibility representations and surpasses the state-of-the-art OmniParser V2 system on the ScreenSpot benchmark.

A Conversational Brain-Artificial Intelligence Interface

Feb 22, 2024

Abstract: We introduce Brain-Artificial Intelligence Interfaces (BAIs) as a new class of Brain-Computer Interfaces (BCIs). Unlike conventional BCIs, which rely on intact cognitive capabilities, BAIs leverage the power of artificial intelligence to replace parts of the neuro-cognitive processing pipeline. BAIs allow users to accomplish complex tasks by providing high-level intentions, while a pre-trained AI agent determines low-level details. This approach enlarges the target audience of BCIs to individuals with cognitive impairments, a population often excluded from the benefits of conventional BCIs. We present the general concept of BAIs and illustrate the potential of this new approach with a Conversational BAI based on EEG. In particular, we show in an experiment with simulated phone conversations that the Conversational BAI enables complex communication without the need to generate language. Our work thus demonstrates, for the first time, the ability of a speech neuroprosthesis to enable fluent communication in realistic scenarios with non-invasive technologies.

High throughput screening with machine learning

Dec 15, 2020

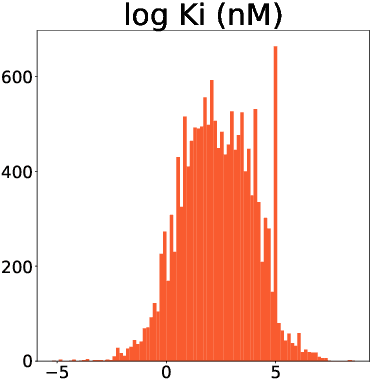

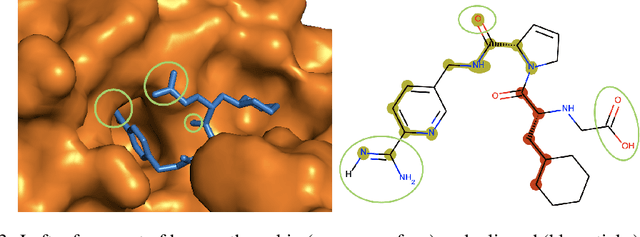

Abstract: This study assesses the efficiency of several popular machine learning approaches in the prediction of molecular binding affinity: CatBoost, Graph Attention Neural Network, and Bidirectional Encoder Representations from Transformers. The models were trained to predict binding affinities in terms of inhibition constants $K_i$ for pairs of proteins and small organic molecules. The first two approaches use carefully selected physico-chemical features, while the third is based on textual molecular representations; it is one of the first attempts to apply Transformer-based predictors to binding affinity. We also discuss the visualization of attention layers within the Transformer approach in order to highlight the molecular sites responsible for interactions. None of the approaches relies on atomic spatial coordinates, which avoids bias from known structures and allows generalization to compounds with unknown conformations. The accuracy achieved by all suggested approaches demonstrates their potential in high throughput screening.
