Siddharth Siddharth

GuideAI: A Real-time Personalized Learning Solution with Adaptive Interventions

Jan 28, 2026

Abstract: Large Language Models (LLMs) have emerged as powerful learning tools, but they lack awareness of learners' cognitive and physiological states, limiting their adaptability to the user's learning style. Contemporary learning techniques primarily focus on structured learning paths, knowledge tracing, and generic adaptive testing but fail to address real-time learning challenges driven by cognitive load, attention fluctuations, and engagement levels. Building on findings from a formative user study (N = 66), we introduce GuideAI, a multi-modal framework that enhances LLM-driven learning by integrating real-time biosensory feedback including eye gaze tracking, heart rate variability, posture detection, and digital note-taking behavior. GuideAI dynamically adapts learning content and pacing through cognitive optimizations (adjusting complexity based on learning progress markers), physiological interventions (breathing guidance and posture correction), and attention-aware strategies (redirecting focus using gaze analysis). Additionally, GuideAI supports diverse learning modalities, including text-based, image-based, audio-based, and video-based instruction, across varied knowledge domains. A preliminary study (N = 25) assessed GuideAI's impact on knowledge retention and cognitive load through standardized assessments. The results show statistically significant improvements in both problem-solving capability and recall-based knowledge assessments. Participants also experienced notable reductions in key NASA-TLX measures including mental demand, frustration levels, and effort, while simultaneously reporting enhanced perceived performance. These findings demonstrate GuideAI's potential to bridge the gap between current LLM-based learning systems and individualized learner needs, paving the way for adaptive, cognition-aware education at scale.

Enhancing Public Speaking Skills in Engineering Students Through AI

Nov 07, 2025

Abstract: This research-to-practice full paper was inspired by the persistent challenge in effective communication among engineering students. Public speaking is a necessary skill for future engineers as they have to communicate technical knowledge to diverse stakeholders. While universities offer courses or workshops, they are unable to offer sustained and personalized training to students. Providing comprehensive feedback on both verbal and non-verbal aspects of public speaking is time-intensive, making consistent and individualized assessment impractical. This study integrates research on verbal and non-verbal cues in public speaking to develop an AI-driven assessment model for engineering students. Our approach combines speech analysis, computer vision, and sentiment detection into a multi-modal AI system that provides assessment and feedback. The model evaluates (1) verbal communication (pitch, loudness, pacing, intonation), (2) non-verbal communication (facial expressions, gestures, posture), and (3) expressive coherence, a novel integration ensuring alignment between speech and body language. Unlike previous systems that assess these aspects separately, our model fuses multiple modalities to deliver personalized, scalable feedback. Preliminary testing demonstrated that our AI-generated feedback was moderately aligned with expert evaluations. Among the state-of-the-art AI models evaluated, all of which were Large Language Models (LLMs), including Gemini and OpenAI models, Gemini Pro emerged as the best-performing model, showing the strongest agreement with human annotators. By eliminating reliance on human evaluators, this AI-driven public speaking trainer enables repeated practice, helping students naturally align their speech with body language and emotion, crucial for impactful and professional communication.

The World of AI: A Novel Approach to AI Literacy for First-year Engineering Students

Jun 06, 2025

Abstract: This work presents a novel course titled The World of AI designed for first-year undergraduate engineering students with little to no prior exposure to AI. The central problem addressed by this course is that engineering students often lack foundational knowledge of AI and its broader societal implications at the outset of their academic journeys. We believe the way to address this gap is to design and deliver an interdisciplinary course that can a) be accessed by first-year undergraduate engineering students across any domain, b) enable them to understand the basic workings of AI systems sans mathematics, and c) make them appreciate AI's far-reaching implications on our lives. The course was divided into three modules co-delivered by faculty from both engineering and humanities. The planetary module explored AI's dual role as both a catalyst for sustainability and a contributor to environmental challenges. The societal impact module focused on AI biases and concerns around privacy and fairness. Lastly, the workplace module highlighted AI-driven job displacement, emphasizing the importance of adaptation. The novelty of this course lies in its interdisciplinary curriculum design and pedagogical approach, which combines technical instruction with societal discourse. Results revealed that students' comprehension of AI challenges improved across diverse metrics, such as (a) increased awareness of AI's environmental impact and (b) efficient corrective solutions for AI fairness. Furthermore, the results indicated an evolution in students' perception of AI's transformative impact on our lives.

Utilizing Deep Learning Towards Multi-modal Bio-sensing and Vision-based Affective Computing

May 16, 2019

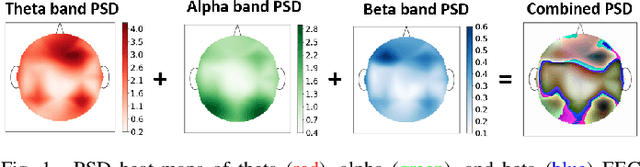

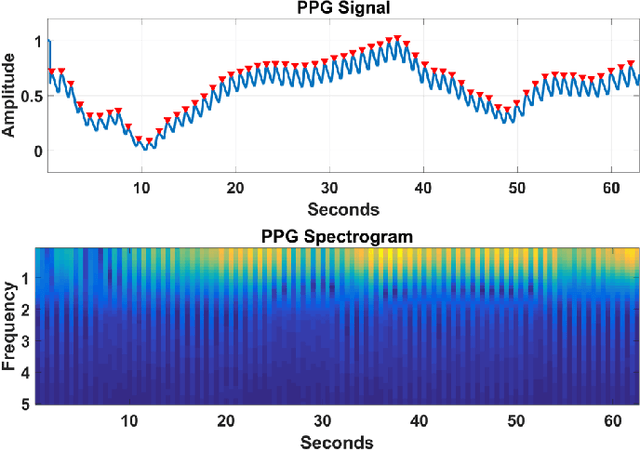

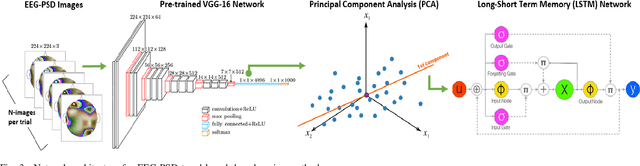

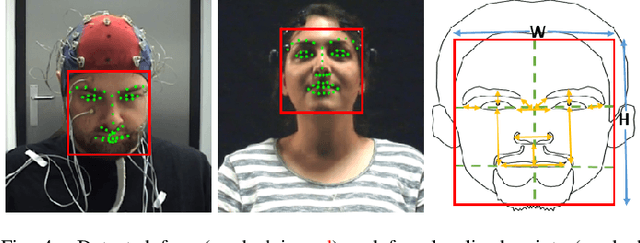

Abstract: In recent years, the use of bio-sensing signals such as electroencephalogram (EEG) and electrocardiogram (ECG) has garnered interest towards applications in affective computing. The parallel trend of deep-learning has led to a huge leap in performance towards solving various vision-based research problems such as object detection. Yet, these advances in deep-learning have not adequately translated into bio-sensing research. This work applies novel deep-learning-based methods to various bio-sensing and video data of four publicly available multi-modal emotion datasets. For each dataset, we first individually evaluate the emotion-classification performance obtained by each modality. We then evaluate the performance obtained by fusing the features from these modalities. We show that our algorithms outperform the results reported by other studies for emotion/valence/arousal/liking classification on DEAP and MAHNOB-HCI datasets and set up benchmarks for the newer AMIGOS and DREAMER datasets. We also evaluate the performance of our algorithms by combining the datasets and by using transfer learning to show that the proposed method overcomes the inconsistencies between the datasets. Hence, we do a thorough analysis of multi-modal affective data from more than 120 subjects and 2,800 trials. Finally, utilizing a convolution-deconvolution network, we propose a new technique towards identifying salient brain regions corresponding to various affective states.
