Shiyun Zhao

TongSIM: A General Platform for Simulating Intelligent Machines

Dec 23, 2025

Abstract: As artificial intelligence (AI) rapidly advances, especially in multimodal large language models (MLLMs), research focus is shifting from single-modality text processing to the more complex domains of multimodal and embodied AI. Embodied intelligence focuses on training agents within realistic simulated environments, leveraging physical interaction and action feedback rather than conventionally labeled datasets. Yet, most existing simulation platforms remain narrowly designed, each tailored to specific tasks. A versatile, general-purpose training environment that can support everything from low-level embodied navigation to high-level composite activities, such as multi-agent social simulation and human-AI collaboration, remains largely unavailable. To bridge this gap, we introduce TongSIM, a high-fidelity, general-purpose platform for training and evaluating embodied agents. TongSIM offers practical advantages by providing over 100 diverse, multi-room indoor scenarios as well as an open-ended, interaction-rich outdoor town simulation, ensuring broad applicability across research needs. Its comprehensive evaluation framework and benchmarks enable precise assessment of agent capabilities, such as perception, cognition, decision-making, human-robot cooperation, and spatial and social reasoning. With features like customized scenes, task-adaptive fidelity, diverse agent types, and dynamic environmental simulation, TongSIM delivers flexibility and scalability for researchers, serving as a unified platform that accelerates training, evaluation, and advancement toward general embodied intelligence.

Spatial and Temporal Consistency-Aware Dynamic Adaptive Streaming for 360-Degree Videos

Dec 20, 2019

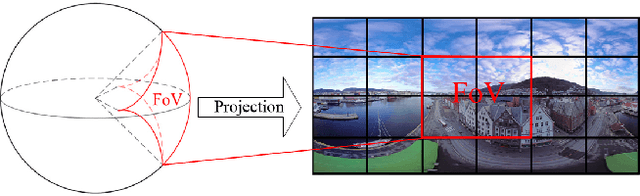

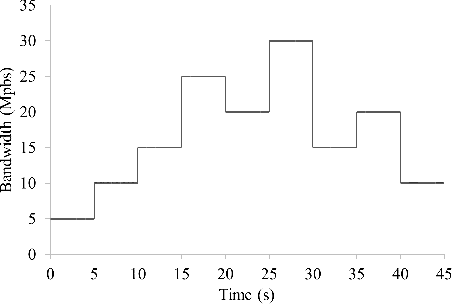

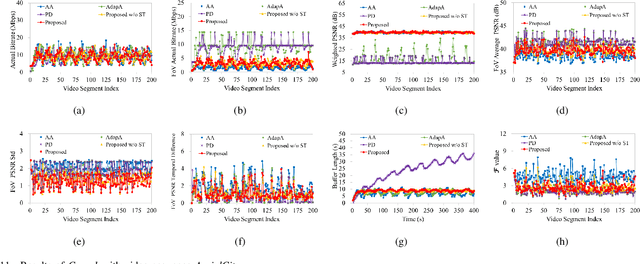

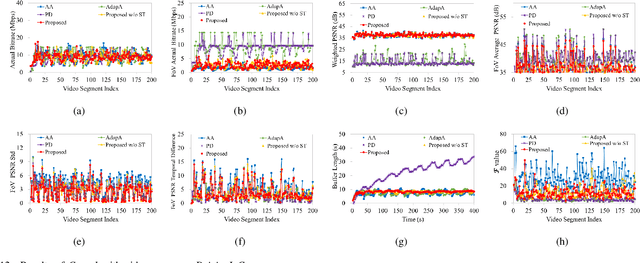

Abstract: 360-degree video allows users to enjoy the whole scene by interactively switching viewports. However, its huge data volume limits remote applications over networks. To provide high quality of experience (QoE) for remote web users, this paper presents a tile-based adaptive streaming method for 360-degree videos. First, we propose a simple yet effective rate adaptation algorithm that determines the requested bitrate for downloading the current video segment by balancing buffer length against video quality. Then, we propose to use a Gaussian model to predict the field of view (FoV) at the beginning of each requested video segment. To handle the case where the view angle is switched during the display of a video segment, we propose to download all the tiles in the 360-degree video with different priorities based on a Zipf model. Finally, to allocate bitrates for all the tiles, a two-stage optimization algorithm is proposed to preserve the quality of tiles in the FoV and guarantee spatial and temporal smoothness. Experimental results demonstrate the effectiveness and advantage of the proposed method compared with state-of-the-art methods. That is, our method preserves both the quality and the smoothness of tiles in the FoV, thus providing the best QoE for users.
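To make the Zipf-based prioritization idea concrete, here is a minimal Python sketch. It is not the paper's algorithm: the abstract gives no formulas, so the function names (`zipf_weights`, `allocate_bitrates`), the exponent `s`, and the use of distance from a predicted FoV center as the ranking key are all assumptions made for illustration. It only shows how a Zipf distribution can turn a tile ranking into a share of a bitrate budget, so that every tile is downloaded but tiles near the predicted view get more.

```python
def zipf_weights(num_tiles, s=1.0):
    """Normalized Zipf weights for ranks 1..num_tiles (hypothetical helper)."""
    raw = [1.0 / (rank ** s) for rank in range(1, num_tiles + 1)]
    total = sum(raw)
    return [w / total for w in raw]


def allocate_bitrates(tile_distances, total_bitrate, s=1.0):
    """Split total_bitrate across tiles by Zipf rank.

    tile_distances[i] is an assumed distance of tile i from the predicted
    FoV center; the nearest tile gets rank 1 and the largest share.
    """
    # Tile indices ordered from nearest to farthest from the predicted FoV.
    order = sorted(range(len(tile_distances)), key=lambda i: tile_distances[i])
    weights = zipf_weights(len(tile_distances), s)
    alloc = [0.0] * len(tile_distances)
    for rank, tile in enumerate(order):
        alloc[tile] = total_bitrate * weights[rank]
    return alloc


if __name__ == "__main__":
    # Three tiles; tile 1 sits at the predicted FoV center.
    print(allocate_bitrates([2.0, 0.0, 1.0], total_bitrate=11.0))
```

A larger `s` concentrates more of the budget on the tiles closest to the predicted view, while `s = 0` spreads it uniformly. The paper's two-stage optimization for spatial and temporal smoothness is not modeled here.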
