Shi

Human-AI Interaction Alignment: Designing, Evaluating, and Evolving Value-Centered AI For Reciprocal Human-AI Futures

Dec 25, 2025

Abstract: The rapid integration of generative AI into everyday life underscores the need to move beyond unidirectional alignment models that only adapt AI to human values. This workshop focuses on bidirectional human-AI alignment, a dynamic, reciprocal process in which humans and AI co-adapt through interaction, evaluation, and value-centered design. Building on our CHI 2025 BiAlign SIG and ICLR 2025 Workshop, this workshop will bring together interdisciplinary researchers from HCI, AI, the social sciences, and other domains to advance value-centered AI and reciprocal human-AI collaboration. We focus on embedding human and societal values into alignment research, emphasizing not only steering AI toward human values but also enabling humans to critically engage with and evolve alongside AI systems. Through talks, interdisciplinary discussions, and collaborative activities, participants will explore methods for interactive alignment, frameworks for societal impact evaluation, and strategies for alignment in dynamic contexts. The workshop aims to bridge gaps between disciplines and establish a shared agenda for responsible, reciprocal human-AI futures.

Can an AI agent hit a moving target?

Oct 06, 2021

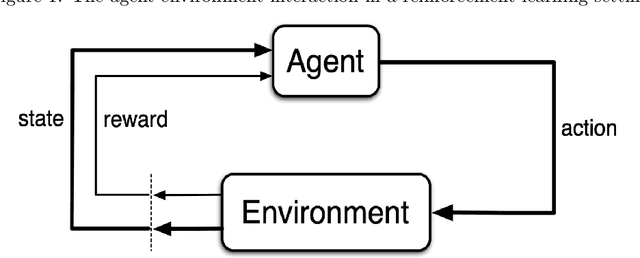

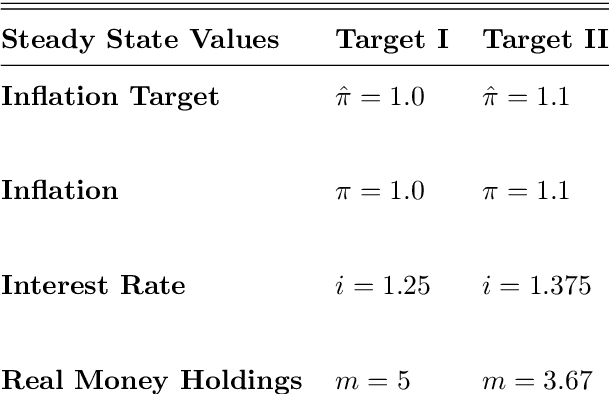

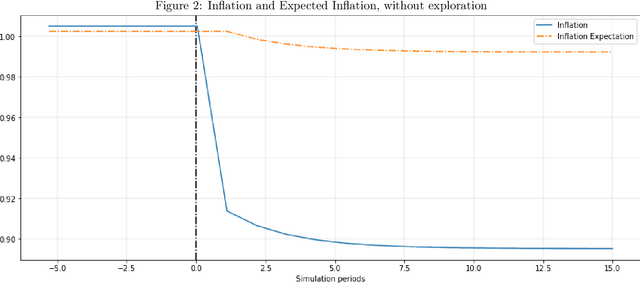

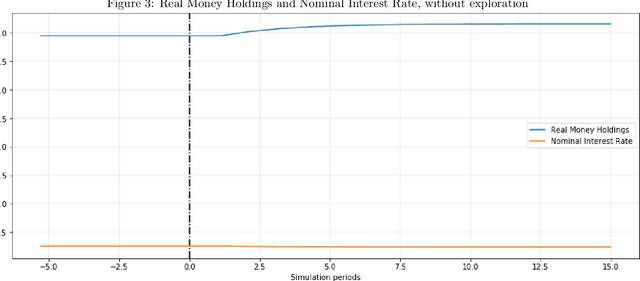

Abstract: As the economies we live in evolve over time, it is imperative that economic agents in models form expectations that can adjust to changes in the environment. This exercise offers a plausible expectation formation model that connects to computer science, psychology and neural science research on learning and decision-making, and applies it to an economy with a policy regime change. Employing the actor-critic model of reinforcement learning, an agent born into a fresh environment learns by first interacting with that environment. This involves taking exploratory actions and observing the corresponding stimulus signals. The agent then uses this interactive experience to update its subjective belief about the world. I show, through several simulation experiments, that the agent adjusts its subjective belief when faced with an increase in the inflation target. Moreover, the subjective belief evolves according to the agent's experience in the world.
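The learning loop described in the abstract can be illustrated with a toy sketch. This is not the paper's model: it is a stateless actor-critic written only to show the mechanism, and every name and parameter value here (`simulate`, the targets 2.0 and 4.0, the learning rates, the quadratic forecast-error reward) is a hypothetical choice made for illustration. The actor is the mean of a Gaussian policy, read as the agent's belief about inflation; the critic is a running estimate of expected reward.

```python
import random


def simulate(steps=4000, switch=2000, old_target=2.0, new_target=4.0,
             sigma=0.5, actor_lr=0.02, critic_lr=0.1, noise=0.1, seed=0):
    """Toy stateless actor-critic; all settings are illustrative assumptions."""
    rng = random.Random(seed)
    mu = 0.0      # actor: mean of the Gaussian policy (the subjective belief)
    value = 0.0   # critic: running estimate of the expected reward
    beliefs = []
    for t in range(steps):
        # Policy regime change: the inflation target rises at step `switch`.
        target = old_target if t < switch else new_target
        inflation = target + rng.gauss(0.0, noise)

        # Exploratory action: a forecast sampled around the current belief.
        action = rng.gauss(mu, sigma)

        # Stimulus signal: negative squared forecast error (assumed reward).
        reward = -(action - inflation) ** 2

        # With no state transitions, the TD error reduces to reward - value.
        td_error = reward - value
        value += critic_lr * td_error

        # Policy-gradient step, using
        # d/dmu log N(action; mu, sigma) = (action - mu) / sigma**2.
        mu += actor_lr * td_error * (action - mu) / sigma ** 2
        beliefs.append(mu)
    return beliefs


if __name__ == "__main__":
    path = simulate()
    print("mean belief before the change:", sum(path[1500:2000]) / 500)
    print("mean belief after the change: ", sum(path[-500:]) / 500)
```

Under these assumptions the belief should settle near the old target and then drift toward the new one after the switch, purely from the agent's own exploratory experience. The step sizes and exploration noise are arbitrary, and different values change how quickly and how smoothly the belief adjusts.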
