Shetal Shah

Locally Pareto-Optimal Interpretations for Black-Box Machine Learning Models

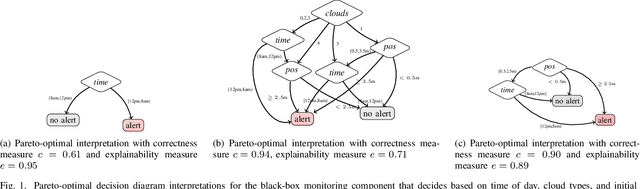

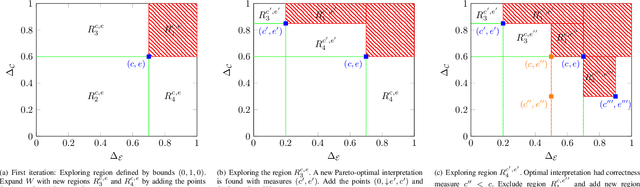

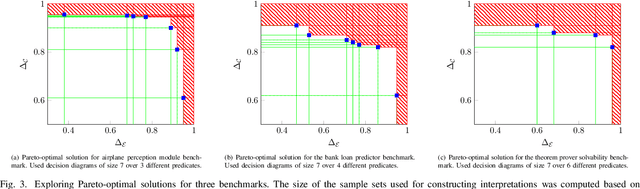

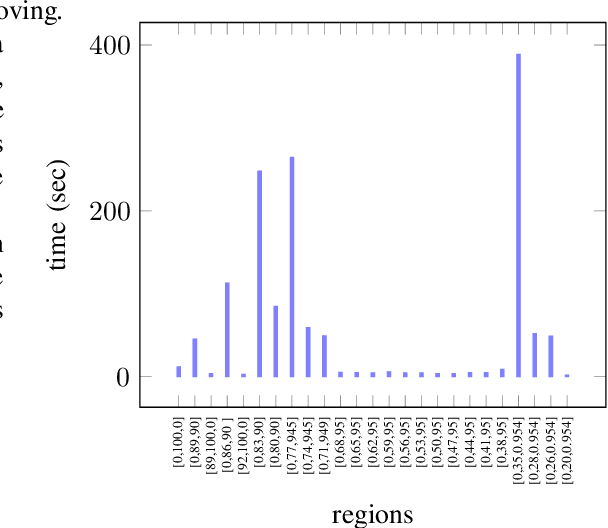

Aug 21, 2025Abstract:Creating meaningful interpretations for black-box machine learning models involves balancing two often conflicting objectives: accuracy and explainability. Exploring the trade-off between these objectives is essential for developing trustworthy interpretations. While many techniques for multi-objective interpretation synthesis have been developed, they typically lack formal guarantees on the Pareto-optimality of the results. Methods that do provide such guarantees, on the other hand, often face severe scalability limitations when exploring the Pareto-optimal space. To address this, we develop a framework based on local optimality guarantees that enables more scalable synthesis of interpretations. Specifically, we consider the problem of synthesizing a set of Pareto-optimal interpretations with local optimality guarantees, within the immediate neighborhood of each solution. Our approach begins with a multi-objective learning or search technique, such as Multi-Objective Monte Carlo Tree Search, to generate a best-effort set of Pareto-optimal candidates with respect to accuracy and explainability. We then verify local optimality for each candidate as a Boolean satisfiability problem, which we solve using a SAT solver. We demonstrate the efficacy of our approach on a set of benchmarks, comparing it against previous methods for exploring the Pareto-optimal front of interpretations. In particular, we show that our approach yields interpretations that closely match those synthesized by methods offering global guarantees.

Synthesizing Pareto-Optimal Interpretations for Black-Box Models

Aug 16, 2021

Abstract:We present a new multi-objective optimization approach for synthesizing interpretations that "explain" the behavior of black-box machine learning models. Constructing human-understandable interpretations for black-box models often requires balancing conflicting objectives. A simple interpretation may be easier to understand for humans while being less precise in its predictions vis-a-vis a complex interpretation. Existing methods for synthesizing interpretations use a single objective function and are often optimized for a single class of interpretations. In contrast, we provide a more general and multi-objective synthesis framework that allows users to choose (1) the class of syntactic templates from which an interpretation should be synthesized, and (2) quantitative measures on both the correctness and explainability of an interpretation. For a given black-box, our approach yields a set of Pareto-optimal interpretations with respect to the correctness and explainability measures. We show that the underlying multi-objective optimization problem can be solved via a reduction to quantitative constraint solving, such as weighted maximum satisfiability. To demonstrate the benefits of our approach, we have applied it to synthesize interpretations for black-box neural-network classifiers. Our experiments show that there often exists a rich and varied set of choices for interpretations that are missed by existing approaches.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge